Want a laptop that can easily handle your demanding video editing or data processing workflow? The 16-inch MacBook Pro with M3 Max should be your top choice.

Find the laptop’s $3,499 price tag steep? Amazon is knocking $250 off the M3 Max MacBook Pro, making it a little more affordable.

This post contains affiliate links. Cult of Mac may earn a commission when you use our links to buy items.

MacBook Pro with M3 Max has beauty and brains

M3 Max is currently the fastest laptop chip in Apple’s lineup. The base version features a 14-core CPU and 30-core GPU, and ships with 36GB of unified memory. Fabricated on TSMC’s enhanced 3nm node, the SoC balances performance and battery life.

The M3 Max packs more than enough horsepower to handle heavy data processing or editing. And it can deliver this performance without quickly draining your MacBook’s battery.

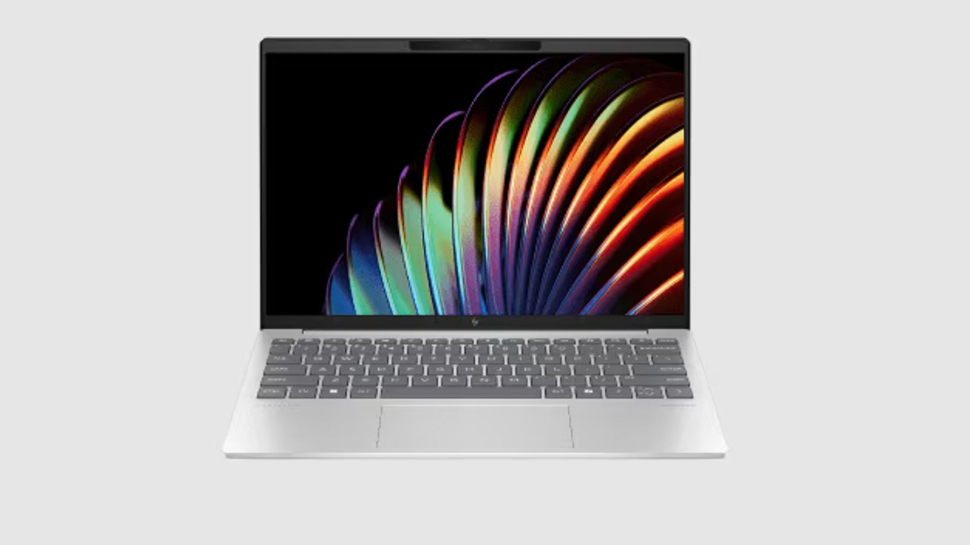

It’s not just the chip. Apple’s 16-inch MacBook Pro is packed with the best hardware available. You get a 120Hz ProMotion display with 1,000 nits of sustained brightness and up to 1,600 nits peak for HDR content. The display’s notch houses a 1080p FaceTime HD camera, complemented by a six-speaker setup and three-mic array. Oh! And it comes in a stealthy Space Black finish.

As for ports, the laptop packs an SD card slot, three USB-C ports, a 3.5mm headphone jack, an HDMI port and MagSafe for fast charging.

Save a sweet $250 on the M3 Max MacBook Pro

With so much power, it’s not surprising that the M3 Max MacBook Pro is expensive. Apple wants $3,499 for the entry-level configuration packing 1TB storage and 36GB memory. But you can get the same variant from Amazon for $250 off, bringing its price down to $3,249.

If your workload is memory intensive, spring for the 48GB unified memory configuration. While the machine’s MSRP is $3,999, Amazon’s deal has knocked it down to $3,749. This is a modest saving, but you can put the $250 toward some essential accessories for your new MacBook Pro.

M3 Max MacBook Pro configurations are rarely discounted, so you should not miss this deal.

Buy from: Amazon
