Image: D. Griffin Jones/Cult of Mac

If you don’t keep your phone on silent, you may as well have a fun, custom iPhone ringtone. After all, custom Home Screens and Lock Screens are all the rage, so why not create a ringtone that matches your aesthetic?

Between wearing an Apple Watch and leaving my phone muted, I almost never hear my ringtone, but needs and preferences vary. If you don’t wear an Apple Watch and you carry your phone in a bag or purse, a ringtone is the only way you’ll hear a call coming in.

It’s not super straightforward, but here’s how you can make a custom iPhone ringtone out of an MP3 using just your phone. Keep reading below or watch our video.

How to make a custom iPhone ringtone

Sure, you can always pick from one of Apple’s built-in ringtones in Settings > Sounds & Haptics > Ringtone. But if you want to create your own custom iPhone ringtone, follow these instructions:

Screenshot: D. Griffin Jones/Cult of Mac

Time needed: 10 minutes

- Save an MP3 file from a YouTube video

First, the audio you want to use as your custom iPhone ringtone needs to be an MP3 file.

If you can find it on YouTube, that’s great. Copy the URL, go to cobalt.tools, tap Audio, paste the link, and hit >>. Tap the Download button to save the MP3.

Otherwise, make sure the audio file you want to use is saved to the Files app on your iPhone.

- Download GarageBand

To make a ringtone out of your MP3, you need to download GarageBand, Apple’s app designed to make and edit music. (Don’t worry, you don’t need to record anything. This is all part of the process.)

It’s completely free on the App Store.

- Create a new song

Next, open GarageBand, swipe over to the Audio Recorder tool, and tap Voice. You don’t need to touch any of the recording controls. Just tap the third button from the left in the toolbar, the one that looks like a stack of blocks.

- Add your MP3

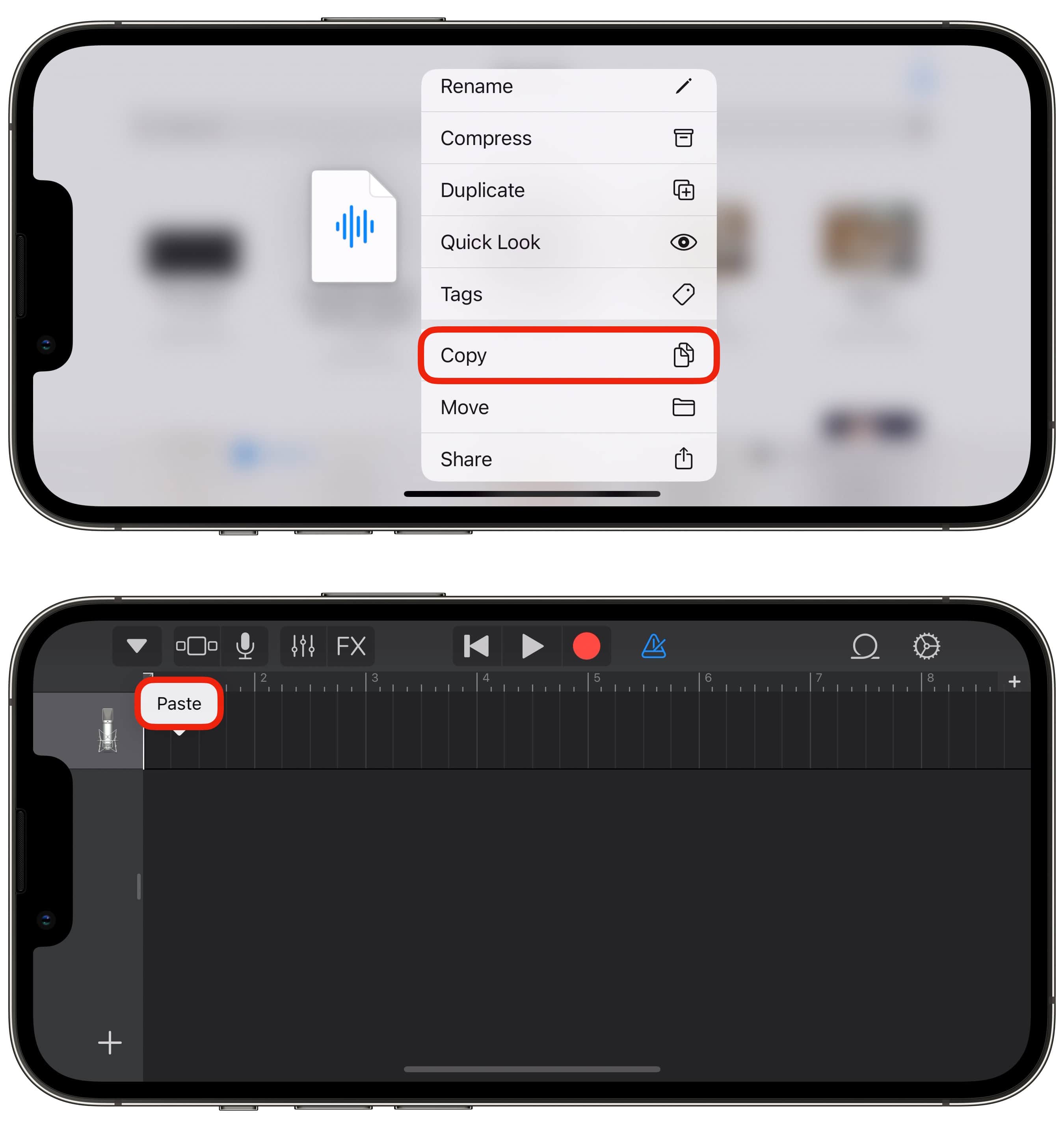

To add the MP3 you want to use as your custom iPhone ringtone, switch over to the Files app. In the Recents tab, the two most recent files should be “My Song” (or whatever you called the GarageBand project you just created) and the MP3 you downloaded earlier.

With one finger, tap and hold the MP3 file’s icon, then tap Copy. Switch back to GarageBand, tap the empty timeline, then tap Paste.

- Trim the audio clip

If there’s a part of the song you want to cut, such as silence at the beginning or a section you don’t want at the end, it’s easy to trim it out.

Tap the left side of the clip and drag its edge inward to cut out the beginning. The clip should be outlined in yellow while it’s selected. Then tap and drag the middle of the clip to move it back so it starts at the beginning of the timeline.

Do the same on the right edge to cut out the end. Ringtones are automatically trimmed down to 30 seconds long, so if your MP3 is longer than that, you might as well find the best stopping point yourself.

You can pinch with two fingers to zoom in for more precision. Preview your edits with the Play button in the toolbar.

- Rename your file and save it as a custom iPhone ringtone

Tap the ▼ button on the left side of the toolbar and tap My Songs. Tap and hold My Song, the project you just created, then tap Rename and give it a new name.

Tap and hold it again, then tap Share > Ringtone and tap Export. Finally, tap Use sound as… to choose whether to use it as your phone ringtone, your text tone or the ringtone for a specific contact.

You can create multiple custom iPhone ringtones to use for various people

And that’s how you create a custom ringtone on your iPhone. If you want to switch back at any point, you can change it in Settings > Sounds & Haptics > Ringtone.

You can repeat the directions above to create a bunch of different ringtones. The more you create, the more options will appear in the list. You can also use a really short sound effect as a notification alert for incoming texts, new email, reminders and more.

You can also assign a custom ringtone to a specific contact. Open Contacts (or the Contacts tab of Phone), tap on a contact and tap Edit. Below their contact information like phone number, email and pronouns, you can set a custom ringtone and text tone for them from the ones you’ve created.

