In the rapidly evolving world of machine learning, the ability to fine-tune open-source large language models is a skill that sets the proficient apart from the novices. The Orca 2 model, known for its strong question-answering capabilities, is a good starting point for anyone eager to dive deeper into fine-tuning. This article guides you through the process of enhancing the Orca 2 model using Python. Fine-tuning can boost the model’s performance on your task, and it is also a straightforward way to add custom knowledge to your AI model so that it can answer specific queries. This is particularly useful if you are creating customer service AI assistants that need to converse with customers about a company’s specific products and services.

The first step is to set up a Python environment. This involves installing Python and the libraries the Orca 2 fine-tuning workflow depends on. Once your environment is ready, create a file, perhaps named app.py, and import the required modules. These include the machine learning libraries and other dependencies that your project builds on.
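Before writing any training code, it can help to confirm that the environment is complete. The sketch below is one way to do that at the top of app.py. The package list (ludwig, pandas, torch, transformers) is an assumption about what a Ludwig-based Orca 2 project typically needs, not a list taken from the guide, and `missing_packages` is a hypothetical helper name.

```python
from importlib.util import find_spec

# Assumed dependency list; adjust it to match your own project.
REQUIRED_PACKAGES = ["ludwig", "pandas", "torch", "transformers"]


def missing_packages(names):
    """Return the names that cannot be imported in the current environment."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_PACKAGES)
    if missing:
        print("Install these before continuing:", ", ".join(missing))
    else:
        print("All required packages are available.")
```

Running the file directly prints either the packages still to install or a confirmation that everything is in place.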

The foundation of any fine-tuning process is the dataset. The quality of your data is critical, so take the time to collect a robust set of questions and answers. It’s important to clean and format this data meticulously, ensuring that it is balanced to avoid any biases. This preparation is crucial as it sets the stage for successful model training.
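As a rough illustration of the cleaning step, the sketch below normalizes whitespace, drops incomplete pairs, and removes repeated questions. The column names `question` and `answer` and the helper name `clean_qa_rows` are assumptions made for this example; use whatever field names your own data has.

```python
def clean_qa_rows(rows):
    """Normalize whitespace, drop incomplete pairs, and remove duplicate questions."""
    seen = set()
    cleaned = []
    for row in rows:
        # Collapse runs of whitespace and trim both fields.
        question = " ".join((row.get("question") or "").split())
        answer = " ".join((row.get("answer") or "").split())
        if not question or not answer:
            continue  # skip rows missing either side of the pair
        key = question.lower()
        if key in seen:
            continue  # keep only the first answer for a repeated question
        seen.add(key)
        cleaned.append({"question": question, "answer": answer})
    return cleaned


raw = [
    {"question": "  What is the return window? ", "answer": "30 days."},
    {"question": "what is the return window?", "answer": "Thirty days."},
    {"question": "Do you ship abroad?", "answer": ""},
]
print(clean_qa_rows(raw))
```

Here only the first row survives: the second repeats the same question in different casing, and the third has no answer. Checking for balance across topics still needs a manual look at how many examples each product or service has.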

Fine-tuning open source AI models

Mervin Praison has created a beginner’s guide to fine-tuning open-source large language models such as Orca 2, and provides all the code and instructions you need to add custom knowledge to your AI model.


To simplify your machine learning workflow, consider using the Ludwig toolbox. Ludwig is a declarative framework that lets you train and test deep learning models from a configuration rather than hand-written training code. It was originally built on top of TensorFlow; recent releases use PyTorch. Ludwig allows you to configure the model by specifying input and output features, selecting the appropriate model type, and setting the training parameters. This configuration is how you tailor the model to your specific needs, especially for question and answer tasks.
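To make the configuration concrete, here is a sketch of what such a config might look like as a Python dictionary. Treat every value as an assumption: the key names follow Ludwig’s LLM fine-tuning configuration as commonly documented and should be checked against the documentation for your installed version, `microsoft/Orca-2-7b` is assumed to be the Hugging Face model ID you want, the feature names `question` and `answer` are placeholders for your own columns, and the trainer values are illustrative rather than tuned.

```python
def build_config(base_model="microsoft/Orca-2-7b", max_sequence_length=256):
    """Build a Ludwig-style config dict for question-answer fine-tuning."""
    return {
        "model_type": "llm",
        "base_model": base_model,  # assumed model ID
        "input_features": [
            {
                "name": "question",  # assumed column name
                "type": "text",
                "preprocessing": {"max_sequence_length": max_sequence_length},
            }
        ],
        "output_features": [
            {
                "name": "answer",  # assumed column name
                "type": "text",
                "preprocessing": {"max_sequence_length": max_sequence_length},
            }
        ],
        # Parameter-efficient fine-tuning: train small LoRA adapters
        # on top of a 4-bit quantized base model.
        "adapter": {"type": "lora"},
        "quantization": {"bits": 4},
        # Illustrative training parameters, not recommendations.
        "trainer": {
            "type": "finetune",
            "epochs": 3,
            "batch_size": 1,
            "learning_rate": 0.0001,
        },
    }


config = build_config()
print(config["model_type"], config["base_model"])
```

Keeping the config in a function makes it easy to change the base model or sequence length without editing the rest of app.py.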

One aspect that can significantly impact your model’s performance is the sequence length of your data. A limit that is too short truncates questions or answers, while one that is too long wastes memory and training time on padding. Write a function that calculates a suitable sequence length from your dataset so the model processes the data efficiently.
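There is no single correct way to choose this value. One common heuristic, sketched below, is to pick a length that covers most examples (here the 95th percentile) and round it up to a convenient multiple. This is an assumption about a reasonable approach, not the method used in the guide, and whitespace splitting is only a stand-in for the model’s real tokenizer, which usually produces more tokens per word.

```python
import math


def token_count(text):
    """Approximate the token count by splitting on whitespace."""
    return len(text.split())


def suggested_sequence_length(texts, percentile=95, multiple_of=8):
    """Return a length covering `percentile` percent of texts, rounded up."""
    lengths = sorted(token_count(text) for text in texts)
    if not lengths:
        raise ValueError("texts must not be empty")
    # Nearest-rank percentile: the smallest length that covers the
    # requested share of examples.
    rank = max(1, math.ceil(percentile / 100 * len(lengths)))
    value = lengths[rank - 1]
    # Round up to a multiple, and never return less than one multiple.
    return max(multiple_of, math.ceil(value / multiple_of) * multiple_of)


samples = ["one two three", "one two three four five", "one"]
print(suggested_sequence_length(samples))
```

For real use, replace `token_count` with a call to the tokenizer that matches your base model, and leave some headroom for any prompt template that wraps each question.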

With your setup complete and your data prepared, you can now begin training the Orca 2 model. Feed your dataset into the model and let it learn from the information provided. It’s important to monitor the training process to ensure that the model is learning effectively. If necessary, make adjustments to improve the learning process.
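With Ludwig, the training call itself is short. The sketch below assumes Ludwig is installed, that `config` is a dictionary in Ludwig’s configuration format, and that `dataset_path` points to a CSV of question and answer pairs; the function name `fine_tune` and the default `save_dir` are hypothetical. It also saves the trained model so it can be reloaded later.

```python
import logging


def fine_tune(config, dataset_path, save_dir="orca2_finetuned"):
    """Train a Ludwig model from a config dict, then save it to save_dir."""
    # Imported lazily so this file can be loaded before Ludwig is installed.
    from ludwig.api import LudwigModel

    # INFO-level logging prints progress during training, which is the
    # simplest way to watch how the loss is developing.
    model = LudwigModel(config=config, logging_level=logging.INFO)

    # train() returns training statistics, the preprocessed data,
    # and the directory where Ludwig wrote its outputs.
    train_stats, _, output_dir = model.train(dataset=dataset_path)

    model.save(save_dir)
    return model, train_stats, output_dir
```

Fine-tuning a model of this size generally needs a GPU with enough memory; if training fails on memory, reducing the batch size or sequence length in the config is usually the first thing to try.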

After the training phase, it’s essential to save your model. This preserves its state for future use and allows you to revisit your work without starting from scratch. Once saved, test the model’s predictive capabilities on a new dataset. Evaluate its performance carefully and make refinements if needed to ensure that it meets your standards.
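One simple way to score predictions on a held-out set is exact match: the share of predicted answers that equal the reference answer after light normalization. The sketch below is a rough first check under that assumption; it is strict for free-text answers, so a low score does not necessarily mean the answers are wrong. The function names are hypothetical.

```python
def normalize(text):
    """Lowercase the text and collapse whitespace for a fairer comparison."""
    return " ".join(text.lower().split())


def exact_match_rate(predictions, references):
    """Return the fraction of predictions that match their reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    matches = sum(
        normalize(pred) == normalize(ref)
        for pred, ref in zip(predictions, references)
    )
    return matches / len(references)


predicted = ["30 days.", "Yes"]
expected = ["30 Days.", "No"]
print(exact_match_rate(predicted, expected))
```

In this example one of the two answers matches, so the rate is 0.5. Reading a sample of the mismatches by hand is still worthwhile, since paraphrased but correct answers count as misses.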

The final step in your fine-tuning journey is to share your achievements with the broader machine learning community. One way to do this is by contributing your fine-tuned model to Hugging Face, a platform dedicated to machine learning model collaboration. By sharing your work, you not only contribute to the community’s growth but also demonstrate your skill set and commitment to advancing the field.

Things to consider when fine tuning AI models

When fine tuning AI models, several key factors must be considered to ensure the effectiveness and ethical integrity of the model.

- Data Quality and Diversity: The quality and diversity of the training data are crucial. The data should be representative of the real-world scenarios where the model will be applied. This avoids biases and improves the model’s generalizability. For instance, in a language model, the dataset should include various languages, dialects, and sociolects to prevent linguistic biases.

- Objective Alignment: The model’s objectives should align with the intended application. This involves defining clear, measurable goals for what the model should achieve. For example, if the model is for medical diagnosis, its objectives should align with accurately identifying diseases from symptoms and patient history.

- Ethical Considerations: Ethical implications, such as fairness, transparency, and privacy, must be addressed. Ensuring the model does not perpetuate or amplify biases is essential. For instance, in facial recognition technology, it’s important to ensure the model does not discriminate against certain demographic groups.

- Regularization and Generalization: Overfitting is a common issue where the model performs well on training data but poorly on unseen data. Techniques like dropout, data augmentation, or early stopping can be used to promote generalization.

- Model Complexity: The complexity of the model should be appropriate for the task. Overly complex models can lead to overfitting and unnecessary computational costs, while overly simple models might underfit and fail to capture important patterns in the data.

- Evaluation Metrics: Choosing the right metrics to evaluate the model is critical. These metrics should reflect the model’s performance in real-world conditions and align with the model’s objectives. For example, precision and recall are important in models where false positives and false negatives have significant consequences.

- Feedback Loops: Implementing mechanisms for continuous feedback and improvement is important. This could involve regularly updating the model with new data or adjusting it based on user feedback to ensure it remains effective and relevant.

- Compliance and Legal Issues: Ensuring compliance with relevant laws and regulations, such as GDPR for data privacy, is essential. This includes considerations around data usage, storage, and model deployment.

- Resource Efficiency: The computational and environmental costs of training and deploying AI models should be considered. Efficient model architectures and training methods can reduce these costs.

- Human-in-the-loop Systems: In many applications, it’s beneficial to have a human-in-the-loop system where human judgment is used alongside the AI model. This can improve decision-making and provide a safety check against potential errors or biases in the model.
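Of the techniques listed under Regularization and Generalization, early stopping is the easiest to show in a few lines. The sketch below is a generic illustration, not tied to any particular library: it assumes you record one validation loss per epoch, and the names `should_stop`, `patience`, and `min_delta` are chosen for this example.

```python
def should_stop(val_losses, patience=3, min_delta=0.0):
    """Return True when validation loss has not improved for `patience` epochs."""
    if len(val_losses) <= patience:
        return False  # not enough history to judge yet
    best_before = min(val_losses[:-patience])
    recent_best = min(val_losses[-patience:])
    # Stop if the recent epochs failed to beat the earlier best
    # by at least min_delta.
    return recent_best > best_before - min_delta


history = [1.0, 0.8, 0.7, 0.71, 0.72, 0.73]
print(should_stop(history))
```

With this history the loss bottomed out at 0.7 and has been slightly worse for three epochs, so the function signals that training can stop. Many training frameworks offer an equivalent built-in setting, which is preferable when available.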

By following these steps, you can master the fine-tuning of the Orca 2 model for question and answer tasks. This process will enhance the model’s performance for your specific applications and provide you with a structured approach to fine-tuning any open-source model. As you progress, you’ll find yourself on a path to professional growth in the machine learning field, equipped with the knowledge and experience to tackle increasingly complex challenges.

Filed Under: Guides, Top News

Latest timeswonderful Deals

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, timeswonderful may earn an affiliate commission. Learn about our Disclosure Policy.

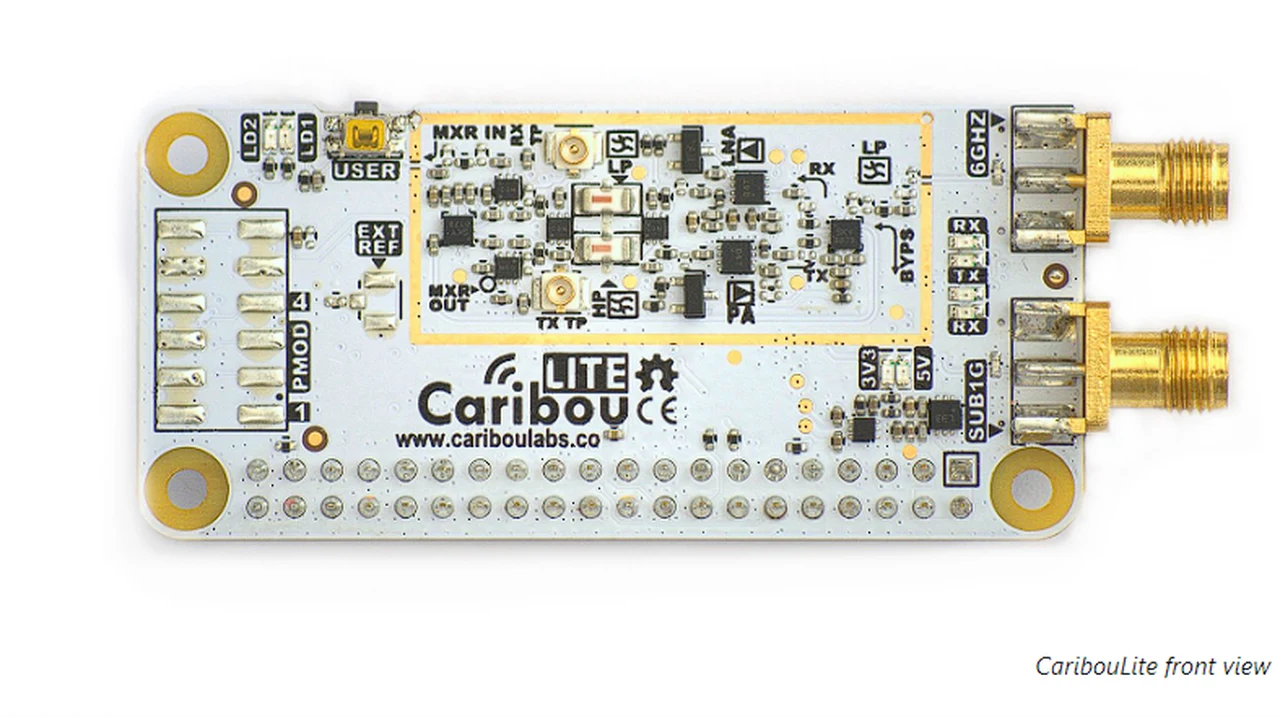

Transform your Raspberry Pi into a powerful tool for radio communication, capable of exploring frequencies that stretch up to an impressive 6 GHz. The CaribouLite Raspberry Pi radio HAT makes this possible, offering a dual-channel software-defined radio (SDR) platform that’s both versatile and affordable. This innovative accessory is designed for hobbyists, educators, and researchers who are passionate about radio exploration and innovation.

Transform your Raspberry Pi into a powerful tool for radio communication, capable of exploring frequencies that stretch up to an impressive 6 GHz. The CaribouLite Raspberry Pi radio HAT makes this possible, offering a dual-channel software-defined radio (SDR) platform that’s both versatile and affordable. This innovative accessory is designed for hobbyists, educators, and researchers who are passionate about radio exploration and innovation.