
Hello Nature readers, would you like to get this Briefing in your inbox free every week? Sign up here.

Some models are more likely to associate African American English with negative traits than Standard American English. Credit: Jaap Arriens/NurPhoto via Getty

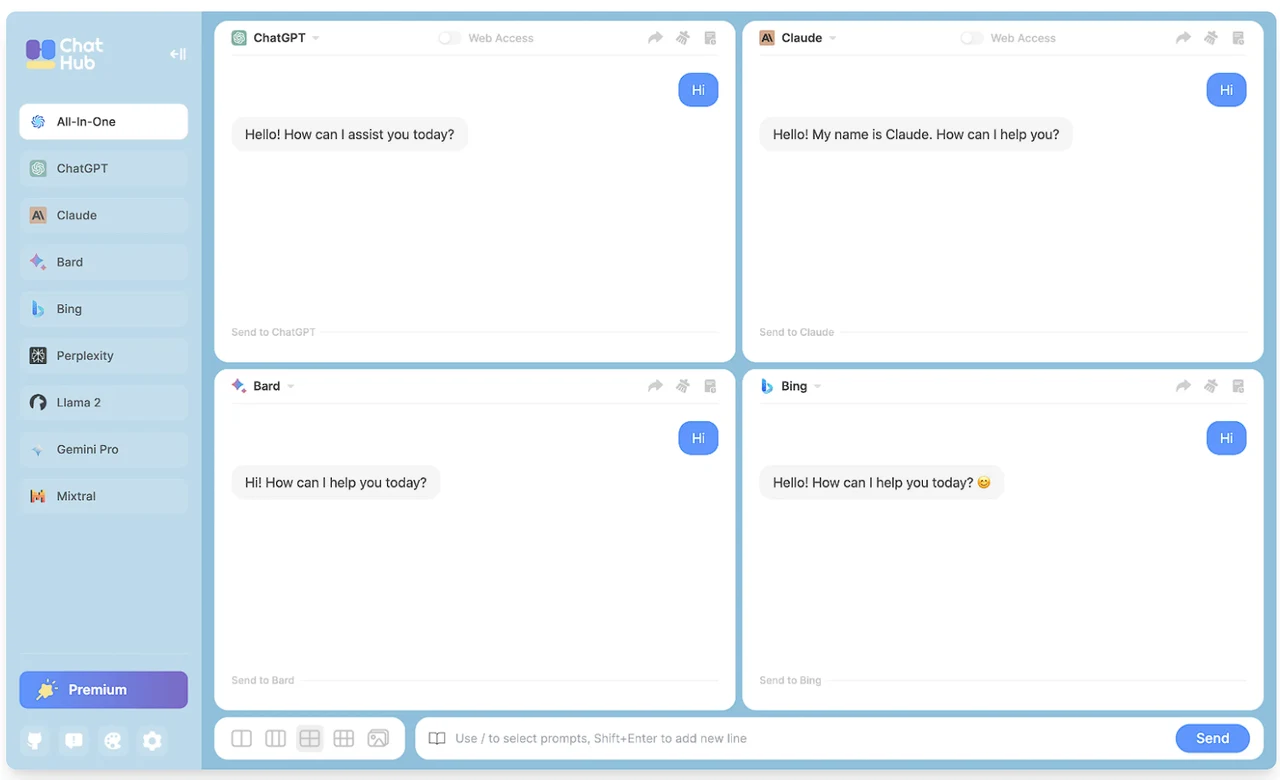

Some large language models (LLMs), including those that power chatbots such as ChatGPT, are more likely to recommend the death penalty for a fictional defendant whose statement is written in African American English (AAE) than for one whose statement is written in Standardized American English. AAE is a dialect spoken by millions of people in the United States and is associated with the descendants of enslaved African Americans. “Even though human feedback seems to be able to effectively steer the model away from overt stereotypes, the fact that the base model was trained on Internet data that includes highly racist text means that models will continue to exhibit such patterns,” says computer scientist Nikhil Garg.

Reference: arXiv preprint (not peer-reviewed)

A drug for idiopathic pulmonary fibrosis, designed from scratch by AI systems, has entered clinical trials. Researchers at Insilico Medicine identified a target enzyme using an AI system trained on patients’ biomolecular data and text from the scientific literature. They then used a different algorithm to propose a molecule that would block this enzyme. After some tweaks and laboratory tests, the researchers had a drug that appeared to reduce inflammation and lung scarring. Medicinal chemist Timothy Cernak says he was initially cautious about the results because there is a lot of hype around AI-powered drug discovery. “I think Insilico’s been involved in hyping that, but I think they built something really robust here.”

Chemical & Engineering News | 4 min read

Reference: Nature Biotechnology paper

Researchers built a robotic pleurocystitid to investigate how the ancient sea creature moved. Pleurocystitids lived 450 million years ago and were probably among the first echinoderms (the group that includes starfish and sea urchins) able to move from place to place using a muscular ‘tail’. The robot moved more effectively across a sandy ‘seabed’ when it had a longer tail, which matches fossil evidence that pleurocystitids evolved longer tails over time.

The tail of the pleurocystitid replica (nicknamed ‘Rhombot’) was built out of wires that contract in response to electrical stimulation, simulating the flexibility and rigidity of a natural muscular tail. (Carnegie Mellon University – College of Engineering)

Features & opinion

Scientists hope that getting AI systems to comb through heaps of raw biomolecular data could help to answer one of the biggest questions in biology: what does it mean to be alive? With enough data and computing power, AI models could build mathematical representations of cells that could be used to run virtual experiments and to map out what combination of biochemistry is required to sustain life. Researchers could even use such models to design entirely new cells that could, for example, explore a diseased organ and report on its condition. “It’s very ‘Fantastic Voyage’-ish,” admits biophysicist Stephen Quake. “But who knows what the future is going to hold?”

The New York Times | 9 min read

The editors of Nature Reviews Physics and Nature Human Behaviour have teamed up to explore the pros and cons of using AI systems such as ChatGPT in science communication. Apart from producing convincing inaccuracies, the editors write, chatbots have “an obvious, yet underappreciated” downside: they have nothing to say. Ask an AI system to write an essay or an opinion piece and you’ll get “clichéd nothingness”.

In Nature Human Behaviour, six experts discuss how AI systems can help communicators to make jargon understandable or translate science into various languages. At the same time, AI “threatens to erase diverse interpretations of scientific work” by overrepresenting the perspectives of those who have shaped research for centuries, write anthropologist Lisa Messeri and psychologist M. J. Crockett.

In Nature Reviews Physics, seven other experts delve into the key role of science communication in building trust between scientists and the public. “Regular, long-term dialogical interaction, preferably face-to-face, is one of the most effective ways to build a relationship based on trust,” notes science-communication researcher Kanta Dihal. “This is a situation in which technological interventions may do more harm than good.”

Nature Reviews Physics editorial | 4 min read, Nature Human Behaviour feature | 10 min read & Nature Reviews Physics viewpoint | 16 min read

Technology journalist James O’Malley used freedom-of-information requests to reveal how a London Underground station spent a year as a testing ground for AI-powered surveillance. The technology was initially meant to reduce the number of people jumping the ticket barriers, but it was also used to alert staff if someone had fallen over or was spending a long time standing close to the platform edge. Making every station ‘smart’ would undoubtedly make travelling safer and smoother, argues O’Malley. At the same time, it raises concerning possibilities for bias and discrimination. “It would be trivial from a software perspective to train the cameras to identify, say, Israeli or Palestinian flags — or any other symbol you don’t like.”

Odds and Ends of History blog | 14 min read

Image of the week

Simon R Anuszczyk and John O Dabiri/Bioinspir. Biomim. (CC BY 4.0)

A 3D-printed ‘hat’ allows this cyborg jellyfish to swim almost five times faster than its hat-less counterparts. The prosthesis could also house ocean-monitoring equipment such as salinity, temperature and oxygen sensors. Scientists use electronic implants to control the animal’s speed and eventually want to make it fully steerable, so that it can gather deep-ocean data that can otherwise be obtained only at great cost. “Since [jellyfish] don’t have a brain or the ability to sense pain, we’ve been able to collaborate with bioethicists to develop this biohybrid robotic application in a way that’s ethically principled,” says engineer and study co-author John Dabiri. (Popular Science | 3 min read)

Reference: Bioinspiration & Biomimetics paper

