- The US semiconductor giant reveals that HBM4 will launch in 2026, followed by HBM4E

- It will probably be used by Nvidia's Rubin R100 GPU and AMD's successor to the Instinct MI400x

- Micron is arriving late to a crowded market led by SK Hynix

Micron has revealed further steps in its plan to capture a significant share of the rapidly expanding high-bandwidth memory (HBM) market.

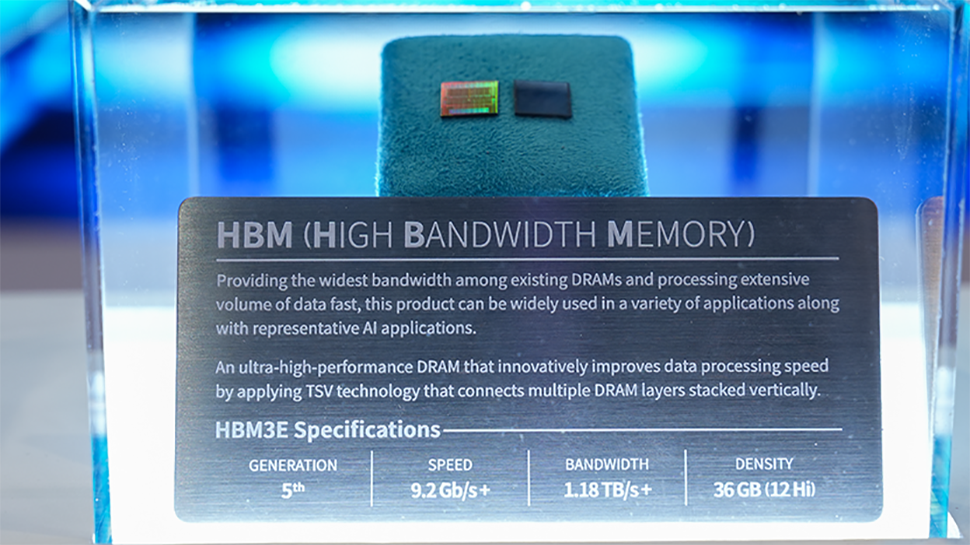

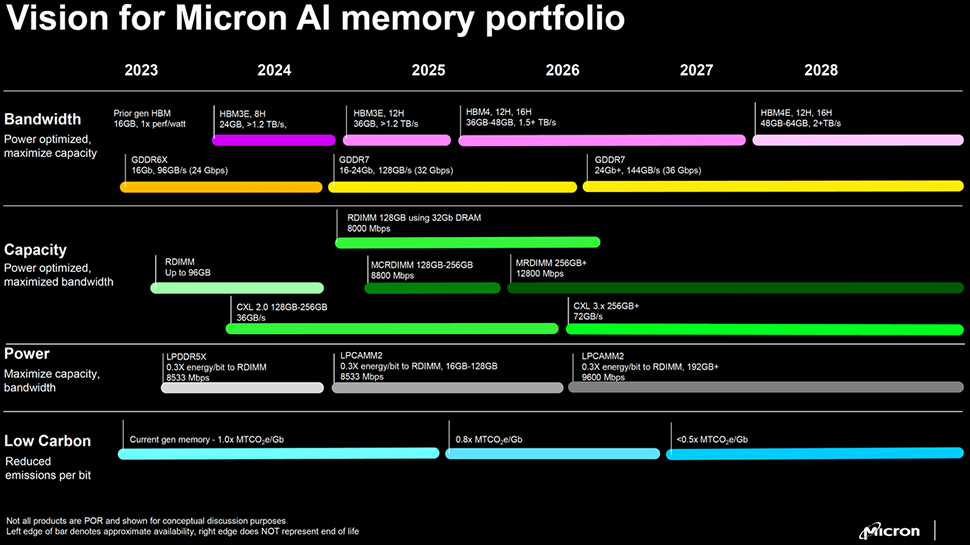

The US semiconductor giant revealed during its fiscal first-quarter 2025 earnings call that it plans to introduce HBM4 memory products in 2026, followed by HBM4E in 2027/2028 with 64GB, 2TB/s parts designed for advanced AI and data center applications.

Sanjay Mehrotra, president and CEO of Micron, highlighted the growing importance of HBM in the company's plans, stating: “The HBM market will show strong growth over the next few years. In 2028, we expect the HBM total addressable market (TAM) to grow four times from the $16 billion level in 2024 and to exceed $100 billion by 2030. Our TAM forecast for HBM in 2030 would be larger than the size of the entire DRAM industry, including HBM, in 2024.”

Coming to flagship GPUs

Expressing his enthusiasm for the next generation of HBM, Mehrotra added: “By leveraging a strong foundation and continued investments in proven 1β process technology, we expect Micron's HBM4 to maintain time-to-market and power-efficiency leadership while boosting performance by more than 50% compared with HBM3E.”

The HBM4E version, expected to arrive in late 2027, will include a customizable logic base die built using TSMC's advanced manufacturing technology. This design feature will allow certain customers to modify the logic layer to better suit their needs, with the aim of improving performance and efficiency.

The upcoming memory solutions are expected to be used in flagship GPUs such as Nvidia's Rubin R100 and AMD's successor to the Instinct MI400x. Micron has already demonstrated market traction with its HBM3E technology. “We are proud to share that Micron's HBM3E 8H is designed into Nvidia's Blackwell B200 and GB200 platforms,” Mehrotra said during the call.

Micron is a relatively new entrant in the HBM space, which is currently dominated by South Korean memory giant SK Hynix and its neighbor and chief competitor, Samsung. Even so, the company remains optimistic about its competitive position.

“Building on our customer design wins and our success in forging deep partnerships with customers, industry enablers, and key technology partners such as TSMC, we expect to be a leading HBM supplier, with the most robust and reliable technology roadmap and an industry-leading track record of execution,” Mehrotra said.
