If you are looking for a project to keep you busy this weekend, you might be interested to know that it is possible to run artificial intelligence in the form of large language models (LLMs) on small single-board computers (SBCs) such as the Raspberry Pi. With the launch of the new Raspberry Pi 5 this month, it’s now possible to carry out more demanding tasks thanks to its increased performance.

Before you start, it’s worth remembering that running AI models, particularly LLMs, on a Raspberry Pi or other SBCs presents an interesting blend of challenges and opportunities. While you trade off computational power and convenience, you gain in terms of cost-effectiveness, privacy, and hands-on learning. It’s a field ripe for exploration, and for those willing to navigate its limitations, the potential for innovation is significant.

One of the best ways of accessing ChatGPT from your Raspberry Pi is to set up a connection to the OpenAI API and build programs in Python, JavaScript or other programming languages that connect to ChatGPT remotely. If you are looking for a locally installed, more private version that runs AI directly on your mini PC, you will need to select a lightweight LLM that is small enough to run on the device and still answer your queries usefully.
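As a rough illustration of the remote approach, the sketch below builds and sends a request to OpenAI’s chat completions endpoint using only the Python standard library. The model name, the `OPENAI_API_KEY` environment variable and the helper names `build_request` and `ask` are assumptions made for illustration, so check the current API documentation before relying on them.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, api_key, model="gpt-3.5-turbo"):
    """Build (but do not send) an HTTP request for a single-turn chat."""
    payload = {
        "model": model,  # assumed model name; use one your account can access
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the text of the first reply."""
    api_key = os.environ["OPENAI_API_KEY"]  # assumed to be set beforehand
    with urllib.request.urlopen(build_request(prompt, api_key)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("Suggest a weekend project for a Raspberry Pi")` returns the reply as a string. All of the heavy computation happens on OpenAI’s servers, which is why this route works even on modest hardware.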

Running AI models on a Raspberry Pi

Watch the video below, created by Data Slayer, to learn more about how this can be accomplished. If you are interested in learning more about how to utilize the power of your mini PC, I definitely recommend you check out his other videos.


Before diving in, it’s important to outline the challenges. Running a full-scale LLM on a Raspberry Pi is not as straightforward as running a simple Python script. These challenges are primarily:

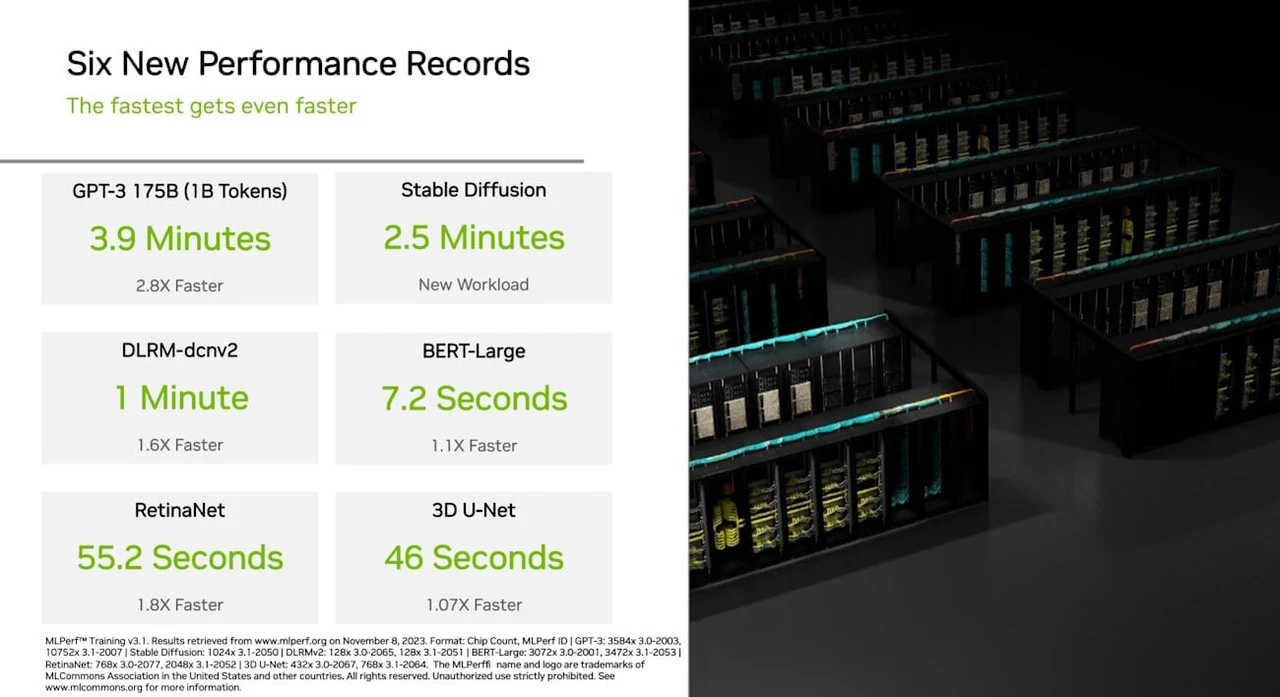

- Limited Hardware Resources: A Raspberry Pi offers far less computational power than a typical cloud-based setup.

- Memory Constraints: RAM can be a bottleneck.

- Power Consumption: LLMs are known to be energy-hungry.
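To make the memory constraint concrete, here is a minimal back-of-the-envelope sketch that estimates how much RAM a model’s weights need at different precisions. The 7 billion parameter count, the 8 GB board and the 1.5 GB headroom for the operating system and runtime are illustrative assumptions, not measurements.

```python
def model_size_gb(n_params, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_in_ram(n_params, bits_per_weight, ram_gb, headroom_gb=1.5):
    """True if the weights fit while leaving headroom for the OS and runtime.

    headroom_gb is an assumed allowance, not a measured value.
    """
    return model_size_gb(n_params, bits_per_weight) + headroom_gb <= ram_gb


# Compare a hypothetical 7-billion-parameter model at three precisions.
for bits in (16, 8, 4):
    size = model_size_gb(7e9, bits)
    verdict = "fits" if fits_in_ram(7e9, bits, ram_gb=8) else "does not fit"
    print(f"7B parameters at {bits}-bit: ~{size:.1f} GB, {verdict} in 8 GB")
```

Under these assumptions only the 4-bit variant leaves enough room on an 8 GB board, which is why lower-precision models matter so much on small devices.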

Benefits of running LLMs on single board computers

Firstly, there’s the compelling advantage of affordability. Deploying AI models on cloud services can accumulate costs over time, especially if you require significant computational power or need to handle large data sets. Running the model on a Raspberry Pi, on the other hand, is substantially cheaper in the long run. Secondly, you gain the benefit of privacy. Your data never leaves your local network, a perk that’s especially valuable for sensitive or proprietary information. Last but not least, there’s the educational aspect. The hands-on experience of setting up the hardware, installing the software, and troubleshooting issues as they arise can be a tremendous learning opportunity.

Drawbacks due to the lack of computational power

However, these benefits come with distinct drawbacks. One major issue is the limited hardware resources of Raspberry Pis and similar SBCs. These devices are not designed to be powerhouses; they lack the robust computational capabilities of a dedicated server or even a high-end personal computer. This limitation is particularly pronounced when it comes to running Large Language Models (LLMs), which are notorious for their appetite for computational resources. Memory is another concern; Raspberry Pis often come with a limited amount of RAM, making it challenging to run data-intensive models. Furthermore, power consumption can escalate quickly, negating some of the cost advantages initially gained by avoiding cloud services.
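Whether power consumption really eats into the savings depends on your own numbers. The sketch below shows the arithmetic. The 10 W draw, the round-the-clock usage and the 0.30 per kWh price are placeholder assumptions, so measure your own board and check your own tariff.

```python
def monthly_energy_cost(watts, hours_per_day, price_per_kwh, days=30):
    """Energy cost of a device drawing `watts` for `hours_per_day` hours a day."""
    kwh = watts * hours_per_day * days / 1000  # watt-hours to kilowatt-hours
    return kwh * price_per_kwh


# Illustrative inputs only; none of these figures are measurements.
cost = monthly_energy_cost(watts=10, hours_per_day=24, price_per_kwh=0.30)
print(f"Estimated monthly energy cost: {cost:.2f}")
```

Comparing this figure with a monthly cloud bill for the same workload gives a rough sense of where the break-even point lies.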

Setting up your mini PC

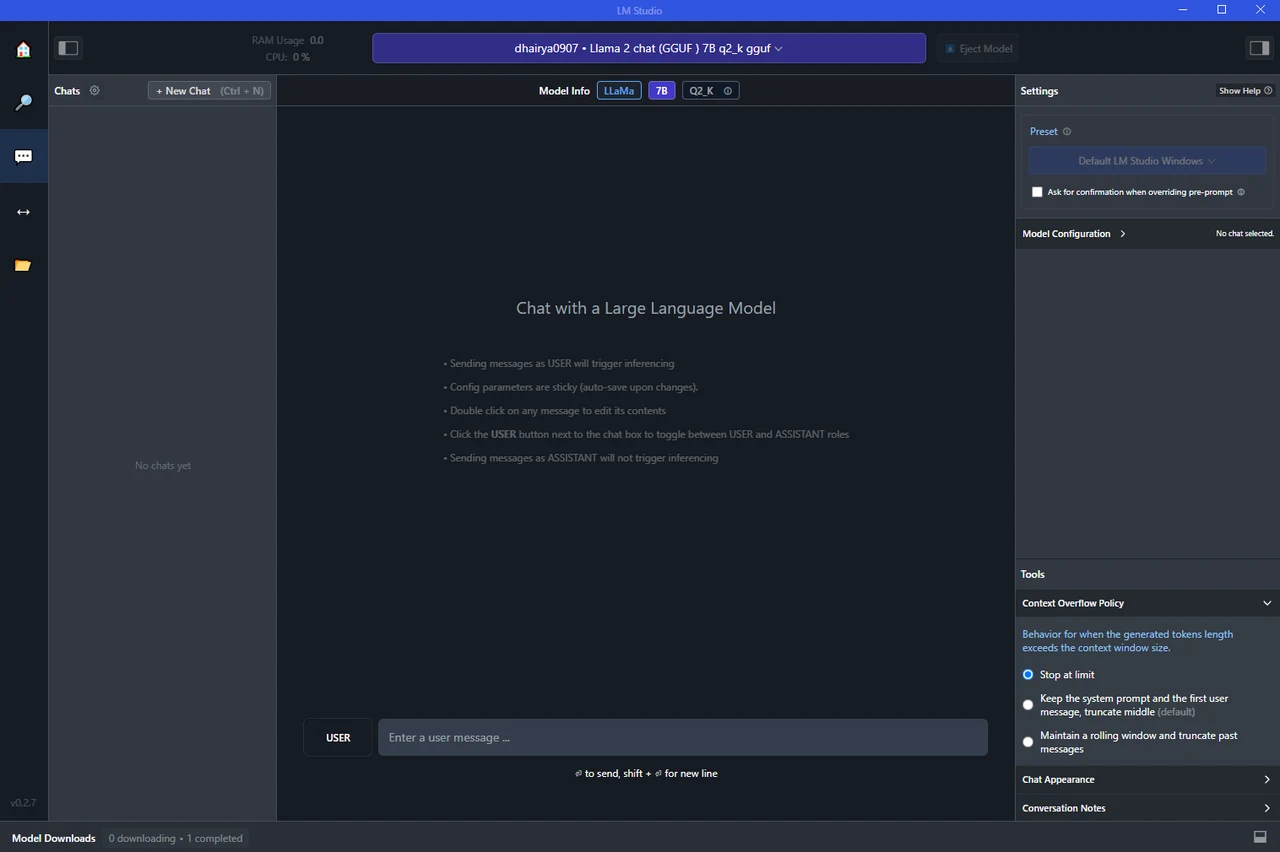

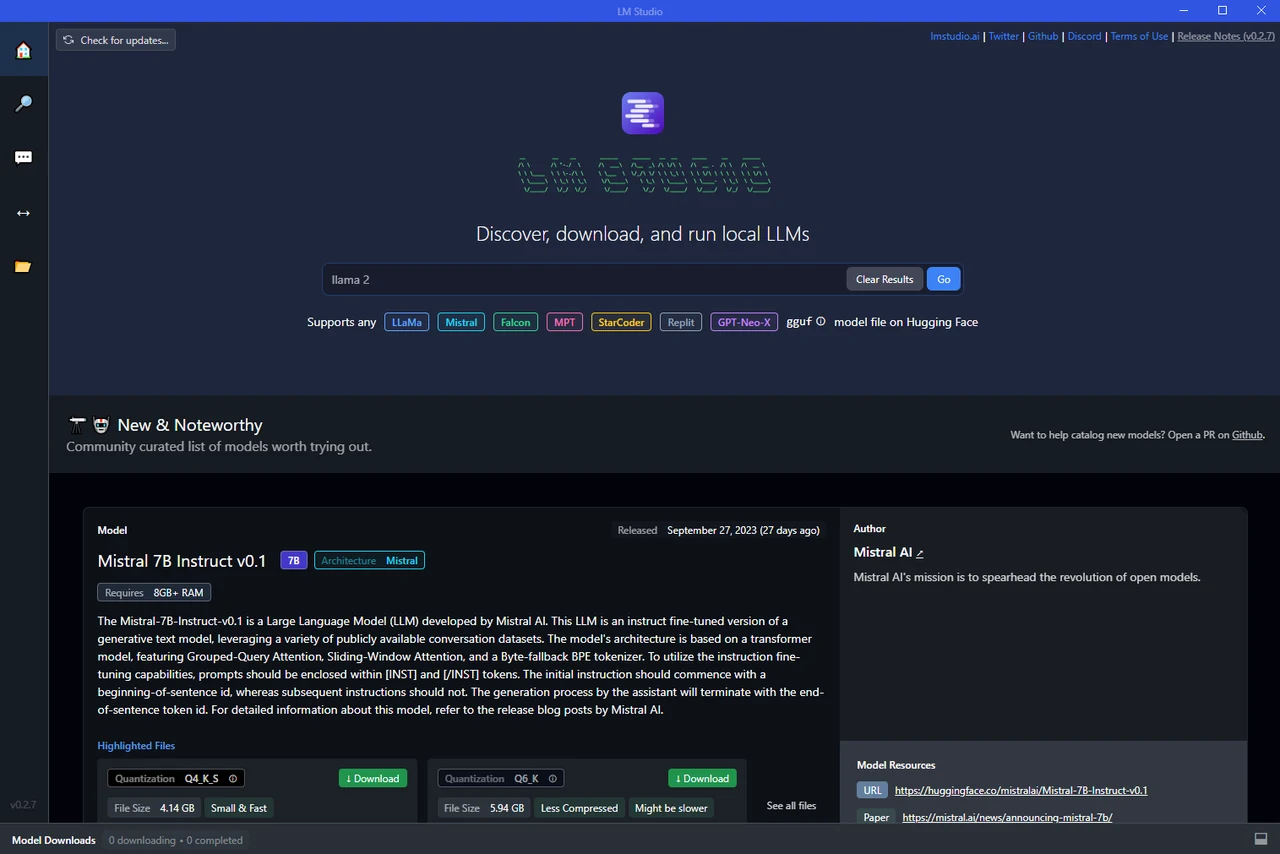

Despite these challenges, there have been advancements that make it possible to run LLMs on small computers like the Raspberry Pi. One notable example is the work of Georgi Gerganov, who ported the Llama model, a collection of LLMs shared by Facebook, to C++. The port supports quantized (lower-precision) model weights, which reduces the size of the model significantly and makes it possible to run on tiny devices like the Raspberry Pi.

Running an LLM on a Raspberry Pi is a multi-step process. First, Ubuntu Server is installed on the Raspberry Pi. An external drive is then mounted to the Pi, and the model is downloaded to the drive. The next step involves cloning a git repo, compiling it, and moving the model into the repo folder. Finally, the LLM is run on the Raspberry Pi. While the process might be a bit slow, it can handle concrete questions well.
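The clone, compile, move and run steps can be sketched as a small dry-run planner that only prints the commands it would issue. The repository URL, the `make` build, the `main` binary name and the `-m`/`-p` flags are assumptions modelled on a llama.cpp-style command line, and the paths are hypothetical. Installing Ubuntu Server and mounting the drive are not covered.

```python
import posixpath
import shlex


def plan_steps(repo_url, model_path, prompt, binary="main"):
    """Return the workflow as a list of commands without executing anything.

    The build command, binary name and -m/-p flags are assumptions; adjust
    them to match the project you actually clone.
    """
    repo_dir = repo_url.rstrip("/").rsplit("/", 1)[-1]
    if repo_dir.endswith(".git"):
        repo_dir = repo_dir[:-4]
    model_in_repo = f"{repo_dir}/{posixpath.basename(model_path)}"
    return [
        ["git", "clone", repo_url],            # clone the git repo
        ["make", "-C", repo_dir],              # compile it
        ["mv", model_path, model_in_repo],     # move the model into the repo
        [f"./{repo_dir}/{binary}", "-m", model_in_repo, "-p", prompt],  # run
    ]


# Hypothetical URL and model path, for illustration only.
for step in plan_steps(
    "https://example.com/someone/llm-port.git",
    "/mnt/external/model.bin",
    "What is the capital of France?",
):
    print(shlex.join(step))
```

Printing the commands first makes it easy to review each one before running it by hand on the Pi.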

It’s important to note that LLMs are still largely proprietary and closed-source. While Facebook has released an open-source version of its Llama model, many others are not publicly available. This can limit the accessibility and widespread use of these models.

Running AI models on compact platforms like Raspberry Pi and other single-board computers (SBCs) presents a fascinating mix of advantages and limitations. On the positive side, deploying AI locally on such devices is cost-effective in the long run, eliminating the recurring expenses associated with cloud-based services. There’s also an increased level of data privacy, as all computations are carried out within your own local network. Additionally, the hands-on experience of setting up and running these models offers valuable educational insights, especially for those interested in the nitty-gritty of both hardware and software.

However, these benefits come with their own set of challenges. The most glaring issue is the constraint on hardware resources, particularly when attempting to run Large Language Models (LLMs). These models are computationally and memory-intensive, and a Raspberry Pi’s limited hardware isn’t built to handle such heavy loads. Power consumption can also become an issue, potentially offsetting some of the initial cost benefits.

In a nutshell, while running AI models on Raspberry Pi and similar platforms is an enticing proposition that offers affordability, privacy, and educational value, it’s not without its hurdles. The limitations in computational power, memory, and energy efficiency can be significant, especially when dealing with larger, more complex models like LLMs. Nevertheless, for those willing to tackle these challenges, the field holds considerable potential for innovation and hands-on learning.
