At Let Loose 2024, Apple revealed big changes coming to its Final Cut software, ones that effectively turn your iPad into a mini production studio. Chief among these is the launch of Final Cut Pro for iPad 2, a direct upgrade to the current app that can take full advantage of the new M4 chip. According to the company, it can render video up to twice as fast as Final Cut Pro running on an M1 iPad.

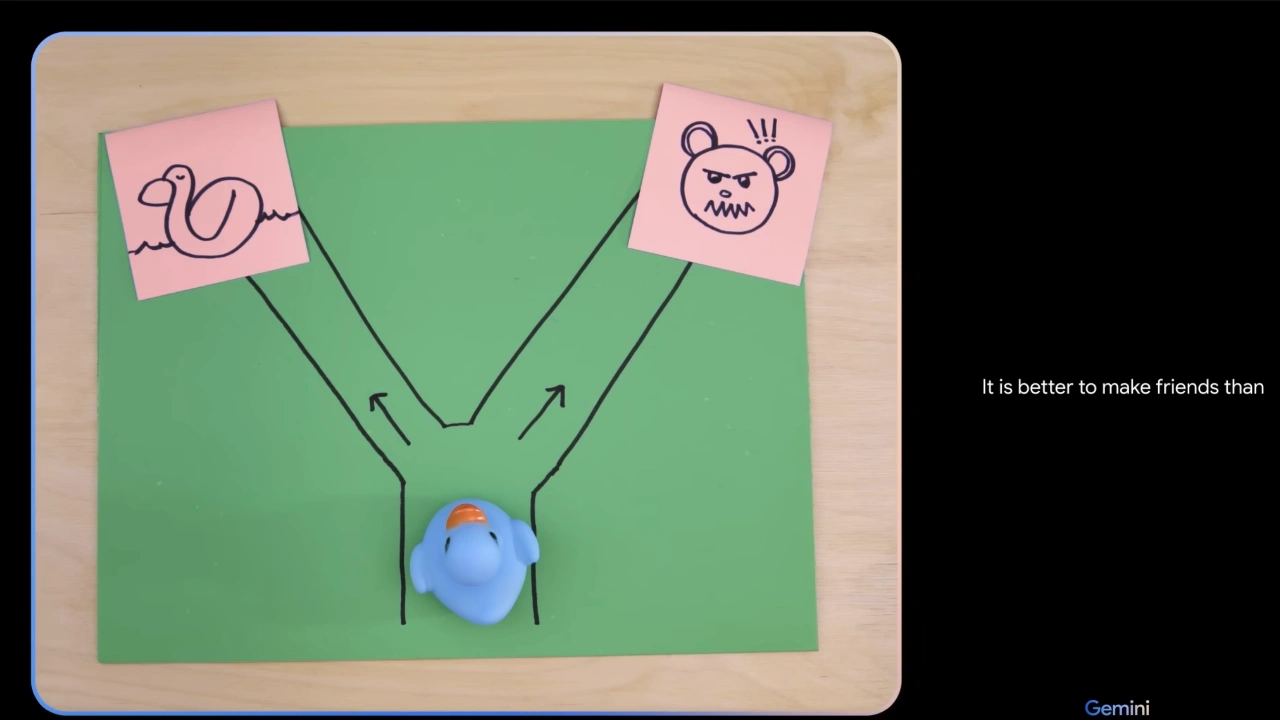

Apple is also introducing a feature called Live Multicam. This allows users to connect their tablet to up to four different iPhones or iPads at once and watch a video feed from all the sources in real time. You can even adjust the “exposure, focus, [and] zoom” of each live feed directly from your master iPad.

In Apple’s demo video, selecting a source expands its footage to fill the entire screen, where you can then make adjustments. Tapping the Minimize icon in the bottom-right corner returns you to the four-way split view. Apple states that previews from the external devices are sent to Final Cut Pro so you can quickly begin editing.

Impactful upgrades

The regular Camera app won’t connect your iPhone to the multicam studio. Instead, users will have to install a new app called Final Cut Camera on their mobile device. Besides Live Multicam compatibility, Apple says the app lets you tweak settings such as white balance and shutter speed to obtain professional-grade recordings. The on-screen interface even lets videographers monitor their footage with a zebra stripe tool and an audio meter.

Going back to the Final Cut Pro update, there are other important features we’ve yet to mention. The app “now supports external projects”, which means you can create a video project on an external storage drive and import media directly to it without using up space on your iPad. Apple is also adding more customization tools, such as 12 additional color-grading presets and more dynamic backgrounds.

Final Cut Pro for Mac is set to receive a substantial upgrade too. Although it won’t support the four iPhone video feeds, version 10.8 does introduce several new tools. For example, Enhance Light and Color offers a quick way to improve color balance and contrast in a clip, among other things. Users can also give video effects and color corrections custom names for easy identification. It’s not a total overhaul, but these changes should take some of the headache out of video editing.

Availability

The three products have different availability dates. Final Cut Pro for iPad 2 launches this spring and will be a “free update for existing users”. For everyone else, it will cost $5/£5/$8 AUD a month or $50/£50/$60 AUD a year. Final Cut Camera is also set to release in the spring and will be free for everyone. Final Cut Pro for Mac 10.8 is likewise a free update for existing users; new users will pay $300/£300/$500 AUD on the Mac App Store.

We don’t blame you if you were totally unaware of the Final Cut Pro changes as they were overshadowed by Apple’s new iPad news. Speaking of which, check out TechRadar’s guide on where to preorder Apple’s 2024 iPad Pro and Air tablets.
