In an era where digital innovation is paramount, AI assistants based on Generative Pre-trained Transformers (GPT) are changing how users interact with websites. This guide walks through the stages of creating advanced custom GPT AI assistants: tools that not only respond to queries but also actively engage users in meaningful interactions. Built on OpenAI’s Assistants API, these assistants can improve both business interactions and user experiences.

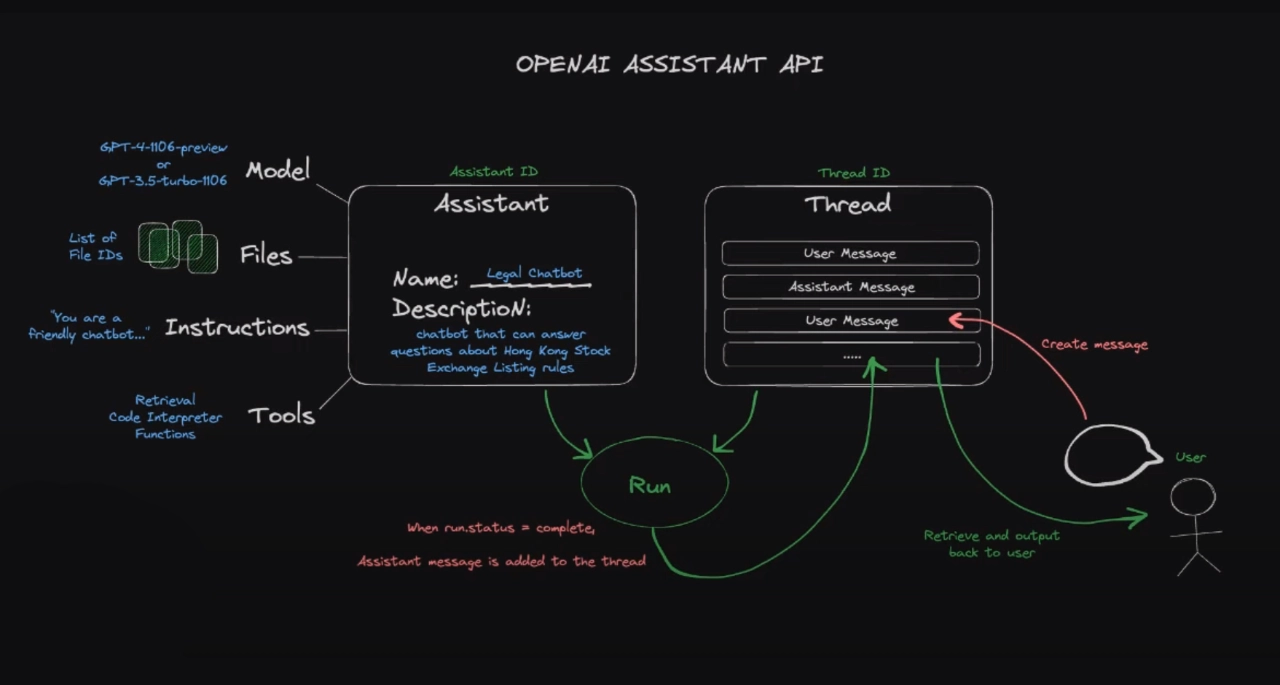

At the core of building custom GPT AI assistants is the Assistants API. It lets you attach tools such as Code Interpreter, Retrieval, and Function calling, which are key to tailoring responses to specific user needs. The Assistants API is still in beta and continues to evolve, giving developers room to experiment with and refine their assistants. A typical integration involves creating an assistant, starting a conversation thread for each user, and responding dynamically to the queries added to that thread.
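As a rough illustration of the first step, the sketch below assembles the JSON body for creating an assistant with the three tool types named above. It assumes the field names of the beta API at the time of writing (`model`, `name`, `instructions`, `tools`) and the tool type strings `code_interpreter`, `retrieval`, and `function`; later API versions have renamed some tools, so check the current reference. The helper name `build_assistant_request` and the example model and function are hypothetical. In the official Python SDK, the same fields are passed to `client.beta.assistants.create(...)`.

```python
import json

def build_assistant_request(name, instructions, model, functions=None):
    """Assemble a create-assistant body (field names assumed from the beta API)."""
    # OpenAI-hosted tools are enabled just by naming their type.
    tools = [{"type": "code_interpreter"}, {"type": "retrieval"}]
    # Each custom function is wrapped in its own {"type": "function"} entry.
    for fn in functions or []:
        tools.append({"type": "function", "function": fn})
    return {
        "model": model,
        "name": name,
        "instructions": instructions,
        "tools": tools,
    }

if __name__ == "__main__":
    body = build_assistant_request(
        name="Solar helper",                       # hypothetical assistant
        instructions="Answer questions about home solar panels.",
        model="gpt-4-1106-preview",                # example model name
    )
    print(json.dumps(body, indent=2))
```

Keeping the body as a plain dictionary makes it easy to inspect or log before sending it with whichever client you use.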

Integrating an OpenAI custom GPT into your website

You can attach up to 20 files to each Assistant, and each file can be at most 512 MB. The total size of all files uploaded by your organization is capped at 100 GB; if you need more storage, you can request an increase through the OpenAI help center.
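Because uploads that exceed these limits are rejected, it can help to check them before uploading. The following is a minimal sketch of such a pre-check using the limits quoted above; the function name `check_upload` is hypothetical, and it assumes "MB" and "GB" mean binary units (MiB and GiB), which may not match how OpenAI counts bytes.

```python
# Limits quoted in this article; binary units are an assumption.
MAX_FILES_PER_ASSISTANT = 20
MAX_FILE_BYTES = 512 * 1024 ** 2      # 512 MB per file
MAX_ORG_BYTES = 100 * 1024 ** 3       # 100 GB per organization

def check_upload(new_file_sizes, existing_assistant_files, org_bytes_used):
    """Return a list of limit violations; an empty list means the upload fits."""
    problems = []
    # Per-assistant file count, including files already attached.
    if existing_assistant_files + len(new_file_sizes) > MAX_FILES_PER_ASSISTANT:
        problems.append("too many files for one assistant")
    # Per-file size limit.
    for index, size in enumerate(new_file_sizes):
        if size > MAX_FILE_BYTES:
            problems.append(f"file {index} exceeds 512 MB")
    # Organization-wide storage cap.
    if org_bytes_used + sum(new_file_sizes) > MAX_ORG_BYTES:
        problems.append("organization storage cap exceeded")
    return problems
```

For example, `check_upload([10 * 1024 ** 2], 0, 0)` returns an empty list, while 21 new files for a fresh assistant produce a "too many files" message.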


Unlike standard chatbots, the Assistants API offers extensive customization. Here are a few of its benefits compared with the GPT versions available in ChatGPT:

- Customizability: Users can build bespoke AI assistants by defining custom instructions and choosing an appropriate model, tailoring the assistant to specific application needs.

- Diverse Toolset: The API supports tools such as Code Interpreter, Retrieval, and Function calling, enabling the assistant to write and run code, retrieve information from uploaded files, and request specific actions that your own code carries out.

- Interactive Conversation Flow: The API allows for creating a dynamic conversation flow. A ‘Thread’ is created when a user initiates a conversation, to which ‘Messages’ are added as the user asks questions. The Assistant then runs on this Thread, triggering context-relevant responses.

- Continuous Development: Currently in beta, the API is continually being enhanced with more functionality, indicating an evolving platform that will grow in capabilities and tools.

- Accessibility and Learning: The Assistants playground offers a user-friendly environment for exploring the API’s capabilities and building AI assistants without writing code, making it accessible even to those with limited technical expertise.

- Feedback Integration: OpenAI encourages feedback through its Developer Forum, suggesting a user-centric approach to development and improvements.
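The Thread, Message, and Run flow described in the list can be modeled in a few lines of plain Python. This is an in-memory sketch of the concepts only, not the OpenAI SDK: the classes are hypothetical, and `echo_model` stands in for the real model call that would run on OpenAI's servers.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str

@dataclass
class Thread:
    # A Thread is created once and keeps every Message added to it.
    messages: List[Message] = field(default_factory=list)

    def add_message(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))

@dataclass
class Assistant:
    instructions: str
    # Stand-in for the model: takes instructions and history, returns a reply.
    respond: Callable[[str, List[Message]], str]

    def run(self, thread: Thread) -> str:
        """Generate a reply from the whole thread and append it to the thread."""
        reply = self.respond(self.instructions, thread.messages)
        thread.add_message("assistant", reply)
        return reply

def echo_model(instructions: str, messages: List[Message]) -> str:
    # Hypothetical placeholder model that simply echoes the latest message.
    return f"You said: {messages[-1].content}"

assistant = Assistant("Be brief.", echo_model)
thread = Thread()                       # user starts a conversation
thread.add_message("user", "Hi")        # user asks a question
assistant.run(thread)                   # assistant runs on the thread
```

After the run, the thread holds both the user's message and the assistant's reply, so a follow-up question is answered with the full history in view.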

This is especially beneficial for niche industries, where the AI can perform specific tasks such as calculating potential savings or capturing leads. The key lies in effectively managing the assistant’s knowledge base and actions, a process that involves integrating multiple APIs. The main stages are outlined below.

Integrating APIs and Coding Your Assistant

Setting up the environment for your custom GPT AI assistant means coordinating several different technologies. Each API has its own integration process and plays a distinct role in the assistant’s functionality. In the coding phase, you program the assistant to use knowledge documents and define custom actions, turning it into a multifaceted tool for your website.
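A custom action is usually declared as a function tool with a JSON Schema describing its parameters; the model then returns the arguments as a JSON string that your code must decode. The sketch below declares a hypothetical `capture_lead` action and validates incoming arguments against the schema's `required` list. The action name, its fields, and the helper `parse_arguments` are illustrative assumptions, and the check covers only required keys, not full JSON Schema validation.

```python
import json

# Hypothetical custom action; the name and fields are illustrative only.
CAPTURE_LEAD_TOOL = {
    "type": "function",
    "function": {
        "name": "capture_lead",
        "description": "Save a website visitor's contact details for follow-up.",
        "parameters": {
            "type": "object",
            "properties": {
                "name": {"type": "string"},
                "email": {"type": "string"},
                "phone": {"type": "string"},
            },
            "required": ["name", "email"],
        },
    },
}

def parse_arguments(tool, raw_arguments):
    """Decode the model's JSON argument string and check required keys."""
    args = json.loads(raw_arguments)
    required = tool["function"]["parameters"].get("required", [])
    missing = [key for key in required if key not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return args
```

Rejecting incomplete arguments early lets your server report a clear error back to the assistant instead of saving a partial lead.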

Implementing AI on your website

Once the assistant is coded, it can be added to your website with an embeddable script. This lets it hold conversations, answer FAQs, and perform specific calculations, such as estimating a customer’s solar savings. This step turns the AI assistant into a tangible, interactive part of your digital presence.
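A calculation like the solar-savings estimate would typically live in your own code and be exposed to the assistant as a function. The sketch below uses a deliberately simplified, hypothetical model: monthly usage is derived from the bill, production is system size times daily sun hours over a 30-day month, and savings cover only the usage that production offsets. It ignores installation cost, panel degradation, price changes, and net-metering rules, so treat it as an illustration rather than a pricing tool.

```python
def estimate_solar_savings(monthly_bill, price_per_kwh, system_kw,
                           sun_hours_per_day, years=20):
    """Rough savings estimate under a simplified, hypothetical model."""
    if price_per_kwh <= 0:
        raise ValueError("price_per_kwh must be positive")
    # Energy the household buys each month, inferred from the bill.
    monthly_kwh_used = monthly_bill / price_per_kwh
    # Energy the system produces in a 30-day month.
    monthly_kwh_produced = system_kw * sun_hours_per_day * 30
    # Only production that replaces purchased energy counts as savings.
    offset_kwh = min(monthly_kwh_used, monthly_kwh_produced)
    annual = offset_kwh * price_per_kwh * 12
    return {
        "annual_savings": round(annual, 2),
        "lifetime_savings": round(annual * years, 2),
    }
```

With a $150 monthly bill at $0.15/kWh and a 6 kW system getting 4.5 sun hours a day, production (810 kWh) is below usage (1,000 kWh), so the estimate is about $1,458 a year.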

The final step is deploying your GPT AI assistant on a server that can handle high traffic volumes and provide consistent, reliable interactions. At this stage the assistant is ready for real-world use as a robust tool for lead generation and customer engagement.

Building advanced custom GPT AI Assistants

The Assistants API, currently in beta, is an OpenAI tool that helps developers build versatile AI assistants. Each assistant can perform a range of tasks and is configured with specific instructions that shape its personality and abilities. A key feature of the Assistants API is that an assistant can access multiple tools in parallel. These range from OpenAI-hosted tools such as Code Interpreter and Knowledge Retrieval to custom tools that you build and host yourself and expose through Function calling.
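With Function calling, the model does not run your code itself: a run that needs a custom tool pauses with status `requires_action`, lists the requested tool calls, and waits for your server to submit their outputs (in the Python SDK, via `client.beta.threads.runs.submit_tool_outputs`). The sketch below handles that hand-off using plain dictionaries shaped like the beta API's `required_action` object as we understand it; the local `capture_lead` function and the helper name `build_tool_outputs` are hypothetical.

```python
import json

def capture_lead(name, email):
    # Hypothetical action: a real site would write to a CRM or database.
    return {"status": "saved", "name": name, "email": email}

# Registry of custom tools this server is willing to execute.
LOCAL_FUNCTIONS = {"capture_lead": capture_lead}

def build_tool_outputs(required_action):
    """Run each requested function locally and collect outputs to submit."""
    outputs = []
    for call in required_action["submit_tool_outputs"]["tool_calls"]:
        fn = LOCAL_FUNCTIONS.get(call["function"]["name"])
        if fn is None:
            # Report unknown tools back instead of failing the whole run.
            result = {"error": "unknown function"}
        else:
            args = json.loads(call["function"]["arguments"])
            result = fn(**args)
        # Outputs are sent back as strings, keyed by the tool call ID.
        outputs.append({"tool_call_id": call["id"], "output": json.dumps(result)})
    return outputs
```

Because several tool calls can arrive in one `requires_action` step, the helper processes the whole list and returns one output per call.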

Another significant aspect of the Assistants API is its use of persistent Threads. Threads facilitate the development of AI applications by maintaining a history of messages and truncating them when the conversation exceeds the model’s context length. This means that a Thread is created once and can be continually appended with Messages as users engage in conversation.
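OpenAI performs this truncation on its side, and its exact strategy is not described here. To show the general idea, the sketch below keeps the most recent messages that fit within a budget and drops older ones. It uses a word count as a crude stand-in for tokens; a real implementation would count tokens with the model's tokenizer. The function name `truncate_history` is hypothetical.

```python
def truncate_history(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the newest messages whose combined size fits within max_tokens."""
    kept = []
    total = 0
    # Walk backwards so the most recent messages are kept first.
    for message in reversed(messages):
        cost = count_tokens(message["content"])
        if total + cost > max_tokens:
            break
        kept.append(message)
        total += cost
    # Restore chronological order.
    kept.reverse()
    return kept
```

For three messages of 3, 2, and 1 words and a budget of 3, only the last two messages survive, since adding the oldest would exceed the budget.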

Assistants can also work with Files in various formats, either attached when the Assistant is created or shared within a Thread between the Assistant and a user. When using tools, Assistants can reference files in their messages and also create new files, such as images or spreadsheets. Together, these features make the Assistants API a capable foundation for AI-assisted development.
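To show files generated or cited by an assistant on your website, you first need their IDs, which can then be used to fetch the file contents from the Files API. The sketch below walks a message's content parts and collects those IDs. It assumes the beta API's message shape as we understand it: `image_file` parts for generated images, and `file_path` or `file_citation` annotations on text parts. Verify these field names against the current API reference; the helper name `collect_file_ids` is hypothetical.

```python
def collect_file_ids(message):
    """Return IDs of files an assistant message produced or cited (assumed shape)."""
    file_ids = []
    for part in message.get("content", []):
        if part["type"] == "image_file":
            # Images created by a tool such as Code Interpreter.
            file_ids.append(part["image_file"]["file_id"])
        elif part["type"] == "text":
            for note in part["text"].get("annotations", []):
                if note["type"] == "file_path":
                    # Files generated by a tool, e.g. a spreadsheet.
                    file_ids.append(note["file_path"]["file_id"])
                elif note["type"] == "file_citation":
                    # Uploaded files quoted in the reply.
                    file_ids.append(note["file_citation"]["file_id"])
    return file_ids
```

Collecting IDs separately from downloading keeps the parsing logic testable without network access.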

Creating an advanced custom GPT AI assistant for your website is more than a technical project; it is a strategic move to improve digital interaction and support business growth. With tools such as the Assistants API and integrations with other APIs, you can build an assistant that not only answers questions but also adds tangible value for your business or clients. For more information and the full documentation of the Assistants API, visit OpenAI’s official website.


Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, timeswonderful may earn an affiliate commission. Learn about our Disclosure Policy.