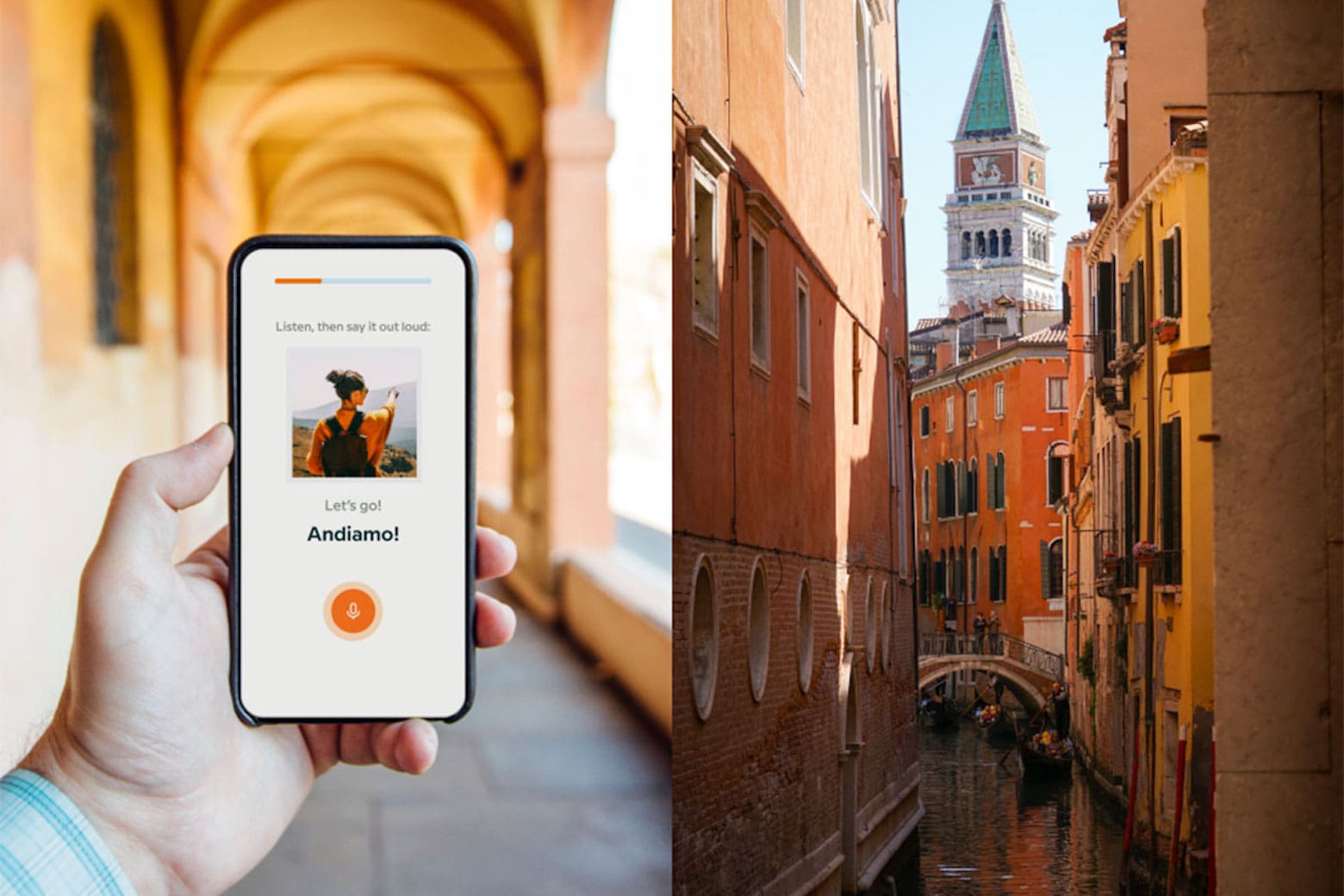

If you want to make the most of your travels, improve your résumé, or simply broaden your skills, learning a new language is a great way to level up. One of the easiest ways to do it (and to fit learning around your schedule) is with a handy language app like Babbel. And you can get it for less with this limited-time Babbel language app deal.

Until June 17, you can get lifetime access to Babbel for just $149.97 (regularly $599) from Cult of Mac Deals. Don't miss this chance to master multiple languages at a reduced price!

Learn a new language in less than a month

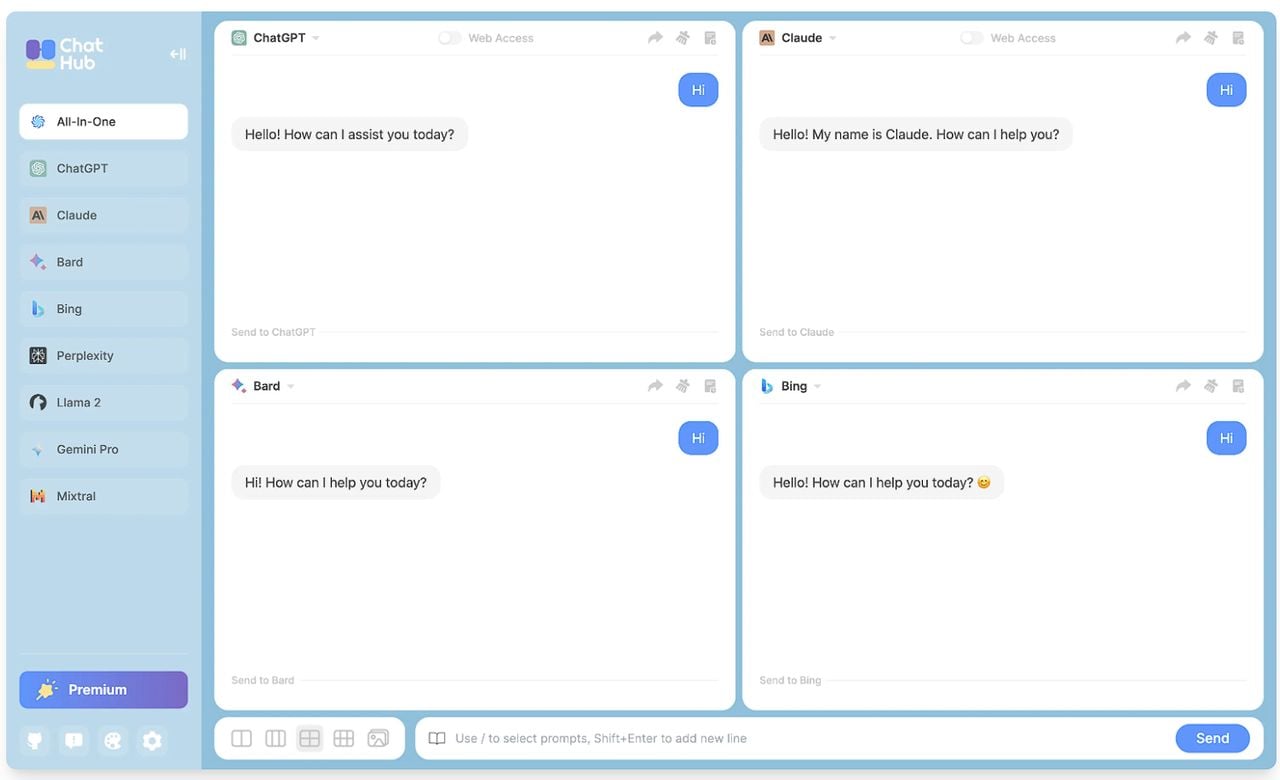

Learning multiple languages gets much easier with the right language app. With more than 10 million users worldwide, Babbel is the top-grossing language learning app in the world. For a single one-time price, you can learn languages for life.

That gives you unlimited access to more than 10,000 hours of high-quality language lessons, forever. This deal covers all 14 of Babbel's language courses: Danish, Dutch, English, French, German, Indonesian, Italian, Norwegian, Polish, Portuguese, Russian, Spanish, Swedish and Turkish.

When we say high quality, we mean it. The app offers simple, bite-sized lessons developed by language experts to help you understand and speak your new language quickly and confidently. That means you won't need to spend hours on end honing your language skills.

Along the way, Babbel uses speech recognition technology to analyze your pronunciation and give you feedback, helping you develop proper speaking habits.

Highly rated language learning app

Babbel has exceptionally high user ratings, with 4.7 out of 5 stars in the App Store from nearly 600,000 reviews. On Google Play, it holds 4.6 out of 5 stars (from nearly one million reviews).

The language app has also earned glowing reviews from critics, along with a host of industry awards. These include features in The Wall Street Journal, Forbes, CNN and CNET, and Fast Company named it "the most innovative company in education." As the experts at PCMag put it, "Babbel exceeds expectations by offering high-quality, self-paced courses."

Save on a lifetime subscription with this Babbel language app deal

New users can now get lifetime access to the Babbel language learning app for just $149.97 (regularly $599). But hurry, because this 74% discount ends soon.

Buy from: Cult of Mac Deals

Prices are subject to change. All sales are handled by StackSocial, our partner who runs Cult of Mac Deals. For customer support, please email StackSocial directly. We originally published this post about the Babbel language app deal on September 9, 2022. We have since updated the pricing information.
