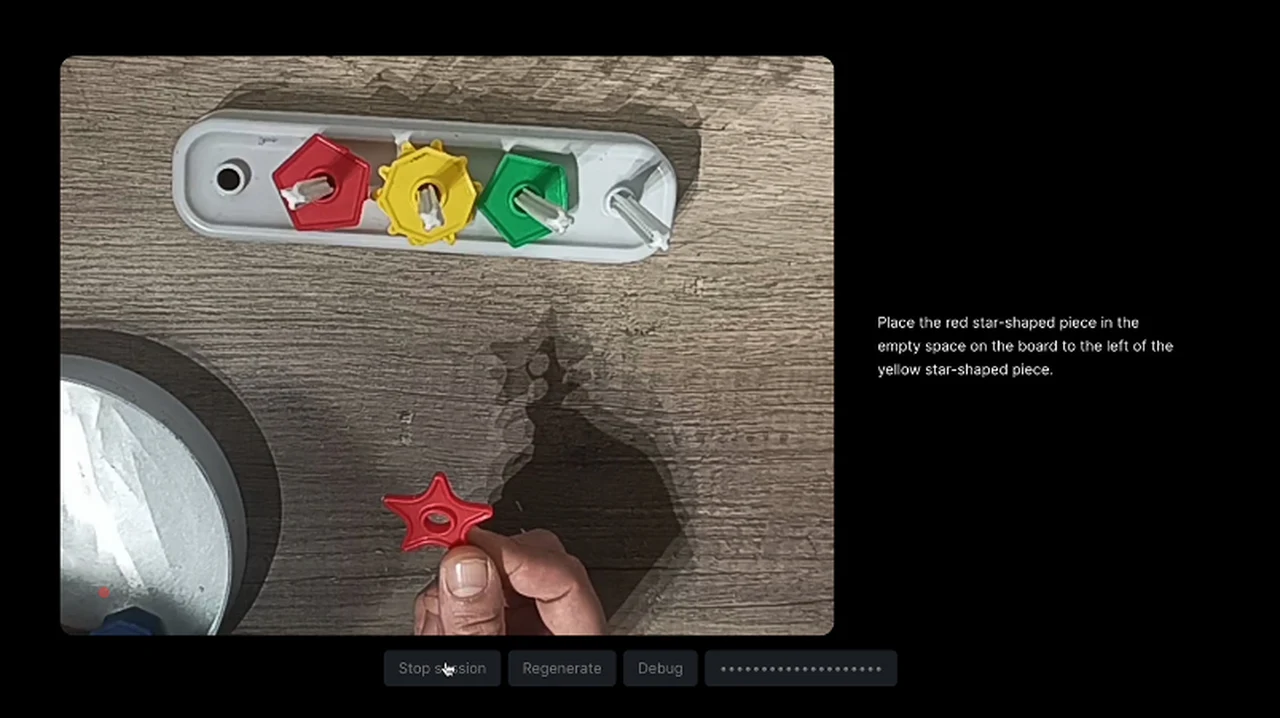

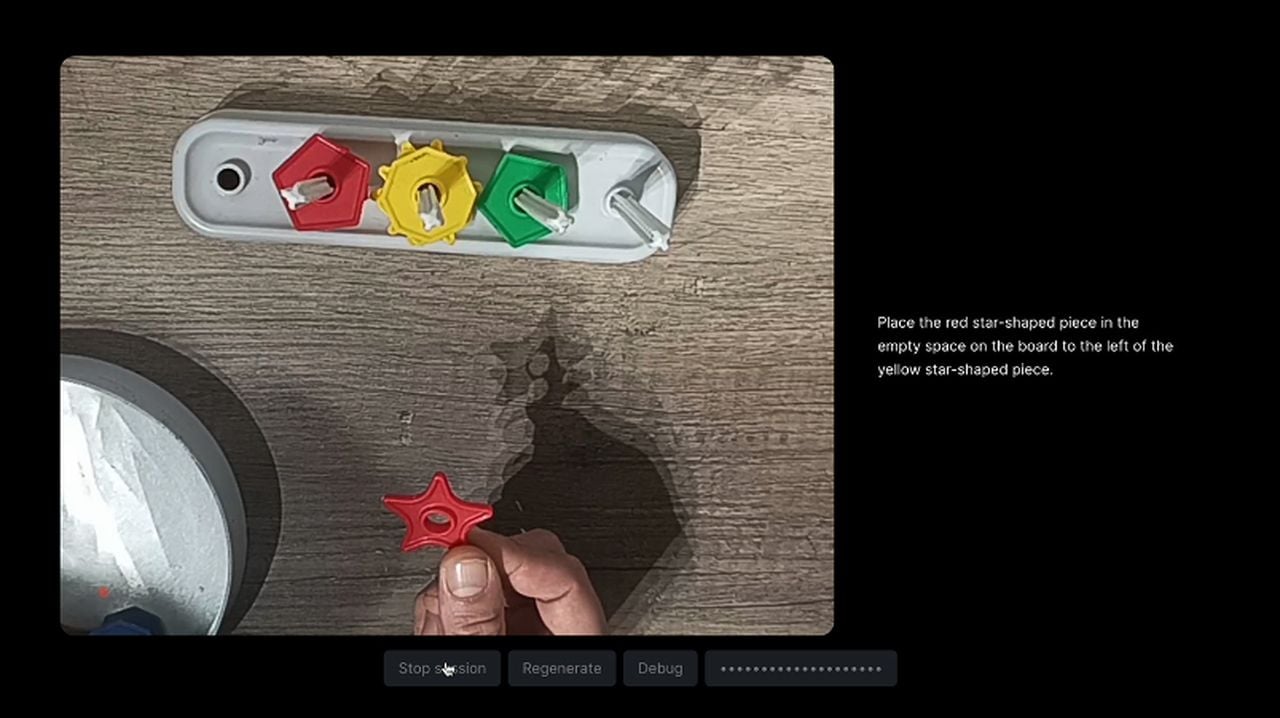

Website and user interface designers might be interested in a new application that transforms sketches into coded user interfaces. Currently in its early development stages, the AI-powered Draw a UI app offers an insight into how AI can be used to create user interfaces for a wide range of applications, from website designs to mobile apps. Creating a user interface (UI) is a task that blends aesthetics, functionality, and user engagement, and Draw a UI shows how closely creativity and technology can work together in UI design.

In the world of digital applications, the user interface (UI) is akin to a bridge that connects human interaction with the digital realm. It’s the first thing users encounter and, consequently, forms the cornerstone of their experience. In this digital age, where applications pervade every aspect of our lives, understanding the nuances of UI design becomes imperative.

Draw a UI converts UI sketches into HTML code in real time, directly within your web browser, which makes UI design more accessible and efficient. Its drag-and-drop feature streamlines the design process, especially for those with limited coding expertise, and makes creating UI designs more intuitive. It also offers code customization options, allowing you to tailor the generated code to your specific needs and preferences.

AI user interface design

GPT-4 Vision to make UIs

One of the most notable features of the Draw a UI app is its integration with the GPT-4 Vision API. This lets the tool interpret visual content such as a hand-drawn sketch and produce corresponding HTML code. The feature is particularly useful for those who prefer to sketch their UI designs manually before converting them into code. The HTML output from Draw a UI is styled using Tailwind CSS, a modern, utility-first CSS framework whose responsive utilities help the generated designs adapt to various screen sizes and devices.
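To make the sketch-to-code step more concrete, here is a minimal sketch of how a drawing might be packaged for a vision-capable chat model. It is not Draw a UI's actual code: the function name `build_vision_request`, the prompt wording, and the model name are assumptions for illustration. The message layout follows the text-plus-`image_url` format used by OpenAI's chat completions API, and the example only builds the request body without sending it.

```python
import base64

# Hypothetical prompt; the real tool's prompt is not given in this article.
PROMPT = (
    "Turn this hand-drawn wireframe into a single HTML file "
    "styled with Tailwind CSS. Return only the HTML."
)


def build_vision_request(png_bytes: bytes, model: str = "gpt-4-vision-preview") -> dict:
    """Build a chat-completions style request body pairing a prompt with a sketch image.

    The model name is an assumption; check which vision models your account can use.
    """
    # Images can be passed inline as a base64-encoded data URL.
    image_b64 = base64.b64encode(png_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": 4096,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": PROMPT},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{image_b64}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real PNG export of the sketch.
    body = build_vision_request(b"abc")
    print(body["messages"][0]["content"][1]["image_url"]["url"])
```

The resulting dictionary would be sent as the JSON body of an authenticated request, and the HTML in the model's reply could then be rendered in the browser.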

While Draw a UI showcases remarkable potential, it’s important to note that it is currently a demo tool and not yet intended for production use. The absence of authentication methods, crucial for code security and integrity, is a key limitation at this stage. For those curious about exploring “Draw a UI,” the tool can be installed locally. This process requires access to the GPT-4 Vision API, which powers the tool’s ability to interpret visual content. Detailed instructions are provided to ensure a smooth setup experience.

The role of user interface design in user experience

- First Impressions: The UI is often the first point of contact between the user and the application. A well-designed interface not only captivates users but also establishes a tone for their entire experience.

- Usability: At its core, a good UI is about usability. It’s about creating a seamless path for users to accomplish their goals, whether it’s booking a flight or checking the weather.

- Accessibility: Inclusivity is key. UI design should cater to a diverse audience, ensuring accessibility for people with different abilities.

Considerations in UI Design

- Simplicity: The mantra ‘less is more’ holds particularly true in UI design. A clutter-free, intuitive design is paramount.

- Consistency: Keeping design elements consistent across the application enhances user familiarity and comfort.

- Feedback: Immediate feedback for user actions, like a confirmation after a button press, is crucial in keeping users informed and engaged.

The technical side of user interface design

When designing a user interface, it’s important to consider a range of technical factors, some of which are outlined below. Each must be addressed to create an ergonomic, user-friendly interface that works across a wide variety of devices and platforms.

The digital world today is a mosaic of devices, each with varying screen sizes and resolutions. Responsive design in UI is not just a feature; it’s a necessity. It ensures that a digital application is accessible and functional across different devices, from the smallest smartphones to the largest desktop monitors.

Responsive design employs fluid grid layouts that adjust to the screen size, ensuring content is readable and accessible regardless of the device. Media queries, a staple of responsive design, allow designers to apply specific styles based on the device’s characteristics, such as its width, height, or orientation. This adaptability enhances the user experience by providing a seamless interaction across all platforms.
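As a rough illustration of how width-based media queries work, the sketch below models mobile-first breakpoints in Python. The breakpoint values are Tailwind CSS's defaults; the helper names `active_prefixes` and `media_query` are hypothetical and exist only for this example.

```python
from typing import List

# Tailwind CSS default breakpoints (minimum viewport width in pixels).
TAILWIND_BREAKPOINTS = {"sm": 640, "md": 768, "lg": 1024, "xl": 1280, "2xl": 1536}


def active_prefixes(viewport_width: int) -> List[str]:
    """Return the breakpoint prefixes whose min-width rule matches this viewport."""
    return [
        name
        for name, min_width in TAILWIND_BREAKPOINTS.items()
        if viewport_width >= min_width
    ]


def media_query(prefix: str) -> str:
    """Return the CSS media query that a mobile-first breakpoint prefix stands for."""
    return f"@media (min-width: {TAILWIND_BREAKPOINTS[prefix]}px)"


if __name__ == "__main__":
    print(active_prefixes(375))   # a typical phone width matches no breakpoint
    print(active_prefixes(800))   # a small tablet matches "sm" and "md"
    print(media_query("lg"))
```

Because the rules are mobile-first, unprefixed styles apply at every width, and each matching prefix layers additional styles on top as the viewport grows.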

Animations in UI design are not just decorative elements; they serve functional purposes as well. Subtle animations can guide users through tasks, provide feedback on their actions, and clarify the flow of application usage. When implemented thoughtfully, animations can make complex interactions feel simple and intuitive.

By incorporating animations, designers can create a more engaging and interactive experience. Animations like button expansions, loading indicators, and transition effects not only add aesthetic value but also provide useful cues to the user, making the digital experience more dynamic and responsive to their actions.

In the world of UI design, performance is synonymous with user satisfaction. A UI that is slow to respond can lead to user frustration, abandonment of the application, and negative perceptions of the brand. Ensuring that the UI is optimized for performance, with minimal load times and quick response to user inputs, is as crucial as its visual design.

Optimizing for Efficiency

Performance optimization involves various techniques, from reducing image sizes and using efficient code to leveraging browser caching and minimizing HTTP requests. A well-optimized UI ensures that resources are used judiciously, leading to faster interactions and a smoother user experience.
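Browser caching is one of the easier techniques to show in code. The sketch below picks a `Cache-Control` header value for a static file, assuming that asset filenames carry a content hash so they can be cached for a long time. The function name, the suffix list, and the policy itself are illustrative assumptions rather than a recommendation for any particular site.

```python
from pathlib import PurePosixPath

# Assumed set of static asset types that are safe to cache for a long time.
LONG_LIVED_SUFFIXES = {".css", ".js", ".png", ".jpg", ".webp", ".woff2"}


def cache_control_for(path: str, fingerprinted: bool = True) -> str:
    """Choose a Cache-Control header value for a URL path.

    Fingerprinted assets (for example app.3f9a1c.js) never change under the
    same name, so they can be cached for a year. Everything else is
    revalidated with the server before reuse.
    """
    suffix = PurePosixPath(path).suffix.lower()
    if fingerprinted and suffix in LONG_LIVED_SUFFIXES:
        return "public, max-age=31536000, immutable"
    return "no-cache"


if __name__ == "__main__":
    print(cache_control_for("/assets/app.3f9a1c.js"))
    print(cache_control_for("/index.html"))
```

Serving HTML with `no-cache` while caching hashed assets aggressively means returning visitors re-download only what has actually changed.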

Responsive design, animation, and performance are integral components of modern UI design. Each plays a unique role in enhancing the user experience, ensuring that digital applications are not only visually appealing but also functionally robust and user-friendly. In the rapidly evolving digital landscape, attention to these aspects is paramount in creating interfaces that resonate with users and stand the test of time.

A/B Testing: The Art of Comparison and Choice

A/B testing, at its core, is a comparative analysis method. It involves creating two versions of a UI – Version A and Version B. These versions are typically similar, with one or two key differences that could impact user behavior. For instance, Version A might feature a green call-to-action button, while Version B uses a red one.

Users are randomly exposed to either version without prior knowledge of the test. Their interactions with each version are closely monitored and analyzed. Metrics like click-through rates, conversion rates, time spent on the page, and user engagement levels are gathered to determine which version performs better.

The outcome of A/B testing provides concrete, data-driven insights. It helps in making informed decisions about which elements of the UI work best in achieving desired user actions and improving overall user experience. This method takes guesswork out of the equation, allowing designers to base their decisions on actual user data.
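The mechanics described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions, not a full experimentation framework: users are bucketed with a hash of their ID (a deterministic stand-in for random assignment), and the two conversion rates are compared with a standard two-proportion z-test. The function names, the experiment name, and the figures in the usage section are all made up for illustration.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "cta-color") -> str:
    """Deterministically place a user in variant A or B.

    Hashing the experiment name with the user ID gives a stable, roughly
    even split, so a returning user always sees the same version.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def conversion_rate(conversions: int, visitors: int) -> float:
    """Fraction of visitors who completed the desired action."""
    return conversions / visitors if visitors else 0.0


def two_proportion_z(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """z-statistic for the difference between two conversion rates (B minus A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_b - p_a) / std_err


if __name__ == "__main__":
    print(assign_variant("user-42"))
    # Invented figures: 100 of 1,000 visitors converted on A, 130 of 1,000 on B.
    z = two_proportion_z(100, 1000, 130, 1000)
    print(round(z, 2))
```

A z-statistic with an absolute value above roughly 1.96 corresponds to significance at the 5% level in a two-sided test, which is a common threshold for declaring a winner.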

Gathering Insights

User feedback is an indispensable part of the UI design process. It involves collecting opinions and experiences directly from the users. This can be done through various means such as surveys, interviews, user testing sessions, or feedback forms within the application.

The Role of Feedback in UI Refinement

Incorporating user feedback is crucial for several reasons:

- Identifying Pain Points: Users can highlight issues and pain points that designers might not have foreseen.

- Understanding User Needs: Feedback provides a deeper understanding of what users actually need and value in the UI.

- Continuous Improvement: UI design is not a one-time task but a continuous process of iteration. User feedback is the driving force behind this iterative process, ensuring that the UI evolves to meet changing user needs and preferences.

By prioritizing user feedback, designers cultivate a user-centric approach to UI design. This approach ensures that the final product is not just aesthetically pleasing but also functionally relevant and user-friendly.

While a visually appealing UI can draw users in, its true effectiveness lies in its functionality. The goal is to strike a balance where the design is not only pleasing to the eye but also facilitates ease of use.

A/B testing and user feedback are instrumental in the UI design process. They provide a structured approach to understanding user preferences and behaviors, allowing designers to make informed decisions and continuously improve the UI. In the dynamic field of digital applications, these methods are key to creating interfaces that resonate with users and drive engagement.

The Business Implications of UI Design

- Brand Identity: The UI is a reflection of a company’s brand. A distinctive and thoughtful design can set an application apart in a crowded market.

- User Retention: An intuitive and efficient UI can significantly enhance user satisfaction, leading to higher retention rates.

- Conversion Rates: In eCommerce, for example, a well-designed UI can streamline the shopping process, directly impacting conversion rates.

Draw a UI uses OpenAI’s GPT-4 Vision API to provide instant code generation, drag-and-drop design functionality, and customization options. Although it is currently only a demo, it shows how AI could shape the future of web development and UI design.
