The video below compares Google NotebookLM with Google Bard, both of which are powered by Google’s Gemini Pro. Google has long been a pioneering force in digital tools and artificial intelligence, and these two recent offerings have sparked significant interest, particularly in scientific circles.

If you’re curious about how these tools compare, especially in handling scientific literature and data, the video addresses exactly that question.

Unveiling NotebookLM

At the forefront of this comparison is NotebookLM, a tool designed to enhance your research and study process. NotebookLM stands out by letting users upload a variety of personal documents, ranging from PDFs and Google Docs to videos and audio files. The language model can then reference these materials directly, offering a more tailored experience. Access to NotebookLM was initially limited to the US, though users in Europe can reach it through a VPN.

A Nod to Privacy

For those concerned about the privacy of their data, the video reassures viewers that personal documents uploaded to NotebookLM are not used to train the model. Your data remains private, accessible only to you or your chosen collaborators. This is crucial given the sensitivity of data in the scientific community.

Methodology Behind the Test

The presenter in the video adopts a meticulous approach to testing NotebookLM. They upload 13 scientific papers on a topic named ‘Halison’ and observe how NotebookLM and Bard respond to various queries. This direct comparison provides a clear insight into the strengths and limitations of each tool.

Comparative Insights

On general knowledge questions, Bard tends to deliver answers in a more conversational, Wikipedia-like style. NotebookLM, on the other hand, provides responses that are more concise and scientifically oriented. However, on complex queries about Halison’s mechanism, the additional sources fed into NotebookLM don’t significantly improve its responses over Bard’s.

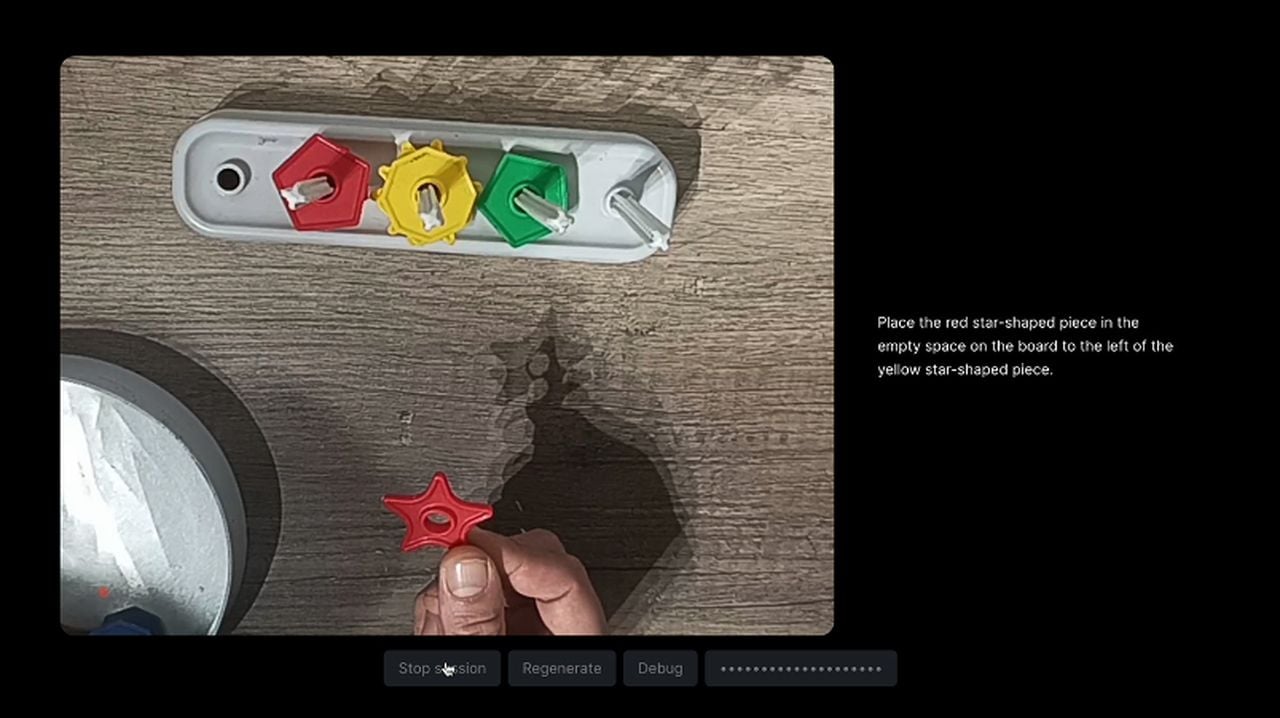

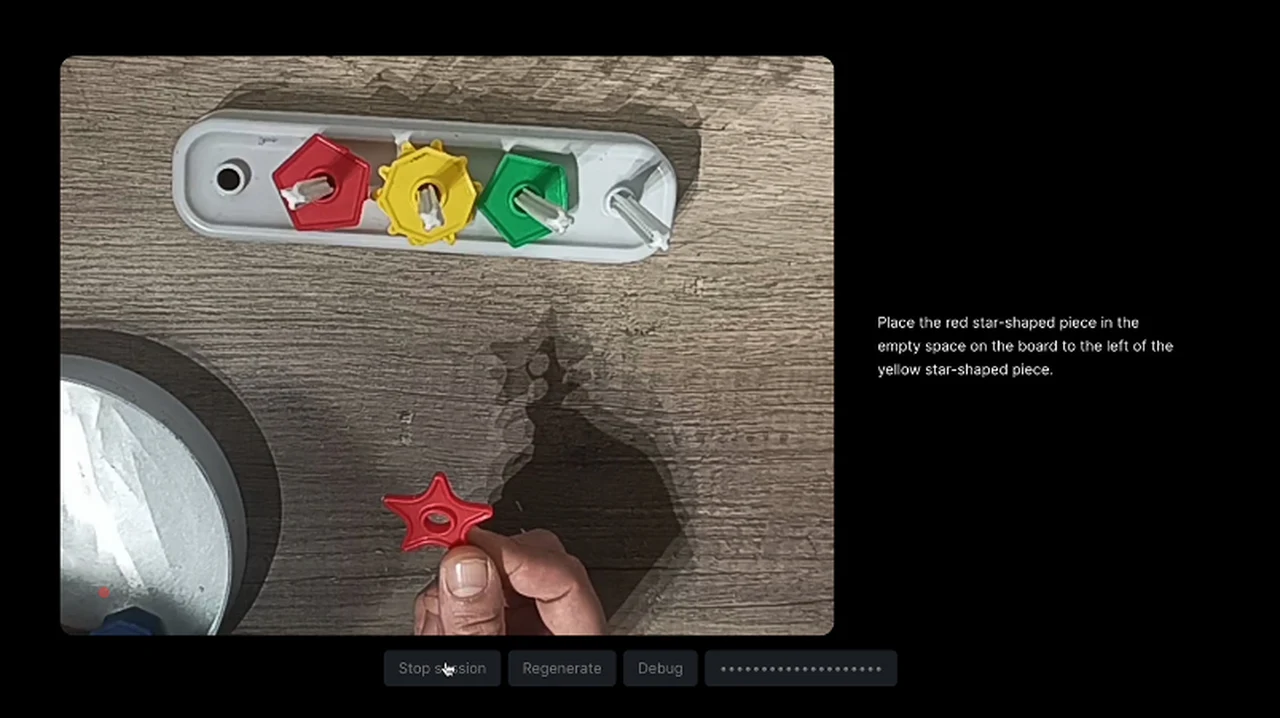

Tackling Scientific Data: A Challenge

A notable limitation of NotebookLM emerges in its handling of figures and diagrams within scientific papers. While proficient with textual sources, it struggles to interpret graphical data correctly. This is particularly evident in the analysis of a specific paper on Halison, where NotebookLM’s inability to process visual information hampers its effectiveness.

Textual Analysis: NotebookLM’s Forte

Despite its challenges with visual data, NotebookLM shows a strong ability to handle purely textual sources. This prowess, however, is somewhat overshadowed by its current limitations in processing multimodal data, which is often crucial in scientific research.

Looking Ahead: The Potential for Growth

While the presenter concludes that NotebookLM is not yet ready for prime time in scientific research, it has clear potential for growth. Future development, especially in handling multimodal data effectively, could greatly enhance its utility in the scientific community.

As technology continues to evolve, tools like NotebookLM and Bard are testaments to the ongoing innovation in the field of artificial intelligence. Each tool, with its unique capabilities and limitations, offers a glimpse into the future of scientific research and data analysis. If you are wondering how these tools can be integrated into your research, keep an eye on their development, as they hold the promise of transforming the way we handle scientific data.

Source: AI Matej
