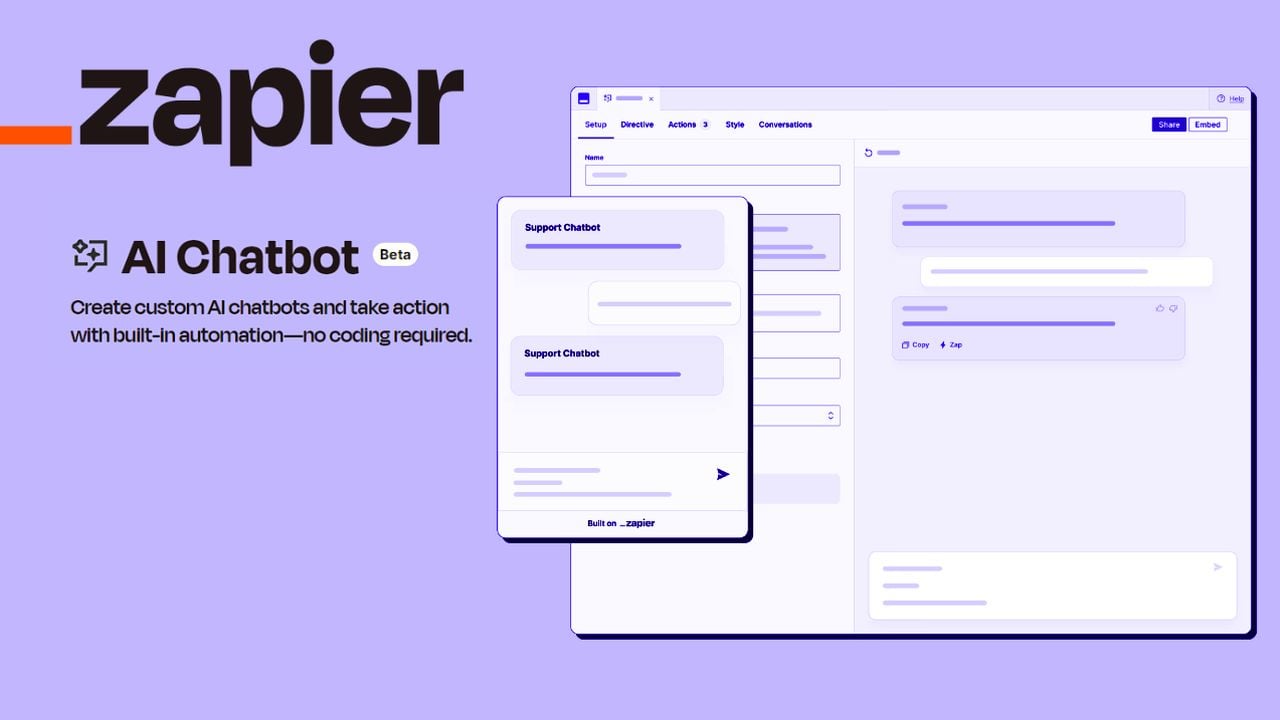

Zapier, the well-known automation platform, has upgraded its AI chatbot capabilities, giving businesses new ways to improve customer service and productivity. The updates are aimed especially at companies that want to use chatbots to increase customer engagement and streamline their operations.

At the heart of these enhancements is a new interface, fully integrated into the Zapier platform, that simplifies creating, customizing, and deploying AI chatbots. The interface is user-friendly and offers a range of pre-built templates, which can save businesses considerable time during setup.

The update also expands the customization options available to users. Businesses can now tailor their chatbots to match their brand’s look and feel and their specific operational needs, so each chatbot can offer users a distinctive and engaging experience.

Zapier AI chatbots

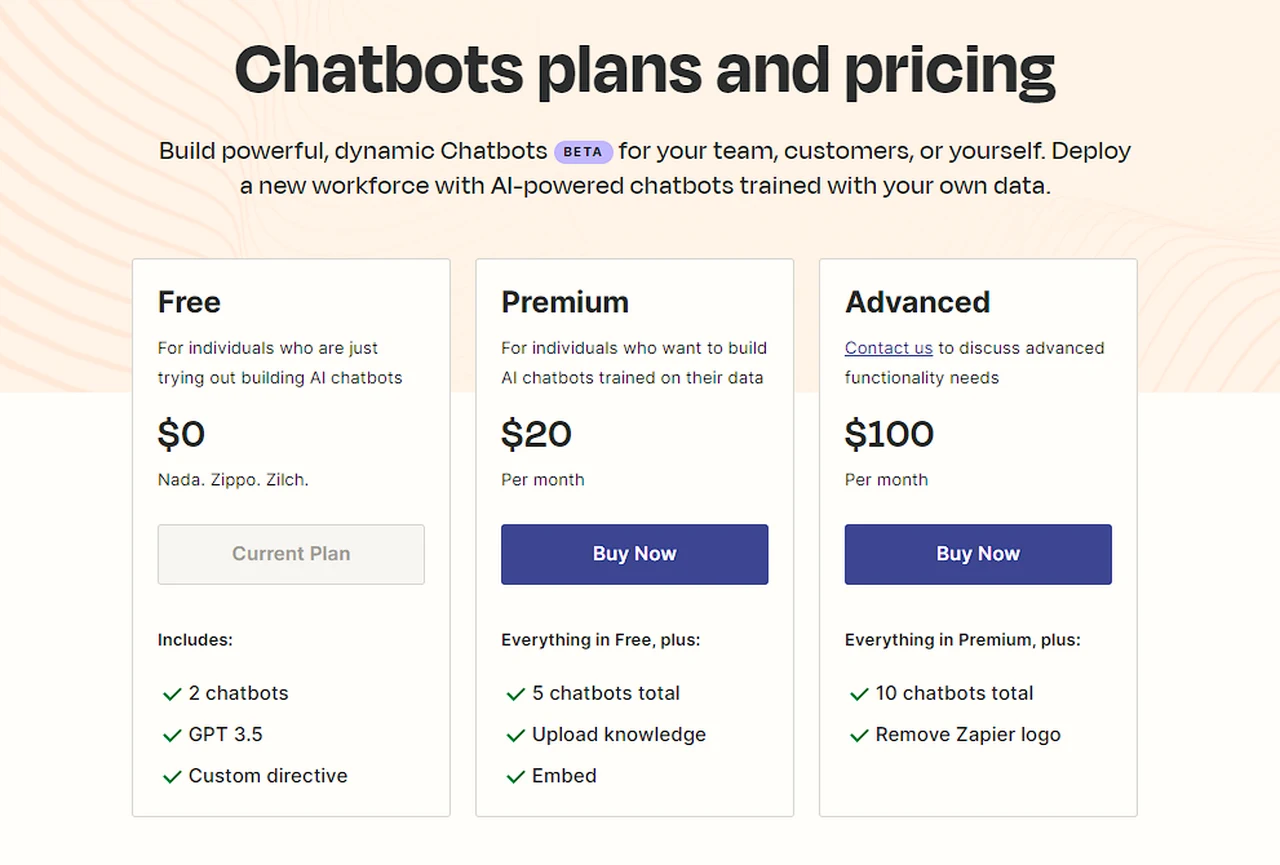

Zapier has also introduced a new pricing structure for its chatbot services, with tiers to suit different needs and budgets. The tiers run from a free plan through premium and advanced plans, with each level adding more complex features and capabilities. The premium tier, for example, provides access to more sophisticated AI models and the ability to connect the chatbot to your existing data sources, which can substantially improve its conversational abilities.

Zapier AI chatbots pricing

For organizations with a high volume of customer interactions, the advanced plan is particularly attractive. It allows a larger number of chatbots and adds further features, making it well suited to businesses that need a robust chatbot system.

One of the most significant advantages of the new chatbot features is the potential for improved efficiency. When trained on website data, the chatbots can handle a wide range of customer queries on their own, from answering simple questions to guiding users through more complex processes. This improves the overall customer experience and frees up your team’s time for other important tasks.

Zapier’s latest update could substantially change the way businesses interact with their customers. With an intuitive interface, broader customization, and flexible pricing, the new features are designed to help businesses build smarter, more responsive chatbots that align with their objectives. Whether you want to improve customer service or optimize your operations, Zapier’s chatbot enhancements offer useful tools for doing so.

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, timeswonderful may earn an affiliate commission. Learn about our Disclosure Policy.