As artificial intelligence (AI) moves quickly, being able to prototype AI applications fast matters more than ever. For people who are excited about AI but are not comfortable writing code, Ironclad’s Rivet offers an alternative: a graphical environment where you build AI prototypes by connecting components on a canvas instead of writing code.

Rivet is aimed at people without a programming background who still want to build sophisticated AI systems. Its graphical interface keeps the focus on what the application should do and how its parts fit together, rather than on the syntax of a programming language.

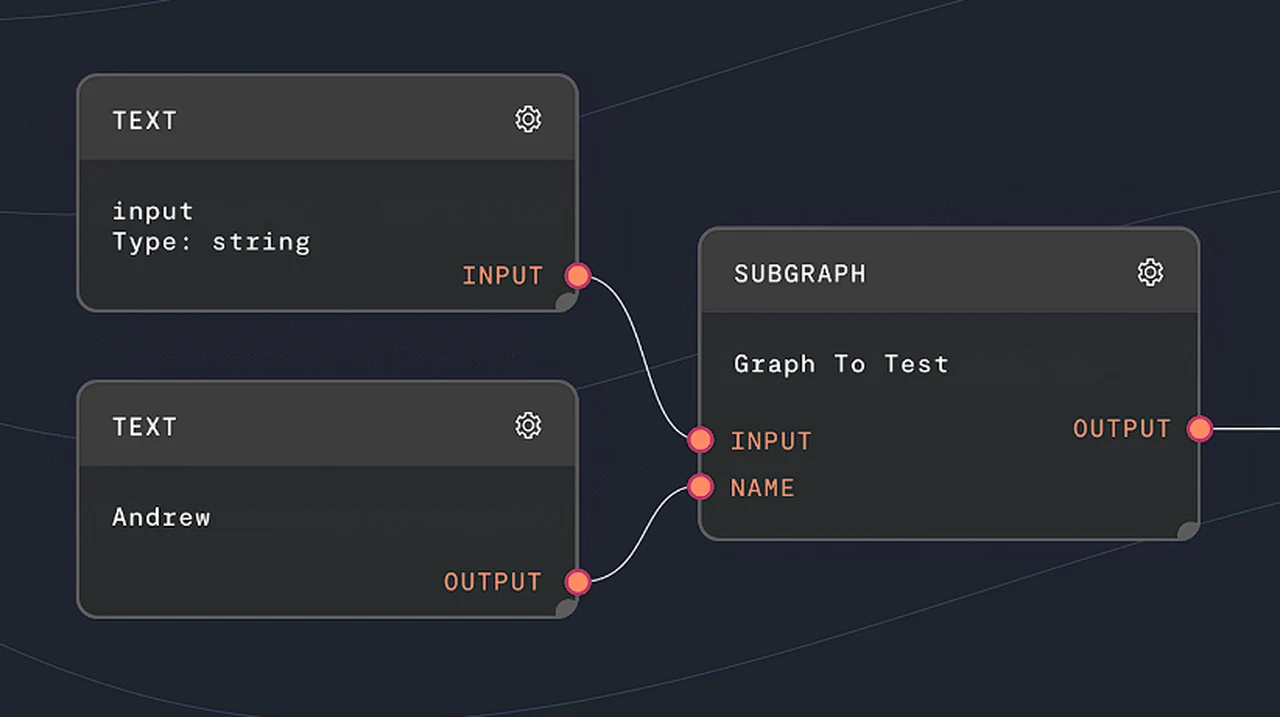

Getting started with Rivet is simple. Installation is quick, and once Rivet is running on your computer you can start building an AI application by dragging components onto the canvas and connecting them where they need to go.

Build AI apps visually with no-code


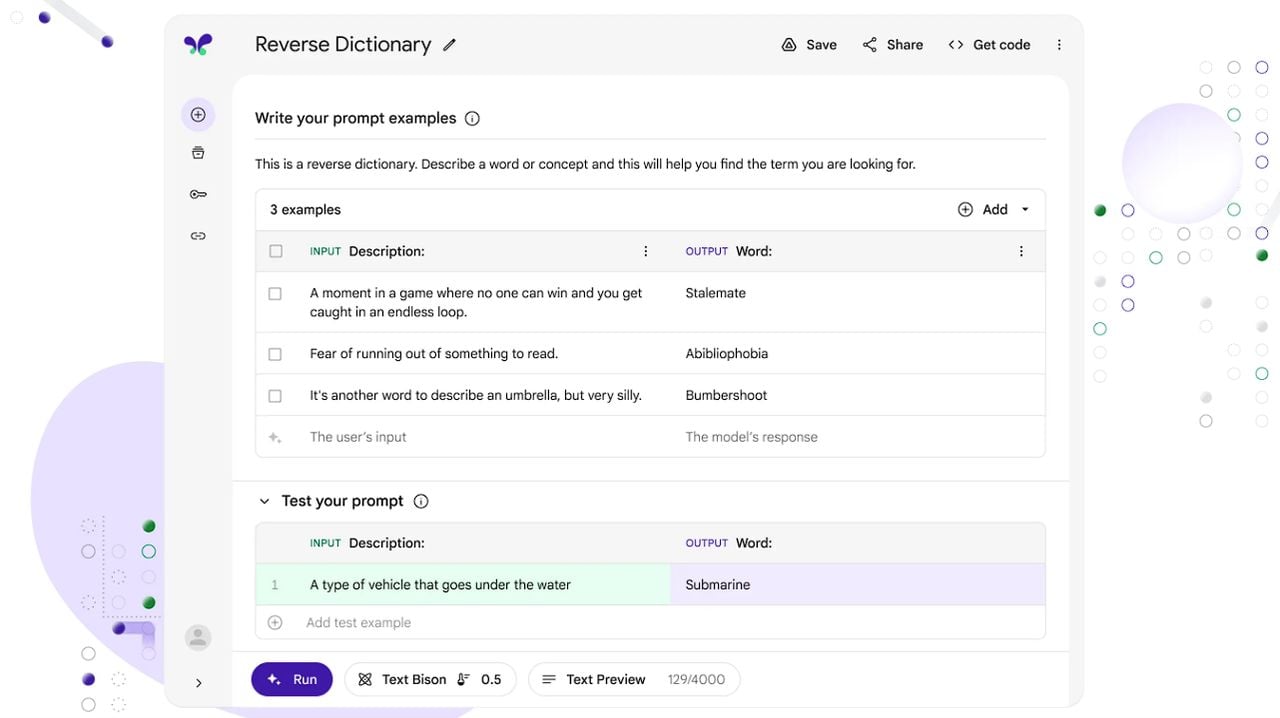

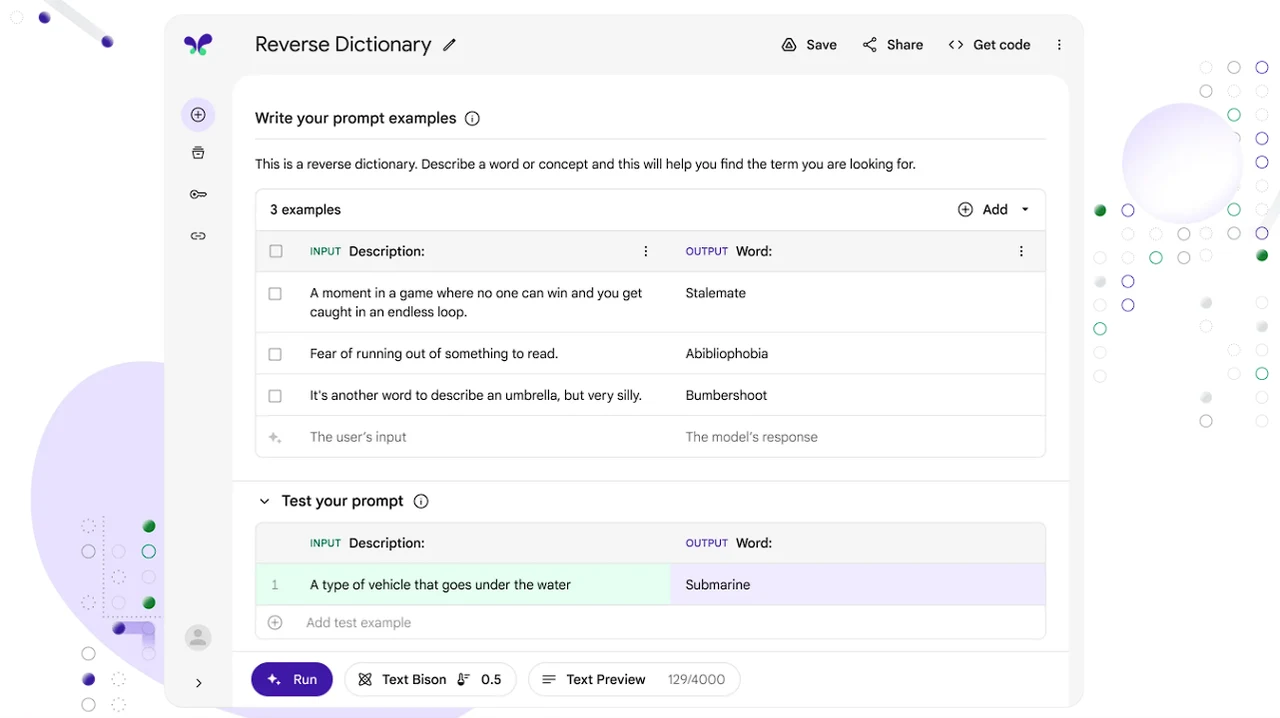

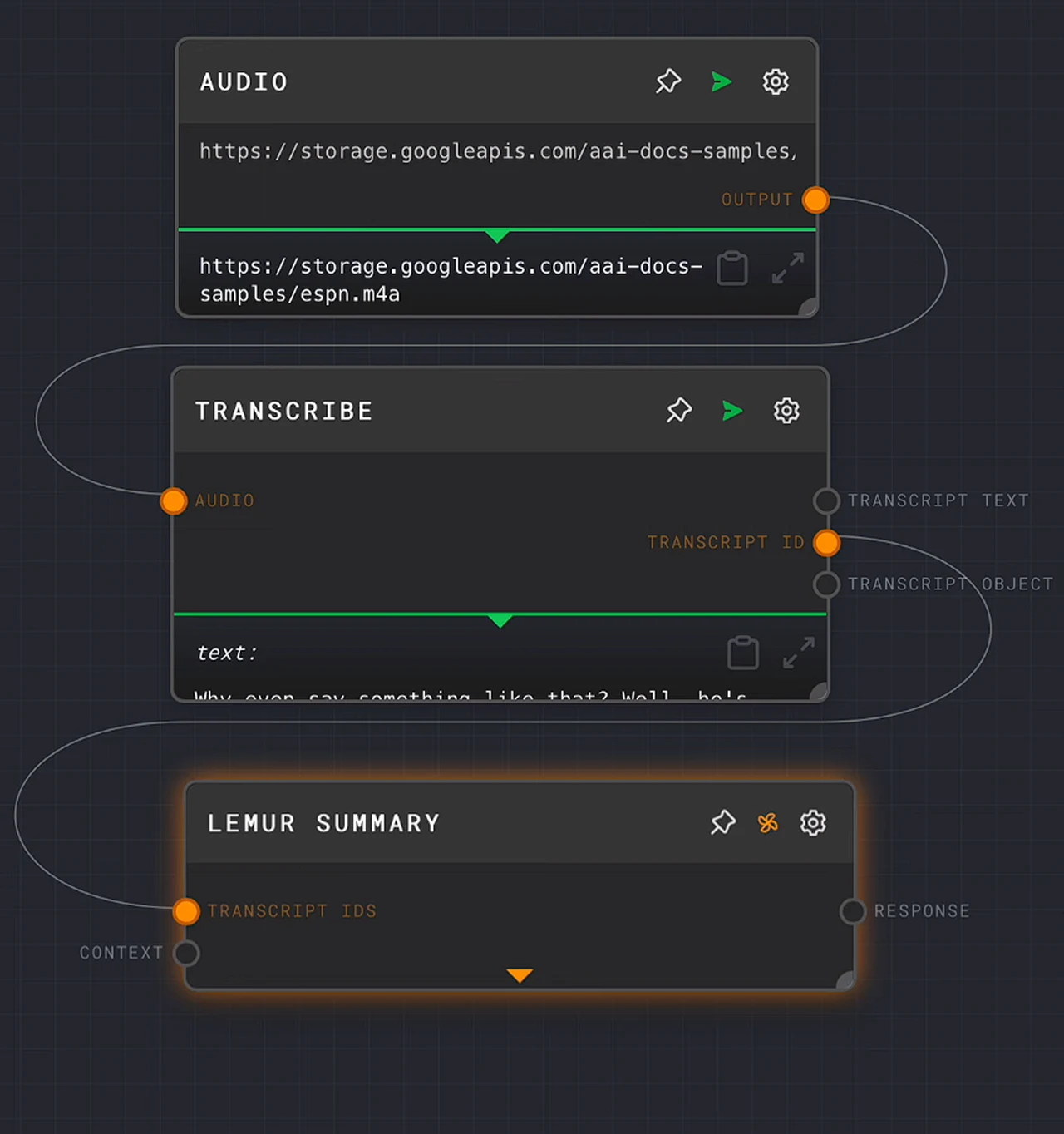

One of Rivet’s key features is its ability to integrate with other services through plugins, such as AssemblyAI’s. This integration can significantly extend what your application can do, for example by adding audio transcription and interactive Q&A. To use these services, you need an API key from the AssemblyAI website; the key authenticates the requests Rivet’s plugin sends to AssemblyAI.
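Rivet’s plugin makes the API calls for you, but it can help to see what the key is actually used for. The TypeScript sketch below is independent of Rivet and shows how a request to AssemblyAI’s v2 transcription endpoint (`POST https://api.assemblyai.com/v2/transcript`) carries the key in its `authorization` header. The helper `buildTranscriptRequest` and the `HttpRequest` shape are hypothetical names used for illustration; the function only builds the request and does not send it.

```typescript
// Hypothetical shape of an outgoing HTTP request (illustration only).
interface HttpRequest {
  method: "POST";
  url: string;
  headers: Record<string, string>;
  body: string;
}

// AssemblyAI's v2 endpoint for submitting a transcription job.
const ASSEMBLYAI_TRANSCRIPT_URL = "https://api.assemblyai.com/v2/transcript";

// Hypothetical helper: builds (but does not send) a transcription request.
function buildTranscriptRequest(apiKey: string, audioUrl: string): HttpRequest {
  if (apiKey.trim() === "") {
    // Fail early instead of sending a request that will be rejected.
    throw new Error("Missing AssemblyAI API key");
  }
  return {
    method: "POST",
    url: ASSEMBLYAI_TRANSCRIPT_URL,
    headers: {
      // AssemblyAI expects the API key itself in the authorization header.
      authorization: apiKey,
      "content-type": "application/json",
    },
    // The job is described by the URL of a publicly reachable audio file.
    body: JSON.stringify({ audio_url: audioUrl }),
  };
}
```

Keeping the key out of the source (for example, reading it from an environment variable and passing it in) avoids publishing it by accident when a project is shared.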

Rivet’s environment is modular: you can create separate graphs for different functions within your application. For example, one graph might handle audio transcription while another manages the Q&A. Keeping each function in its own graph makes the application easier to change and lets you add features without reworking the graphs you already have.
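One way to picture this modular approach is to treat each graph as a function with named inputs and outputs, with a top-level graph that calls the smaller ones by name. The TypeScript sketch below is a hypothetical model, not Rivet’s internal API: `GraphRegistry`, `makeMainGraph`, and the graph names `transcribe` and `qa` are assumptions made for illustration.

```typescript
// Hypothetical model: a graph maps named string inputs to named string outputs.
type Values = Record<string, string>;
type Graph = (inputs: Values) => Promise<Values>;

// Holds graphs by name so one graph can call another.
class GraphRegistry {
  private graphs = new Map<string, Graph>();

  register(name: string, graph: Graph): void {
    this.graphs.set(name, graph);
  }

  async run(name: string, inputs: Values): Promise<Values> {
    const graph = this.graphs.get(name);
    if (!graph) throw new Error(`Unknown graph: ${name}`);
    return graph(inputs);
  }
}

// Top-level graph: feeds the transcription graph's output into the Q&A graph.
function makeMainGraph(registry: GraphRegistry): Graph {
  return async ({ audio_url, question }) => {
    const { transcript } = await registry.run("transcribe", { audio_url });
    return registry.run("qa", { transcript, question });
  };
}
```

Because the main graph refers to the other two only by name, either one can be replaced (a different transcription step, say) without touching the other.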

In practice, the workflow is short. You supply the URL of an audio file, and the AssemblyAI plugin transcribes it. You then ask a question about the recording, and Rivet returns the answer as text. The example shows how little wiring it takes to bring a service like AssemblyAI into an application.
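Transcription is not instantaneous. When you call AssemblyAI directly, you submit a job and then check its status (with `GET https://api.assemblyai.com/v2/transcript/{id}`) until it reports `completed` or `error`; inside Rivet, the plugin should take care of this for you. The TypeScript sketch below shows the polling pattern under stated assumptions: the status check is injected as a hypothetical `StatusFetcher` function, and the attempt limit and delay are arbitrary defaults.

```typescript
// Job states reported by AssemblyAI's transcript endpoint.
type TranscriptStatus = "queued" | "processing" | "completed" | "error";

interface TranscriptResult {
  status: TranscriptStatus;
  text?: string;
  error?: string;
}

// Hypothetical: returns the job's current state (e.g. by calling the GET endpoint).
type StatusFetcher = () => Promise<TranscriptResult>;

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Polls until the transcript is ready, fails, or the attempt limit is reached.
async function waitForTranscript(
  fetchStatus: StatusFetcher,
  maxAttempts = 60, // assumption: arbitrary limit
  delayMs = 3000, // assumption: arbitrary delay between checks
): Promise<string> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await fetchStatus();
    if (result.status === "completed") return result.text ?? "";
    if (result.status === "error") {
      throw new Error(`Transcription failed: ${result.error ?? "unknown error"}`);
    }
    // Still queued or processing: wait before checking again (skip after the last try).
    if (attempt < maxAttempts) await sleep(delayMs);
  }
  throw new Error(`Transcript not ready after ${maxAttempts} attempts`);
}
```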

Prompt engineering is an important part of getting useful AI responses. It means wording your prompts and questions so that the model returns the most accurate and relevant answers. Getting this right is key to making your AI prototypes perform well and deliver the results you’re looking for.
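As an illustration of prompt engineering for the transcript Q&A example, the hypothetical template below (not a prompt taken from Rivet or AssemblyAI) applies three common techniques: it restricts the model to the transcript, delimits the transcript so it is not mistaken for instructions, and says what to answer when the information is missing. In Rivet, text like this could live in a text or prompt node, with the transcript and question filled in from the graph.

```typescript
// Hypothetical prompt template for answering questions about a transcript.
// The wording is one reasonable choice, not a prescribed prompt.
function buildQaPrompt(transcript: string, question: string): string {
  return [
    "You are answering questions about an audio recording.",
    // Ground the answer in the transcript and give an explicit fallback.
    "Use only the transcript below. If the answer is not in the transcript, reply \"I don't know.\"",
    "",
    "Transcript:",
    // Triple quotes separate the transcript from the instructions.
    '"""',
    transcript.trim(),
    '"""',
    "",
    `Question: ${question.trim()}`,
    "Answer in one or two sentences.",
  ].join("\n");
}
```

Small changes to wording like this (adding the fallback reply, or limiting answer length) are exactly what prompt engineering iterates on.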

When you want to go further, you can integrate your Rivet graphs into larger projects using Node.js. Node.js, a widely used JavaScript runtime, lets you run the graphs you built in Rivet inside web applications and other software, which greatly expands what your AI prototypes can be used for.
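Ironclad publishes a Node.js package, `@ironclad/rivet-node`, whose `runGraphInFile` function runs a graph from a saved Rivet project file. To keep the sketch below self-contained and runnable without that package, the runner is passed in as a hypothetical `GraphRunner` function; the graph name `Main`, the input names, and the `answer` output are assumptions about how the project is set up. A real wrapper around `runGraphInFile` may also need to convert Rivet’s typed output values into plain strings.

```typescript
// Hypothetical runner signature. In a real project this could wrap
// runGraphInFile from @ironclad/rivet-node (assumption, not shown here).
type GraphRunner = (
  graphName: string,
  inputs: Record<string, string>,
) => Promise<Record<string, unknown>>;

// Hypothetical application function, e.g. called from a web server route.
async function askAboutAudio(
  runGraph: GraphRunner,
  audioUrl: string,
  question: string,
): Promise<string> {
  // Validate input before spending time and API credits on a graph run.
  if (!/^https?:\/\//.test(audioUrl)) {
    throw new Error("audioUrl must be an http(s) URL");
  }
  const outputs = await runGraph("Main", { audio_url: audioUrl, question });
  const answer = outputs["answer"];
  if (typeof answer !== "string") {
    throw new Error("Graph did not return a string 'answer' output");
  }
  return answer;
}
```

Injecting the runner this way also lets the surrounding application be tested with a stub, without calling a model or AssemblyAI.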

Ironclad’s Rivet is a powerful and accessible platform for anyone interested in AI prototyping, including those without coding expertise. By following the steps above, from installation through prompt engineering, you can build and refine features such as audio transcription and interactive Q&A.

Combining Rivet’s graphical development environment with integrations such as AssemblyAI and Node.js makes getting started with AI prototyping fast and practical. Tools like Rivet lower the barrier to entry for AI, opening up room for innovation and creativity in a field that continues to grow and shape the world in countless ways.

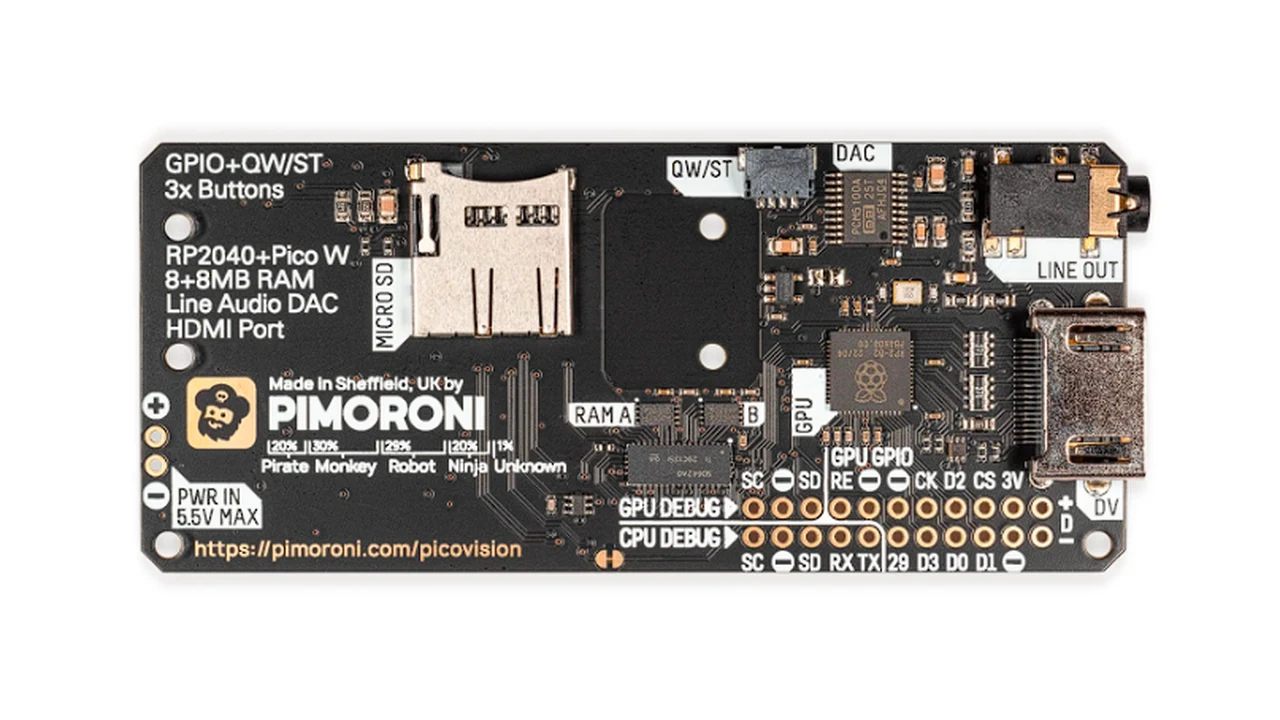

Image Credit: Ironclad


Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, timeswonderful may earn an affiliate commission. Learn about our Disclosure Policy.