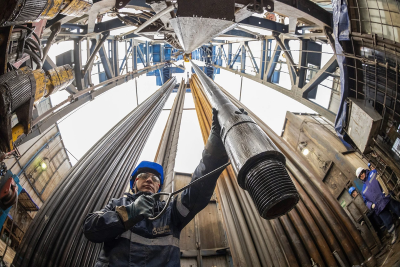

One of the world’s largest oil platforms, the North Sea’s Gullfaks C, sits on immense foundations, constructed from 246,000 cubic metres of reinforced concrete, penetrating 22 metres into the sea bed and smothering about 16,000 square metres of sea floor. The platform’s installation in 1989 was a feat of engineering. Now, Gullfaks C has exceeded its expected 30-year lifespan and is due to be decommissioned in 2036. How can this gargantuan structure, and others like it, be taken out of action in a safe, cost-effective and environmentally beneficial way? Solutions are urgently needed.

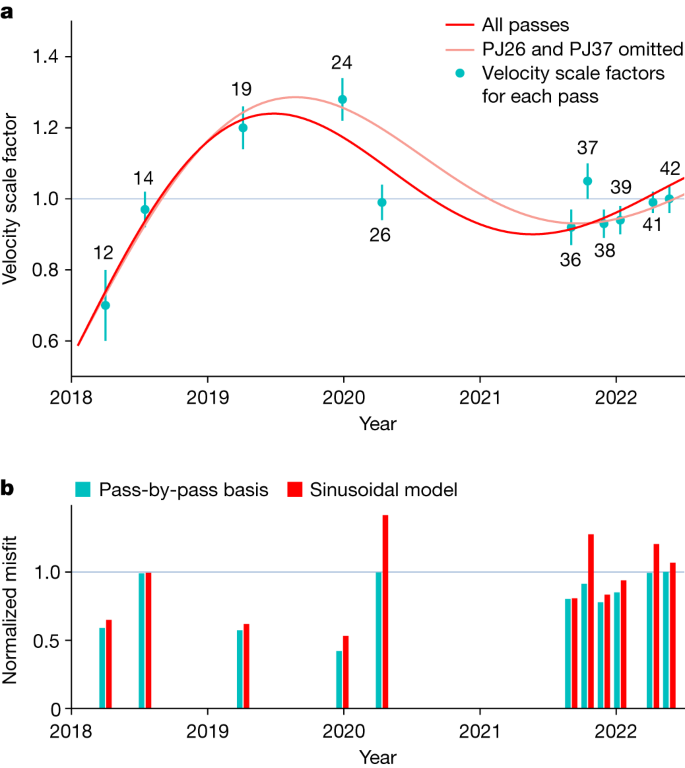

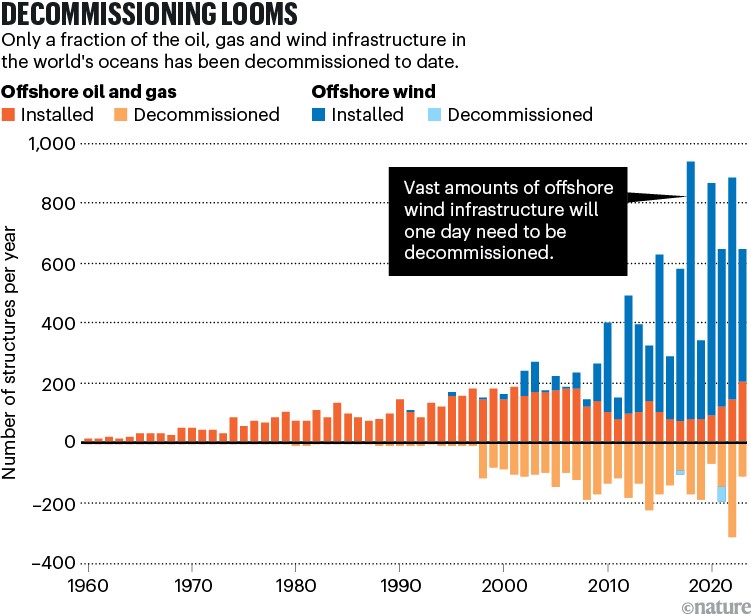

Many of the world’s 12,000 offshore oil and gas platforms are nearing the end of their lives (see ‘Decommissioning looms’). The average age of the more than 1,500 platforms and installations in the North Sea is 25 years. In the Gulf of Mexico, around 1,500 platforms are more than 30 years old. In the Asia–Pacific region, more than 2,500 platforms will need to be decommissioned in the next 10 years. And the problem won’t go away. Even when the world transitions to greener energy, offshore wind turbines and wave-energy devices will, one day, also need to be taken out of service.

Source: S. Gourvenec et al. Renew. Sustain. Energy Rev. 154, 111794 (2022).

There are several ways to handle platforms that have reached the end of their lives. For example, they can be completely or partly removed from the ocean. They can be toppled and left on the sea floor. They can be moved elsewhere, or abandoned in the deep sea. But there’s little empirical evidence about the environmental and societal costs and benefits of each course of action — how it will alter marine ecosystems, say, or the risk of pollution associated with moving or abandoning oil-containing structures.

So far, politics, rather than science, has been the driving force for decisions about how to decommission these structures. It was public opposition to the disposal of a floating oil-storage platform called Brent Spar in the North Sea that led to strict legislation being imposed in the northeast Atlantic in the 1990s. Now, there is a legal requirement to completely remove decommissioned energy infrastructure from the ocean in this region. By contrast, in the Gulf of Mexico, the idea of converting defunct rigs into artificial reefs holds sway despite a lack of evidence for environmental benefits, because the reefs are popular sites for recreational fishing.

A review of decommissioning strategies is urgently needed to ensure that governments make scientifically motivated decisions about the fate of oil rigs in their regions, rather than sleepwalking into default strategies that could harm the environment. Here, we outline a framework through which local governments can rigorously assess the best way to decommission offshore rigs. We argue that the legislation for the northeast Atlantic region should be rewritten to allow more decommissioning options. And we propose that similar assessments should inform the decommissioning of current and future offshore wind infrastructure.

Challenges of removing rigs

For the countries around the northeast Atlantic, leaving disused oil platforms in place is an emotive issue as well as a legal one. Environmental campaigners, much of the public and some scientists consider anything other than the complete removal of these structures to be littering by energy companies1. But whether rig removal is the best approach — environmentally or societally — to decommissioning is questionable.

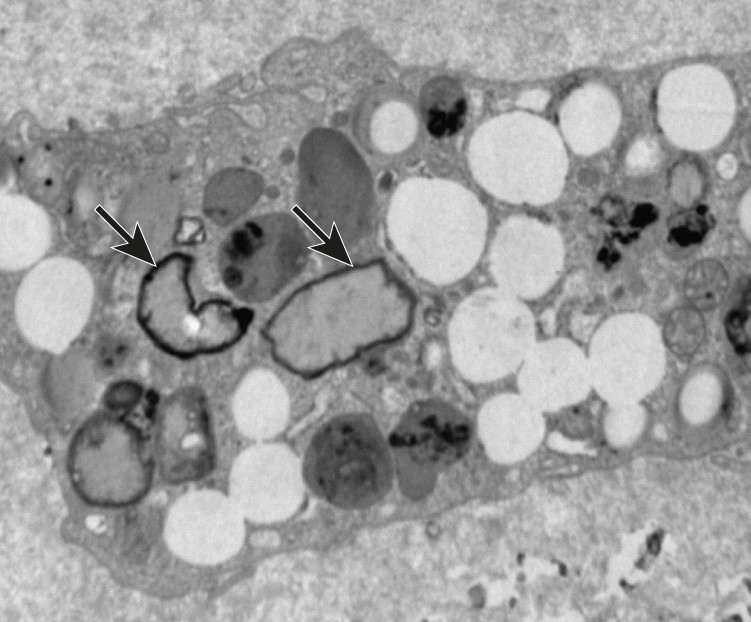

There has been little research into the environmental impacts of removing platforms, largely owing to lack of foresight2. But oil and gas rigs, both during and after their operation, can provide habitats for marine life such as sponges, corals, fish, seals and whales3. Organisms such as mussels that attach to structures can provide food for fish — and they might be lost if rigs are removed4. Structures left in place are a navigational hazard for vessels, making them de facto marine protected areas — regions in which human activities are restricted5. Another concern is that harmful heavy metals in sea-floor sediments around platforms might become resuspended in the ocean when foundations are removed6.

Removing rigs is also a formidable logistical challenge, because of their size. The topside of a platform, which is home to the facilities for oil or gas production, can weigh more than 40,000 tonnes. And the underwater substructure — the platform’s foundation and the surrounding fuel-storage facilities — can be even heavier. In the North Sea, substructures are typically made of concrete to withstand the harsh environmental conditions, and can displace more than one million tonnes of water. In regions such as the Gulf of Mexico, where conditions are less extreme, substructures can be lighter, built from steel tubes. But they can still weigh more than 45,000 tonnes, and are anchored to the sea floor using two-metre-wide concrete pilings.

Huge forces are required to break these massive structures free from the ocean floor. Some specialists even suggest that the removal of the heaviest platforms is currently technically impossible.

And the costs are astronomical. The cost to decommission and remove all oil and gas infrastructure from UK territorial waters alone is estimated at £40 billion (US$51 billion). A conservative estimate suggests that the global decommissioning cost for all existing oil and gas infrastructure could be several trillion dollars.

Mixed evidence for reefing

In the United States, attitudes to decommissioning are different. A common approach is to remove the topside, then abandon part or all of the substructure in such a way that it doesn’t pose a hazard to marine vessels. The abandoned structures can be used for water sports such as diving and recreational fishing.

This approach, known as ‘rigs-to-reefs’, was pioneered in the Gulf of Mexico in the 1980s. Since its launch, the programme has repurposed around 600 rigs (10% of all the platforms built in the Gulf), and has been adopted in Brunei, Malaysia and Thailand.

Converting offshore platforms into artificial reefs is reported to produce almost seven times less air-polluting emissions than complete rig removal7, and to cost 50% less. Because the structures provide habitats for marine life5, proponents argue that rigs increase the biomass in the ocean8. In the Gulf of California, for instance, increases in the number of fish, such as endangered cowcod (Sebastes levis) and other commercially valuable rockfish, have been reported in the waters around oil platforms6.

But there is limited evidence that these underwater structures actually increase biomass9. Opponents argue that the platforms simply attract fish from elsewhere10 and leave harmful chemicals in the ocean11. And because the hard surface of rigs is different from the soft sediments of the sea floor, such structures attract species that would not normally live in the area, which can destabilize marine ecosystems12.

Evidence from experts

With little consensus about whether complete removal, reefing or another strategy is the best option for decommissioning these structures, policies cannot evolve. More empirical evidence about the environmental and societal costs and benefits of the various options is needed.

To begin to address this gap, we gathered the opinions of 39 academic and government specialists in the field across 4 continents13,14. We asked how 12 decommissioning options, ranging from the complete removal of single structures to the abandonment of all structures, might impact marine life and contribute to international high-level environmental targets. To supplement the scant scientific evidence available, our panel of specialists used local knowledge, professional expertise and industry data.

The substructures of oil rigs can provide habitats for a wealth of marine life. Credit: Brent Durand/Getty

The panel assessed the pressures that structures exert on their environment — factors such as chemical contamination and change in food availability for marine life — and how those pressures affect marine ecosystems, for instance by altering biodiversity, animal behaviour or pollution levels. Nearly all pressures exerted by leaving rigs in place were considered bad for the environment. But some rigs produced effects that were considered beneficial for humans — creating habitats for commercially valuable species, for instance. Nonetheless, most of the panel preferred, on balance, to see infrastructure that has come to the end of its life be removed from the oceans.

But the panel also found that abandoning or reefing structures was the best way to help governments meet 37 global environmental targets listed in 3 international treaties. This might seem counter-intuitive, but many of the environmental targets are written from a ‘what does the environment do for humans’ perspective, rather than being focused on the environment alone.

Importantly, the panel noted that not all ecosystems respond in the same way to the presence of rig infrastructure. The changes to marine life caused by leaving rigs intact in the North Sea will differ from those brought about by abandoning rigs off the coast of Thailand. Whether these changes are beneficial enough to warrant alternatives to removal depends on the priorities of stakeholders in the region — the desire to protect cowcod is a strong priority in the United States, for instance, whereas in the North Sea, a more important consideration is ensuring access to fishing grounds. Therefore, rig decommissioning should be undertaken on a local, case-by-case basis, rather than using a one-size-fits-all approach.

Legal hurdles in the northeast Atlantic

If governments are to consider a range of decommissioning options in the northeast Atlantic, policy change is needed.

Current legislation is multi-layered. At the global level, the United Nations Convention on the Law of the Sea (UNCLOS; 1982) states that no unused structures can present navigational hazards or cause damage to flora and fauna. Thus, reefing is allowed, provided that those conditions are met.

But the northeast Atlantic is subject to stricter rules, under the OSPAR Convention. Named after its original conventions in Oslo and Paris, OSPAR is a legally binding agreement between 15 governments and the European Union on how best to protect marine life in the region (see go.nature.com/3stx7gj) that was signed in the face of public opposition to sinking Brent Spar. The convention includes Decision 98/3, which stipulates complete removal of oil and gas infrastructure as the default legal position, returning the sea floor to its original state. This legislation is designed to stop the offshore energy industry from dumping installations en masse.

Under OSPAR Decision 98/3, leaving rigs as reefs is prohibited. Exceptions to complete removal (derogations) are occasionally allowed, but only if there are exceptional concerns related to safety, environmental or societal harms, cost or technical feasibility. Of the 170 structures that have been decommissioned in the northeast Atlantic so far, just 10 have been granted derogations. In those cases, the concrete foundations of the platforms have been left in place, but the top part of the substructures removed.
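To put the derogation figures in proportion, the share can be worked out directly from the two numbers quoted above. The short sketch below uses only those two figures; the variable names are illustrative.

```python
# Figures quoted in the text for the northeast Atlantic.
decommissioned = 170   # structures decommissioned so far
derogations = 10       # structures granted an exception to complete removal

# Share of decommissioned structures that received a derogation.
derogation_share = derogations / decommissioned
fully_removed = decommissioned - derogations

print(f"Derogations: {derogation_share:.1%} of decommissioned structures")
print(f"Completely removed: {fully_removed} structures")
```

On these figures, roughly 1 in 17 decommissioned structures has been granted a derogation.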

Enable local decision-making

The flexibility of UNCLOS offers a more pragmatic approach to decommissioning than the stringent removal policy stipulated by OSPAR.

We propose that although the OSPAR Decision 98/3 baseline position should remain the same — complete removal as the default — the derogation process should change to allow alternative options such as reefing, if a net benefit to the environment and society can be achieved. Whereas currently there must be an outstanding reason to approve a derogation under OSPAR, the new process would allow smaller benefits and harms to be weighed up.

The burden should be placed on industry officials to demonstrate clearly why an alternative to complete removal should be considered not as littering, but as contributing to the conservation of marine ecosystems on the basis of the best available scientific evidence. The same framework that we used to study global-scale evidence in our specialist elicitation can be used to gather and assess local evidence for the pros and cons of each decommissioning option. Expert panels should comprise not only scientists, but also members with legal, environmental, societal, cultural and economic perspectives. Regions outside the northeast Atlantic should follow the same rigorous assessment process, regardless of whether they are already legally allowed to consider alternative options.
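As a rough illustration of how such a weighing-up might be recorded, the sketch below compares each alternative against the complete-removal baseline using weighted scores. It is not the elicitation method used by the panel: the class name, the scoring scale, the weights and every number are hypothetical placeholders, and a real assessment would draw its criteria and evidence from the local expert panel.

```python
from dataclasses import dataclass

@dataclass
class Evidence:
    """One piece of assessed evidence for a decommissioning option (hypothetical schema)."""
    criterion: str
    score: int    # assumed scale: -2 (strong harm) to +2 (strong benefit)
    weight: int   # assumed importance assigned by local stakeholders

def net_benefit(evidence):
    """Sum of weighted scores; positive means benefits outweigh harms."""
    return sum(item.score * item.weight for item in evidence)

def margins_over_baseline(options, baseline="complete removal"):
    """Net benefit of each alternative minus that of the default option."""
    base = net_benefit(options[baseline])
    return {name: net_benefit(ev) - base
            for name, ev in options.items() if name != baseline}

# Placeholder values for illustration only; they are not findings.
options = {
    "complete removal": [
        Evidence("loss of established habitat", -2, 3),
        Evidence("resuspension of contaminated sediment", -1, 2),
        Evidence("restored access to fishing grounds", 2, 2),
    ],
    "reefing": [
        Evidence("habitat retained", 1, 3),
        Evidence("chemicals left in the ocean", -2, 2),
        Evidence("restricted access to fishing grounds", -1, 2),
    ],
}

margins = margins_over_baseline(options)
for name, margin in margins.items():
    verdict = "could be considered" if margin > 0 else "default to complete removal"
    print(f"{name}: margin {margin:+d} -> {verdict}")
```

Keeping complete removal as the reference point mirrors the proposal that it remain the default, with an alternative considered only when it shows a net gain over that baseline.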

For successful change, governments and legislators must consider two key factors.

Get buy-in from stakeholders

OSPAR’s 16 signatories are responsible for changing its legislation but it will be essential that the more flexible approach gets approval from OSPAR’s 22 intergovernmental and 39 non-governmental observer organizations. These observers, which include Greenpeace, actively contribute to OSPAR’s work and policy development, and help to implement its convention. Public opinion in turn will be shaped by non-governmental organizations15 — Greenpeace was instrumental in raising public awareness about the plan to sink Brent Spar in the North Sea, for instance.

Transparency about the decision-making process will be key to building confidence among sceptical observers. Oil and gas companies must maintain an open dialogue with relevant government bodies about plans for decommissioning. In turn, governments must clarify what standards they will require to consider an alternative to removal. This includes specifying what scientific evidence should be collated, and by whom. All evidence about the pros and cons of each decommissioning option should be made readily available to all.

Oil and gas companies should identify and involve a wide cross-section of stakeholders in decision-making from the earliest stages of planning. This includes regulators, statutory consultees, trade unions, non-governmental organizations, business groups, local councils, community groups and academics, to ensure that diverse views are considered.

Conflict between stakeholders, as occurred with Brent Spar, should be anticipated. But this can be overcome through frameworks similar to those between trade unions and employers that help to establish dialogue between the parties15.

The same principle of transparency should also be applied to other regions. If rigorous local assessment reveals reefing not to be a good option for some rigs in the Gulf of Mexico, for instance, it will be important to get stakeholder buy-in for a change from the status quo.

Future-proof designs

OSPAR and UNCLOS legislation applies not only to oil and gas platforms but also to renewable-energy infrastructure. To avoid a repeat of the challenges that are currently being faced by the oil and gas industry, decommissioning strategies for renewables must be established before they are built, not as an afterthought. Structures must be designed to be easily removed in an inexpensive way. Offshore renewable-energy infrastructure should put fewer pressures on the environment and society — for instance by being designed so that it can be recycled, reused or repurposed.

If developers fail to design infrastructure that can be removed in an environmentally sound and cost-effective way, governments should require companies to ensure that their structures provide added environmental and societal benefits. This could be achieved retrospectively for existing infrastructure, taking inspiration from biodiversity-boosting panels that can be fitted to the side of concrete coastal defences to create marine habitats (see go.nature.com/3v99bsb).

Governments should also require the energy industry to invest in research and development of greener designs. On land, constraints are now being placed on building developments to protect biodiversity — bricks that provide habitats for bees must be part of new buildings in Brighton, UK, for instance (see go.nature.com/3pcnfua). Structures in the sea should not be treated differently.

If it is designed properly, the marine infrastructure that is needed as the world moves towards renewable energy could benefit the environment — both during and after its operational life. Without this investment, the world could find itself facing a decommissioning crisis once again, as the infrastructure for renewables ages.
