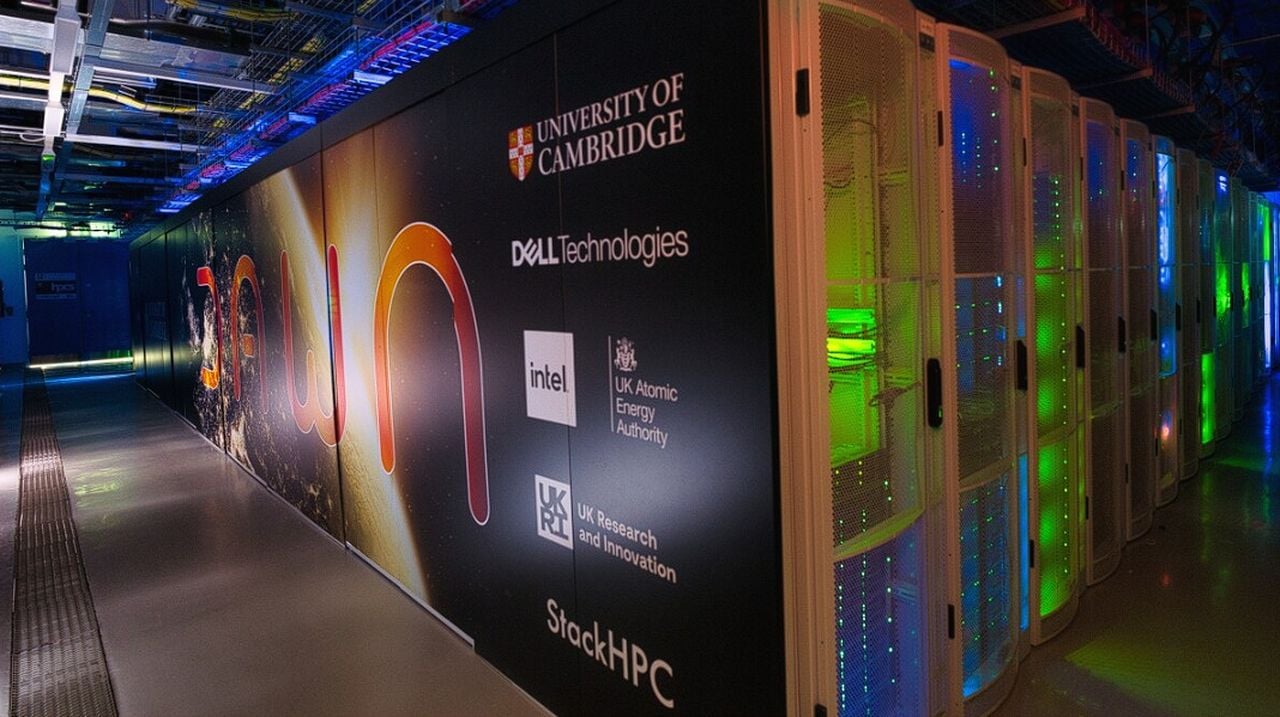

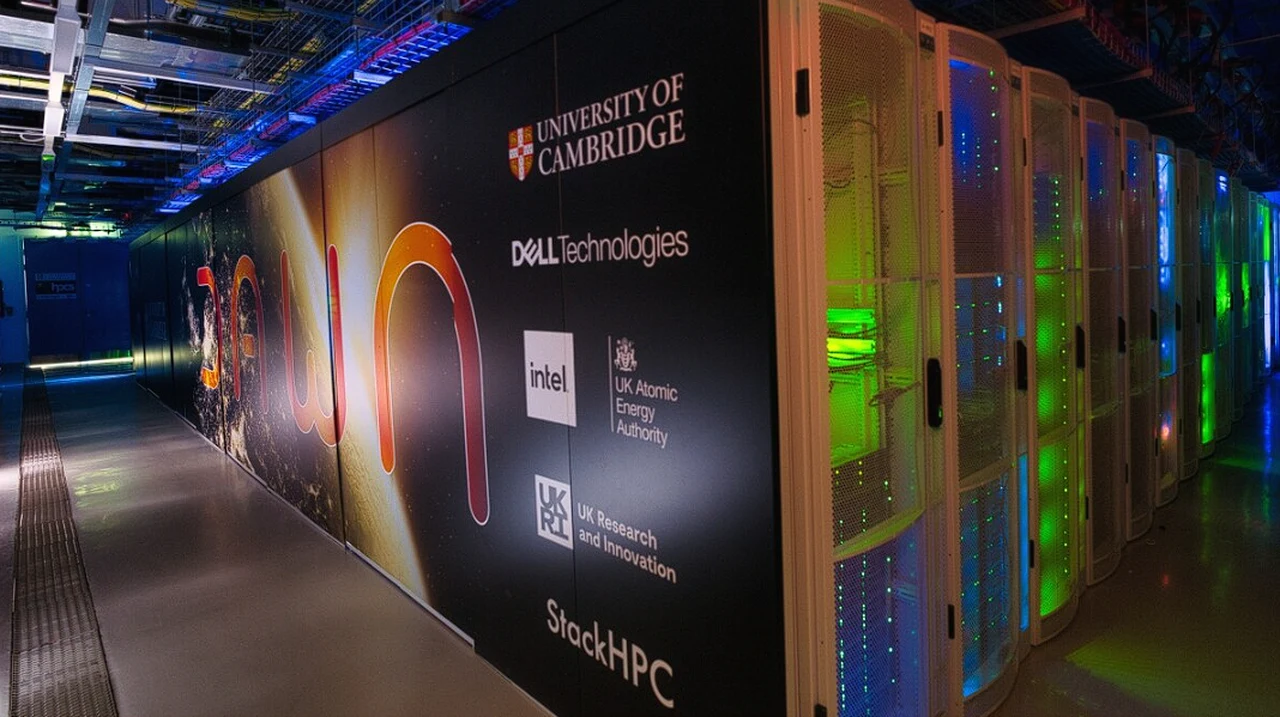

In a major milestone for the UK’s technological capabilities, Dell Technologies, Intel, and the University of Cambridge have announced the deployment of the Dawn Phase 1 supercomputer, currently the fastest AI supercomputer in the country. The machine combines artificial intelligence (AI) with high-performance computing (HPC). It is expected to help address global challenges and strengthen the UK’s position as a technology leader.

Dawn Phase 1 is more than just a supercomputer. It is a key component of the recently launched UK AI Research Resource (AIRR), a national facility set up to investigate the feasibility of AI-related systems and architectures. Its deployment is a significant technological achievement in its own right. It also brings the UK closer to exascale computing, the threshold of a quintillion floating point operations per second.

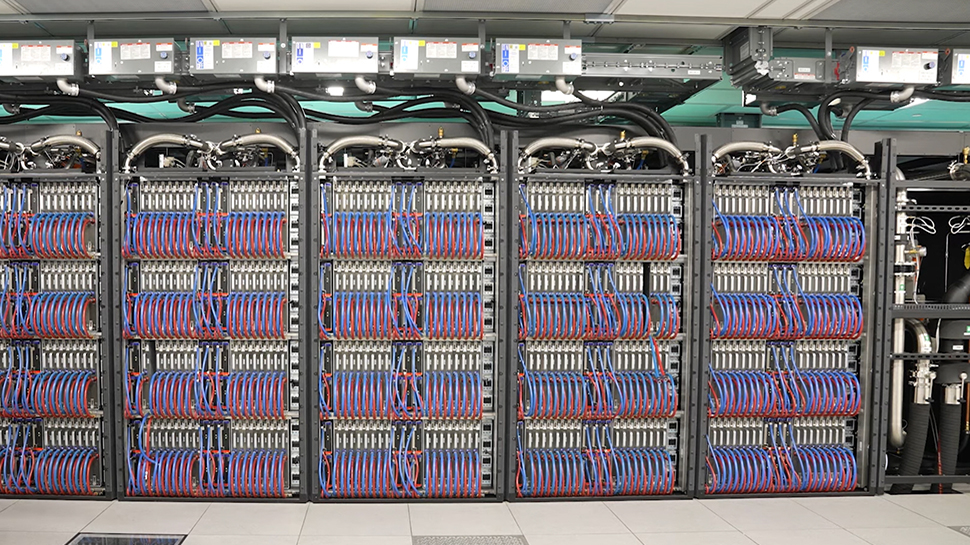

The supercomputer is now operational at the Cambridge Open Zettascale Lab. It runs on Dell PowerEdge XE9640 servers fitted with Intel Data Center GPU Max Series accelerators. This hardware and the system’s liquid cooling make it well suited to AI and HPC workloads.

The deployment of Dawn Phase 1 is the result of a strategic co-design partnership between Dell Technologies, Intel, the University of Cambridge, and UK Research and Innovation (UKRI). The collaboration shows how partnerships and investment in technology can support the UK’s potential for growth in AI.

One of the most notable aspects of Dawn Phase 1 is the range of sectors it could serve. The supercomputer is expected to support large workloads in academic research and industry, including healthcare, engineering, green fusion energy, climate modelling, and frontier science.

Looking ahead, a Phase 2 supercomputer is planned for 2024. It is expected to deliver ten times the performance of Dawn Phase 1 and to further strengthen the UK’s capabilities in AI and HPC.


Alongside the upcoming Phase 2, Dawn Phase 1 will be combined with the Isambard-AI supercomputer to form the AIRR. The resulting national facility is intended to help researchers make the most of AI.

Dawn Phase 1 is built on liquid-cooled Dell PowerEdge XE9640 servers. Each server combines two 4th Gen Intel Xeon Scalable processors with four Intel Data Center GPU Max accelerators. The hardware is paired with a cloud supercomputing software environment, optimised for AI and simulation, provided by Scientific OpenStack from UK SME StackHPC.

Technical details and performance figures for Dawn Phase 1 will be released in mid-November at the Supercomputing 23 (SC23) conference in Denver, Colorado. Even before those numbers are published, the system marks a significant step forward for the UK’s AI capabilities. It has the potential to drive change across a range of sectors and keep the UK at the forefront of technological innovation.

Dawn Phase 1 Supercomputer 2023

The Dawn Supercomputer at the University of Cambridge is distinct from the earlier Dawn system at Lawrence Livermore National Laboratory. The Cambridge Dawn is part of the Cambridge Service for Data Driven Discovery (CSD3), a state-of-the-art data analytics supercomputing facility that supports computational and data-intensive research across many disciplines.

CSD3 is notable for its focus on supporting research that requires handling and analysis of large datasets, high-performance computing (HPC), and machine learning. The facility is designed to be highly flexible and scalable, meeting the needs of different research communities. It’s a collaborative project, involving not just the University of Cambridge but also partners from the wider research community.

Within CSD3, Dawn is aimed at high-performance workloads, particularly data analytics, artificial intelligence, and machine learning. Its architecture combines traditional CPU-based computing with GPU acceleration. This makes it suitable for a variety of tasks, including scientific simulation, data processing, and training complex machine learning models.

The integration of the Dawn Supercomputer into CSD3 represents the University of Cambridge’s commitment to advancing research in data-intensive fields. It provides researchers with the necessary computational tools to tackle some of the most challenging and important questions in science and technology today.

An earlier supercomputer also called Dawn was hosted at Lawrence Livermore National Laboratory (LLNL) in the United States. Dawn was an IBM Blue Gene/P system. It served as a precursor and testing platform for the more advanced Sequoia supercomputer, a Blue Gene/Q machine. Dawn was operational in the late 2000s and early 2010s.
