Meta CEO Mark Zuckerberg has stepped into the spotlight to discuss the company’s latest endeavors and the competitive landscape of virtual reality and augmented reality following the release of Apple’s Vision Pro. With the Vision Pro now a main competitor to Meta’s Quest 3, Zuckerberg explains in an interview with the Morning Brew Daily team why he believes the Quest 3 is a strong rival that offers exceptional value and quality.

Zuckerberg’s vision for Meta is not limited to outdoing rivals like Apple. He is steering the company toward a future where computing is redefined, embracing an open and collaborative approach that differs from the closed-off systems some competitors use. This strategy is not just about Meta; it’s about fostering a spirit of cooperation across the industry to drive collective progress.

Interview with Mark Zuckerberg

The integration of advanced technologies such as artificial intelligence (AI) and neural interfaces into Meta’s future products is a key part of Zuckerberg’s strategy. Imagine wearing smart glasses or wristbands that allow you to interact with technology in a natural and intuitive way, potentially transforming how you go about your daily life and work. Zuckerberg’s direct involvement in these decisions keeps Meta at the forefront of technological innovation.

The potential for AI to reshape the job market is another area where Zuckerberg sees significant opportunities. He envisions a future where AI helps people pursue their passions more freely, changing the nature of work itself. His support for open-source AI projects reflects a commitment to making technology accessible to all and preventing any single company from dominating the field.

Looking ahead, Zuckerberg predicts that smart glasses will become the main mobile device for many people, complementing the use of headsets at home. This shift points to a major change in how we interact with technology, aiming for a more integrated and natural experience in our daily lives.

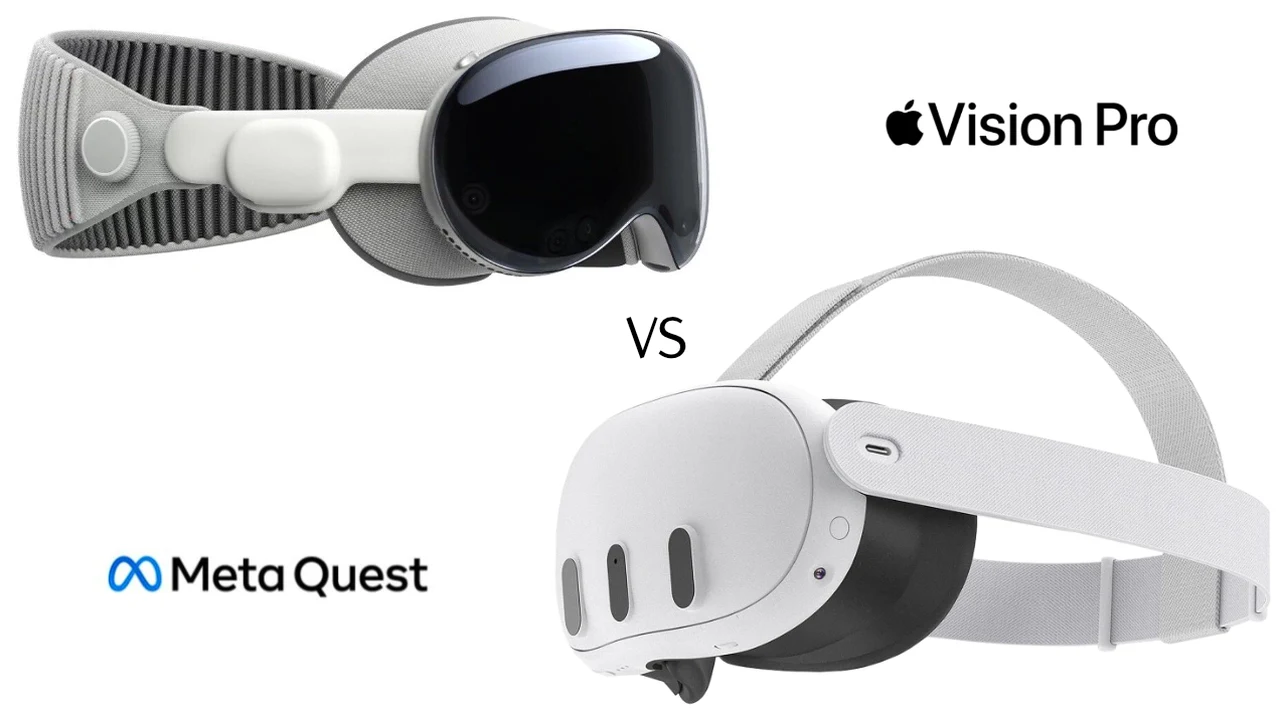

Apple Vision Pro vs Meta Quest

Comparing the Apple Vision Pro and the Meta Quest 3 involves several dimensions, as well as their impact on the virtual reality (VR) and augmented reality (AR) landscape. This quick comparison draws on details from the recent interview with Mark Zuckerberg.

Technological Specifications

Apple Vision Pro is positioned as a high-end mixed reality headset, blending AR and VR capabilities. It’s notable for its advanced display technology, offering high resolution and a wide field of view. The device integrates seamlessly with the Apple ecosystem, promising a user-friendly experience and incorporating spatial audio for immersive sound. Apple’s emphasis on privacy and data security is also a key component of its design philosophy.

Meta Quest 3, on the other hand, is primarily a VR headset with some AR capabilities through pass-through technology. It emphasizes affordability while still delivering high-quality VR experiences. The Quest 3 features a lightweight design, high-resolution displays, and a robust tracking system without the need for external sensors. Meta focuses on making VR accessible to a broader audience, with a strong emphasis on social connectivity and an open ecosystem for developers.

Market Positioning and Price

The Apple Vision Pro is targeted at the premium segment of the market, with a price point reflecting its high-end specifications and the broader Apple ecosystem integration. It’s aimed at professionals, creators, and users seeking premium mixed reality experiences. The device’s pricing reflects its positioning as a luxury product within the Apple lineup, potentially limiting its accessibility to a wider audience.

Meta Quest 3 is designed with mass market adoption in mind, priced competitively to appeal to a broad range of consumers, from gamers to educators. Meta’s pricing strategy for the Quest 3 underlines its goal to democratize VR, making it more accessible to people who are interested in VR but cautious about the investment.

Content Ecosystem

Apple’s approach with the Vision Pro is expected to leverage its strong developer relationships and ecosystem, encouraging the creation of high-quality AR and VR applications. The integration with existing Apple services and platforms could offer a seamless user experience, with a potential focus on professional applications, education, and premium entertainment.

Meta Quest 3 benefits from Meta’s established presence in the VR space, boasting a wide array of games, social experiences, and educational content. Meta has cultivated a large community of developers and content creators, ensuring a diverse and vibrant ecosystem. The emphasis is on social VR experiences and making development accessible to a wide range of creators.

Implications for Consumers and Developers

For consumers, the choice between the Apple Vision Pro and Meta Quest 3 comes down to prioritizing premium experiences and ecosystem integration versus affordability and a broad content library. Apple’s offering is likely to appeal to those already invested in its ecosystem, seeking the latest in mixed reality technology. In contrast, the Quest 3 targets a more diverse audience, emphasizing value and the social aspects of VR.

Developers face a choice between focusing on Apple’s premium, possibly more lucrative user base and the larger, more diverse audience of Meta’s platform. Apple’s tightly controlled ecosystem may offer advantages in terms of user spending and engagement, while Meta’s more open approach could allow for greater creative freedom and innovation.

Zuckerberg’s insights into Meta’s direction highlight the company’s focus on leading the charge in new technologies, the value of competition in the market, and the strategic role of AI and the metaverse in the company’s future. As the technological landscape continues to evolve, Meta’s Quest 3 virtual reality headset and other upcoming innovations are poised to redefine our connection with the digital world.
