Microwave and mmWave with high spectral purity are critical for a wide range of applications1,2,3, including metrology, navigation and spectroscopy. Owing to the superior fractional frequency stability of reference-cavity stabilized lasers when compared to electrical oscillators14, the most stable microwave sources are now achieved in optical systems by using optical frequency division4,5,6,7 (OFD). Essential to the division process is an optical frequency comb4, which coherently transfers the fractional stability of stable references at optical frequencies to the comb repetition rate at radio frequency. In the frequency division, the phase noise of the output signal is reduced by the square of the division ratio relative to that of the input signal. A phase noise reduction factor as large as 86 dB has been reported4. However, so far, the most stable microwaves derived from OFD rely on bulk or fibre-based optical references4,5,6,7, limiting the progress of applications that demand exceedingly low microwave phase noise.

Integrated photonic microwave oscillators have been studied intensively for their potential of miniaturization and mass-volume fabrication. A variety of photonic approaches have been shown to generate stable microwave and/or mmWave signals, such as direct heterodyne detection of a pair of lasers15, microcavity-based stimulated Brillouin lasers16,17 and soliton microresonator-based frequency combs18,19,20,21,22,23 (microcombs). For solid-state photonic oscillators, the fractional stability is ultimately limited by thermorefractive noise (TRN), which decreases with the increase of cavity mode volume24. Large-mode-volume integrated cavities with metre-scale length and a greater than 100 million quality (Q)-factor have been shown recently8,25 to reduce laser linewidth to Hz-level while maintaining chip footprint at centimetre-scale9,26,27. However, increasing cavity mode volume reduces the effective intracavity nonlinearity strength and increases the turn-on power for Brillouin and Kerr parametric oscillation. This trade-off poses a difficult challenge for an integrated cavity to simultaneously achieve high stability and nonlinear oscillation for microwave generation. For oscillators integrated with photonic circuits, the best phase noise reported at 10 kHz offset frequency is demonstrated in the SiN photonic platform, reaching −109 dBc Hz$^{-1}$ when the carrier frequency is scaled to 10 GHz (refs. 21,26). This is many orders of magnitude higher than that of the bulk OFD oscillators. An integrated photonic version of OFD can fundamentally resolve this trade-off, as it allows the use of two distinct integrated resonators in OFD for different purposes: a large-mode-volume resonator to provide exceptional fractional stability and a microresonator for the generation of soliton microcombs. Together, they can provide major improvements to the stability of integrated oscillators.

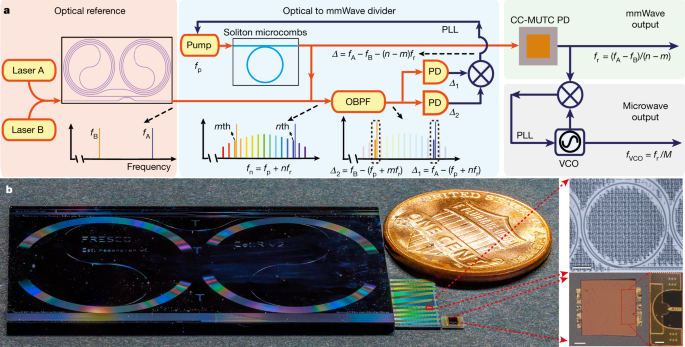

Here, we notably advance the state of the art in photonic microwave and mmWave oscillators by demonstrating integrated chip-scale OFD. Our demonstration is based on a complementary metal-oxide-semiconductor-compatible SiN integrated photonic platform28 and reaches record-low phase noise for integrated photonic-based mmWave oscillator systems. The oscillator derives its stability from a pair of commercial semiconductor lasers that are frequency stabilized to a planar-waveguide-based reference cavity9 (Fig. 1). The frequency difference of the two reference lasers is then divided down to mmWave with a two-point locking method29 using an integrated soliton microcomb10,11,12. Whereas stabilizing soliton microcombs to long-fibre-based optical references has been shown very recently30,31, its combination with integrated optical references has not been reported. The small dimension of microcavities allows soliton repetition rates to reach mmWave and THz frequencies12,30,32, which have emerging applications in 5G/6G wireless communications33, radio astronomy34 and radar2. Low-noise, high-power mmWaves are generated by photomixing the OFD soliton microcombs on a high-speed flip-chip bonded charge-compensated modified uni-travelling carrier photodiode (CC-MUTC PD)12,35. To address the challenge of phase noise characterization for high-frequency signals, a new mmWave to microwave frequency division (mmFD) method is developed to measure mmWave phase noise electrically while outputting a low-noise auxiliary microwave signal. The generated 100 GHz signal reaches a phase noise of −114 dBc Hz$^{-1}$ at 10 kHz offset frequency (equivalent to −134 dBc Hz$^{-1}$ for 10 GHz carrier frequency), which is more than two orders of magnitude better than previous SiN-based photonic microwave and mmWave oscillators21,26. The ultra-low phase noise can be maintained while pushing the mmWave output power to 9 dBm (8 mW), which is only 1 dB below the record for photonic oscillators at 100 GHz (ref. 36). Pictures of the chip-based reference cavity, soliton-generating microresonators and CC-MUTC PD are shown in Fig. 1b.
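The carrier-frequency scaling and the dBm-to-mW conversion quoted above can be checked with a short calculation. The following is a minimal sketch, assuming ideal frequency scaling in which phase noise changes by 20·log10 of the carrier-frequency ratio; the function names are illustrative and do not come from the original work.

```python
import math


def scale_phase_noise(l_dbc_hz, f_from_hz, f_to_hz):
    """Phase noise (dBc/Hz) after ideal scaling of the carrier frequency.

    Assumes noiseless division or multiplication, so the phase noise
    changes by 20*log10(f_to / f_from).
    """
    return l_dbc_hz + 20.0 * math.log10(f_to_hz / f_from_hz)


def dbm_to_mw(p_dbm):
    """Convert a power level in dBm to milliwatts."""
    return 10.0 ** (p_dbm / 10.0)


# 100 GHz result scaled to a 10 GHz carrier, and 9 dBm expressed in mW.
print(scale_phase_noise(-114.0, 100e9, 10e9))
print(round(dbm_to_mw(9.0), 2))
```

A tenfold reduction in carrier frequency corresponds to a 20 dB reduction in phase noise, which is how the 100 GHz and 10 GHz figures are related.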

The integrated optical reference in our demonstration is a thin-film SiN 4-metre-long coil cavity9. The cavity has a cross-section of 6 μm width × 80 nm height, a free-spectral-range (FSR) of roughly 50 MHz, an intrinsic quality factor of $41 \times 10^6$ ($41 \times 10^6$) and a loaded quality factor of $34 \times 10^6$ ($31 \times 10^6$) at 1,550 nm (1,600 nm). The coil cavity provides exceptional stability for reference lasers because of its large mode volume and high quality factor9. Here, two widely tuneable lasers (NewFocus Velocity TLB-6700, referred to as laser A and B) are frequency stabilized to the coil cavity through the Pound–Drever–Hall locking technique with a servo bandwidth of 90 kHz. Their wavelengths can be tuned between 1,550 nm ($f_A$ = 193.4 THz) and 1,600 nm ($f_B$ = 187.4 THz), providing up to 6 THz frequency separation for OFD. The setup schematic is shown in Fig. 2.
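The cavity numbers above imply a few derived quantities that are useful when reading the rest of the section. The sketch below is a back-of-envelope check and not part of the original analysis. It assumes a travelling-wave resonator whose round-trip length equals the 4 m coil length, uses the standard relation linewidth = frequency / loaded Q, and takes a 100 GHz comb spacing for the division-ratio estimate. All function names are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def cavity_linewidth_hz(f_optical_hz, q_loaded):
    """Loaded resonance linewidth from the definition Q = f / linewidth."""
    return f_optical_hz / q_loaded


def group_index_from_fsr(fsr_hz, round_trip_m):
    """Group index implied by FSR = c / (n_g * L) for a travelling-wave cavity."""
    return C_M_PER_S / (fsr_hz * round_trip_m)


def max_division_ratio(f_a_hz, f_b_hz, f_rep_hz):
    """Number of comb spacings that fit between the two reference lasers."""
    return round((f_a_hz - f_b_hz) / f_rep_hz)


print(cavity_linewidth_hz(193.4e12, 34e6) / 1e6)   # loaded linewidth at 1,550 nm, MHz
print(cavity_linewidth_hz(187.4e12, 31e6) / 1e6)   # loaded linewidth at 1,600 nm, MHz
print(group_index_from_fsr(50e6, 4.0))             # implied group index (FSR is approximate)
print(max_division_ratio(193.4e12, 187.4e12, 100e9))
```

Because the FSR is only quoted as roughly 50 MHz, the implied group index should be read as an order-of-magnitude consistency check.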

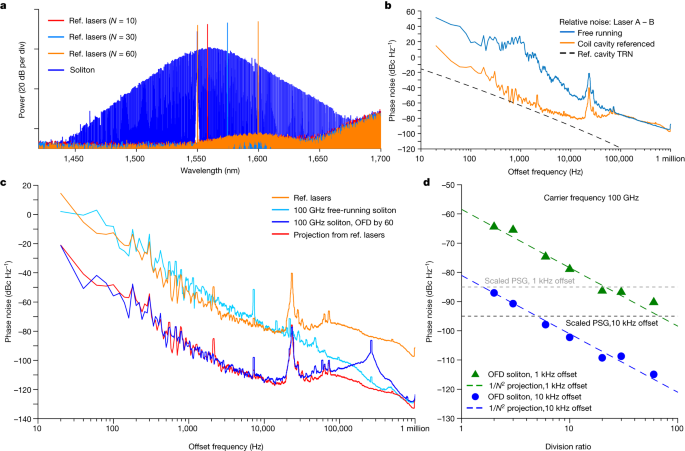

The soliton microcomb is generated in an integrated, bus-waveguide-coupled Si$_3$N$_4$ micro-ring resonator10,12 with a cross-section of 1.55 μm width × 0.8 μm height. The ring resonator has a radius of 228 μm, an FSR of 100 GHz and an intrinsic (loaded) quality factor of $4.3 \times 10^6$ ($3.0 \times 10^6$). The pump laser of the ring resonator is derived from the first modulation sideband of an ultra-low-noise semiconductor extended distributed Bragg reflector laser from Morton Photonics37, and the sideband frequency can be rapidly tuned by a voltage-controlled oscillator (VCO). This allows single soliton generation by implementing rapid frequency sweeping of the pump laser38, as well as fast servo control of the soliton repetition rate by tuning the VCO30. The optical spectrum of the soliton microcombs is shown in Fig. 3a, which has a 3 dB bandwidth of 4.6 THz. The spectra of reference lasers are also plotted in the same figure.

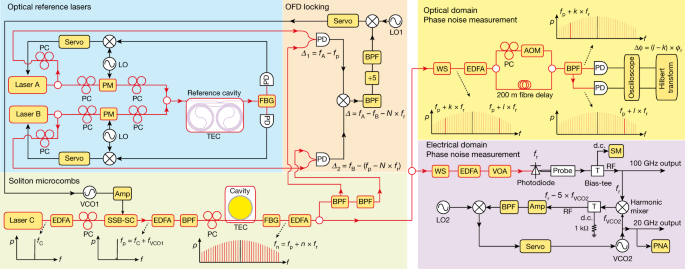

The OFD is implemented with the two-point locking method29,30. The two reference lasers are photomixed with the soliton microcomb on two separate photodiodes to create beat notes between the reference lasers and their nearest comb lines. The beat note frequencies are $\Delta_1 = f_A - (f_p + n \times f_r)$ and $\Delta_2 = f_B - (f_p + m \times f_r)$, where $f_r$ is the repetition rate of the soliton, $f_p$ is the pump laser frequency and $n$, $m$ are the comb line numbers relative to the pump line number. These two beat notes are then subtracted on an electrical mixer to yield the frequency and phase difference between the optical references and $N$ times the repetition rate: $\Delta = \Delta_1 - \Delta_2 = (f_A - f_B) - (N \times f_r)$, where $N = n - m$ is the division ratio. Frequency $\Delta$ is then divided by five electronically and phase locked to a low-frequency local oscillator (LO, $f_{\mathrm{LO1}}$) by feedback control of the VCO frequency. The tuning of the VCO frequency directly tunes the pump laser frequency, which then tunes the soliton repetition rate through Raman self-frequency shift and dispersive wave recoil effects20. Within the servo bandwidth, the frequency and phase of the optical references are thus divided down to the soliton repetition rate, as $f_r = (f_A - f_B - 5 f_{\mathrm{LO1}})/N$. As the local oscillator frequency is in the tens of MHz range and its phase noise is negligible compared to the optical references, the phase noise of the soliton repetition rate ($S_r$) within the servo locking bandwidth is determined by that of the optical references ($S_o$): $S_r = S_o/N^2$.
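The two-point locking relations can be verified numerically. The sketch below is an illustration under stated assumptions: the pump frequency (190.4 THz), the comb line numbers (n = 30, m = −30) and the local oscillator frequency (20 MHz) are hypothetical values chosen only to make the arithmetic concrete, and the function names are not from the original work. Exact rational arithmetic is used so that sub-hertz differences between terahertz-scale numbers are not lost to floating-point rounding.

```python
from fractions import Fraction


def locked_rep_rate(f_a, f_b, f_lo1, n_div, prescaler=5):
    """Repetition rate enforced by the lock: (f_A - f_B - 5*f_LO1) / N."""
    return (Fraction(f_a) - Fraction(f_b) - prescaler * Fraction(f_lo1)) / n_div


def beat_notes(f_a, f_b, f_p, f_r, n, m):
    """Beat notes between each reference laser and its nearest comb line."""
    delta_1 = f_a - (f_p + n * f_r)
    delta_2 = f_b - (f_p + m * f_r)
    return delta_1, delta_2


# Frequencies in Hz. F_A and F_B follow the text; the rest are assumptions.
F_A = 193_400_000_000_000
F_B = 187_400_000_000_000
F_P = 190_400_000_000_000   # hypothetical pump frequency
F_LO1 = 20_000_000          # hypothetical LO frequency (tens of MHz)
N_LINE, M_LINE = 30, -30    # hypothetical comb line numbers, N = n - m = 60

f_r = locked_rep_rate(F_A, F_B, F_LO1, N_LINE - M_LINE)
d1, d2 = beat_notes(F_A, F_B, F_P, f_r, N_LINE, M_LINE)

print(float(f_r) / 1e9)          # locked repetition rate in GHz
print(d1 - d2 == 5 * F_LO1)      # mixer output equals 5 * f_LO1 when locked
```

The pump frequency cancels in the difference of the two beat notes, which is why the locked repetition rate depends only on the two reference lasers and the local oscillator.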

To test the OFD, the phase noise of the OFD soliton repetition rate is measured for division ratios of $N$ = 2, 3, 6, 10, 20, 30 and 60. In the measurement, one reference laser is kept at 1,550.1 nm, while the other reference laser is tuned to a wavelength that is $N$ times the microresonator FSR away from the first reference laser (Fig. 3a). The phase noise of the reference lasers and soliton microcombs is measured in the optical domain by using dual-tone delayed self-heterodyne interferometry39. In this method, two lasers at different frequencies are sent into an unbalanced Mach–Zehnder interferometer with an acousto-optic modulator in one arm (Fig. 2). The two lasers are then separated by a fibre-Bragg grating filter and detected on two different photodiodes. The instantaneous frequency and phase fluctuations of these two lasers can be extracted from the photodetector signals by using the Hilbert transform. Using this method, the phase noise of the phase difference between the two stabilized reference lasers is measured and shown in Fig. 3b. In this work, the phase noise of the reference lasers does not reach the thermorefractive noise limit of the reference cavity9 and is likely to be limited by environmental acoustic and mechanical noises. For soliton repetition rate phase noise measurement, a pair of comb lines with comb numbers $l$ and $k$ are selected by a programmable line-by-line waveshaper and sent into the interferometer. The phase noise of their phase difference is measured, and its division by $(l - k)^2$ yields the soliton repetition rate phase noise39.
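The Hilbert-transform step used to recover instantaneous phase from a photodetector record can be illustrated on a synthetic beat note. The following is a minimal, dependency-free sketch and not the authors' processing code: it builds the analytic signal with a direct O(n²) discrete Fourier transform (an FFT-based routine would be used on real data), then unwraps the phase. The test signal, sample count and function names are all assumptions made for illustration.

```python
import cmath
import math


def analytic_signal(x):
    """Analytic signal of a real sequence, computed with a direct DFT."""
    n = len(x)
    spectrum = [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    # Keep DC (and Nyquist for even n), double the positive frequencies
    # and zero the negative-frequency half of the spectrum.
    weights = [0.0] * n
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
    for k in range(1, (n + 1) // 2):
        weights[k] = 2.0
    return [
        sum(weights[k] * spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
            for k in range(n)) / n
        for t in range(n)
    ]


def unwrapped_phase(z):
    """Accumulate sample-to-sample phase steps to avoid 2*pi jumps."""
    phase = [cmath.phase(z[0])]
    for prev, cur in zip(z, z[1:]):
        phase.append(phase[-1] + cmath.phase(cur * prev.conjugate()))
    return phase


# Synthetic beat note: 8 cycles across the record with a 0.3 rad phase offset.
n_samples, cycles, phi0 = 128, 8, 0.3
beat = [math.cos(2 * math.pi * cycles * t / n_samples + phi0)
        for t in range(n_samples)]

phase = unwrapped_phase(analytic_signal(beat))
step = phase[1] - phase[0]
print(round(phase[0], 6), round(step * n_samples / (2 * math.pi), 6))
```

In a dual-tone measurement the same extraction would be applied to each photodiode record, and the difference of the two recovered phases would carry the relative phase fluctuations of the two tones.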

The phase noise measurement results are shown in Fig. 3c,d. The best phase noise for soliton repetition rate is achieved with a division ratio of 60 and is presented in Fig. 3c. For comparison, the phase noises of reference lasers and the repetition rate of free-running soliton without OFD are also shown in the figure. Below 100 kHz offset frequency, the phase noise of the OFD soliton is lower than that of the reference lasers by a factor of roughly $60^2$, that is, 36 dB, and matches very well with the projected phase noise for OFD (noise of reference lasers − 36 dB). From roughly 148 kHz (OFD servo bandwidth) to 600 kHz offset frequency, the phase noise of the OFD soliton is dominated by the servo bump of the OFD locking loop. Above 600 kHz offset frequency, the phase noise follows that of the free-running soliton, which is likely to be affected by the noise of the pump laser20. Phase noises at 1 and 10 kHz offset frequencies are extracted for all division ratios and are plotted in Fig. 3d. The phase noises follow the $1/N^2$ rule, validating the OFD.
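The $1/N^2$ rule translates into a reduction of 20·log10(N) in decibels, which can be tabulated for the division ratios tested. The sketch below assumes ideal division with no added servo or electronic noise; the function names are illustrative, and the reference-laser noise value passed in the last line is a placeholder rather than a measured number.

```python
import math


def ofd_reduction_db(n_div):
    """Phase noise reduction in dB for division ratio N (S_r = S_o / N^2)."""
    return 20.0 * math.log10(n_div)


def projected_noise_db(reference_noise_db, n_div):
    """Projected repetition-rate phase noise inside the servo bandwidth."""
    return reference_noise_db - ofd_reduction_db(n_div)


for n_div in (2, 3, 6, 10, 20, 30, 60):
    print(f"N = {n_div:2d}: reduction = {ofd_reduction_db(n_div):5.2f} dB")

# Placeholder reference noise of -80 dBc/Hz, used only to show the call.
print(projected_noise_db(-80.0, 60))
```

For N = 60 the reduction is about 35.6 dB, which rounds to the 36 dB quoted in the text.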

The measured phase noise for the OFD soliton repetition rate is low for a microwave or mmWave oscillator. For comparison, phase noises of Keysight E8257D PSG signal generator (standard model) at 1 and 10 kHz are given in Fig. 3d after scaling the carrier frequency to 100 GHz. At 10 kHz offset frequency, our integrated OFD oscillator achieves a phase noise of −115 dBc Hz$^{-1}$, which is 20 dB better than a standard PSG signal generator. When comparing to integrated microcomb oscillators that are stabilized to long optical fibres30, our integrated oscillator matches the phase noise at 10 kHz offset frequency and provides better phase noise below 5 kHz offset frequency (carrier frequency scaled to 100 GHz). We speculate this is because our photonic chip is rigid and small when compared to fibre references and thus is less affected by environmental noises such as vibration and shock. This showcases the capability and potential of integrated photonic oscillators. When comparing to integrated photonic microwave and mmWave oscillators, our oscillator shows exceptional performance: at 10 kHz offset frequency, its phase noise is more than two orders of magnitude better than other demonstrations, including the free-running SiN soliton microcomb oscillators21,26 and the very recent single-laser OFD40. A notable exception is the recent work of Kudelin et al.41, in which 6 dB better phase noise was achieved by stabilizing a 20 GHz soliton microcomb oscillator to a microfabricated Fabry–Pérot reference cavity.

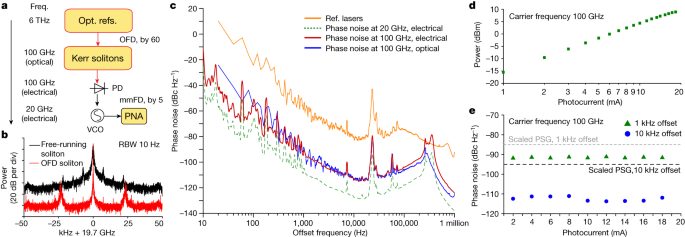

The OFD soliton microcomb is then sent to a high-power, high-speed flip-chip bonded CC-MUTC PD for mmWave generation. Similar to a uni-travelling carrier PD42, the carrier transport in the CC-MUTC PD depends primarily on fast electrons that provide high speed and reduce saturation effects due to space-charge screening. Power handling is further enhanced by flip-chip bonding the PD to a gold-plated coplanar waveguide on an aluminium nitride submount for heat sinking43. The PD used in this work is an 8-μm-diameter CC-MUTC PD with 0.23 A/W responsivity at 1,550 nm wavelength and a 3 dB bandwidth of 86 GHz. Details of the CC-MUTC PD are described elsewhere44. Whereas the power characterization of the generated mmWave is straightforward, phase noise measurement at 100 GHz is not trivial as the frequency exceeds the bandwidth of most phase noise analysers. One approach is to build two identical yet independent oscillators and down-mix the frequency for phase noise measurement. However, this is not feasible for us due to the limitation of laboratory resources. Instead, a new mmWave to microwave frequency division method is developed to coherently divide down the 100 GHz mmWave to 20 GHz microwave, which can then be directly measured on a phase noise analyser (Fig. 4a).

In this mmFD, the generated 100 GHz mmWave and a 19.7 GHz VCO signal are sent to a harmonic radio-frequency (RF) mixer (Pacific mmWave, model number WM/MD4A), which creates higher harmonics of the VCO frequency to mix with the mmWave. The mixer outputs the frequency difference between the mmWave and the fifth harmonic of the VCO frequency, $\Delta f = f_r - 5 f_{\mathrm{VCO2}}$, and $\Delta f$ is set to be around 1.16 GHz. $\Delta f$ is then phase locked to a stable local oscillator ($f_{\mathrm{LO2}}$) by feedback control of the VCO frequency. This stabilizes the frequency and phase of the VCO to that of the mmWave within the servo locking bandwidth, as $f_{\mathrm{VCO2}} = (f_r - f_{\mathrm{LO2}})/5$. The electrical spectrum and phase noise of the VCO are then measured directly on the phase noise analyser and are presented in Fig. 4b,c. The bandwidth of the mmFD servo loop is 150 kHz. The phase noise of the 19.7 GHz VCO can be scaled back to 100 GHz to represent the upper bound of the mmWave phase noise. For comparison, the phase noise of reference lasers and the OFD soliton repetition rate measured in the optical domain with the dual-tone delayed self-heterodyne interferometry method are also plotted. Between 100 Hz and 100 kHz offset frequency, the phase noise of the soliton repetition rate and that of the generated mmWave match very well with each other. This validates the mmFD method and indicates that the phase stability of the soliton repetition rate is well transferred to the mmWave. Below 100 Hz offset frequency, measurements in the optical domain suffer from phase drift in the 200 m optical fibre in the interferometer and thus yield phase noise higher than that measured with the electrical method.
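The mmFD relations can be checked with the nominal numbers given in the text. The sketch below is illustrative only: it treats 19.7 GHz and 1.16 GHz as exact, which the text does not claim, and it assumes ideal frequency scaling when referring the VCO phase noise back to a 100 GHz carrier. Function names are hypothetical.

```python
import math


def mmfd_vco_frequency(f_r_hz, f_lo2_hz, harmonic=5):
    """VCO frequency enforced by the lock: f_VCO2 = (f_r - f_LO2) / 5."""
    return (f_r_hz - f_lo2_hz) / harmonic


def scaling_offset_db(f_measured_hz, f_target_hz):
    """dB to add when referring phase noise from f_measured to f_target."""
    return 20.0 * math.log10(f_target_hz / f_measured_hz)


F_VCO2 = 19.7e9   # nominal VCO frequency from the text
F_LO2 = 1.16e9    # nominal offset frequency from the text

# Repetition rate implied by the nominal values: f_r = 5 * f_VCO2 + f_LO2.
f_r = 5 * F_VCO2 + F_LO2
print(f_r / 1e9)
print(mmfd_vco_frequency(f_r, F_LO2) / 1e9)

# Offset applied to the measured VCO phase noise to refer it to 100 GHz.
print(round(scaling_offset_db(F_VCO2, 100e9), 2))
```

The scaled result is an upper bound because any residual noise added by the mmFD loop and the VCO is also multiplied when the measurement is referred back to the mmWave carrier.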

Finally, the mmWave phase noise and power are measured versus the MUTC PD photocurrent from 1 to 18.3 mA at −2 V bias by varying the illuminating optical power on the PD. Although the mmWave power increases with the photocurrent (Fig. 4d), the phase noise of the mmWave remains almost the same for all different photocurrents (Fig. 4e). This suggests that low phase noise and high power are simultaneously achieved. The achieved power of 9 dBm is one of the highest powers ever reported at 100 GHz frequency for photonic oscillators36.