
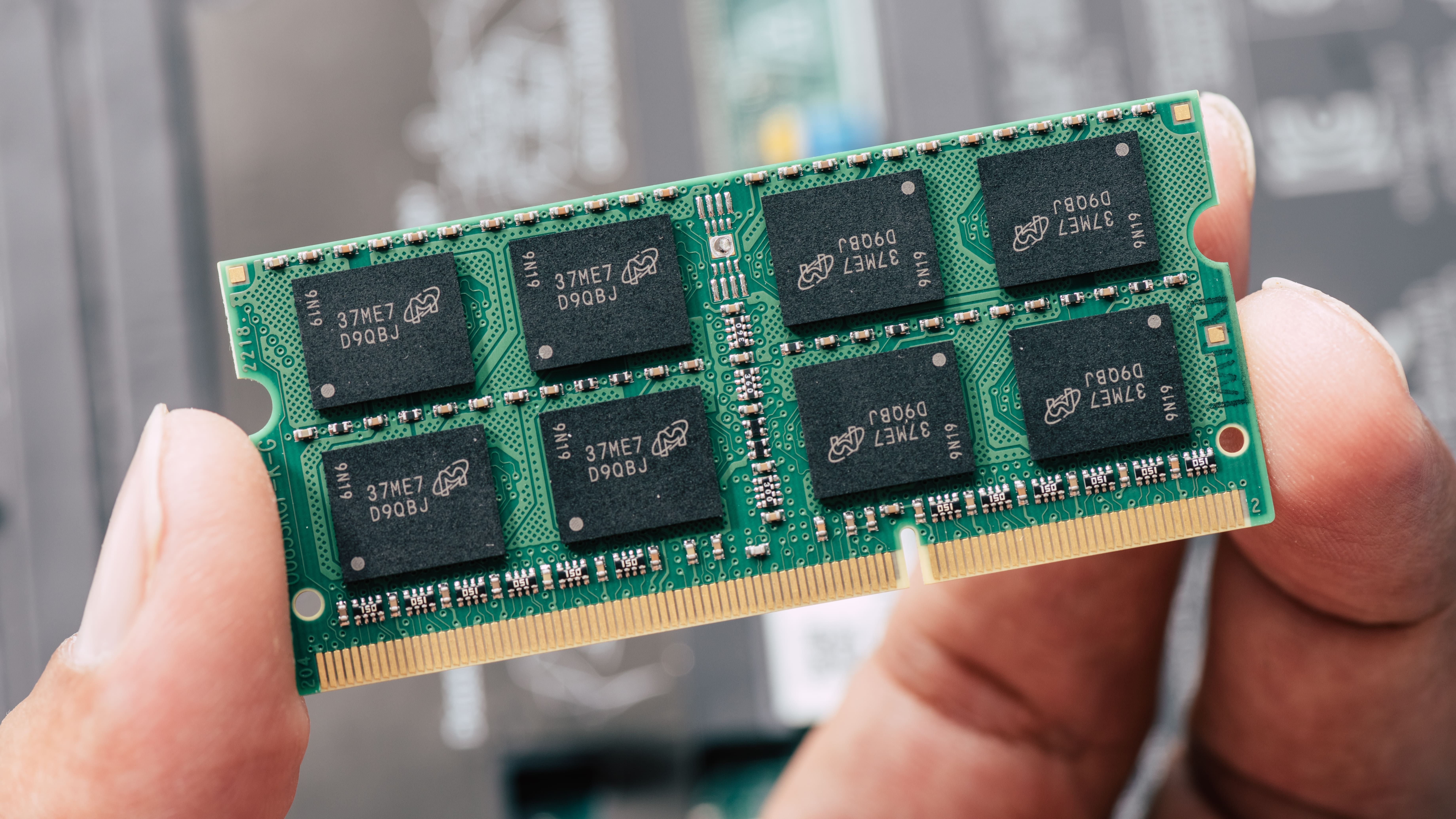

Looking to ramp up server capacity? Inventec's 96 DIMM CXL Box could be what you're looking for. Unveiled at OCP Summit 2024 together with Astera Labs, the CXL expansion box lets users connect up to 96 DDR5 DIMMs to a single server, providing massive memory capacities of up to tens of terabytes per server.

As reported by ServeTheHome, the expansion box can be connected to an up-to-eight-way Intel Xeon 6 Granite Rapids-SP server, which offers higher performance.

Combined with the Intel Xeon 6 server, which has a total of 128 DIMM slots, the 96 slots in the box mean users can tap a whopping 224 DIMM slots for a single server, providing ample memory capacity.

What to expect from the 96 DIMM CXL expansion box

So what exactly does this announcement mean? Well, we're talking about major increases in server memory capacity.

The DDR5 DIMMs in question here are DDR5-4800, and the expansion box uses Astera Labs' Leo CXL memory controllers. Elsewhere, users get 24 CDFP ports, each with PCIe Gen5 x16 connectivity, linking the CXL box and the servers themselves.
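To put those link and memory speeds side by side, here is a rough back-of-the-envelope sketch. It only uses the nominal signaling rates (32 GT/s per PCIe Gen5 lane with 128b/130b encoding, and a 64-bit data path at 4800 MT/s for DDR5-4800); it ignores CXL protocol overhead and makes no assumption about how the DIMMs are actually wired to the ports, so the numbers are theoretical peaks, not measured throughput. The function names are illustrative only.

```python
# Theoretical peak bandwidth figures; real-world throughput will be lower.

PCIE_GEN5_GT_PER_LANE = 32.0      # GT/s per lane (PCIe 5.0 nominal rate)
PCIE_ENCODING = 128 / 130         # 128b/130b line encoding efficiency
CDFP_PORTS = 24                   # port count quoted in the article


def pcie_gen5_gb_per_s(lanes: int) -> float:
    """Peak one-direction bandwidth of a PCIe Gen5 link in GB/s."""
    gbit_per_s = PCIE_GEN5_GT_PER_LANE * lanes * PCIE_ENCODING
    return gbit_per_s / 8


def ddr5_peak_gb_per_s(mt_per_s: int, bus_bytes: int = 8) -> float:
    """Peak bandwidth of one DDR5 DIMM with a 64-bit (8-byte) data path."""
    return mt_per_s * bus_bytes / 1000


if __name__ == "__main__":
    per_link = pcie_gen5_gb_per_s(16)
    print(f"PCIe Gen5 x16, per direction: {per_link:.1f} GB/s")
    print(f"All {CDFP_PORTS} ports combined: {per_link * CDFP_PORTS:.0f} GB/s")
    print(f"One DDR5-4800 DIMM: {ddr5_peak_gb_per_s(4800):.1f} GB/s")
```

In other words, a single x16 link peaks at roughly 63 GB/s per direction, somewhat more than the 38.4 GB/s peak of one DDR5-4800 DIMM.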

As ServeTheHome noted, the launch shows that users could benefit from up to 20 TB of memory in a single server, which has major long-term implications for server capabilities and represents a big step forward.
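As a quick sanity check on that figure, the sketch below adds the 128 server slots to the 96 slots in the CXL box and multiplies by a per-DIMM size. The module sizes (64, 96 and 128 GB) are assumptions chosen for illustration, since the article does not say which DIMMs were used, and 1 TB is treated as 1024 GB.

```python
# Rough capacity arithmetic; DIMM sizes below are illustrative assumptions.

SERVER_DIMM_SLOTS = 128   # slots in the eight-way server, per the article
CXL_BOX_DIMM_SLOTS = 96   # slots in the CXL expansion box, per the article


def total_capacity_tb(dimm_size_gb: int,
                      server_slots: int = SERVER_DIMM_SLOTS,
                      box_slots: int = CXL_BOX_DIMM_SLOTS) -> float:
    """Total memory in TB (1 TB = 1024 GB) if every slot holds one DIMM."""
    total_slots = server_slots + box_slots
    return total_slots * dimm_size_gb / 1024


if __name__ == "__main__":
    for size_gb in (64, 96, 128):  # hypothetical module sizes
        print(f"{size_gb} GB DIMMs: {total_capacity_tb(size_gb):.1f} TB")
```

Under these assumptions, 96 GB modules across all 224 slots come to 21 TB, which is in the same range as the roughly 20 TB quoted.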

All of this underlines the growing potential of Compute Express Link (CXL) technology.

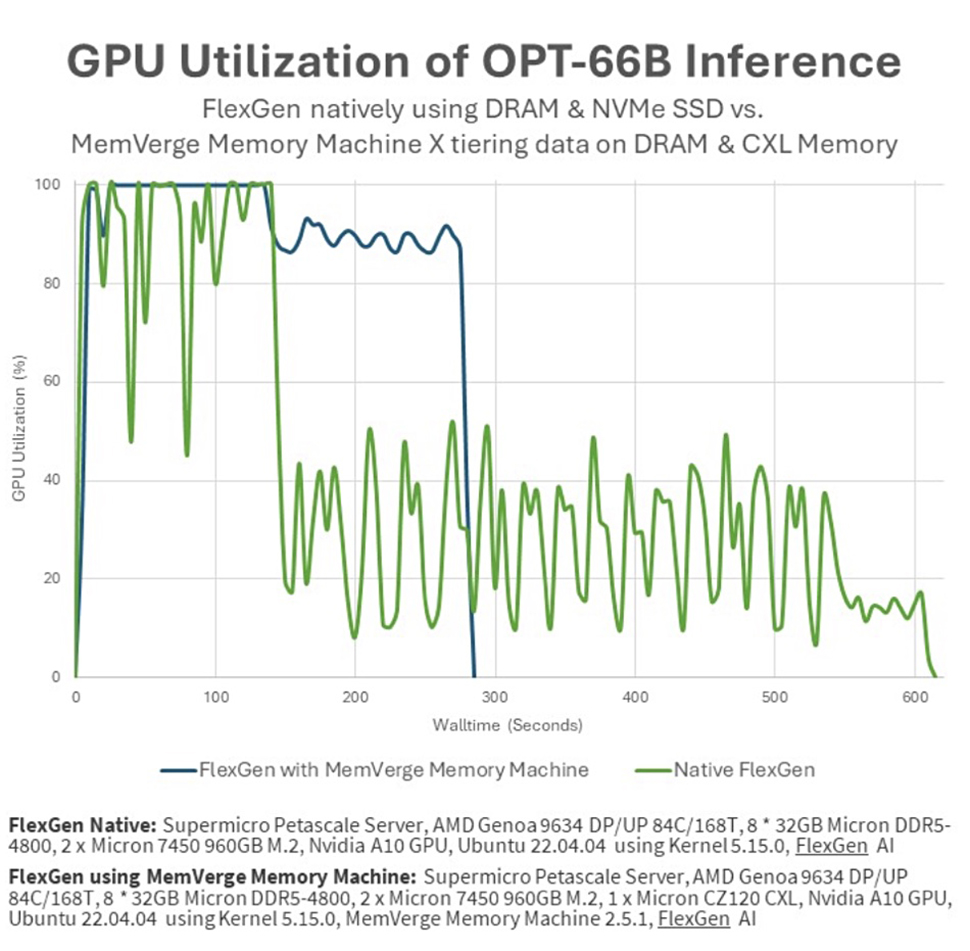

In 2023, Meta announced a partnership with AMD to show off a type of add-in memory for servers that is capable of recycling the equivalent of a petabyte of RAM.

As part of the collaboration, the two companies demonstrated that CXL can be used to significantly improve memory efficiency, thereby cutting costs and improving performance.

The demo board presented by AMD and Meta included an AMD EPYC 9004 Genoa and featured four DIMM slots, a heatsink, and a fan.

Astera Labs' involvement here marks the semiconductor company's second major announcement so far this year. In April 2024, Astera Labs showed off its Aries 6 PCIe retimer board, which TechRadar Pro reported at the time could unlock significant benefits for hyperscalers working to accelerate AI development.

This retimer board can help maintain data signal integrity across the PCIe interface.

High-speed data transfers of this kind often suffer degradation over long distances or because of interference.

The Aries 6 retimers are the first in Astera Labs' PCIe 6.x portfolio and are specifically aimed at improving connectivity for next-generation GPUs, accelerators, CPUs, and CXL memory controllers.
