Creating artificial intelligence (AI) agents has become a crucial skill for many. Thanks to the no-code AI tools and applications already available, you no longer need coding skills to create custom AI assistants and workflows. Voiceflow is one such platform. Its simple drag-and-drop interface lets both professionals and enthusiasts build AI agents without needing coding expertise. The platform suits anyone who wants to design, prototype, and deploy AI agents, workflows, and assistants that hold conversations across various channels, whether that is an existing website, an application, or an online platform or service.

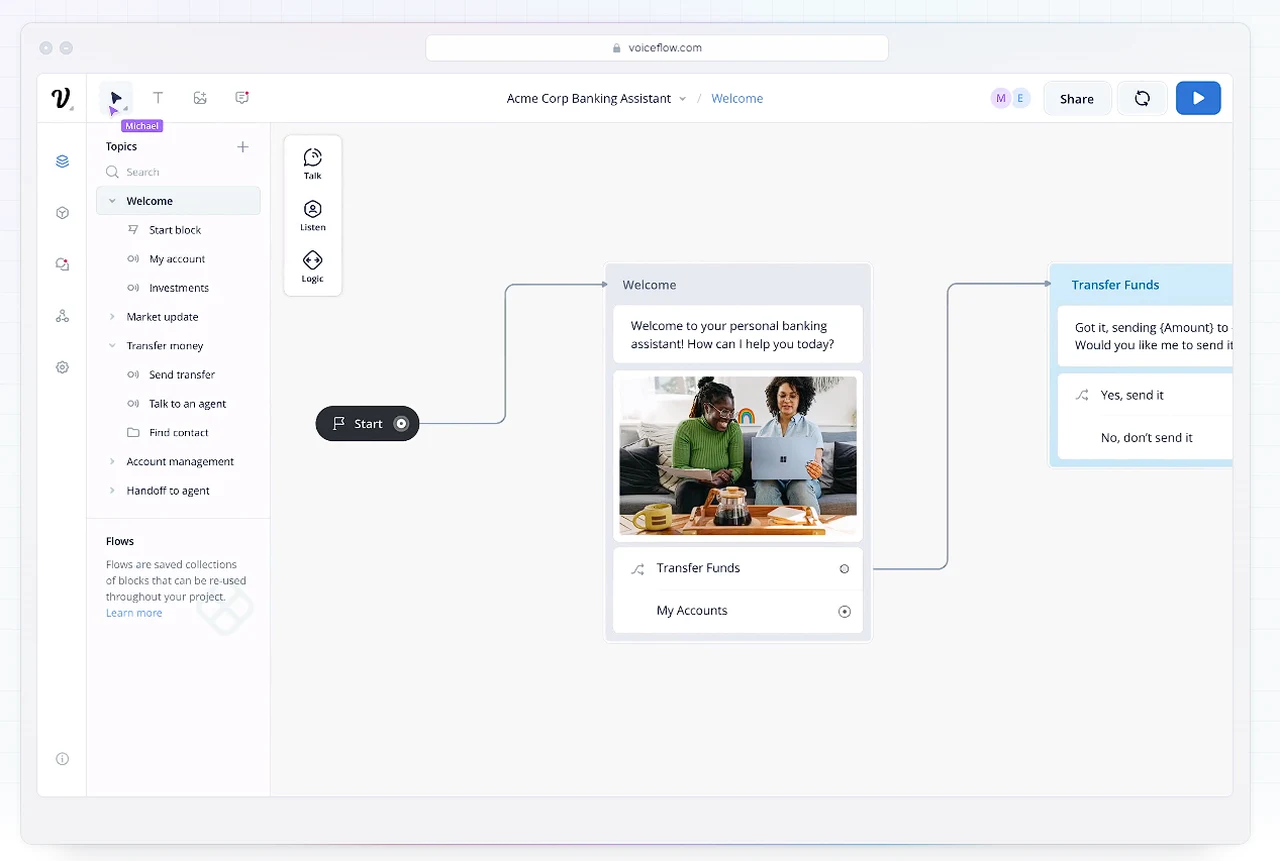

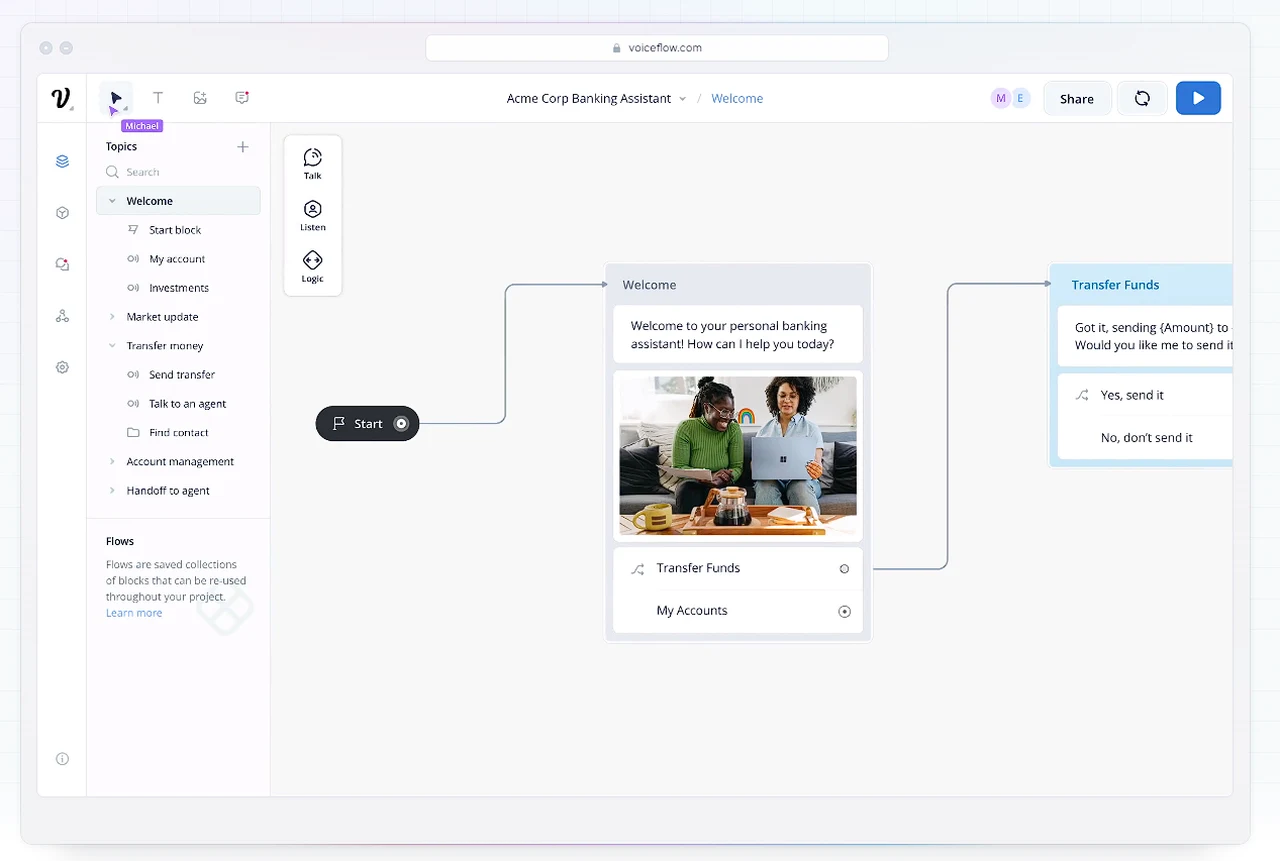

Voiceflow’s user-friendly design interface is its main attraction. It enables users to visually map out the conversational flow of their AI agents. This visual approach makes the design process not only more efficient but also more accessible to people without a programming background. Users create an AI agent by dragging elements into place and defining how they interact with each other.

One of the standout features of Voiceflow is its ability to work with advanced language models. This means that the AI agents you create can have sophisticated natural language processing abilities. As a result, they can provide responses that feel more natural and human-like. This is particularly useful for AI agents that function as customer support bots or personal virtual assistants.

Drag-and-Drop No-Code AI Agent Creator

When it comes to customization, Voiceflow excels. It allows users to enrich their AI agent’s knowledge base with custom data sets, so the agent can offer information that is not only accurate but also tailored to the specific needs of the task at hand.

Voiceflow also makes it easy to track how well your AI agent is performing. The platform includes analytics tools that let you monitor user interactions and engagement. These insights help you refine your AI agent and improve its performance over time.

Voiceflow is also an excellent tool for prototyping and team collaboration. Its prototyping tools are user-friendly, making it easy to share and test your AI agent. This is essential for getting quick feedback and making necessary adjustments. For larger teams, Voiceflow can support up to 100 members, making it suitable for big projects that require coordinated efforts.


For those who need even more from their AI agents, Voiceflow offers extensive API options. These APIs provide advanced dialogue management and project configuration. They also allow for seamless integration of the knowledge base, ensuring that your AI agent can be tailored to meet very specific requirements and work well with other systems.
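As a rough illustration of the API route, the Python sketch below builds a request for Voiceflow’s Dialog Manager API, which passes a user’s message to a published agent and returns a list of “traces” describing the agent’s reply. The endpoint URL, the `Authorization` header carrying a project API key, the `{"action": {"type": "text", ...}}` request body, and the trace shape are assumptions based on Voiceflow’s public documentation at the time of writing and may change. The function names and the placeholder key are hypothetical. The sketch only builds the request, so nothing is sent over the network.

```python
import json
import urllib.request

# Assumed base URL of Voiceflow's Dialog Manager runtime; verify against the current docs.
RUNTIME_URL = "https://general-runtime.voiceflow.com"


def build_interact_request(api_key: str, user_id: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one user message to an agent.

    Hypothetical helper: the path and body shape follow the assumed Dialog Manager API.
    """
    body = {"action": {"type": "text", "payload": text}}
    return urllib.request.Request(
        url=f"{RUNTIME_URL}/state/user/{user_id}/interact",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def extract_replies(traces: list) -> list:
    """Collect the agent's text replies from a list of response traces.

    Hypothetical helper: assumes text and speak traces carry payload["message"].
    """
    replies = []
    for trace in traces:
        if trace.get("type") in ("text", "speak"):
            message = trace.get("payload", {}).get("message")
            if message:
                replies.append(message)
    return replies


if __name__ == "__main__":
    # Placeholder key: a real project API key would come from your Voiceflow workspace.
    req = build_interact_request("VF.DM.your-key-here", "demo-user", "What are your opening hours?")
    print(req.get_method(), req.full_url)
```

To actually talk to an agent, you would send the request with `urllib.request.urlopen(req)` and pass the decoded JSON response to `extract_replies`. Reusing the same `user_id` across calls should let the runtime keep track of where that user is in the conversation.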

No-Code AI User Interface and Design Process

Voiceflow stands out as a top choice for anyone interested in building advanced conversational AI agents. Its combination of an easy-to-use interface, powerful integrations, and a wide range of customization options makes it a flexible and approachable platform. Whether you’re working alone or as part of a team, Voiceflow gives you the tools and support needed to bring your AI agent ideas to life.

Voiceflow’s platform is a game-changer for those looking to create artificial intelligence (AI) agents without deep technical knowledge. Its drag-and-drop interface simplifies the process of building conversational AI, making it accessible to a broader audience. Users construct the conversational flow by placing elements on a canvas and connecting them to map out the dialogue structure. Because the interface is so easy to use, creating an AI agent becomes more about designing the conversation than about writing the code behind it.

The platform’s user-friendly design interface is particularly beneficial for those who are not programmers. It empowers users to focus on the creative aspects of AI agent development, such as the personality and tone of the agent, without getting bogged down by coding syntax. By enabling a more intuitive design process, Voiceflow democratizes AI development, allowing more people to contribute to the field and bring diverse perspectives to the creation of AI agents.

Enhancing Conversational AI with Advanced Language Capabilities

Voiceflow’s integration with advanced language models elevates the capabilities of the AI agents created on its platform. These models incorporate natural language processing (NLP), which is a branch of AI that focuses on the interaction between computers and humans using natural language. The ability to process and understand human language allows AI agents to respond in a way that is more conversational and intuitive, which is particularly important for applications like customer support bots or personal virtual assistants.

The sophistication of these language models means that the AI agents can handle a wide range of queries and engage in more meaningful interactions with users. This level of natural language understanding can significantly enhance the user experience, making the AI agents more effective and enjoyable to interact with. As a result, businesses and developers can create AI assistants that are not only functional but also provide a level of engagement that closely resembles human interaction.

Customization and Collaboration with Voiceflow

Customization is a key strength of Voiceflow, allowing users to tailor their AI agents to specific needs. By enriching the knowledge base with custom data sets, AI agents can deliver personalized and contextually relevant information. This customization extends to the responses the AI agent provides, ensuring that the information is not just accurate but also specific to the individual user or situation. This level of personalization is critical for businesses that want to provide a unique and targeted experience to their customers.

Voiceflow also shines in its support for prototyping and team collaboration. The platform’s prototyping tools are designed to be user-friendly, enabling quick sharing and testing of AI agents. This rapid prototyping is crucial for iterating on design and functionality, allowing teams to refine their AI agents based on real user feedback. For larger projects, Voiceflow’s ability to support collaboration among team members ensures that everyone can contribute to the development process, making it a valuable tool for both small and large-scale AI initiatives.
