Meta has announced an update for its AI assistant platform, Meta AI, which is now built on the long-awaited open source Llama 3 large language model (LLM). The company says it’s “now the most intelligent AI assistant you can use for free.” As for use case scenarios, the company touts the ability to help users study for tests, plan dinners and schedule nights out. You know the drill. It’s an AI chatbot.

Meta AI, however, has expanded into just about every nook and cranny of the company’s portfolio after a test run. It’s still available on Instagram, but now users can access it on Messenger, Facebook feeds and WhatsApp. The chatbot also has a dedicated web portal. You don’t need a company login to use it this way, though it won’t generate images. Those recently-released also integrate with the bot, with Quest headset integration coming soon.

On the topic of image generation, Meta says it’s now much faster and will produce images as you type. It also handles custom animated GIFs, which is pretty cool. Hopefully, it can successfully generate images of different races of people. We found that it struggled with this a couple of weeks back, as it seemed biased toward creating images of people of the same race, even when prompted otherwise.

Meta’s also expanding global availability along with this update, as Meta AI is coming to more than a dozen countries outside of the US. These include Australia, Canada, Ghana, Jamaica, Pakistan, Uganda and others. However, there’s one major caveat. It’s only in English, which doesn’t seem that useful to a global audience, but whatever.

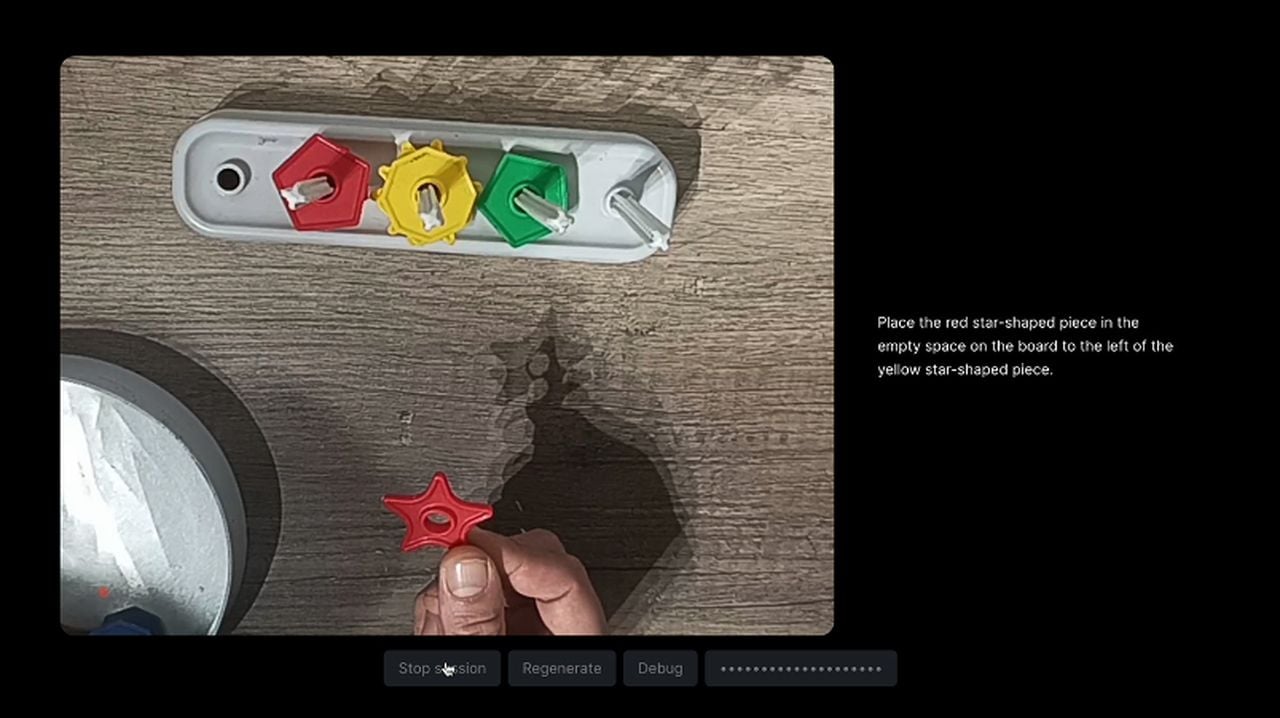

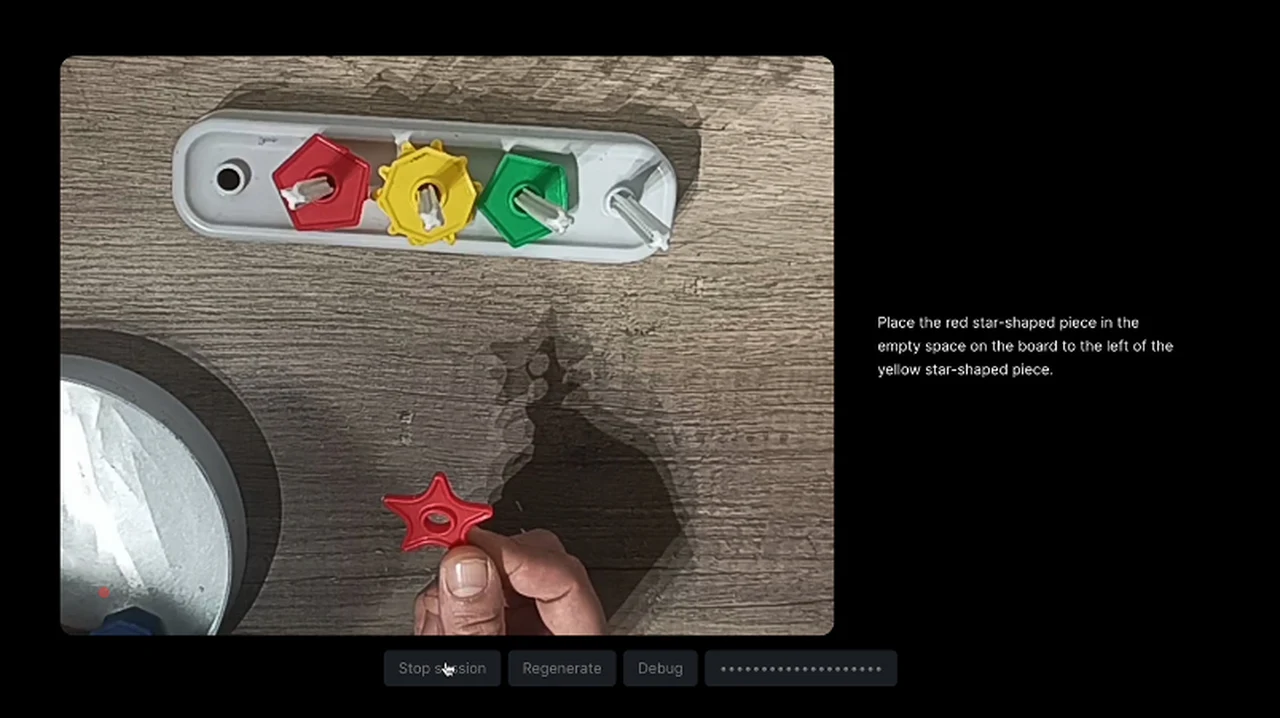

As for safety and reliability, the company says Llama 3 has been trained on an expanded data set when compared to Llama 2. It also used synthetic data to create lengthy documents to train on and claims it excluded all data sources that are known to contain a “high volume of personal information about private individuals.” Meta says it conducted a series of evaluations to see how the chatbot would handle risk areas like conversations about weapons, cyber attacks and child exploitation, and adjusted as required. In our brief testing with the product, we’ve already run into hallucinations, as seen below.

AI has become one of Meta CEO , along with in a secluded Hawaiian compound, but the company’s still playing catch-up to OpenAI and, to a lesser extent, Google. Meta’s Llama 2 never really wowed users, due to a limited feature set, so maybe this new version of the AI assistant will catch lightning in a bottle. At the very least, it should be able to draw lightning in a bottle, or more accurately, slightly tweak someone else’s drawing of lightning in a bottle.
