Gamers seeking a compact yet powerful Linux gaming PC running ChimeraOS, with access to Valve’s Steam Big Picture mode and the ability to play their favorite games on a large-screen TV or monitor, may find the MINISFORUM MS01 an attractive option. This mini PC has been making waves in the gaming community, not only for its small footprint but also for its strong performance. The MS01 is designed for those who want a high-quality gaming experience without the bulk of a traditional tower.

At the heart of the MS01 is an Intel Core i9-13900H processor, which, combined with 32 GB of DDR5 RAM, helps games run smoothly. Paired with a Radeon RX 6400 graphics card, the system can handle graphically demanding games, making it a good fit for ChimeraOS. Storage is ample, too: the MS01 has three M.2 slots, allowing up to 24 TB of storage, which is more than enough for a large library of games and media.

One of the standout features of the MS01 is its PCIe x16 slot, which accepts low-profile graphics cards and lets users upgrade their GPU as technology advances. This upgradeability is particularly appealing to gamers who want to keep their rig current, and it should help the MS01 keep pace with new games and hardware releases.

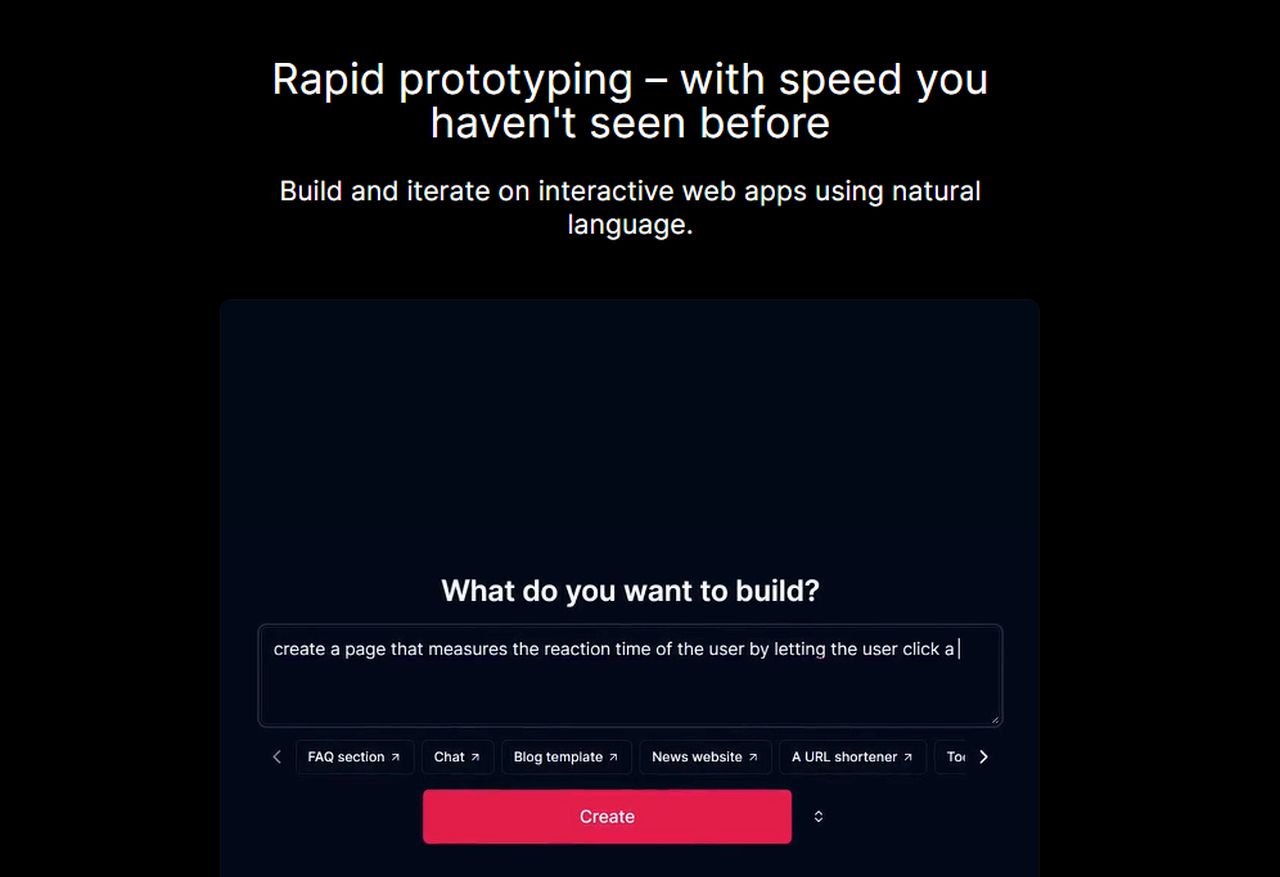

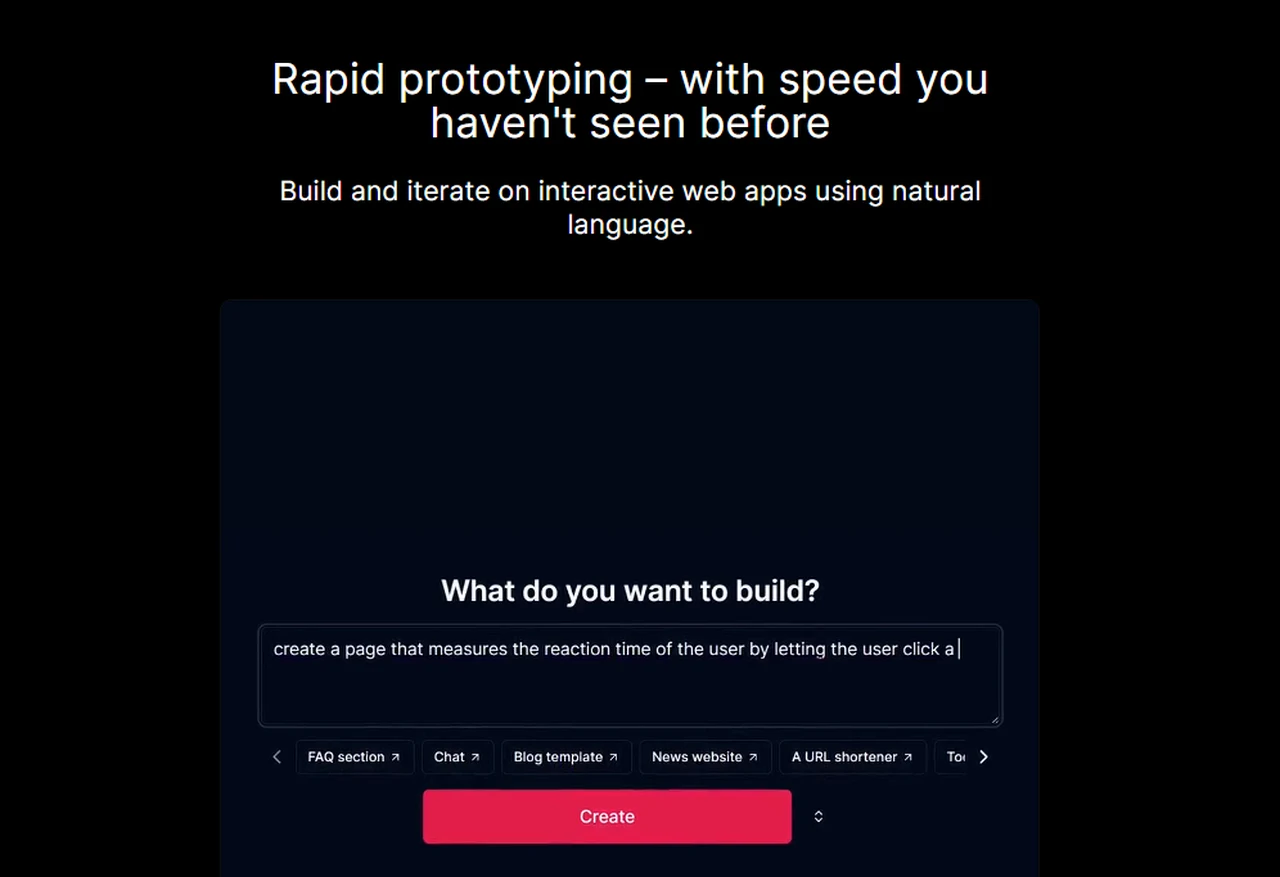

Steam Big Picture Chimera OS gaming PC

ChimeraOS is an operating system that provides an out-of-the-box couch gaming experience. After installation, it boots directly into Steam Big Picture mode so you can start playing your favorite games.


Connectivity is another area where the MS01 shines. With Wi-Fi 6, Bluetooth 5.2, and multiple Ethernet ports, the mini PC offers plenty of options for connecting to the internet and other devices, so downloading games, streaming content, and playing online multiplayer should all benefit from a stable, fast connection.

ChimeraOS: the perfect gaming companion

The MS01 also supports ChimeraOS, a Linux-based operating system that offers a gaming experience similar to that of Valve’s popular Steam Deck. Installing ChimeraOS on the MS01 is straightforward, and the hardware is fully compatible with the operating system, making setup hassle-free. Once installed, ChimeraOS provides a user-friendly interface and access to a vast selection of games.

ChimeraOS is an operating system designed specifically for gaming, particularly in a living room setup. It offers a seamless couch gaming experience by booting directly into Steam Big Picture mode, which underscores its primary purpose: turning a conventional computer into a dedicated, console-like gaming environment.

- ChimeraOS is designed to be straightforward to install, so users can set up their new gaming system quickly. This is a significant advantage for gamers who prefer a plug-and-play experience without the complexity of setting up a gaming environment on a traditional operating system.

- A notable feature of ChimeraOS is its built-in web app, which lets users install and manage games from any device. This offers a level of convenience and flexibility not commonly found on standard gaming consoles and reflects the operating system’s focus on accessibility and ease of use.

- ChimeraOS is characterized by its minimalistic design. It provides only the essential components needed for gaming, eliminating unnecessary software or features that could detract from the gaming experience. This minimalism ensures that the system resources are primarily dedicated to gaming performance.

- The operating system promises an “out-of-the-box” experience, with zero configuration needed for supported games. This is particularly appealing to gamers who want to avoid the tedious process of tweaking settings before they can play.

- Keeping the system up to date is another key aspect of ChimeraOS. Regular updates deliver the latest drivers and software, and they are fully automatic and run in the background to minimize disruptions to gameplay. This helps maintain performance and security without getting in the way of gaming.

- Controller support is central to ChimeraOS. The interface is fully navigable with a controller, reflecting its living room focus, and a wide range of controllers is supported, including Xbox, PlayStation, and Steam controllers, so gamers can use their preferred controller without compatibility concerns.

Performance tests of the MS01 suggest it can handle recent gaming titles well. Benchmarks for games such as “Marvel’s Spider-Man: Miles Morales” and “Cyberpunk 2077” show high-quality gameplay with consistent frame rates, giving a clear indication of the performance gamers can expect from this mini PC.

Overall, the MINISFORUM MS01 is a versatile and powerful gaming machine that excels in both performance and upgradeability. It is well suited to a range of games on ChimeraOS, from blockbuster AAA titles to independent releases. For gamers who want a system that can adapt and grow with their gaming needs, the MS01 is a wise investment.

Image Credit: COS

