As artificial intelligence (AI) evolves, there is ongoing debate about how AI technologies should be built and shared. Two main approaches dominate the discussion: open-source AI and proprietary AI. A recent experiment comparing an open-source model, Orca-2-13B, with a proprietary model, GPT-4 Turbo, has sparked a lively debate. The debate is not just about which model is better but about what each approach means for the future of AI.

The open-source AI model, Orca-2-13B, is presented as an example of transparency, collaboration, and innovation. Open-source AI is about sharing code and ideas so that people can work together to make AI better. The premise is that when AI technology is open to all, anyone with the right skills can help improve it. One of its main advantages is that the model and its code are open to inspection, which makes it easier to examine how the system reaches its outputs and is important for trusting AI systems. Open-source AI also benefits from platforms like GitHub, where developers from all over the world can collaborate on improving AI models.

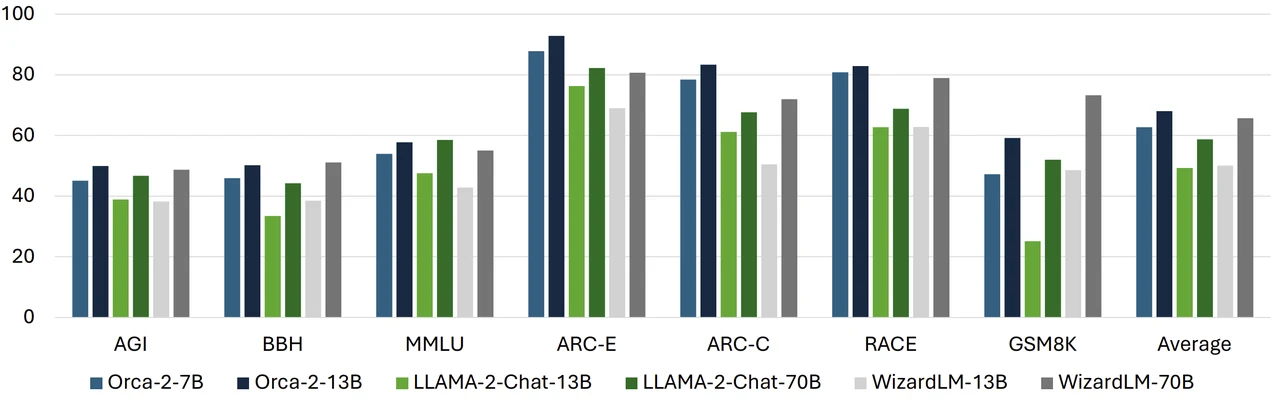

Orca 2 is Microsoft’s latest development in its efforts to explore the capabilities of smaller language models (LMs), on the order of 10 billion parameters or fewer. With Orca 2, Microsoft demonstrates that improved training signals and methods can give smaller language models enhanced reasoning abilities, which are typically found only in much larger language models.

On the other side is proprietary AI, like GPT-4 Turbo, which focuses on security, investment, and accountability. Proprietary AI is usually made by companies that spend heavily on research and development, and they argue that this investment is key to making AI smarter and more capable. With proprietary AI, the code isn’t shared openly, which helps protect it from misuse. The companies behind proprietary AI are also responsible for making sure the AI works well and meets ethical standards, which is important for keeping AI safe and effective.

GPT-4 Turbo vs Orca-2-13B

- Orca-2-13B (Open-Source AI)
  - Focus: Emphasizes transparency, collaboration, and innovation.
  - Benefits:
    - Encourages widespread participation and idea sharing.
    - Increases trust through transparent decision-making processes.
    - Fosters innovation by allowing communal input and improvements.
  - Challenges:
    - Potential for fragmented efforts and resource dilution.
    - Quality assurance can be inconsistent without structured oversight.
- GPT-4 Turbo (Proprietary AI)
  - Focus: Concentrates on security, investment, and accountability.
  - Benefits:
    - Higher investment leads to advanced research and development.
    - Greater control over AI, ensuring security and ethical compliance.
    - More consistent quality assurance and product refinement.
  - Challenges:
    - Limited accessibility and collaboration due to closed-source nature.
    - Might induce skepticism due to lack of transparency in decision-making.

The discussion around Orca-2-13B and GPT-4 Turbo has highlighted the strengths and weaknesses of both approaches. Open-source AI is great for driving innovation, but it can lead to a lot of similar projects that spread resources thin. Proprietary AI might give us more polished and secure products, but it can lack the openness that makes people feel comfortable using it.

Another important thing to think about is accessibility. Open-source AI is usually easier for developers around the world to get their hands on, which means more people can bring new ideas and improvements to the table. However, without strict quality checks, open-source AI might not always be reliable.

After much debate, there seems to be a slight preference for the open-source AI model, Orca-2-13B. The idea of an AI world that’s more inclusive, creative, and open is really appealing. But it’s also clear that we need to have strong communities and good quality checks to make sure open-source AI stays on the right track.

For those interested in open-source AI, there’s a GitHub repository available that has all the details of the experiment. It even includes a guide on how to use open-source models. This is a great opportunity for anyone who wants to dive into AI and be part of the ongoing conversation about where AI is headed.
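As a rough idea of what using an open model can look like, here is a minimal sketch that is not taken from the experiment's repository. It assumes the Hugging Face `transformers` and `accelerate` packages are installed, that the model is published under the id `microsoft/Orca-2-13b`, and that it expects the ChatML-style prompt layout described on its public model card. The helper names `build_prompt` and `generate` are hypothetical and used only for illustration.

```python
# Illustrative sketch only. MODEL_ID and the prompt layout are assumptions
# based on the public model card, not on the experiment's own code.
MODEL_ID = "microsoft/Orca-2-13b"


def build_prompt(system_message: str, user_message: str) -> str:
    """Wrap a system and a user message in a ChatML-style prompt."""
    return (
        f"<|im_start|>system\n{system_message}<|im_end|>\n"
        f"<|im_start|>user\n{user_message}<|im_end|>\n"
        f"<|im_start|>assistant"
    )


def generate(prompt: str, max_new_tokens: int = 256) -> str:
    """Load the model and return its completion for the given prompt.

    The first call downloads the model weights, which is a large download.
    """
    # Imported here so build_prompt works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, use_fast=False)
    # device_map="auto" relies on the accelerate package to place the weights.
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, device_map="auto")

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate(build_prompt("You are a careful assistant.", "Summarize the trade-offs of open-source AI."))` would then return the model's reply as a string. A 13-billion-parameter model needs substantial memory, so this is likely to be practical only on a machine with a capable GPU.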

The debate between open-source and proprietary AI models is about more than just code. It’s about deciding how we want to shape the development of AI. Whether you like the idea of working together in the open-source world or prefer the structured environment of proprietary AI, it’s clear that both ways of doing things will have a big impact on building an AI future that’s skilled, secure, and trustworthy.
