If you’re venturing into audio, music, and speech generation, a new open-source AI Text-to-Speech (TTS) toolkit called Amphion is worth a closer look. Designed with both seasoned experts and budding researchers in mind, Amphion is a platform for transforming various inputs into audio. Its primary appeal is that it simplifies and demystifies the complex processes of audio generation.

Amphion’s Core Functionality

Amphion isn’t just another toolkit on the market. It’s a comprehensive system that offers:

- Multiple Generation Tasks: Beyond traditional Text-to-Speech (TTS), Amphion extends to Singing Voice Synthesis (SVS), Voice Conversion (VC), and more. These tasks are at different stages of development, so the toolkit’s coverage is still growing.

- Advanced Model Support: The toolkit includes support for a range of state-of-the-art models like FastSpeech2, VITS, and NaturalSpeech2. These models are at the forefront of TTS technology, offering users a variety of options to suit their specific needs.

- Vocoder and Evaluation Metrics Integration: Vocoders are crucial for generating high-quality audio signals. Amphion includes several neural vocoders, among them GAN-based and diffusion-based options. Evaluation metrics are also part of the package, helping keep results consistent and comparable across generation tasks.

Why Amphion Stands Out

Amphion distinguishes itself through its user-friendly approach. If you’re wondering how this toolkit can benefit you, here’s a glimpse:

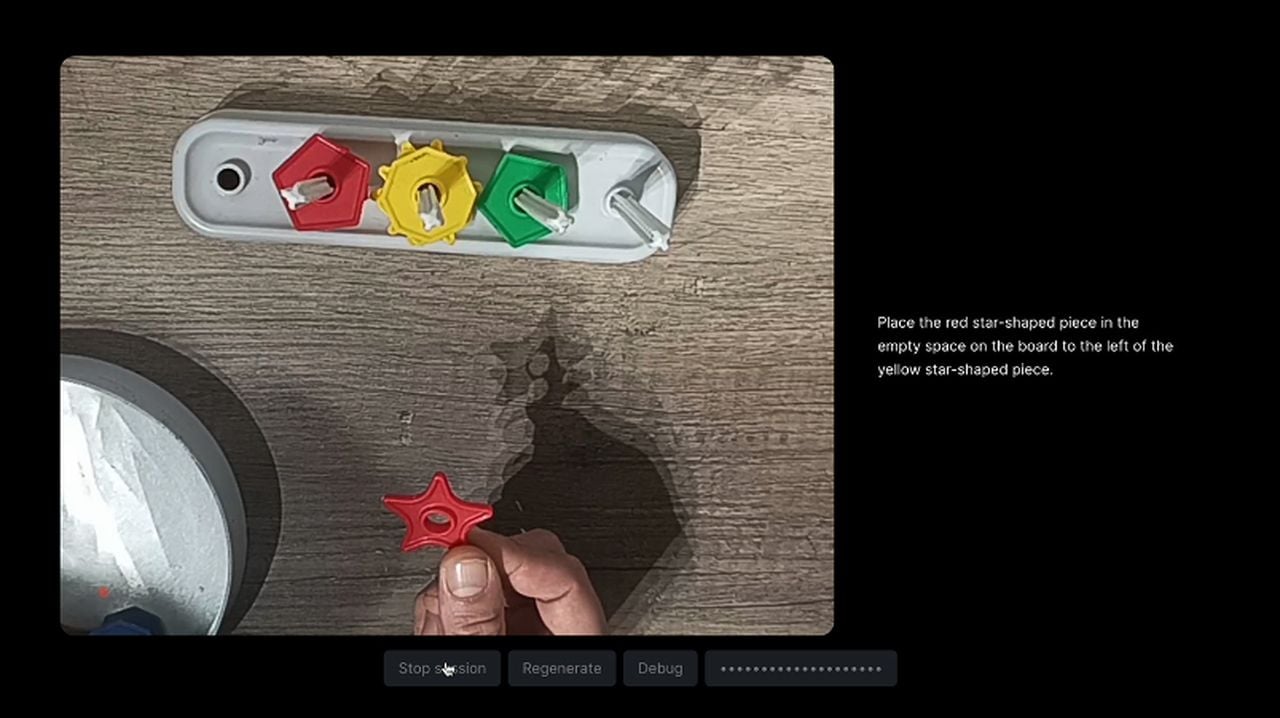

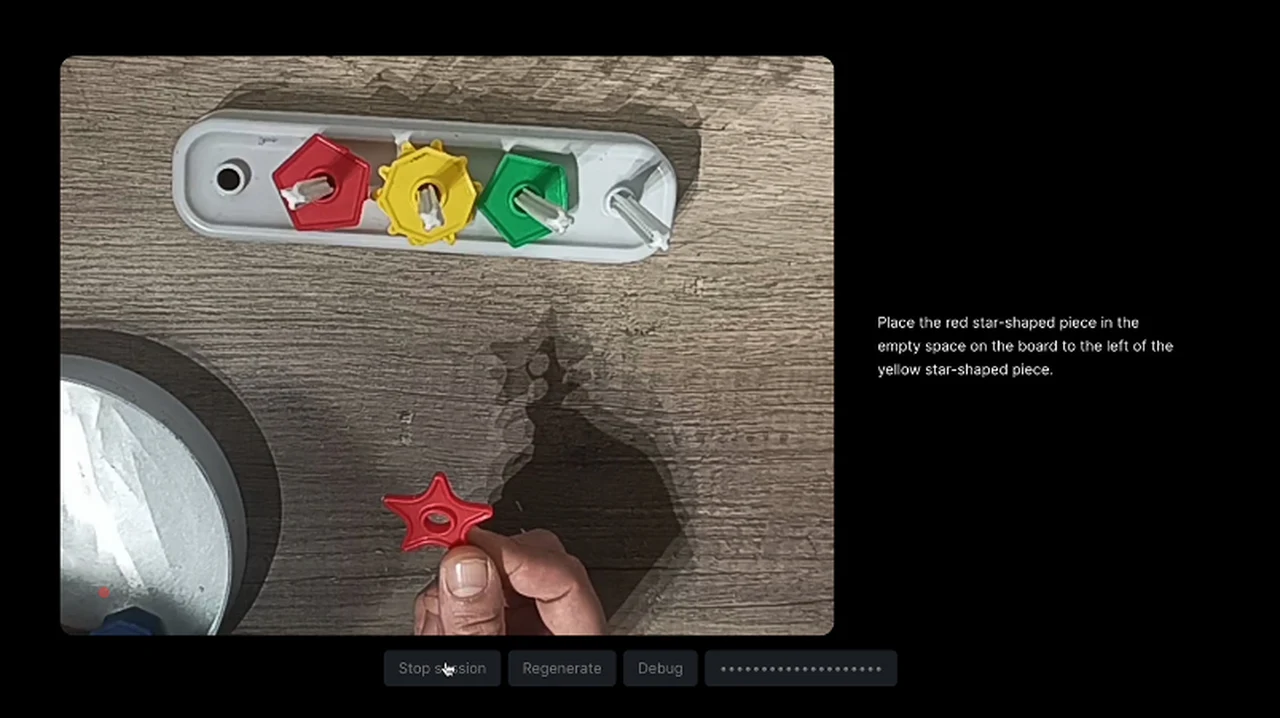

- Visualizations of Classic Models: A unique feature of Amphion is its visualizations, which are especially beneficial for those new to the field. These visual aids provide a clearer understanding of model architectures and processes.

- Versatility for Different Users: Whether you are setting up locally or integrating with online platforms like Hugging Face spaces, Amphion is adaptable. It comes with comprehensive guides and examples, making it accessible to a wide range of users.

- Reproducibility in Research: Amphion’s commitment to research reproducibility is clear. It supports classic models and structures while offering visual aids to enhance understanding.



Amphion’s Technical Aspects

Let’s delve into the more technical aspects of Amphion:

- Text to Speech (TTS): Amphion excels in TTS, supporting models like FastSpeech2 and VITS, known for their efficiency and quality.

- Singing Voice Conversion (SVC): SVC is a newer capability that relies on content-based features from pretrained models like WeNet and Whisper.

- Text to Audio (TTA): Amphion’s TTA capability uses a latent diffusion model, offering a sophisticated approach to audio generation.

- Vocoder Technology: Amphion’s vocoders include GAN-based models like MelGAN and HiFi-GAN, the flow-based WaveGlow, and the diffusion-based DiffWave.

- Evaluation Metrics: The toolkit ensures consistent quality in audio generation through its integrated evaluation metrics.
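To give a feel for what an objective evaluation metric does, here is a minimal sketch in plain Python. It compares the frame-level energy contours of a reference waveform and a generated one and reports their root-mean-square error. This is an illustration only: the function names (`frame_energies`, `energy_rmse`, `sine`) and the frame settings are assumptions made for this example, not Amphion’s API, and the article does not say which metrics the toolkit actually ships.

```python
import math


def frame_energies(samples, frame_len=256, hop=128):
    """Return the RMS energy of each frame of a mono waveform."""
    energies = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return energies


def energy_rmse(reference, generated, frame_len=256, hop=128):
    """Root-mean-square error between two frame-level energy contours."""
    ref = frame_energies(reference, frame_len, hop)
    gen = frame_energies(generated, frame_len, hop)
    n = min(len(ref), len(gen))  # compare only the overlapping frames
    if n == 0:
        raise ValueError("signals are shorter than one frame")
    return math.sqrt(sum((r - g) ** 2 for r, g in zip(ref[:n], gen[:n])) / n)


def sine(freq_hz, seconds=0.1, sample_rate=16000, amplitude=1.0):
    """Synthesize a test tone so the example needs no audio files."""
    count = int(seconds * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(count)]


# Hypothetical stand-ins for a ground-truth recording and a model output.
reference = sine(220.0)
quieter = sine(220.0, amplitude=0.5)

print(energy_rmse(reference, reference))  # identical signals score 0.0
print(energy_rmse(reference, quieter))    # a quieter copy scores above 0
```

A lower score means the generated audio tracks the loudness of the reference more closely. Real evaluation suites typically combine several such measures (spectral, pitch, intelligibility) rather than relying on any single number.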

Amphion offers a bridge connecting AI enthusiasts, researchers and sound engineers to the vast and evolving world of AI audio generation. Its ease of use, high-quality audio outputs, and commitment to research reproducibility position it as a valuable asset in the field. Whether you are a novice exploring the realm of TTS or an experienced professional, Amphion offers a comprehensive and user-friendly platform to enhance your work.

The open-source Amphion Text-to-Speech AI toolkit demonstrates the power and potential of open-source projects in advancing technology. It’s a testament to the collaborative spirit of the tech community, offering a resource that pairs technical quality with support for learning and innovation. So, if you’re looking to start or further your journey in audio generation, Amphion is a strong candidate. Its blend of advanced features, user-centric design, and commitment to research makes it a valuable resource in the field.
