Screenshots have emerged online of what may be the user interface for Spotify’s long-awaited Supremium tier. Or should we say “Enhanced Listening”? That is apparently the tier’s new name, according to Reddit user OhItsTom, who posted seven images of the potential update. They reveal what the new tier could look like on desktop and mobile devices, including some of the tools and text windows that may appear in the final product.

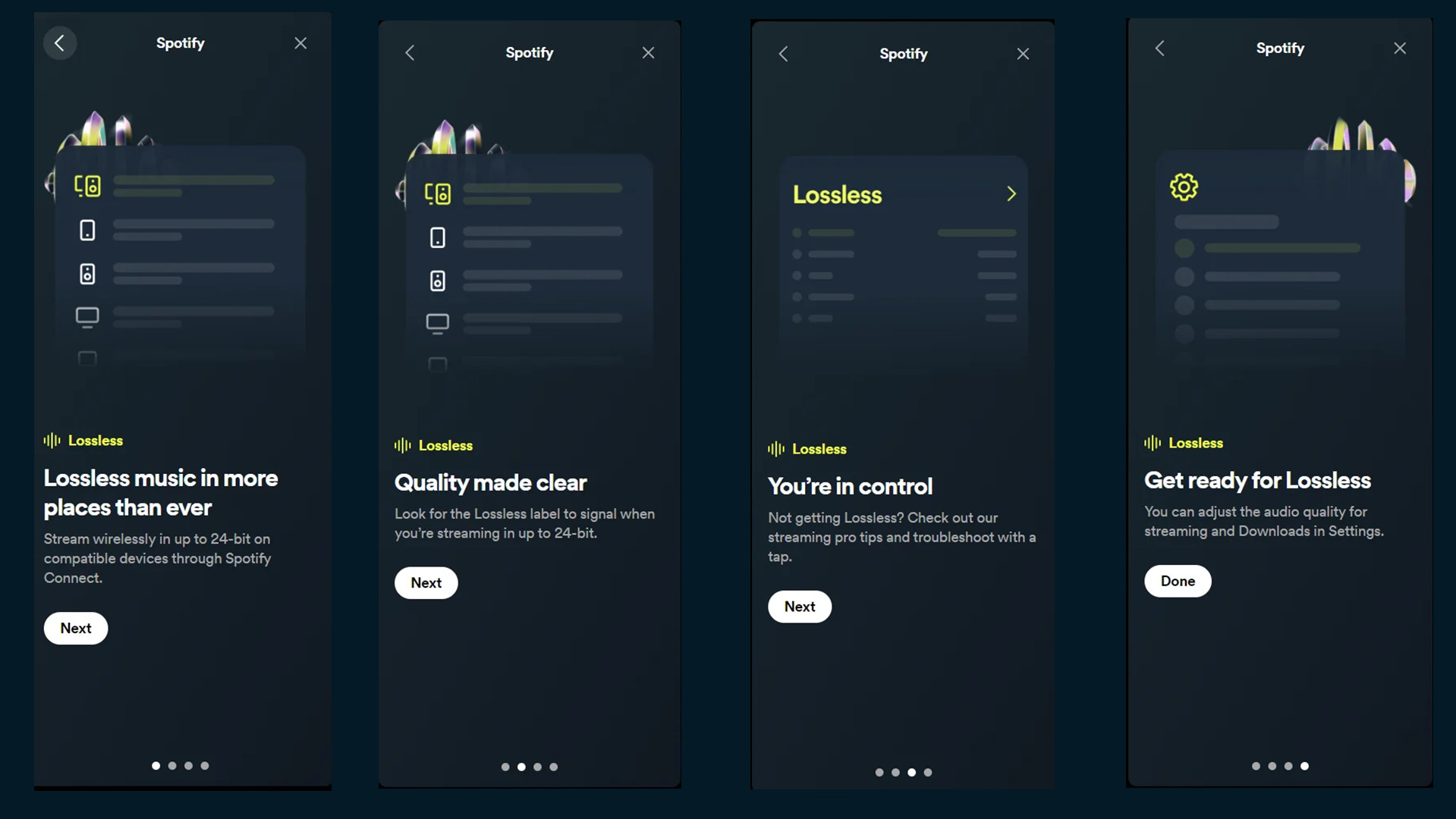

Based on the four smartphone screenshots, the hi-res audio feature will apparently be called Spotify Lossless. This set walks through an introductory guide explaining how the feature works. It states that subscribers can wirelessly stream music files “in up to 24-bit” via Spotify Connect on a compatible device. A Lossless label will seemingly light up to let you know when you’re streaming in the higher-quality format. Spotify also appears to offer “pro tips” and a troubleshooting tool in case you’re not receiving lossless audio. The last screenshot says you can adjust song quality “and Downloads in Settings.”

Spotify Lossless on desktop

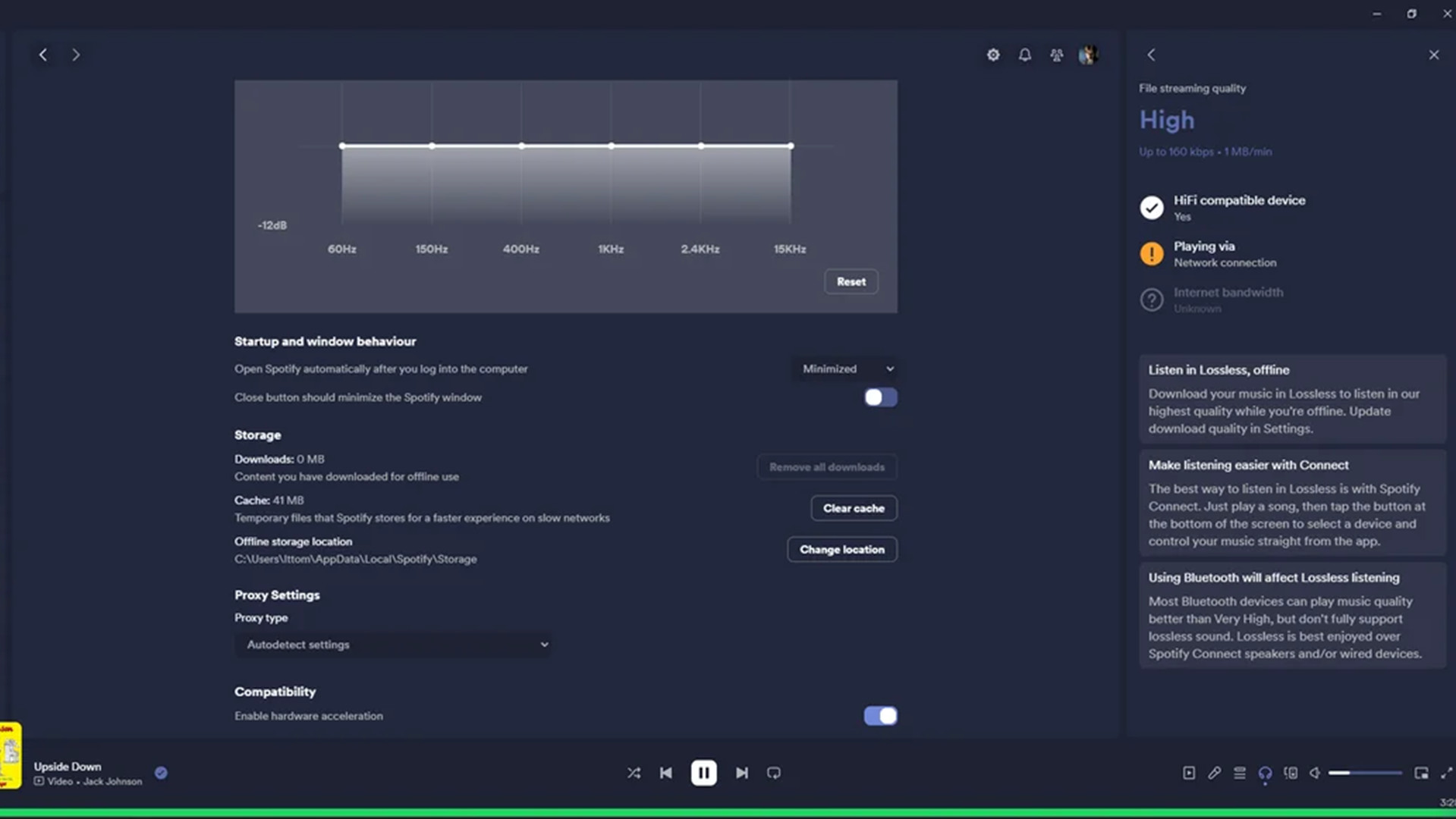

A lot of the important information can be found in the desktop screenshots. This set shows what seems to be a compatibility checker that tests whether your computer setup is good enough to stream hi-res music. At the top of the menu page is what we think is an audio frequency monitor, though we’re not entirely sure what it’s for. The display has a frequency readout running from 60Hz up to 15kHz, so it’ll presumably let users see the strength of their signal.

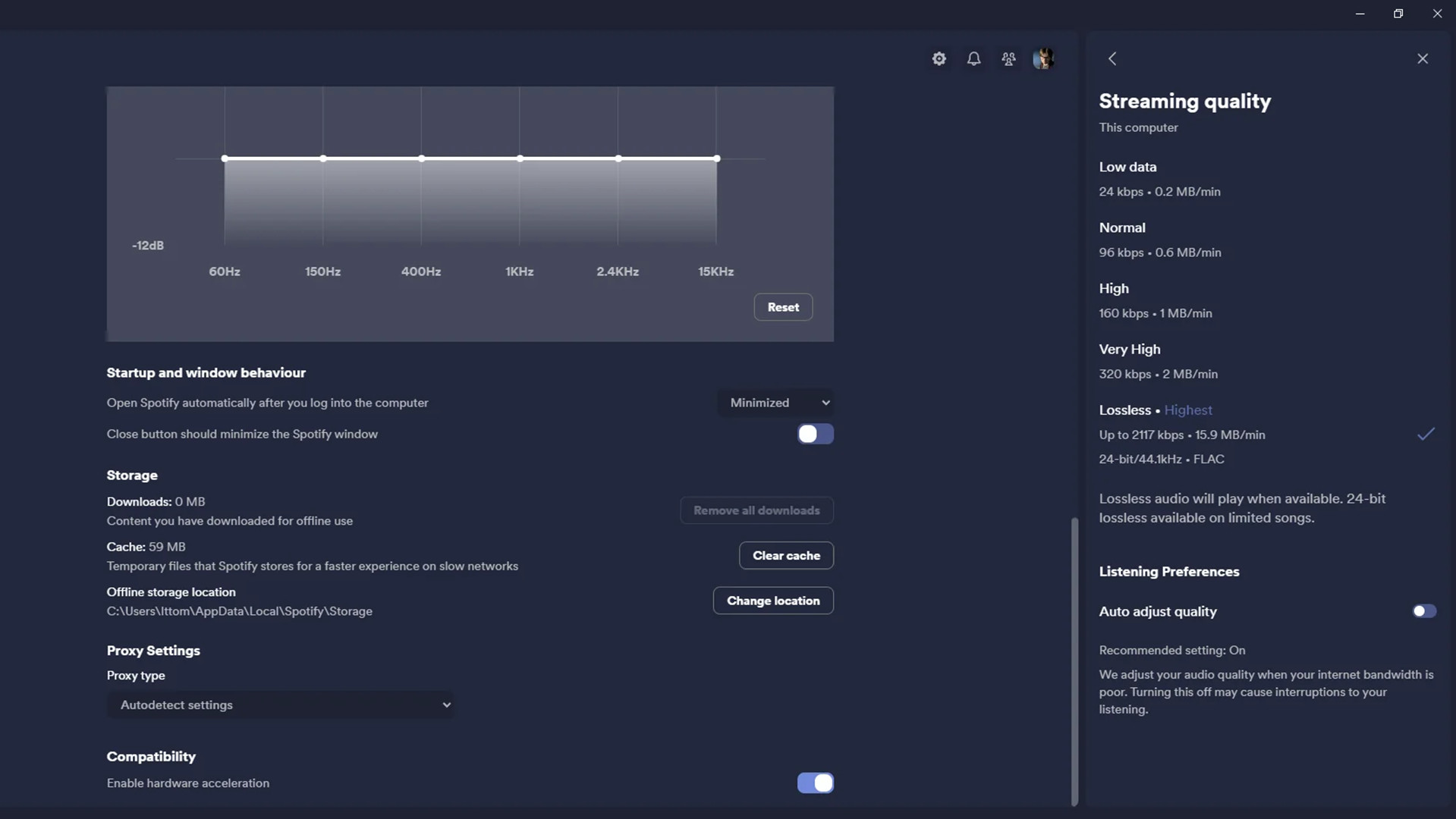

The first image reveals that the right side of the page will house Enhanced Listening options. When you connect to the service, Spotify will test whether your device is capable of streaming HiFi music. Then it’ll check what kind of connection you have and how much internet bandwidth is available. The app will then give you a score ranging from Low to Lossless, with Lossless being the best rating.

Lossless has a bitrate cap of 1,411 kbps, the same rate as uncompressed CD-quality audio (16-bit/44.1kHz stereo). 9To5Google points out in its coverage that this is lower than what Tidal offers, since Tidal’s streams top out at a 192kHz sample rate. So Spotify’s hi-res streams may not be as good as Tidal’s, although the average listener may not be able to tell the difference. However, the final screenshot shows it’ll be possible to raise the bitrate limit to 2,117 kbps. Text underneath that option adds that 24-bit lossless music in FLAC format will be available, but only for certain songs.
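Where do figures like 1,411 kbps and 2,117 kbps come from? Uncompressed stereo audio has a bitrate of sample rate × bit depth × number of channels. The short sketch below runs that arithmetic. It assumes two-channel (stereo) PCM, and it assumes 24-bit for Tidal’s 192kHz tier; neither assumption comes from the leak. The leak also doesn’t say which sample rate the 2,117 kbps option uses. The numbers simply happen to line up with 24-bit/44.1kHz. Keep in mind that FLAC is compressed, so real-world streams will usually need less bandwidth than these ceilings.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int = 2) -> float:
    """Raw (uncompressed) PCM bitrate in kilobits per second.

    Assumes stereo by default; FLAC-compressed streams typically use less.
    """
    return sample_rate_hz * bit_depth * channels / 1000


# Example formats: the first two match the leaked caps, the third is an
# assumed 24-bit/192kHz figure for Tidal's top tier.
formats = [
    ("16-bit/44.1kHz (CD quality)", 44_100, 16),
    ("24-bit/44.1kHz", 44_100, 24),
    ("24-bit/192kHz", 192_000, 24),
]

for label, rate, depth in formats:
    print(f"{label}: {pcm_bitrate_kbps(rate, depth):,.1f} kbps")
# 16-bit/44.1kHz (CD quality): 1,411.2 kbps
# 24-bit/44.1kHz: 2,116.8 kbps
# 24-bit/192kHz: 9,216.0 kbps
```

Rounded, the first two results match the 1,411 kbps and 2,117 kbps caps shown in the screenshots. That suggests, but does not confirm, that the boosted setting raises the bit depth rather than the sample rate.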

Promising update

A notice on the compatibility checker states that using a Bluetooth connection could negatively affect Lossless listening, since the standard doesn’t “fully support lossless sound”. It recommends using certified Spotify Connect hardware such as Amazon Alexa speakers instead.

Those are all the important details we could gather from the leak. Of course, take everything with a grain of salt.

There’s no word on a release date, but based on the leak, Spotify Enhanced Listening looks complete at a glance and might launch soon. Perhaps there are just a couple of bugs left to iron out. Fingers crossed that this is the case.

If you’re curious about how they made the features appear, OhItsTom says in a comment that they used Spicetify, a third-party customization tool for the Spotify client. They explain that they used the app to crack open the software and “forcefully enabled [the UI changes] using code”. Spicetify is free, although it requires some technical know-how to get the most out of it.

Be sure to check out TechRadar’s list of the best headphones for 2024.
