Anthropic has announced a new family of AI models named Claude 3, consisting of three models of different sizes: Haiku, Sonnet, and Opus. These models are vision language models (VLMs), capable of processing both text and images. Opus has been shown to outperform OpenAI’s GPT-4 on various benchmarks, including zero-shot performance on tasks such as grade school math and multilingual math problem-solving. The models are also faster, with Sonnet being twice as fast as Claude 2 and Haiku being the fastest of the three.

Additionally, the models have a lower refusal rate, a feature that distinguishes them from other large language models. Anthropic has also increased the context window size: the models can handle up to a million tokens, although this capability is not yet available via the API. Sonnet and Opus are already accessible on Anthropic’s API, with Haiku to be released soon. Pricing varies by model, with Opus being the most expensive and Haiku potentially offering a cost-effective solution with capabilities close to GPT-4.

Claude 3’s standout feature is its ability to handle multimodal tasks. Unlike older models that could work with only text or only images, Claude 3 models can manage both. This versatility opens the door to a range of applications, from enhancing search engines to creating more advanced chatbots. Opus, the most powerful of the three, has demonstrated impressive abilities, outperforming GPT-4 in tasks that it hasn’t been specifically trained for, such as basic math and solving problems in different languages.

When it comes to speed, the Claude 3 models are ahead of the game. Sonnet processes information twice as fast as its predecessor, Claude 2, and Haiku is even quicker, delivering rapid responses without compromising the quality of the output. These models are also less likely to refuse to answer a query, which is a significant step forward compared to other large language models.

Claude 3 AI Models Compared

Another advantage of the Claude 3 models is their expanded context window, which can handle up to a million tokens. This is especially useful for complex tasks that require a deep understanding of long conversations or documents. While the million-token capability isn’t available through the API yet, it shows that Anthropic is preparing to support more complex AI applications in the future.

For developers and companies looking to integrate AI into their services, API access is crucial. Anthropic has made Sonnet and Opus available through its API and plans to add Haiku soon. The pricing is structured in tiers, reflecting the different capabilities of each model. Opus is the most expensive, while Haiku will be a more cost-effective option that still offers competitive performance. The Claude 3 models are also designed to fit into existing platforms: they work with ecosystems such as Amazon Bedrock and Google’s Vertex AI, which means they can be adopted across different industries without much hassle.

As you consider the AI tools available for your projects, Anthropic’s Claude 3 AI models are worth your attention. Their ability to work with both text and images, their fast processing speeds, and their lower refusal rate make them strong competitors to GPT-4. The potential for a larger context window and API access, already open for Sonnet and Opus, add to their appeal. As you evaluate your options, think about the costs and how easily these models can be integrated into your work, and keep an eye out for Haiku, which could provide a balance of affordability and performance for your AI-driven initiatives.

The Claude 3 AI models introduced by Anthropic represent a significant advancement in the realm of AI, particularly in the vision-language model (VLM) domain. These models, named Haiku, Sonnet, and Opus, vary in size and capabilities, each designed to fulfill different computational and application requirements. Here’s a detailed comparison summary based on various aspects, leading to a conclusion that encapsulates their collective impact and individual strengths.

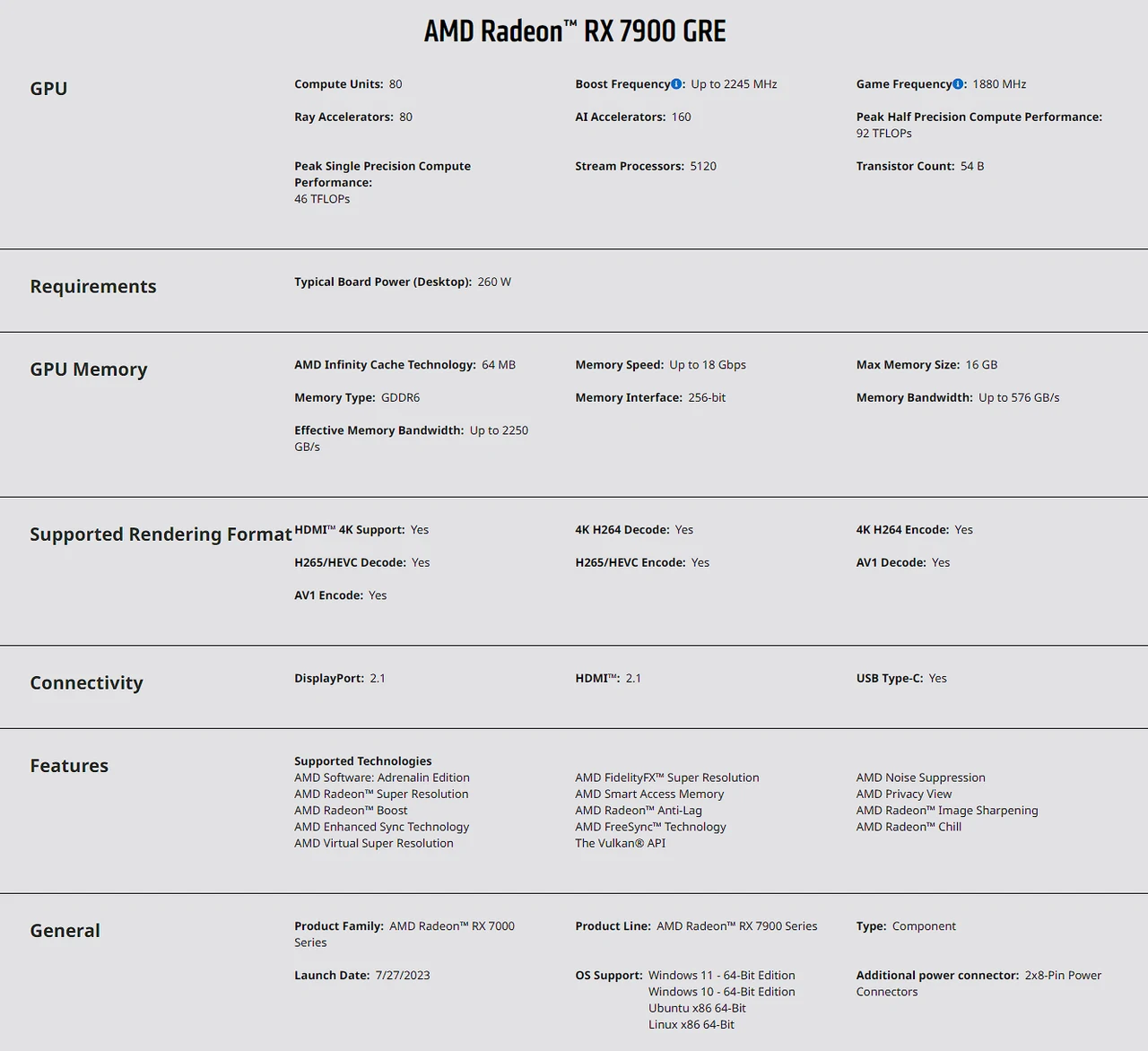

Claude 3 Opus

Stands out as the most advanced model, designed for executing highly complex tasks. It excels in navigating open-ended prompts and unexplored scenarios with a level of fluency and comprehension that pushes the boundaries of current AI capabilities. Opus is distinct in its higher intelligence, making it suitable for intricate task automation, research and development, and strategic analysis.

- Cost: $15 per million input tokens | $75 per million output tokens

- Context Window: Up to 200k tokens, with a 1M token capability for specific use cases upon request

- Primary Uses: Task automation, R&D, strategic analysis

- Differentiator: Unparalleled intelligence in the AI market

Claude 3 Sonnet

Achieves a balance between speed and intelligence, making it ideal for enterprise environments. It provides robust performance at a lower cost, engineered for endurance in extensive AI operations. Sonnet is tailored for data processing, sales enhancements, and efficiency in time-saving tasks, offering a cost-effective solution for scaling.

- Cost: $3 per million input tokens | $15 per million output tokens

- Context Window: 200k tokens

- Primary Uses: Data processing, sales optimization, code generation

- Differentiator: Offers a sweet spot of affordability and intelligence for enterprise workloads

Claude 3 Haiku

Designed for rapid response, handling simple queries and requests with unparalleled speed. This model is aimed at creating seamless AI interactions that closely mimic human responses, ideal for customer interactions, content moderation, and cost-saving operations, embodying efficiency and affordability.

- Cost: $0.25 per million input tokens | $1.25 per million output tokens

- Context Window: 200k tokens

- Primary Uses: Customer support, content moderation, logistics optimization

- Differentiator: Exceptional speed and affordability for its intelligence level
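
To make the price differences concrete, the per-million-token rates listed for the three models can be turned into a rough cost estimate. The following is a minimal sketch, not an official calculator: the `Pricing` class, the `PRICES` table, and the `estimate_cost` function are hypothetical names introduced here, and the rates are simply the ones quoted in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD, as quoted in this article."""
    input_per_million: float
    output_per_million: float


# Hypothetical lookup table; the keys are illustrative labels, not API model IDs.
PRICES = {
    "opus": Pricing(15.00, 75.00),
    "sonnet": Pricing(3.00, 15.00),
    "haiku": Pricing(0.25, 1.25),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request for the given model."""
    price = PRICES[model]
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    # Compare the same workload across all three models.
    for name in PRICES:
        print(f"{name}: ${estimate_cost(name, 10_000, 1_000):.5f}")
```

For a request with 10,000 input tokens and 1,000 output tokens, this works out to about $0.225 on Opus, $0.045 on Sonnet, and $0.00375 on Haiku, so the same workload costs 60 times more on Opus than on Haiku.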

Enhanced Capabilities Across Models

All three models exhibit advanced capabilities in adhering to complex, multi-step instructions and developing customer-facing experiences with trust. They excel in producing structured outputs like JSON, simplifying tasks such as natural language classification and sentiment analysis. This functionality enhances their utility across a broad spectrum of applications, from customer service automation to deep research and analysis.
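
Structured output is most useful when the receiving code checks it before relying on it. The sketch below shows one way an application might validate a JSON sentiment result. It does not call any model: the reply string is a made-up example, and the field names (`sentiment`, `confidence`), the label set, and the `parse_sentiment` function are assumptions chosen for illustration rather than a format defined by Anthropic.

```python
import json

# Hypothetical label set that the prompt would ask the model to choose from.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def parse_sentiment(reply: str) -> dict:
    """Parse a JSON sentiment reply and validate it; raise ValueError if malformed."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc

    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    label = data.get("sentiment")
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"unexpected sentiment label: {label!r}")

    confidence = data.get("confidence")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    return {"sentiment": label, "confidence": float(confidence)}


if __name__ == "__main__":
    # Made-up reply text standing in for a model response.
    example_reply = '{"sentiment": "positive", "confidence": 0.93}'
    print(parse_sentiment(example_reply))
```

Rejecting anything outside the expected schema keeps a malformed or unexpected reply from flowing silently into downstream classification logic.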

Model Availability and Access

- Opus and Sonnet are immediately available for use through Anthropic’s API, facilitating quick integration and usage by developers.

- Haiku is announced to be available soon, promising to extend the Claude 3 model family’s capabilities to even more applications.

- Sonnet powers the free experience on claude.ai, and Opus is available to Claude Pro subscribers. Availability on Amazon Bedrock and Google Cloud’s Vertex AI Model Garden is forthcoming.

The Claude 3 AI models by Anthropic represent a formidable advance in AI technology, each tailored to specific needs: high-end complex problem-solving with Opus, balanced intelligence and speed with Sonnet, and rapid response with Haiku. The detailed cost structure and potential uses give businesses and developers a clear guide for choosing the appropriate model based on their needs, budget constraints, and desired outcomes.

Each model has its distinct place within the AI ecosystem, catering to different needs and applications. The choice between them depends on specific requirements such as computational resources, performance needs, and cost considerations. Collectively, they mark a significant leap forward in making advanced AI more accessible and applicable across a broader spectrum of uses. To learn more about each model, visit the official Anthropic website.
