Developers, coders and enthusiasts may be interested in a new open source AI coding assistant: the DeepSeek large language model (LLM). DeepSeek, a company that’s been working under the radar, has recently released an open-source coding model that’s making waves in the tech community. This model, known as the DeepSeek coder model, has 67 billion parameters, a scale comparable to other large open models such as Llama 2 70B. The open source AI coding assistant has been trained from scratch on a vast dataset in both English and Chinese.

-

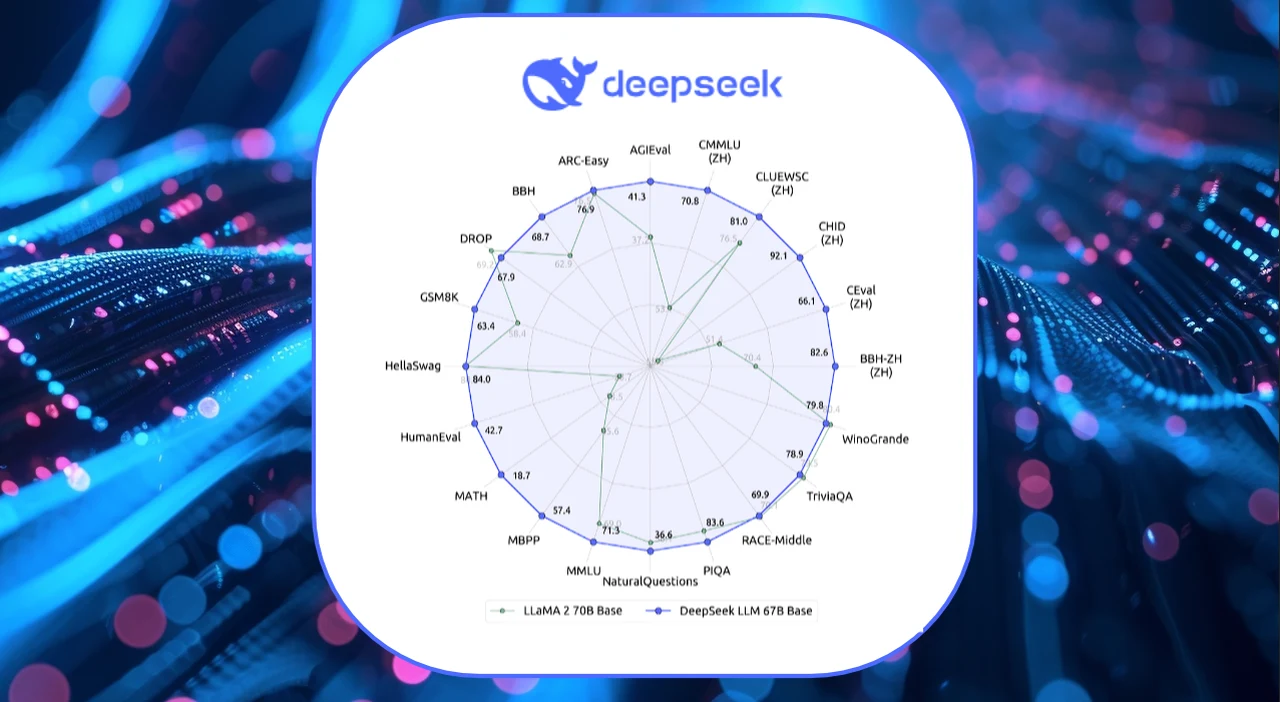

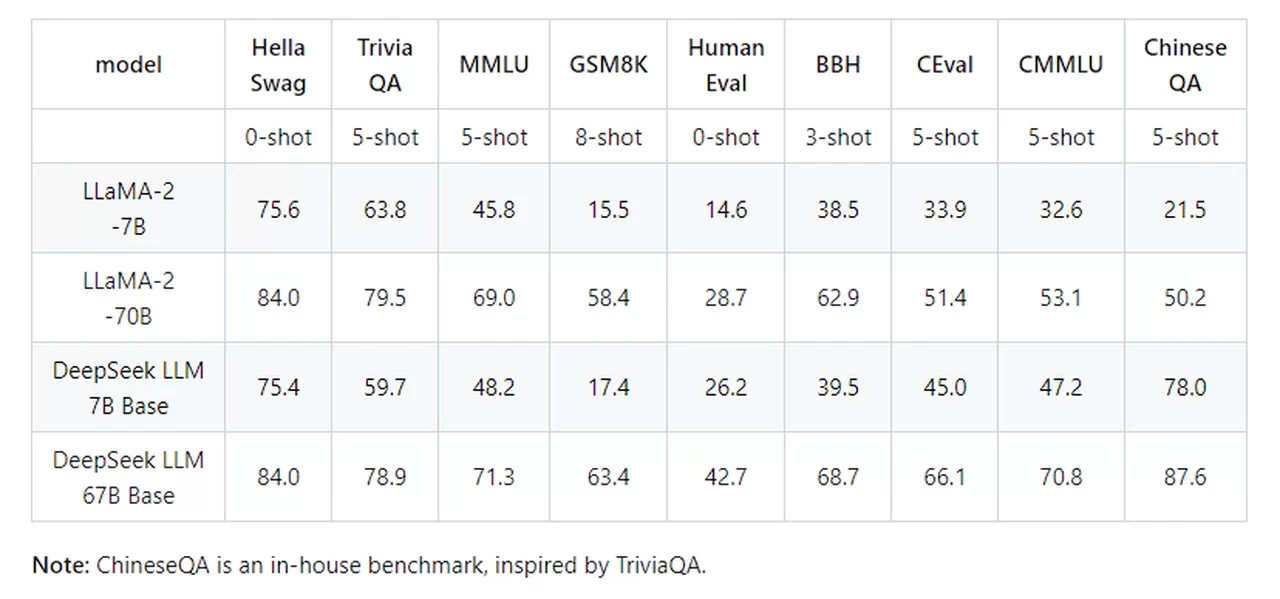

Superior General Capabilities: DeepSeek LLM 67B Base outperforms Llama2 70B Base in areas such as reasoning, coding, math, and Chinese comprehension.

-

Proficient in Coding and Math: DeepSeek LLM 67B Chat exhibits outstanding performance in coding (HumanEval Pass@1: 73.78) and mathematics (GSM8K 0-shot: 84.1, Math 0-shot: 32.6). It also demonstrates remarkable generalization abilities, as evidenced by its exceptional score of 65 on the Hungarian National High School Exam.

-

Mastery in Chinese Language: Based on our evaluation, DeepSeek LLM 67B Chat surpasses GPT-3.5 in Chinese.
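For readers unfamiliar with the "Pass@1" figure quoted for HumanEval, the sketch below shows the commonly used unbiased pass@k estimator from the paper that introduced the benchmark. The source does not say how DeepSeek computed its score, so treating this as their method is an assumption; the function names and the sample data are hypothetical and purely illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: number of samples generated for the problem
    c: number of those samples that passed the unit tests
    k: number of attempts the metric allows
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) / comb(n, k) is the chance that k samples drawn
    # without replacement are all failures; pass@k is the complement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical data: four problems, one sample each, three solved.
example_results = [(1, 1), (1, 0), (1, 1), (1, 1)]
example_score = benchmark_pass_at_k(example_results, k=1)
```

With a single sample per problem and k=1, the estimator reduces to the plain fraction of problems solved, which is how a Pass@1 percentage is usually read.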

What makes the DeepSeek coder model stand out is its extensive training on a dataset comprising two trillion tokens. This vast amount of data has given the model a wide-ranging understanding and knowledge base, allowing it to perform at levels that exceed Llama 2’s 70 billion base model and show competencies akin to GPT-3.5. This achievement has quickly made it a notable competitor in the AI landscape.

But DeepSeek didn’t stop there. They’ve been continuously improving their model. With the release of version 1.5, they’ve added an extra 1.4 trillion tokens of coding data to the model’s training, which has significantly enhanced its capabilities. This upgrade means that the DeepSeek coder model is now even more adept at handling complex tasks, such as natural language programming and mathematical reasoning. It’s become an essential tool for those who need to simplify intricate processes.

DeepSeek open source AI coding assistant

“We release the DeepSeek LLM 7B/67B, including both base and chat models, to the public. To support a broader and more diverse range of research within both academic and commercial communities, we are providing access to the intermediate checkpoints of the base model from its training process. Please note that the use of this model is subject to the terms outlined in License section. Commercial usage is permitted under these terms.”

The model’s versatility is also worth noting: it supports multiple languages, including Chinese, which opens up its benefits to a wider, international audience. This is particularly important as the demand for advanced AI technology grows across different regions and industries.

DeepSeek LLM vs LLaMA 2

For those interested in using the DeepSeek AI coding assistant, it’s readily available on platforms like Hugging Face and LM Studio, and can be downloaded in both 7 billion and 33 billion parameter versions. This accessibility means that users who need cutting-edge AI can easily integrate it into their work. The model predicts the next token in a sequence using a context window of 4K tokens, so its output can take a substantial amount of surrounding context into account. Additionally, the model has been fine-tuned on 2 billion tokens of instruction data, which is intended to help it understand and carry out complex instructions accurately.
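In practice, a fixed context window means the prompt and the generated text have to share the same token budget. The following is a minimal sketch of one way to trim a prompt to fit, assuming "4K" means 4,096 tokens and that dropping the oldest tokens is acceptable. The function name, its parameters, and the truncation policy are hypothetical and are not taken from DeepSeek’s tooling.

```python
def fit_to_window(prompt_tokens: list[int],
                  max_new_tokens: int,
                  window: int = 4096) -> list[int]:
    """Trim a tokenized prompt so prompt + generated tokens fit in the window.

    Keeps the most recent tokens and drops the oldest ones (an assumed
    policy; other strategies, such as summarizing, are equally possible).
    """
    if max_new_tokens < 0 or max_new_tokens >= window:
        raise ValueError("max_new_tokens must be in [0, window)")
    budget = window - max_new_tokens  # tokens left for the prompt
    return prompt_tokens[-budget:]


# Hypothetical example: a 5,000-token prompt with room for 256 new tokens.
long_prompt = list(range(5000))
trimmed = fit_to_window(long_prompt, max_new_tokens=256)
```

Here the trimmed prompt keeps only the last 3,840 tokens, leaving 256 positions in the window for the model’s reply.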

The research and development team behind this 67-billion-parameter language model has plans for its further development, and the DeepSeek AI coding assistant is likely just the start of their journey. They’ve hinted at future developments that could redefine the limits of AI models. This suggests that we can expect more innovative tools from DeepSeek that will continue to shape the future of various industries and applications.

The DeepSeek coder model is a significant step forward in the realm of open-source AI technology. With its advanced features and strong performance, it’s an excellent option for anyone in need of an AI model that specializes in coding and mathematics. As the AI community continues to expand, the DeepSeek coder model stands as a prime example of the kind of innovative, powerful, and adaptable tools that are driving progress across different fields. To give the AI coding assistant a try, jump over to the official DeepSeek Alpha website.
