Apple has recently introduced Ferret 7B, a multimodal large language model (MLLM) that marks a significant step forward for the company in artificial intelligence. The release signals Apple’s commitment to advancing AI and positions the company as a formidable player in the field. Ferret 7B is expected to integrate smoothly with both iOS and macOS, taking advantage of Apple silicon to give users a fluid experience.

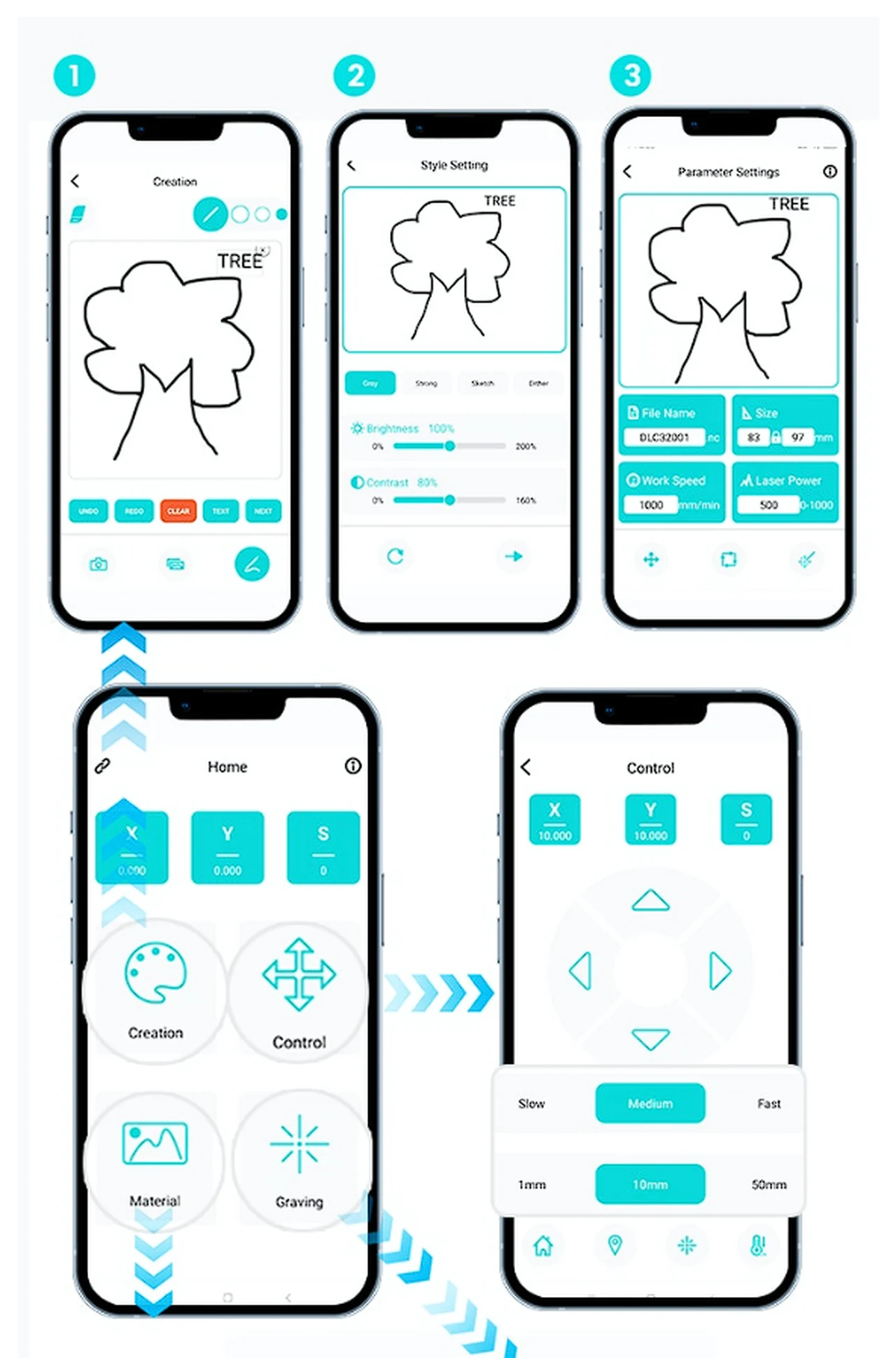

The standout feature of the Ferret 7B is its multimodal capabilities, which allow it to interpret and respond to content that combines images and text, including referring to and locating specific regions within an image. This goes beyond what traditional text-only AI models can do. Related capabilities are showcased in systems like the Google 5.2 coding model and Mixtral 8x7B, which run on Apple’s MLX platform and use its tools.

- Ferret Model – Hybrid Region Representation + Spatial-aware Visual Sampler enable fine-grained and open-vocabulary referring and grounding in MLLM.

- GRIT Dataset (~1.1M) – A Large-scale, Hierarchical, Robust ground-and-refer instruction tuning dataset.

- Ferret-Bench – A multimodal evaluation benchmark that jointly requires Referring/Grounding, Semantics, Knowledge, and Reasoning.

There’s buzz around the upcoming iOS 18, which is expected to incorporate AI more comprehensively, potentially transforming how users interact with Apple devices. The combination of AI advancements and Apple’s silicon architecture is likely to result in a more cohesive and powerful ecosystem for both iOS and macOS users.

Apple Ferret 7B MLLM

For those interested in the technical performance of the Ferret 7B, Apple has developed Ferret-Bench, a multimodal evaluation benchmark that jointly tests referring and grounding, semantics, knowledge, and reasoning. It gives developers and researchers a way to evaluate the model across a range of tasks.

Apple’s approach to AI is centered on creating practical applications that provide tangible benefits to users of its devices. The company’s dedication to this strategy is clear from its decision to release the Ferret 7B code and checkpoints publicly for research purposes. This move encourages further innovation and collaboration within the AI community.

Training complex models like the Ferret 7B requires considerable resources, and Apple has invested in this by using NVIDIA A100 GPUs. This reflects the company’s deep investment in AI research and development.

Apple multimodal large language model (MLLM)

It’s important to note the differences between the 7B and the larger 13B versions of the model. The 7B is likely tailored for iOS devices, carefully balancing performance with the constraints of mobile hardware. This strategic decision is in line with Apple’s focus on the user experience, ensuring that AI improvements directly benefit the user.

```shell
# 7B
python3 -m ferret.model.apply_delta \
    --base ./model/vicuna-7b-v1-3 \
    --target ./model/ferret-7b-v1-3 \
    --delta path/to/ferret-7b-delta

# 13B
python3 -m ferret.model.apply_delta \
    --base ./model/vicuna-13b-v1-3 \
    --target ./model/ferret-13b-v1-3 \
    --delta path/to/ferret-13b-delta
```
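The commands above combine Vicuna base weights with a Ferret delta and write the result to the `--target` directory. As a rough illustration of the idea only, the sketch below assumes the delta is a per-parameter offset that is added to the matching base parameter, which is how delta releases of this kind commonly work. It is not the actual `ferret.model.apply_delta` code, and the function name, types, and parameter names here are hypothetical.

```python
from typing import Dict, List

# Hypothetical, simplified stand-in for a checkpoint: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def apply_delta(base: Weights, delta: Weights) -> Weights:
    """Return merged weights, assuming target[name] = base[name] + delta[name]."""
    target: Weights = {}
    for name, delta_values in delta.items():
        if name not in base:
            # Assumption: parameters present only in the delta (for example,
            # newly added modules) are copied over unchanged.
            target[name] = list(delta_values)
            continue
        base_values = base[name]
        if len(base_values) != len(delta_values):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise addition of the offset to the base parameter.
        target[name] = [b + d for b, d in zip(base_values, delta_values)]
    return target


if __name__ == "__main__":
    base = {"layer.weight": [1.0, 2.0]}
    delta = {"layer.weight": [0.5, -1.0], "projector.weight": [3.0]}
    print(apply_delta(base, delta))
```

Distributing offsets rather than full weights means the base model still has to be obtained separately under its own license, which fits the usage notice that follows.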

Usage and License Notices: The data and code are intended and licensed for research use only. They are also restricted to uses that follow the license agreements of LLaMA, Vicuna, and GPT-4. The dataset is licensed under CC BY-NC 4.0 (allowing only non-commercial use), and models trained using the dataset should not be used outside of research purposes.

With the release of the Ferret 7B LLM, Apple has made a bold move in the AI space. The launch showcases the company’s technical prowess and its commitment to creating powerful, user-friendly AI. This development is set to enhance device functionality and enrich user interactions. As Apple continues to invest in AI, we can expect to see more innovations that will significantly impact how we interact with technology.
