IBM has teamed up with Korea Quantum Computing (KQC) in a strategic partnership that’s set to advance AI and quantum computing in Korea. This alliance is not just a handshake between two companies; it’s a fusion of IBM’s trailblazing AI software and quantum computing services with KQC’s ambition to push the boundaries of technology.

“We are excited to work with KQC to deploy AI and quantum systems to drive innovation across Korean industries. With this engagement, KQC clients will have the ability to train, fine-tune, and deploy advanced AI models, using IBM watsonx and advanced AI infrastructure. Additionally, by having the opportunity to access IBM quantum systems over the cloud, today—and a next-generation quantum system in the coming years—KQC members will be able to combine the power of AI and quantum to develop new applications to address their industries’ toughest problems,” said Darío Gil, IBM Senior Vice President and Director of Research.

This collaboration includes an investment in infrastructure to support the development and deployment of generative AI. Plans for the AI-optimized infrastructure include advanced GPUs and IBM’s Artificial Intelligence Unit (AIU), managed with Red Hat OpenShift to provide a cloud-native environment. Together, the GPUs and the AIU are being engineered to offer members state-of-the-art hardware to power AI research and business opportunities.

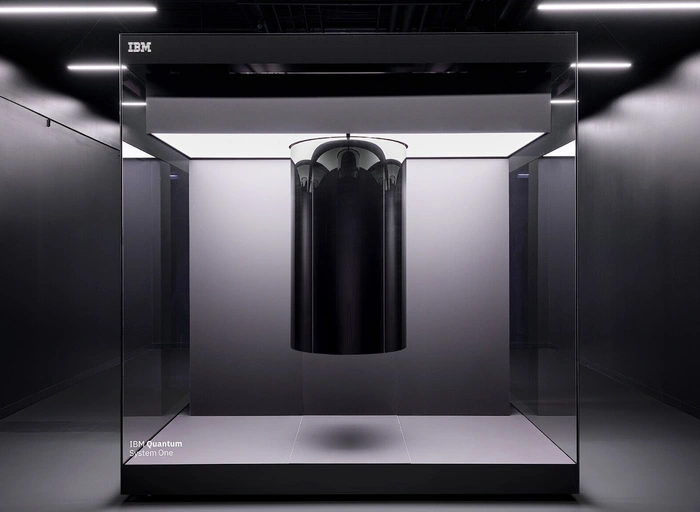

Quantum Computing

By 2028, KQC plans to install an IBM Quantum System Two at its Busan site. This isn’t just about getting its hands on new gadgets; it’s about weaving quantum computing into the very fabric of mainstream applications. To make this a reality, KQC is already on the move, beefing up its infrastructure with the latest GPUs and IBM’s Artificial Intelligence Unit (AIU), both optimized for AI workloads.

But what’s advanced technology without a solid foundation? That’s where Red Hat OpenShift comes into play. It’s the backbone that will manage this complex infrastructure, offering the scalable, cloud-native services that KQC needs to run its high-tech setup. And it doesn’t stop there. KQC also plans to use Red Hat OpenShift AI for management and runtime, and it is exploring generative AI technologies with IBM’s watsonx platform. These are the tools that will fuel the next wave of innovation and efficiency in AI.

Now, let’s talk about the ripple effect. This partnership isn’t just about KQC and IBM; it’s about sparking a fire of innovation across entire industries. Korean companies in finance, healthcare, and pharmaceuticals are joining the fray, eager to collaborate on research that leverages AI and quantum computing. The goal? To craft new applications that will catapult these industries into a new era of technological prowess.

The KQC-IBM partnership is more than a milestone for Korea’s tech landscape; it’s a beacon that signals a new dawn in the application of AI and quantum computing. With the integration of Red Hat OpenShift and the watsonx platform, KQC is not just boosting its capabilities; it’s setting the stage for groundbreaking research and innovation. This collaboration is a testament to the power of partnership and a shared commitment to shaping the future of industries with the finest technology available.
