South Korean AI startup HyperAccel partnered with SEMIFIVE, a platform-based SoC and ASIC designer, in January 2024 to develop the Bertha LPU.

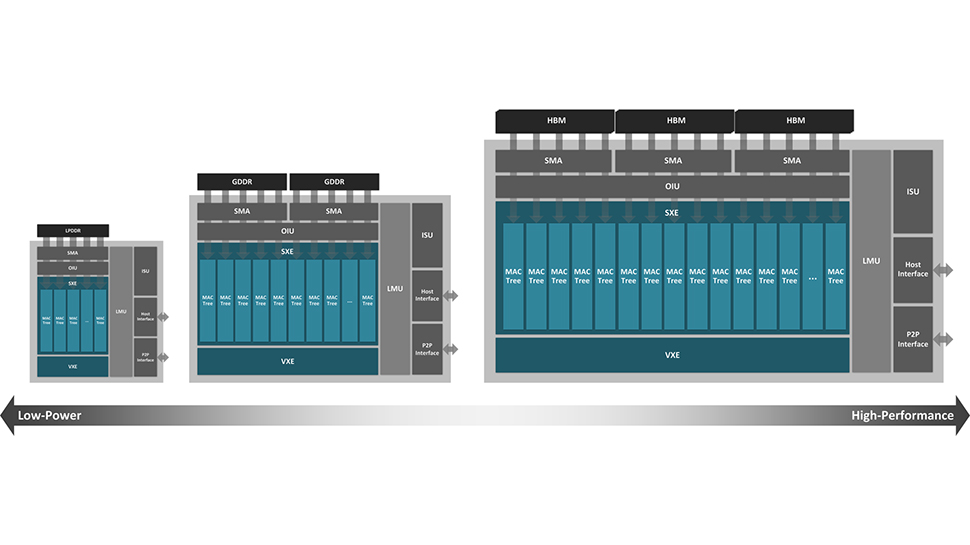

Designed for LLM inference, Bertha offers "low cost, low latency, and domain-specific features," with the goal of replacing "high-cost, low-efficiency" GPUs. SEMIFIVE reports that the design work is now complete and that the processor, built on a 4 nm process, will enter mass production in early 2026.

HyperAccel claims Bertha can deliver up to twice the performance and a price-performance ratio 19 times better than that of a typical supercomputer, but it faces stiff competition in a market where Nvidia's GPUs are firmly established.

Facing challenges

"We are delighted to work with SEMIFIVE, a leading provider of SoC platforms and comprehensive ASIC design solutions, to develop Bertha for mass production," said Ju Young Kim, CEO of HyperAccel. "By collaborating with SEMIFIVE, we are pleased to offer customers AI semiconductors that deliver more cost-effective and energy-efficient LLM capabilities compared with GPU platforms. This advance will significantly reduce data center operating expenses and expand our business to other industries that require LLMs."

Groq, an AI competitor based in Silicon Valley and led by former Google engineer and CEO Jonathan Ross, has already made major strides with its own LPU product, which focuses on high-speed AI inference.

Groq's technology, which provides cloud and on-premises inference at scale for AI applications, has already found a large audience, with more than 525,000 developers using its LPU since its launch in February. Bertha's late arrival may put it at a disadvantage.

Brandon Chu, CEO and co-founder of SEMIFIVE, is more optimistic about Bertha's chances. "HyperAccel is a company with the most efficient and scalable LPU technology for LLMs. With demand for LLM computing growing exponentially, HyperAccel has the potential to become a new force in global processor infrastructure," he said.

Bertha's focus on efficiency could appeal to companies looking for alternatives that cut operating costs, but given Nvidia's unmatched dominance, HyperAccel's product may find itself fighting for a niche in an already crowded space rather than becoming a leader in artificial intelligence.
