OpenAI has introduced Sora, an AI model that generates videos up to 60 seconds long from nothing more than a text prompt. For anyone following the frontiers of AI and video production, Sora is not just an incremental advance but an opening onto a new range of possibilities.

What Sets Sora Apart?

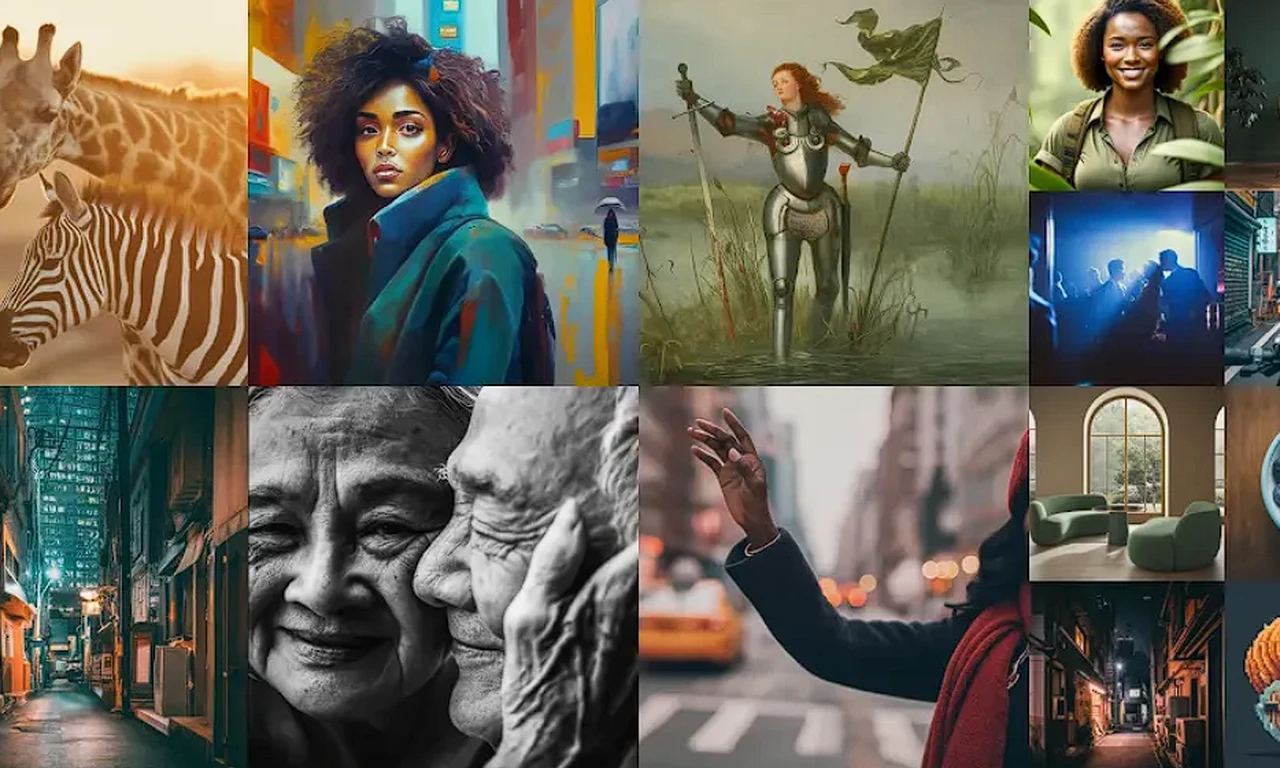

Imagine typing a description and watching it come to life as a detailed video with dynamic camera movements and emotionally expressive characters. That is what Sora does. Generating visuals this intricate is a significant leap from earlier text-to-video models, which were limited to much shorter clips. Whether the prompt describes a serene natural landscape or a bustling urban setting, Sora renders it in vivid detail.

Diving into Sora’s Capabilities

For those curious about the extent of what Sora can achieve, the examples shared by OpenAI are nothing short of astonishing. From the majestic approach of woolly mammoths to the thrilling escapades of a spaceman, the model showcases an impressive range of scenarios. Each example is a testament to the model’s versatility and its potential to revolutionize content creation across various domains.

Navigating the Road to Wider Accessibility

Access to Sora is currently limited while OpenAI tests and improves the model through red teaming, a process in which testers deliberately probe a system for flaws and potential misuse. This phase is essential for refining the model’s capabilities and ensuring it meets the standards expected by creators and the wider tech community. If you are wondering how to get your hands on Sora, patience is key while OpenAI works toward broader availability.

The Future of Content Creation Unfolds

Sora opens new doors for content creators by enabling the generation of minute-long videos from detailed prompts. This advancement holds immense potential for storytelling, educational content, marketing, and entertainment, offering a new canvas for creativity and innovation.

Reflecting on Progress and Looking Forward

The evolution from the AI video generation capabilities of a year ago to what Sora offers today is stark. This rapid progress not only highlights the leaps being made in AI technology but also hints at the future possibilities that remain unexplored.

A New Era for Creatives

While advancements like Sora may raise questions about the future of traditional video production roles, it’s essential to view these tools as allies in the creative process. Rather than replacing human creativity, Sora is poised to enhance it, providing new ways for creatives to express their visions and tell their stories.

Sora by OpenAI is more than a technological marvel: it expands what filmmakers, marketers, educators, and other content creators can do. With continued advancements and broader access promised, video production looks set to change substantially, reshaping how we create, share, and engage with visual narratives.

Source: Matt Wolfe
