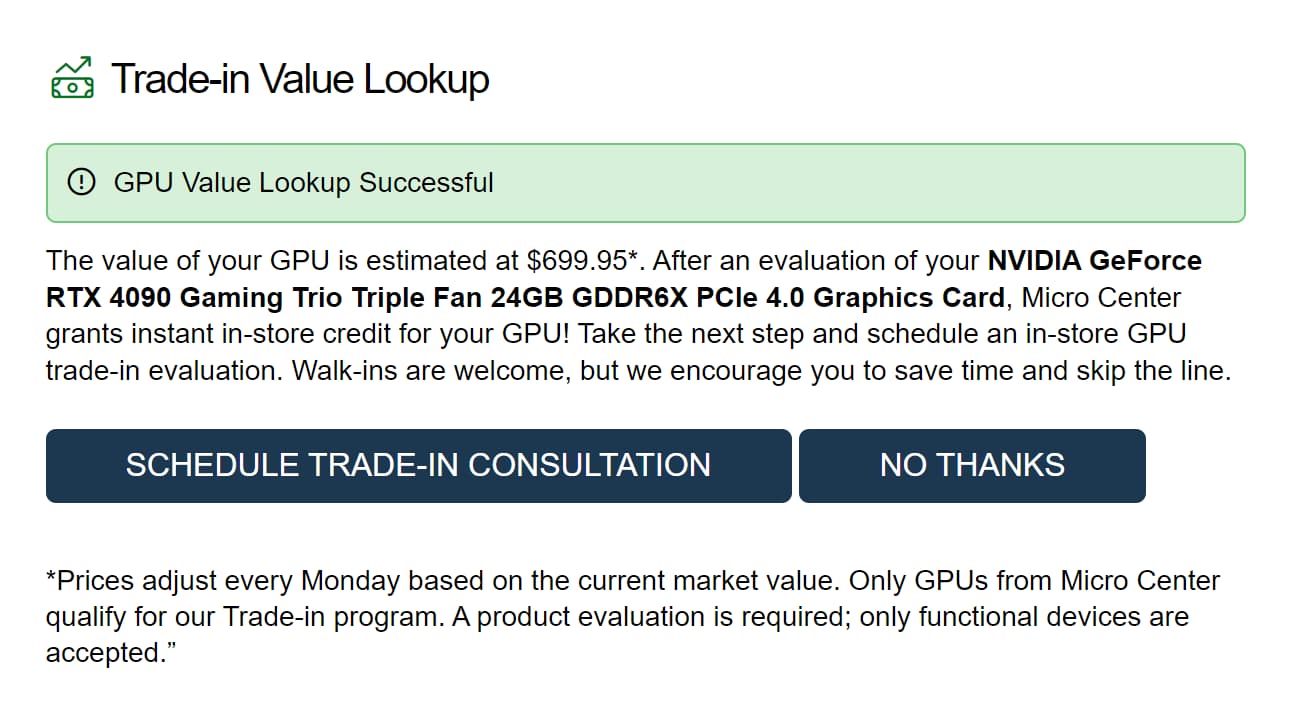

A story recently made headlines after a would-be seller discovered just how low Microcenter's trade-in offer is for an Nvidia GeForce RTX 4090 graphics card.

The retailer offered only $700 for a card that currently sells for nearly $2,000 on its own online store, less than half its retail price. Keep in mind that this is a current-generation, high-end component, easily the best graphics card available right now, not something from two generations ago.

Of course, several factors affect trade-in value, including the condition of the product in question. However, Wccftech reported that this figure came from a simple lookup on Microcenter's website, meaning it is the standard offer rather than one adjusted for a specific card's condition. Compare that to Newegg's offer of about $1,500, more than twice as much, and the discrepancy is striking.

Unlike Newegg, Microcenter is also a brick-and-mortar retailer, so its reach extends beyond online shoppers to more casual customers who may not realize how poor that trade-in offer is compared to its competitors'. That is something it shares with another massive chain that buys and sells used products: Gamestop.

Though far from its peak as the most popular gaming retail chain in the US, Gamestop is still widely known and attracts plenty of customers, including casual shoppers who simply want whatever game or console is most popular and are willing to trade in older products to get it.

Gamestop has long been infamous for how little it offers customers for trade-ins, to the point of becoming an internet meme, yet it still attracts plenty of business. At one point, the retailer was estimated to make nearly $1 billion in profit from the used trade-in market alone. The company earned 48 cents of gross profit on every dollar of revenue from its pre-owned games and consoles, which it achieved by reselling the used products it bought at much higher prices.

So when I see a large retailer offer such an abysmal trade-in value for a very recent product that it is guaranteed to resell at a premium, I get flashbacks to a Gamestop employee cheerfully telling me that Final Fantasy X and Madden 2005 together were worth just over $3 in trade-in credit.

Hopefully, word will spread and encourage more sellers to shop around for better offers rather than accept Microcenter's paltry one, and maybe even dissuade Microcenter from low-balling its customers like this. This behavior needs to be nipped in the bud now, before it poisons the well and makes buying and selling used components that much harder in the future.
