Two years after the debut of its Arc Alchemist GPUs, Intel is launching six new Arc products, but these are designed for edge/embedded systems.

These edge systems, which process data near the source to reduce latency and bandwidth use, are becoming increasingly essential in areas such as IoT, autonomous vehicles, and AI applications.

As Intel says, “AI at the edge is exploding with new use cases and workloads being developed daily. These AI workloads often require a high degree of parallel processing and memory bandwidth for peak performance, dedicated hardware, optimized architecture for compute efficiency, and reduced latency with faster results for real-time processing. A discrete GPU may be the ideal solution for edge AI use cases requiring high performance and complex model support.”

Six SKUs

The new Arc on edge GPUs are built on Intel’s scalable Xe-core architecture and support AI acceleration, visual computing and media processing. Using the OpenVINO toolkit, developers can deploy AI models across Intel hardware.
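As a rough illustration of what that deployment looks like, the sketch below uses OpenVINO's Python API to compile a model for a GPU when one is reported, falling back to the CPU otherwise. It is not taken from Intel's announcement. It assumes OpenVINO 2023.1 or later (where `import openvino as ov` is the entry point), that the Arc card shows up under OpenVINO's GPU plugin as "GPU" or "GPU.0", and that `model.xml` is a hypothetical, already converted model file. The helper name `choose_device` is also hypothetical.

```python
"""Sketch: pick an OpenVINO device (GPU first, then CPU) and compile a model for it."""
from typing import Sequence


def choose_device(available: Sequence[str], preferred: Sequence[str] = ("GPU", "CPU")) -> str:
    """Return the first available device matching a preferred device family.

    OpenVINO lists devices as e.g. "CPU" and "GPU", or "GPU.0", "GPU.1"
    when several GPUs are present. choose_device is a hypothetical helper.
    """
    for family in preferred:
        for device in available:
            if device == family or device.startswith(family + "."):
                return device
    # Nothing preferred was found: let OpenVINO's AUTO plugin choose a device.
    return "AUTO"


def compile_for_best_device(model_path: str):
    """Read a model and compile it for the chosen device (assumes OpenVINO >= 2023.1)."""
    import openvino as ov  # imported lazily so choose_device() works without OpenVINO

    core = ov.Core()
    device = choose_device(core.available_devices)
    model = core.read_model(model_path)  # OpenVINO IR (.xml + .bin) or ONNX
    compiled = core.compile_model(model, device)
    print(f"Compiled {model_path} for {device}")
    return compiled
```

On a machine with an Arc card and the GPU driver installed, `compile_for_best_device("model.xml")` would be expected to target the GPU; on a system without one it should drop back to the CPU without code changes, which is the portability across Intel hardware that OpenVINO is pitched on.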

The Arc on edge offerings have a number of benefits, including reduced latency, improved bandwidth efficiency and better privacy and security.

For high performance, heavy AI workloads and demanding use cases such as facial recognition and generative conversational speech, there’s the 7XXE. For immersive visual experiences and enhanced AI inferencing, there’s the 5XXE, and for low-power and small-form-factor designs, Intel offers the 3XXE.

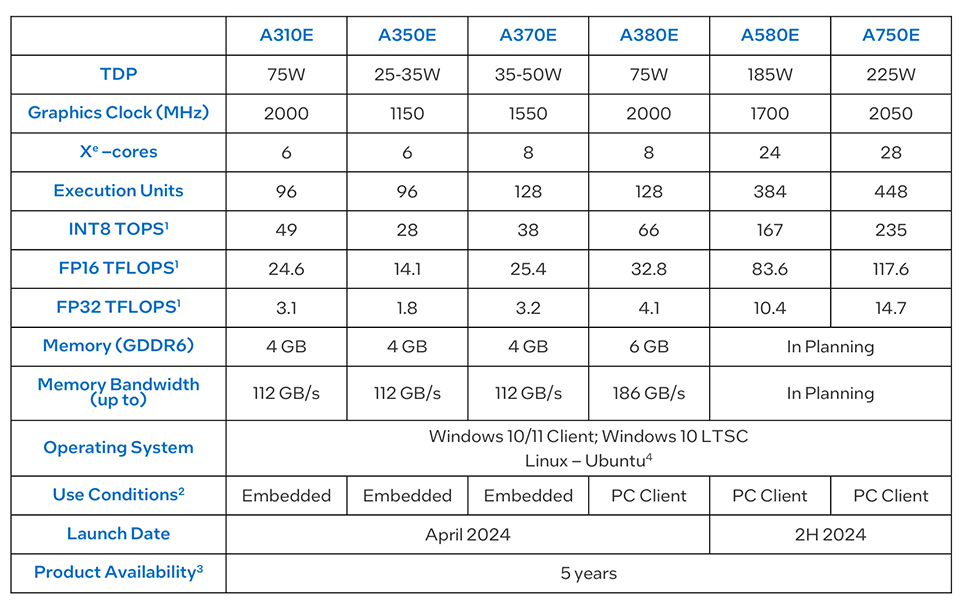

There are six SKUs: the A310E and A350E with 6 Xe-cores, the A370E and A380E with 8 Xe-cores, and the A580E and A750E with 28 Xe-cores. The A310E, A350E and A370E have 4GB of GDDR6 memory with 112GB/s of memory bandwidth, while the A380E has 6GB with 186GB/s. Memory capacity and bandwidth for the A580E and A750E have not been announced; Intel says only that they are “in planning”, and neither has a launch date yet, just TBD. The other four SKUs will be available this month.

Intel Arc GPUs are built to be paired with Intel Core processors from 10th Gen onward, as well as Intel Xeon W-3400 and W-2400 processors. A number of products featuring the new Arc GPUs are set to be released in the coming months by Intel partners including ADLINK, Advantech, Asus, Matrox and Sparkle.

More from TechRadar Pro
