Trapped atomic ions are among the most advanced technologies for realizing quantum computation and quantum simulation, based on a combination of high-fidelity quantum gates1,2,3 and long coherence times7. These have been used to realize small-scale quantum algorithms and quantum error correction protocols. However, scaling the system size to support orders-of-magnitude more qubits8,9 seems highly challenging10,11,12,13. One of the primary paths to scaling is the quantum charge-coupled device (QCCD) architecture, which involves arrays of trapping zones between which ions are shuttled during algorithms13,14,15,16. Challenges arise, however, from the intrinsic nature of the radio-frequency (rf) fields, which require specialized junctions for two-dimensional (2D) connectivity between different regions of the trap. Although junctions have been demonstrated successfully, they require dedicated large-footprint regions of the chip that limit trap density17,18,19,20,21. This adds to several other undesirable features of the rf drive that make micro-trap arrays difficult to operate6, including substantial power dissipation due to the currents flowing in the electrodes, and the need to co-align the rf and static potentials of the trap to minimize micromotion, which affects gate operations22,23. Power dissipation is likely to be a severe constraint in trap arrays of more than 100 sites5,23.

An alternative to rf electric fields for radial confinement is a Penning trap, which uses only static electric and magnetic fields. This is an extremely attractive feature for scaling because it eliminates rf power dissipation and places no geometrical restrictions on the positions of the ions23,24. Penning traps are a well-established tool for precision spectroscopy with small numbers of ions25,26,27,28, and quantum simulation and quantum control have been demonstrated in crystals of more than 100 ions29,30,31. However, the single trap site used in these approaches does not provide the flexibility and scalability necessary for large-scale quantum computing.

Extending the idea of the QCCD architecture, we envision a Penning QCCD as a scalable approach in which a micro-fabricated electrode structure traps ions at many individual sites that can be actively reconfigured during an algorithm by changing the electric potential. Beyond the static arrays considered in previous work23,32, we envisage bringing ions in separate sites close to each other so that their Coulomb interaction can be used for two-qubit gate protocols driven by applied laser or microwave fields33,34, before transporting them to other locations for further operations. The main advantage of this approach is that ions can be transported in three dimensions almost arbitrarily without specialized junctions, enabling flexible and deterministic reconfiguration of the array with low spatial overhead.

In this study, we demonstrate the fundamental building block of such an array by trapping a single ion in a cryogenic micro-fabricated surface-electrode Penning trap. We demonstrate quantum control of its spin and motional degrees of freedom and measure a heating rate lower than in any comparably sized rf trap. We use this system to demonstrate flexible 2D transport of ions above the electrode plane with negligible heating of the motional state. This provides a key ingredient for scaling based on the Penning ion-trap QCCD architecture.

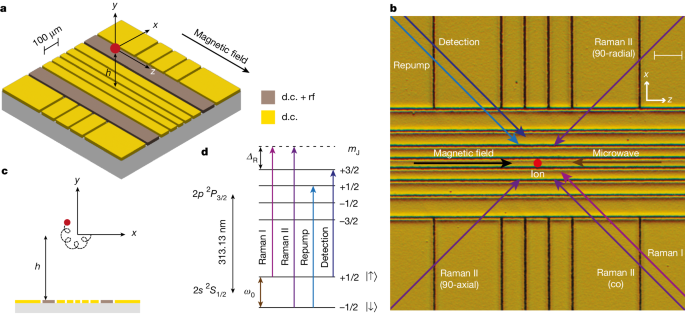

The experimental setup involves a single beryllium (\({}^{9}{\rm{Be}}^{+}\)) ion confined by a static quadrupolar electric potential, generated by applying voltages to the electrodes of a surface-electrode trap with the geometry shown in Fig. 1a–c. We use a radially symmetric potential \(V(x,y,z)=m{\omega }_{z}^{2}({z}^{2}-({x}^{2}+{y}^{2})/2)/(2e)\), centred 152 μm above the chip surface. Here, m is the mass of the ion, ωz is the axial frequency and e is the elementary charge. The trap is embedded in a homogeneous magnetic field of magnitude B ≃ 3 T aligned along the z-axis, supplied by a superconducting magnet. The trap assembly is placed in a cryogenic ultrahigh-vacuum chamber that fits inside the magnet bore, with the aim of reducing background-gas collisions and motional heating. We load the trap by resonance-enhanced multiphoton ionization, using a laser at 235 nm, of neutral atoms produced by either a resistively heated oven or an ablation source35. We regularly trap single ions for more than a day, with user interference being the primary loss mechanism. Further details about the apparatus can be found in the Methods.
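The quadrupole potential above can be checked with a few lines of arithmetic. The minimal sketch below evaluates the analytic force and curvatures of V; the function names are illustrative, and the beryllium-9 ion mass `M_ION` is an assumed value (neutral atomic mass minus one electron mass). It shows that V satisfies Laplace's equation, so the electric force is restoring along z but pushes the ion outward in the radial plane, which is why the magnetic field is needed for radial confinement.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge e (C)
AMU = 1.66053906660e-27      # atomic mass unit (kg)
M_ION = 9.0116 * AMU         # assumed 9Be+ mass (kg): atomic mass minus one electron

def quadrupole_potential(x, y, z, omega_z, m=M_ION, e=E_CHARGE):
    """V = m*omega_z^2*(z^2 - (x^2 + y^2)/2)/(2e) in volts, measured from the trap centre."""
    return m * omega_z**2 * (z**2 - (x**2 + y**2) / 2) / (2 * e)

def electric_force(x, y, z, omega_z, m=M_ION):
    """Force -e*grad(V) on the ion (N), from the analytic gradient of V."""
    k = m * omega_z**2
    return (k * x / 2, k * y / 2, -k * z)   # outward radially, restoring axially

def curvatures(omega_z, m=M_ION, e=E_CHARGE):
    """Second derivatives (d2V/dx2, d2V/dy2, d2V/dz2) in V/m^2."""
    c = m * omega_z**2 / e
    return (-c / 2, -c / 2, c)

omega_z = 2 * math.pi * 2.5e6          # axial frequency used for most measurements
fx, fy, fz = electric_force(1e-6, 0.0, 1e-6, omega_z)
print(f"Fx = {fx:+.2e} N, Fz = {fz:+.2e} N")
print("Laplacian of V:", sum(curvatures(omega_z)))
# Recover omega_z from the axial restoring force F_z = -m*omega_z^2*z at z = 1 um
print("omega_z/2pi (MHz):", math.sqrt(-fz / (M_ION * 1e-6)) / (2 * math.pi) / 1e6)
```

The radial force grows linearly with distance from the centre, so without the magnetic field the ion would be expelled radially, consistent with Earnshaw's theorem for purely static electric fields.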

The three-dimensional (3D) motion of an ion in a Penning trap can be described as a sum of three harmonic eigenmodes. The axial motion along z is a simple harmonic oscillator with frequency ωz. The radial motion is composed of modified-cyclotron (ω+) and magnetron (ω−) components, with frequencies ω± = ωc/2 ± Ω, where \(\varOmega =\sqrt{{\omega }_{{\rm{c}}}^{2}-2{\omega }_{z}^{2}}/2\) (ref. 36) and ωc = eB/m ≃ 2π × 5.12 MHz is the bare cyclotron frequency. Voltage control over the d.c. electrodes of the trap enables the axial frequency to be set to any value up to the stability limit, ωz ≤ ωc/\(\sqrt{2}\) ≃ 2π × 3.62 MHz. This corresponds to a range 0 ≤ ω− ≤ 2π × 2.56 MHz and 2π × 2.56 MHz ≤ ω+ ≤ 2π × 5.12 MHz for the magnetron and modified-cyclotron modes, respectively. Doppler cooling of the magnetron mode, which has a negative total energy, is achieved using a weak axialization rf quadrupolar electric field (less than 60 mV peak-to-peak voltage on the electrodes) at the bare cyclotron frequency, which resonantly couples the magnetron and modified-cyclotron motions37,38. For the wiring configuration used in this work, the null of the rf field is produced at a height h ≃ 152 μm above the electrode plane. Aligning the null of the d.c. (trapping) field to the rf null is beneficial because it reduces the driven radial motion at the axialization frequency; nevertheless, we find that Doppler cooling works with a relative displacement of tens of micrometres between the d.c. and rf nulls, albeit with lower efficiency. The rf field is required only during Doppler cooling, and not, for instance, during coherent operations on the spin or motion of the ion. All measurements in this work are taken at an axial frequency ωz ≃ 2π × 2.5 MHz, unless stated otherwise. The corresponding radial frequencies are ω+ ≃ 2π × 4.41 MHz and ω− ≃ 2π × 0.71 MHz.
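The mode frequencies quoted above follow directly from ωc and ωz. A minimal sketch of that arithmetic is given below (the function name is illustrative); working with ω/2π in MHz is a convenience, since the factor of 2π cancels throughout.

```python
import math

def penning_frequencies(f_c, f_z):
    """Modified-cyclotron and magnetron frequencies (f_plus, f_minus).

    Implements w_pm = w_c/2 +/- Omega with Omega = sqrt(w_c^2 - 2 w_z^2)/2.
    Inputs may be ordinary frequencies (w/2pi) because the 2pi factors cancel.
    """
    disc = f_c**2 - 2 * f_z**2
    if disc < -1e-12 * f_c**2:
        raise ValueError("f_z exceeds the stability limit f_c / sqrt(2)")
    big_omega = math.sqrt(max(disc, 0.0)) / 2   # clamp rounding error at the limit
    return f_c / 2 + big_omega, f_c / 2 - big_omega

F_C = 5.12  # bare cyclotron frequency w_c/2pi in MHz, as quoted in the text
print(f"stability limit: f_z <= {F_C / math.sqrt(2):.2f} MHz")
for f_z in (0.0, 2.5, F_C / math.sqrt(2)):
    f_plus, f_minus = penning_frequencies(F_C, f_z)
    print(f"f_z = {f_z:.2f} MHz -> f+ = {f_plus:.2f} MHz, f- = {f_minus:.2f} MHz")
```

The standard ideal-trap relations ω+ + ω− = ωc, ω+ω− = ωz²/2 and ω+² + ω−² + ωz² = ωc² provide a quick consistency check on any set of measured frequencies.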

Figure 1d shows the electronic structure of the beryllium ion along with the transitions relevant to this work. We use an electron spin qubit consisting of the \(|\uparrow \rangle \equiv |{m}_{{\rm{I}}}=+\,3/2,{m}_{{\rm{J}}}=+\,1/2\rangle \) and \(|\downarrow \rangle \equiv |{m}_{{\rm{I}}}\,=+\,3/2,{m}_{{\rm{J}}}=-\,1/2\rangle \) eigenstates within the 2s \({}^{2}{S}_{1/2}\) ground-state manifold, which in the high field is almost decoupled from the nuclear spin. The qubit frequency is ω0 ≃ 2π × 83.2 GHz. Doppler cooling is performed using the detection laser, red-detuned from the (bright) |↑⟩ ↔ 2p \({}^{2}{P}_{3/2}\) |mI = +3/2, mJ = +3/2⟩ cycling transition, while an additional repump laser optically pumps population from the (dark) |↓⟩ level to the higher-energy |↑⟩ level through the fast-decaying 2p \({}^{2}{P}_{3/2}\) |mI = +3/2, mJ = +1/2⟩ excited state. State-dependent fluorescence under the detection laser allows the two qubit states to be discriminated from the number of photons collected on a photomultiplier tube by an imaging system based on a 0.55 NA Schwarzschild objective. The fluorescence can also be directed to an electron-multiplying CCD (EMCCD) camera.
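A common way to discriminate the states from photon counts is a simple threshold. The sketch below assumes Poisson-distributed counts for both qubit states and picks the threshold that minimizes the average misassignment error. The mean counts used (10 for |↑⟩, 0.3 for |↓⟩) are hypothetical placeholders rather than values measured in this work, and the method is a generic one, not necessarily the analysis used here.

```python
import math

def poisson_cdf(k, mu):
    """P(N <= k) for Poisson-distributed counts N with mean mu."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total

def best_threshold(mu_bright, mu_dark, k_max=100):
    """Threshold t minimising the mean error when 'counts > t' is read as bright."""
    best_t, best_err = None, math.inf
    for t in range(k_max):
        err_dark = 1.0 - poisson_cdf(t, mu_dark)    # dark |down> misread as bright
        err_bright = poisson_cdf(t, mu_bright)      # bright |up> misread as dark
        err = 0.5 * (err_dark + err_bright)
        if err < best_err:
            best_t, best_err = t, err
    return best_t, best_err

# Hypothetical mean photon counts per detection window (not values from this work)
t, err = best_threshold(mu_bright=10.0, mu_dark=0.3)
print(f"threshold: counts > {t} -> |up>, mean error {err:.2e}")
```

In practice, effects beyond this model (for example, depumping of the dark state during detection) make the count distributions deviate from pure Poisson statistics, so the threshold is usually chosen from measured histograms.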

Coherent operations on the spin and motional degrees of freedom of the ion are performed either by stimulated Raman transitions, using a pair of laser beams detuned 150 GHz above the 2p \({}^{2}{P}_{3/2}\) |mI = +3/2, mJ = +3/2⟩ state, or by a microwave field. The Raman transitions require two 313 nm lasers phase-locked to each other with a frequency offset at the qubit frequency, which we achieve using the method outlined in ref. 39. By choosing different orientations of the Raman beam paths, we can address the radial or axial motions, or implement single-qubit rotations using a co-propagating Raman beam pair.
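For the axial mode, the strength of the motional coupling is set by the Lamb–Dicke parameter, η = |Δk| √(ħ/(2mωz)), where Δk is the difference wavevector of the Raman beams. The minimal sketch below assumes that Δk is parallel to the trap axis and compares crossing angles; the actual beam geometry is not specified here, and the radial Penning-trap modes require a different treatment that is not covered. Under the 90° assumption, 313 nm light at ωz = 2π × 2.5 MHz gives η ≈ 0.43, comparable to the value of approximately 0.4 quoted later in this section, whereas a co-propagating pair gives η = 0 and drives only the spin.

```python
import math

HBAR = 1.054571817e-34       # reduced Planck constant (J s)
AMU = 1.66053906660e-27      # atomic mass unit (kg)
M_ION = 9.0116 * AMU         # assumed 9Be+ mass (kg)

def lamb_dicke_axial(wavelength, crossing_angle_deg, f_z, m=M_ION):
    """eta = |dk| * sqrt(hbar / (2 m omega_z)) for the axial mode.

    Assumes the Raman difference wavevector dk is parallel to the trap axis;
    for two beams of equal wavelength crossing at angle theta, |dk| = 2k sin(theta/2).
    """
    k = 2 * math.pi / wavelength
    dk = 2 * k * math.sin(math.radians(crossing_angle_deg) / 2)
    x0 = math.sqrt(HBAR / (2 * m * 2 * math.pi * f_z))   # ground-state wavepacket extent
    return dk * x0

for angle in (0.0, 90.0, 180.0):   # co-propagating, crossed, counter-propagating
    print(f"{angle:5.1f} deg: eta = {lamb_dicke_axial(313e-9, angle, 2.5e6):.3f}")
```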

The qubit transition has a magnetic-field sensitivity of 28 GHz \({{\rm{T}}}^{-1}\), so the phase coherence of our qubit is susceptible to temporal fluctuations of the field and to spatial gradients of the field across the extent of the motion of the ion. Using Ramsey spectroscopy with the Raman beams, we measure a coherence time of 1.9(2) ms. Similar values are measured with the microwave field, indicating that laser phase noise from beam-path fluctuations or imperfect phase-locking does not contribute significantly to dephasing. The noise appears to be slow compared with the duration (about 1 ms to 10 ms) of a single experimental shot consisting of cooling, probing and detection, and the fringe contrast decays with a Gaussian envelope. We note that the coherence is reduced if vibrations induced by the cryocoolers used to cool the magnet and the vacuum apparatus are not well decoupled from the experimental setup. We further characterize the magnetic-field noise by applying Uhrig dynamical decoupling sequences40,41 of orders 1, 3 and 5, which yield coherence times of 3.2(1) ms, 5.8(3) ms and 8.0(7) ms, respectively. Data on spin dephasing are presented in Extended Data Fig. 1.
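Two pieces of arithmetic underlie this paragraph. First, the Uhrig sequence of order n places its π pulses at t_j = T sin²(πj/(2n+2)) within a total time T. Second, a Gaussian Ramsey decay can be converted into an rms field noise if one assumes quasi-static Gaussian field fluctuations, which is consistent with the slow noise described above but remains an assumption. The sketch below implements both. The resulting field-noise figure (about 4 nT for the 1.9 ms coherence time) is a model-dependent estimate, not a measured quantity.

```python
import math

KAPPA = 28e9   # qubit sensitivity to the magnetic field (Hz/T), from the text

def udd_pulse_times(n, total_time):
    """Uhrig DD: times t_j = T*sin^2(pi*j/(2n+2)) of the n pi pulses, j = 1..n."""
    return [total_time * math.sin(math.pi * j / (2 * n + 2)) ** 2 for j in range(1, n + 1)]

def rms_field_from_t2(t2, kappa=KAPPA):
    """rms quasi-static field noise giving the Gaussian Ramsey decay exp(-(t/t2)^2).

    For phase phi = 2*pi*kappa*dB*t with Gaussian dB of rms sigma_B,
    <cos phi> = exp(-(2*pi*kappa*sigma_B*t)^2 / 2), so sigma_B = sqrt(2)/(2*pi*kappa*t2).
    """
    return math.sqrt(2) / (2 * math.pi * kappa * t2)

for order in (1, 3, 5):
    times = udd_pulse_times(order, 1.0)
    print(f"UDD{order}: pulses at " + ", ".join(f"{t:.3f} T" for t in times))
print(f"sigma_B ~ {rms_field_from_t2(1.9e-3) * 1e9:.1f} nT (quasi-static Gaussian model)")
```

Order 1 reduces to a single π pulse at T/2, that is, a spin echo; higher orders place pulses more densely near the start and end of the sequence.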

A combination of the Doppler cooling and repump lasers prepares the ion in the |↑⟩ electronic state and a thermal distribution of motional Fock states. After Doppler cooling using the axialization technique, we measure mean occupations of \(\{{\bar{n}}_{+},{\bar{n}}_{-},{\bar{n}}_{z}\}=\{6.7(4),9.9(6),4.4(1)\}\) using sideband spectroscopy on the first four red and blue sidebands38. Pulses of continuous sideband cooling31,38 are subsequently applied by alternately driving the first and third blue sidebands of a positive-energy motional mode, or the first and third red sidebands of a negative-energy motional mode, while simultaneously repumping the spin to the bright state. The 3D ground state can be prepared by applying this sequence to each of the three modes in succession. The use of the third sideband is motivated by the high Lamb–Dicke parameters of approximately 0.4 in our system42,43. After a total cooling time of 60 ms, we probe the temperature using sideband spectroscopy on the first blue and red sidebands44. Assuming thermal distributions, we measure \(\{{\bar{n}}_{+},{\bar{n}}_{-},{\bar{n}}_{z}\}=\{0.05(1),0.03(2),0.007(3)\}\). We have achieved similar ground-state cooling performance at all trap frequencies probed to date. The long duration of the sideband cooling sequence stems from the large Gaussian beam radius (estimated as 80 μm) of the Raman beams, each with a power in the range of 2 mW to 6 mW. This leads to a Rabi frequency Ω0 ≃ 2π × 8 kHz, corresponding to π times of approximately 62 μs, 145 μs and 2,000 μs for the ground-state carrier, first and third sidebands, respectively, at ωz = 2π × 2.5 MHz.
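The quoted π times can be reproduced from the standard Fock-state coupling |⟨n+s|exp(iη(a + a†))|n⟩| = e^(−η²/2) η^|s| √(n<!/n>!) L_{n<}^{|s|}(η²). The minimal sketch below assumes η = 0.43 (the text gives only "approximately 0.4") and takes Ω0 to be the ground-state carrier Rabi frequency, as the 62 μs carrier π time implies. Relative to the carrier, the first- and third-sideband π times from |n = 0⟩ then scale as 1/η and √6/η³, giving roughly 145 μs and 1.9 ms. The function names are illustrative.

```python
import math

def gen_laguerre(n, alpha, x):
    """Generalized Laguerre polynomial L_n^alpha(x) from the three-term recurrence."""
    if n == 0:
        return 1.0
    l_prev, l_curr = 1.0, 1.0 + alpha - x
    for k in range(1, n):
        l_prev, l_curr = l_curr, ((2 * k + 1 + alpha - x) * l_curr - (k + alpha) * l_prev) / (k + 1)
    return l_curr

def coupling(n, s, eta):
    """|<n+s| exp(i*eta*(a + a^dag)) |n>|: relative strength of an order-s sideband."""
    n_lo, order = (n, s) if s >= 0 else (n + s, -s)
    if n_lo < 0:
        return 0.0   # cannot remove more phonons than are present
    sqrt_fact = math.exp(0.5 * (math.lgamma(n_lo + 1) - math.lgamma(n_lo + order + 1)))
    return math.exp(-eta**2 / 2) * eta**order * sqrt_fact * abs(gen_laguerre(n_lo, order, eta**2))

def pi_time(s, eta, omega_carrier, n=0):
    """Pi time of the order-s sideband from Fock state n; omega_carrier is the n=0 carrier Rabi frequency."""
    c = coupling(n, s, eta)
    if c == 0.0:
        return math.inf
    return math.pi / (omega_carrier * c / coupling(0, 0, eta))

OMEGA_CARRIER = 2 * math.pi * 8e3   # ground-state carrier Rabi frequency from the text (rad/s)
ETA = 0.43                          # assumed; the text quotes "approximately 0.4"
for s in (0, 1, 3):
    print(f"sideband order {s}: pi time ~ {pi_time(s, ETA, OMEGA_CARRIER) * 1e6:.0f} us")
```

Here s counts the change in phonon number; whether that change is a red or a blue sideband in frequency depends on the sign of the mode energy, as described above. Evaluating `coupling(n, -1, ETA)` over a range of n shows how the first-sideband coupling varies with n at large η.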

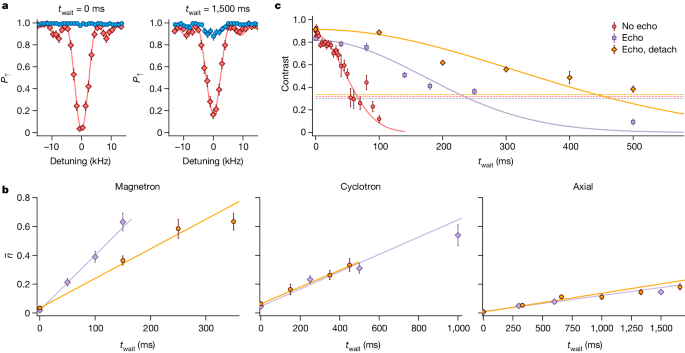

Trapped-ion quantum computing uses the collective motion of the ions for multi-qubit gates and thus requires the motional degree of freedom to retain coherence over the timescale of the operation33,45. One contribution to decoherence is motional heating caused by electric-field fluctuations at frequencies close to the oscillation frequencies of the ion. We measure this by inserting a variable-length delay \({t}_{{\rm{wait}}}\) between the end of sideband cooling and the temperature probe. As shown in Fig. 2, we observe motional heating rates \(\{{\dot{\bar{n}}}_{+},{\dot{\bar{n}}}_{-},{\dot{\bar{n}}}_{z}\}=\{0.49(5)\,{{\rm{s}}}^{-1},3.8(1)\,{{\rm{s}}}^{-1},0.088(9)\,{{\rm{s}}}^{-1}\}\). The corresponding electric-field spectral noise density for the axial mode, \({S}_{{\rm{E}}}=4\hbar m{\omega }_{z}{\dot{\bar{n}}}_{z}/{e}^{2}=3.4(3)\times {10}^{-16}\,{{\rm{V}}}^{2}{{\rm{m}}}^{-2}{{\rm{Hz}}}^{-1}\), is lower than any comparable measurement in a trap of similar size46,47. As detailed in the Methods, we can trap ions in our setup with the trap electrodes detached from any external supply voltage except during Doppler cooling, which requires the axialization signal to reach the trap. Using this method, we measure heating rates \({\dot{\bar{n}}}_{z}=0.10(1)\,{{\rm{s}}}^{-1}\) and \({\dot{\bar{n}}}_{+}=0.58(2)\,{{\rm{s}}}^{-1}\) for the axial and modified-cyclotron modes, respectively, whereas the rate for the lower-frequency magnetron mode drops to \({\dot{\bar{n}}}_{-}=1.8(3)\,{{\rm{s}}}^{-1}\). This reduction suggests that external electrical noise contributes to the higher magnetron heating rate in the earlier measurements.
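The conversion from axial heating rate to electric-field noise density is a one-line formula. The sketch below evaluates it for both axial rates quoted above. The beryllium-9 ion mass is an assumed value, and, as in the text, the formula is applied only to the axial mode, which is a simple harmonic oscillator.

```python
import math

HBAR = 1.054571817e-34       # reduced Planck constant (J s)
E_CHARGE = 1.602176634e-19   # elementary charge (C)
AMU = 1.66053906660e-27      # atomic mass unit (kg)
M_ION = 9.0116 * AMU         # assumed 9Be+ mass (kg)

def field_noise_psd(heating_rate, f_mode, m=M_ION):
    """S_E = 4*hbar*m*omega*n_dot/e^2 in V^2 m^-2 Hz^-1 for a harmonic mode at f_mode (Hz)."""
    omega = 2 * math.pi * f_mode
    return 4 * HBAR * m * omega * heating_rate / E_CHARGE**2

for label, n_dot in (("supplies connected", 0.088), ("electrodes detached", 0.10)):
    s_e = field_noise_psd(n_dot, 2.5e6)
    print(f"{label}: S_E = {s_e:.2e} V^2 m^-2 Hz^-1")
```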

We measure motional-state dephasing using Ramsey spectroscopy, in which a combination of carrier and sideband pulses48 prepares the superposition |↑⟩ (|0⟩z + |1⟩z)/\(\sqrt{2}\) of the first two Fock states of the axial mode (here ωz ≃ 2π × 3.1 MHz). After a variable wait time, we reverse the preparation sequence with a shifted phase. The resulting decay of the Ramsey contrast, shown in Fig. 2c, is much faster than would be expected from the heating rate alone. The decay is roughly Gaussian in form, with a 1/e coherence time of 66(5) ms. Inserting an echo pulse into the Ramsey sequence extends the coherence time to 240(20) ms, which indicates that low-frequency noise components dominate the bare Ramsey decoherence. The echo coherence time improves further to 440(50) ms when the trap electrodes are detached from the external voltage sources between the end of Doppler cooling and the start of the detection pulse, during which the axialization signal is again beneficial. The data with the voltage sources detached are taken at ωz ≃ 2π × 2.5 MHz.
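The benefit of the echo can be illustrated with a simple noise model, which is an assumption rather than a fit to the data. Within a shot, the motional frequency is taken to have a random static offset plus a random linear drift, both Gaussian and independent. A π pulse at T/2 cancels the static offset exactly and halves the accumulated phase from the drift, so low-frequency noise limits the Ramsey signal far more than the echo signal, and a static offset alone gives a Gaussian decay. The hypothetical noise amplitudes below are chosen only so that each mechanism alone gives the 66 ms and 240 ms 1/e times quoted above.

```python
import math

def ramsey_contrast(t, sigma_offset, sigma_drift):
    """<cos phi> for phi = d0*t + b*t^2/2, with Gaussian d0 (rad/s) and b (rad/s^2)."""
    var = (sigma_offset * t) ** 2 + (sigma_drift * t**2 / 2) ** 2
    return math.exp(-var / 2)

def echo_contrast(t, sigma_offset, sigma_drift):
    """Same noise with a pi pulse at t/2: the offset d0 cancels and phi = -b*t^2/4.

    sigma_offset is accepted for symmetry with ramsey_contrast but drops out.
    """
    var = (sigma_drift * t**2 / 4) ** 2
    return math.exp(-var / 2)

# Hypothetical amplitudes: offset alone -> Ramsey 1/e at 66 ms; drift alone -> echo 1/e at 240 ms
SIGMA_OFFSET = math.sqrt(2) / 0.066
SIGMA_DRIFT = 4 * math.sqrt(2) / 0.24**2

for t in (0.05, 0.1, 0.2):
    r = ramsey_contrast(t, SIGMA_OFFSET, SIGMA_DRIFT)
    e = echo_contrast(t, SIGMA_OFFSET, SIGMA_DRIFT)
    print(f"t = {t * 1e3:5.0f} ms: Ramsey {r:.3f}, echo {e:.3f}")
```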

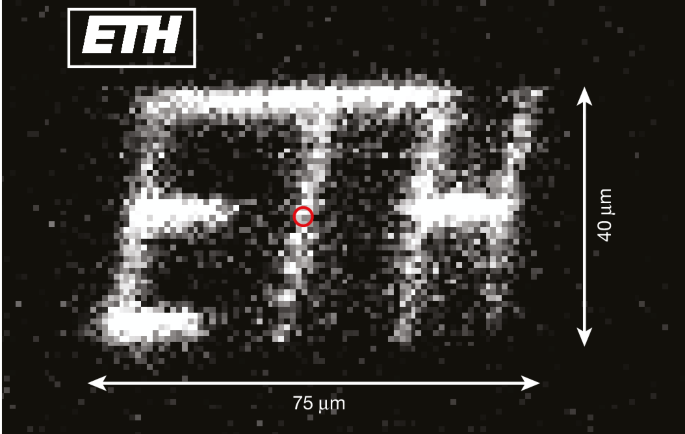

An important component of the QCCD architecture14 is ion transport. We demonstrate that the Penning trap approach allows transport to be performed flexibly in two dimensions by adiabatically transporting a single ion and observing it at the new location. The ion is first Doppler-cooled at the original location and then transported in 4 ms along a direct trajectory to a second, chosen location. We then apply a 500-μs detection pulse without axialization and collect the ion fluorescence on an EMCCD camera, with the camera exposure limited to the time window of the detection pulse. Switching off the axialization is important when the ion is far from the rf null: it minimizes radial excitation due to micromotion, so that the ion produces enough fluorescence during the detection window. The ion is then returned to the initial location. Figure 3 shows a result in which we have drawn the first letters of the ETH Zürich logo. The image quality and maximum canvas size are limited only by the point-spread function and field of view of our imaging system and by the spatial extent of the detection laser beam, not by any property of the transport. Reliable transport to a set location and back has been performed over distances of up to 250 μm. Probing ion temperatures after transport using sideband thermometry (Extended Data Fig. 2), we observe no motional excitation beyond the natural heating expected over the duration of the transport. This contrasts with earlier non-adiabatic radial transport of ion ensembles in Penning traps, in which a substantial fraction of the ions were lost in each transport49.
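The transport only requires the trap centre to follow a smooth path. The sketch below generates one possible waypoint schedule, assuming a raised-cosine ramp along a straight line; the text does not specify the profile, and the mapping from trap-centre positions to electrode voltages is not shown. It also compares the 4 ms duration with the period of the slowest mode (the magnetron mode at ω− ≃ 2π × 0.71 MHz), showing that the transport spans thousands of mode periods.

```python
import math

def transport_waypoints(start, stop, duration, n_steps):
    """(time, position) pairs for the trap centre moving along a straight line.

    Hypothetical raised-cosine ramp s(t) = (1 - cos(pi t / T)) / 2, which starts and
    stops with zero velocity; the profile used in the experiment is not specified.
    """
    waypoints = []
    for i in range(n_steps + 1):
        t = duration * i / n_steps
        s = 0.5 * (1 - math.cos(math.pi * t / duration))
        pos = tuple(a + s * (b - a) for a, b in zip(start, stop))
        waypoints.append((t, pos))
    return waypoints

def peak_speed(distance, duration):
    """Maximum trap-centre speed of the raised-cosine ramp: pi * D / (2T)."""
    return math.pi * distance / (2 * duration)

DURATION = 4e-3          # transport time from the text (s)
DISTANCE = 250e-6        # largest transport distance reported in the text (m)
F_MAGNETRON = 0.71e6     # omega_-/2pi at omega_z = 2pi x 2.5 MHz (Hz)

path = transport_waypoints((0.0, 0.0), (DISTANCE, 0.0), DURATION, 400)
print("final position (um):", [round(c * 1e6, 3) for c in path[-1][1]])
print(f"peak speed: {peak_speed(DISTANCE, DURATION):.3f} m/s")
print(f"magnetron periods during transport: {DURATION * F_MAGNETRON:.0f}")
```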

This work marks a starting point for quantum computing and simulation in 2D arrays of micro-scale Penning traps. The next main step is to operate with multiple sites of such an array, which will require optimization of the loading while keeping the ions trapped in shallow potentials. This can be accomplished in the current trap with the appropriate wiring, but notable advantages could be gained by using a trap with a dedicated loading region from which ions are shuttled into the micro-trap region. Multi-qubit gates could then be implemented following the standard methods demonstrated in rf traps23,34. Longer spin-coherence times could be achieved by improving the mechanical stability of the magnet or, in the longer term, by using decoherence-free subspaces, which were considered in the original QCCD proposals14,50,51. Scaling to large numbers of sites is likely to require scalable approaches to light delivery, which might necessitate switching to an ion species that is more amenable to integrated optics52,53,54,55. Compared with rf traps, the absence of high-voltage rf signals facilitates the use of advanced standard fabrication methods such as CMOS56,57. Compatibility with these technologies demands an evaluation of how close to the surface ions could be operated for quantum computing and will require in-depth studies of heating; here, an obvious next step is to sample electric-field noise as a function of ion–electrode distance47. Unlike in rf traps, 3D scans of electric-field noise are possible in any Penning trap, because the uniform magnetic field provides radial confinement independent of the position of the ion. This flexibility of ion placement has advantages in many areas of ion-trap physics, for instance, in placing ions at antinodes of optical cavities58 or sampling field noise from surfaces of interest59,60.
We therefore expect that our work will open new avenues in sensing, computation, simulation and networking, enabling ion-trap physics to move beyond its current constraints.