- Intel is betting heavily on Jaguar Shores to compete in the AI space

- Gaudi chips give Intel an opening in the AI inference market

- Intel hopes the 18A node will give it a manufacturing edge

Intel has been quietly building out its AI chip portfolio and recently added the Jaguar Shores AI accelerator to its roadmap, a significant move in its bid to compete with the likes of Nvidia and AMD.

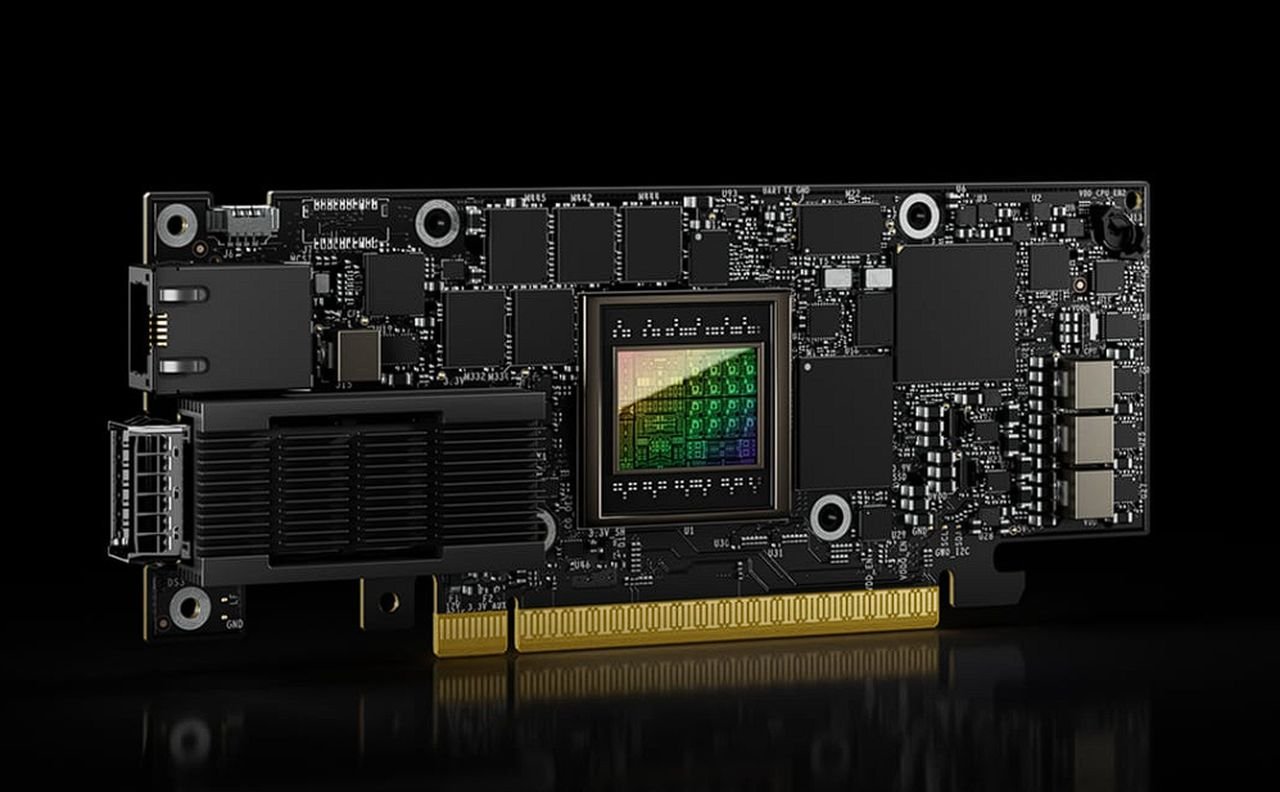

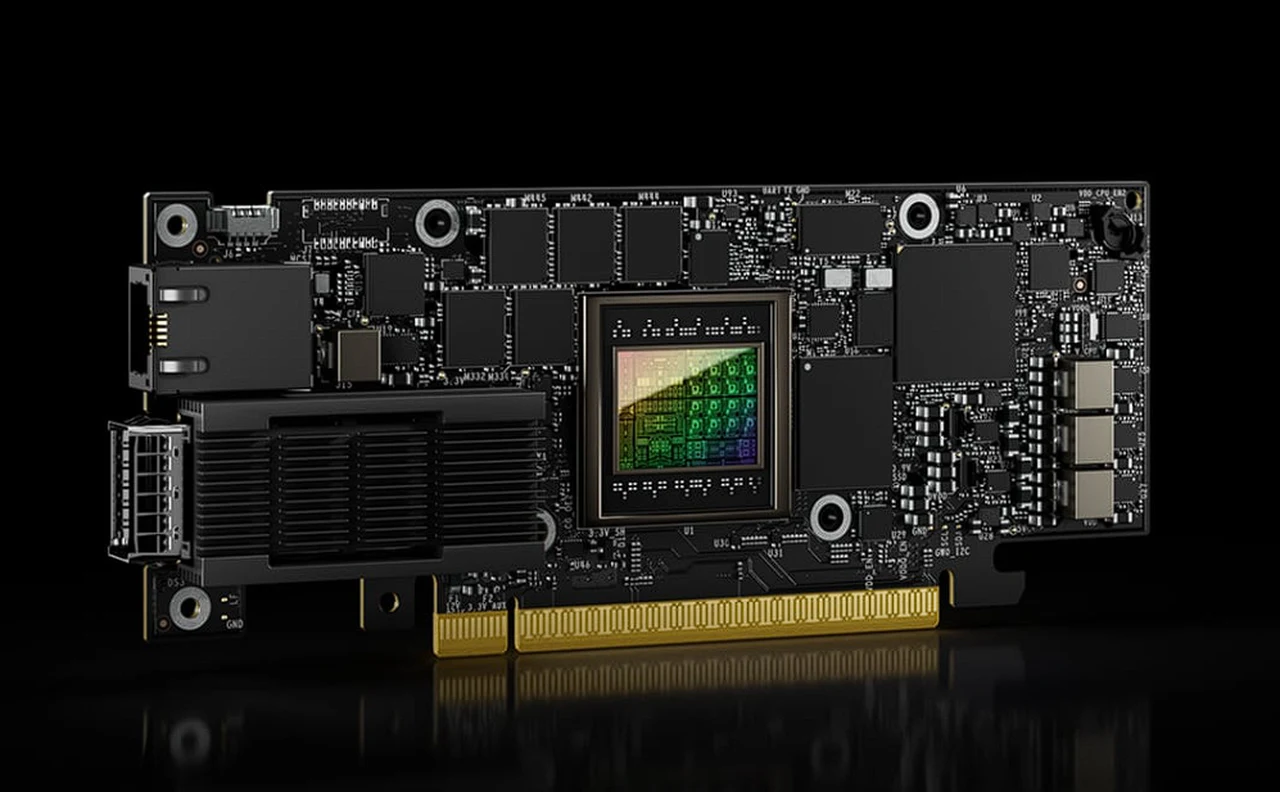

The Jaguar Shores AI accelerator, revealed at the recent SC2024 supercomputing conference, is a key part of Intel's strategy to stay competitive. Although details are scarce, Jaguar Shores is likely to be the successor to Falcon Shores, which is scheduled to launch in 2025.

Jaguar Shores could be aimed at AI inference, an area where Intel hopes to gain ground on Nvidia and AMD. It is not yet clear whether Jaguar Shores will be a GPU or an ASIC, but Intel's current product roadmap suggests it could be a next-generation GPU designed for enterprise AI applications.

Struggle for market relevance

While Intel has fallen behind its competitors in AI training hardware, the company is now focusing on AI inference, leveraging its Gaudi chips and upcoming technologies such as Falcon Shores and Jaguar Shores.

However, to regain its competitive edge, Intel will need to overcome several technical and organizational challenges.

Intel has faced multiple setbacks in AI hardware, especially in the GPU segment. The company's earlier attempt to develop a GPU, Rialto Bridge, was canceled due to a lack of customer interest, leaving institutions such as the Barcelona Supercomputing Center in a difficult position.

Intel's plans for the Falcon Shores GPU have been revised several times. It was originally intended to be an integrated CPU and GPU product and has since been reworked as a standalone GPU.

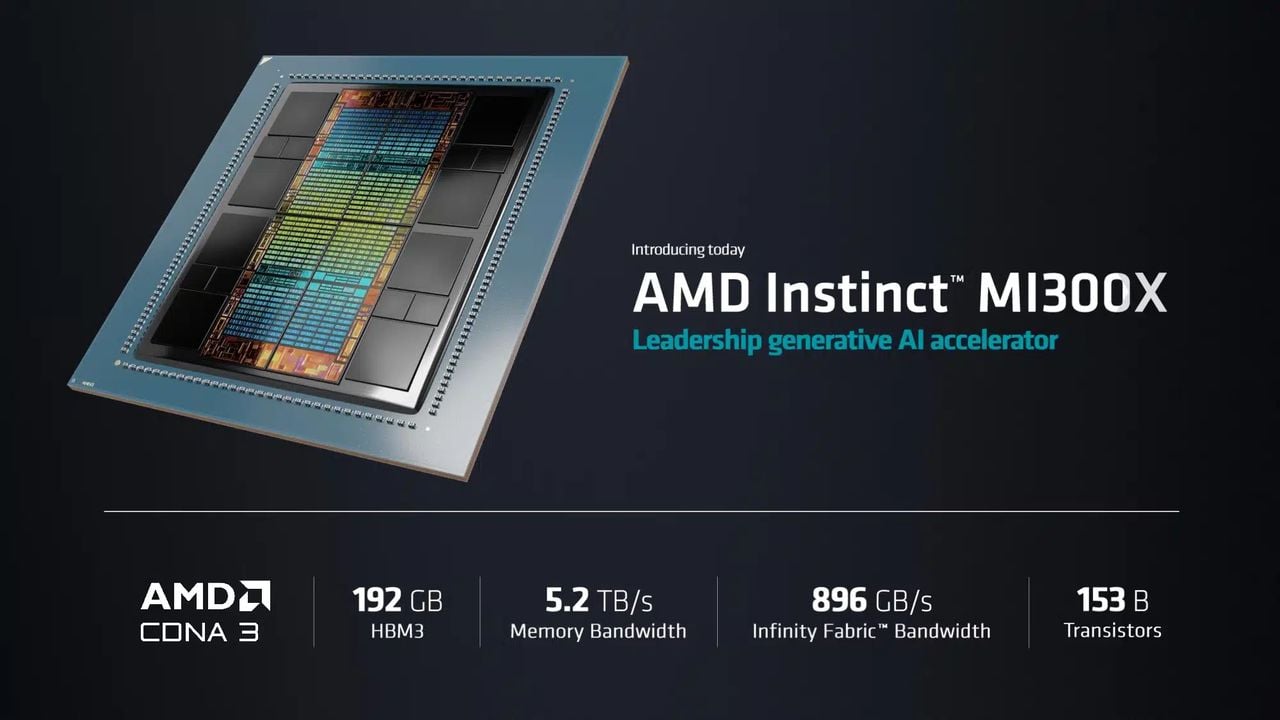

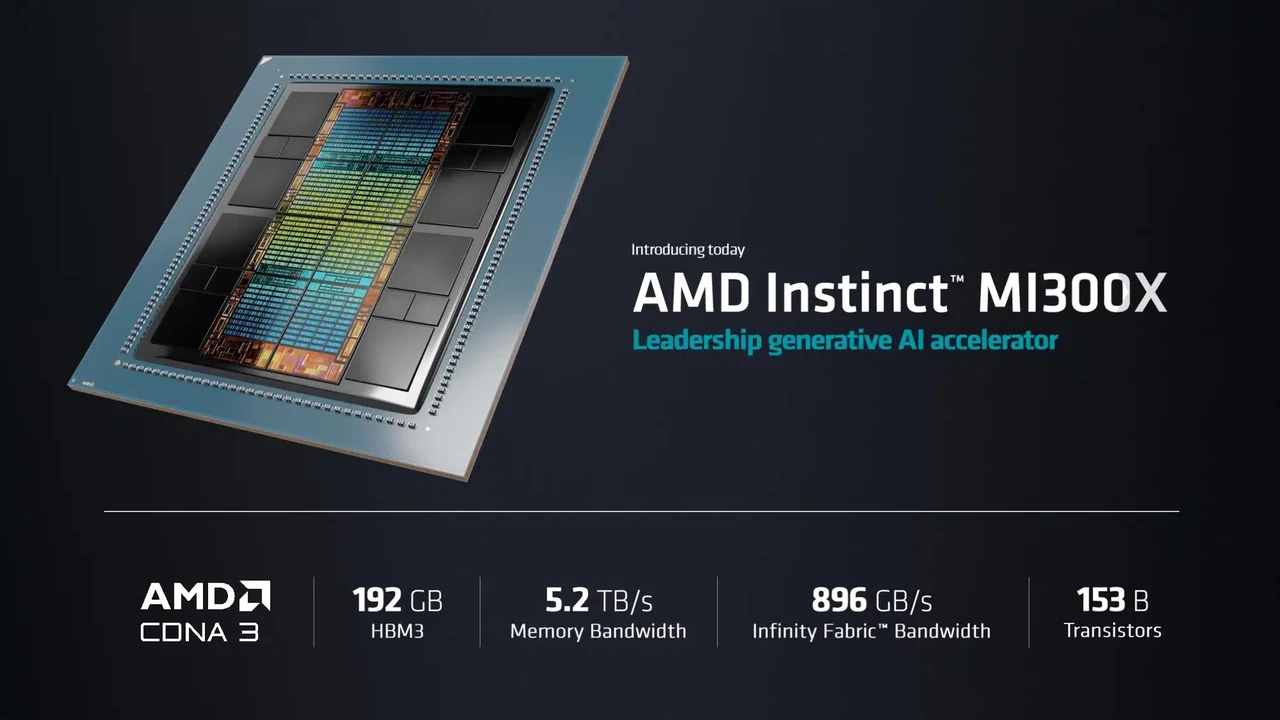

Intel has largely ceded the AI training market to Nvidia and AMD, with Nvidia dominating thanks to its GPUs and its CUDA software stack. Before his retirement, Intel CEO Pat Gelsinger acknowledged the company's distant position in the AI race, conceding that Intel currently ranks behind Nvidia, Amazon Web Services, Google Cloud, and AMD.

Intel is counting on its upcoming 18A manufacturing node to give it an edge over competitors such as TSMC. The 18A process, which incorporates new technologies such as RibbonFET transistors and backside power delivery, promises to improve chip efficiency and performance.

Via HPCwire
