If, like me, you were a little disappointed to learn that the Google Gemini demonstration released earlier this month was more about clever editing than technological advancement, you will be pleased to know that we may not have to wait too long before something similar is available to use.

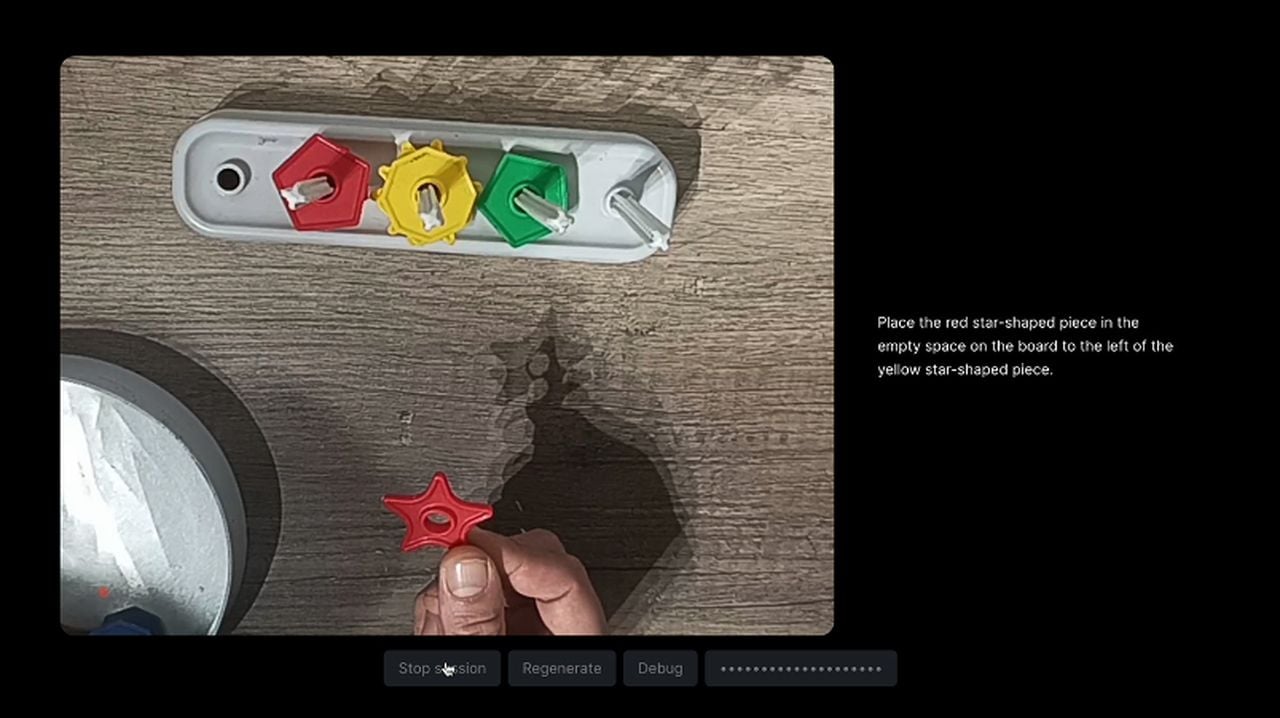

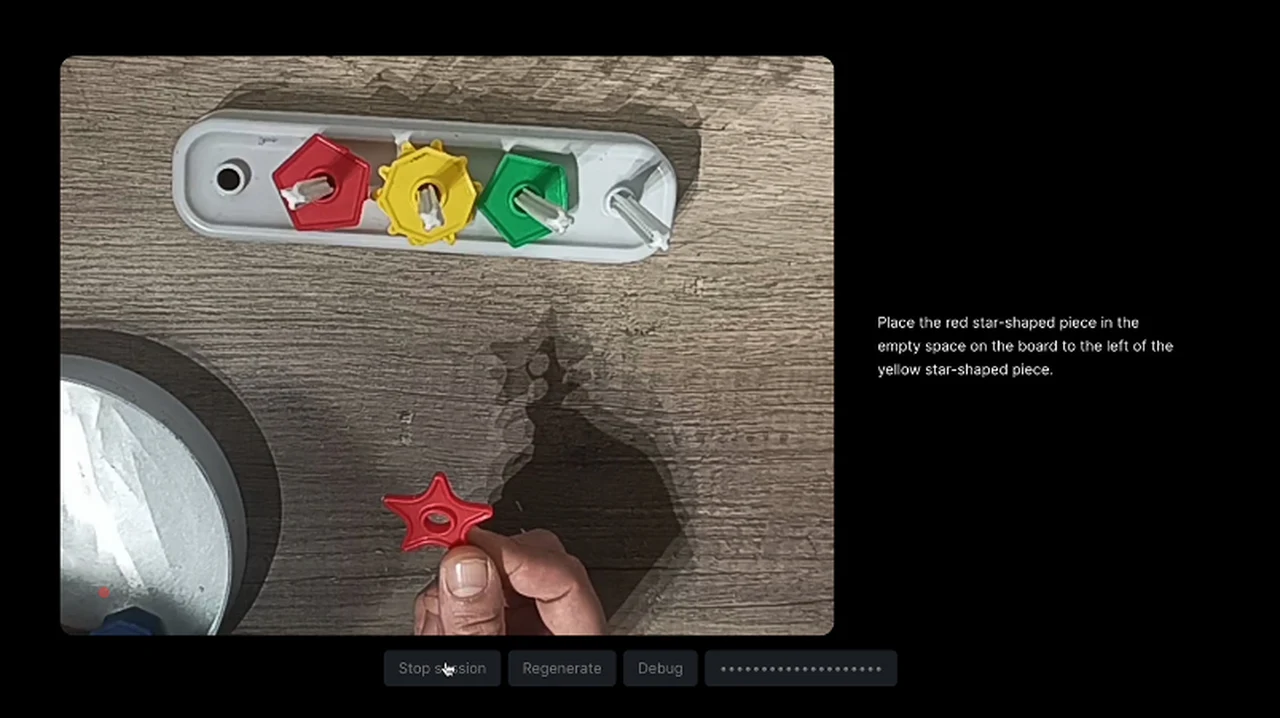

After seeing the Google Gemini demonstration and the blog post revealing how it was made, Julien De Luca asked himself, “Could the ‘gemini’ experience showcased by Google be more than just a scripted demo?” He then set about creating a fun experiment to explore the feasibility of real-time AI interactions similar to those portrayed in the Gemini demonstration. Here are the restrictions he put on the project to keep it in line with Google’s original demonstration.

- It must happen in real time

- User must be able to stream a video

- User must be able to talk to the assistant without interacting with the UI

- The assistant must use the video input to reason about the user’s questions

- The assistant must respond by talking

Because ChatGPT Vision currently accepts only individual images, De Luca needed to capture screenshots from the video at regular intervals and upload them, combined into a single image, so that the model could understand what was happening.

“KABOOM! We now have a single image representing a video stream. Now we’re talking. I needed to fine tune the system prompt a lot to make it ‘understand’ this was from a video. Otherwise it kept mentioning ‘patterns’, ‘strips’ or ‘grid’. I also insisted on the temporality of the images, so it would reason using the sequence of images. It definitely could be improved, but for this experiment it works well enough,” explains De Luca. To learn more about this process, jump over to the Crafters.ai website or GitHub.
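To make the "single image representing a video stream" idea concrete, here is a minimal sketch of how sampled frames could be arranged in a grid. It is not De Luca's code: the function name `layoutFrameGrid`, the frame size, and the column count are all assumptions chosen for illustration. It only computes where each frame would go; actually drawing the frames (for example onto a canvas) is left out.

```typescript
// Hypothetical helper, not taken from De Luca's project.
// Computes where each sampled video frame would be placed inside
// one composite image, reading left to right, top to bottom,
// so that grid order matches time order.
interface Cell {
  index: number; // position of the frame in the time-ordered sequence
  x: number; // left edge of the frame in pixels
  y: number; // top edge of the frame in pixels
}

interface GridLayout {
  columns: number;
  rows: number;
  width: number; // total width of the composite image
  height: number; // total height of the composite image
  cells: Cell[];
}

function layoutFrameGrid(
  frameCount: number,
  frameWidth: number,
  frameHeight: number,
  columns: number
): GridLayout {
  if (frameCount <= 0 || columns <= 0) {
    throw new Error("frameCount and columns must be positive");
  }
  // Enough rows to hold every frame; the last row may be partly empty.
  const rows = Math.ceil(frameCount / columns);
  const cells: Cell[] = [];
  for (let i = 0; i < frameCount; i++) {
    cells.push({
      index: i,
      x: (i % columns) * frameWidth,
      y: Math.floor(i / columns) * frameHeight,
    });
  }
  return {
    columns,
    rows,
    width: columns * frameWidth,
    height: rows * frameHeight,
    cells,
  };
}

// Example: six 320x180 frames in three columns.
const layout = layoutFrameGrid(6, 320, 180, 3);
console.log(layout.rows, layout.width, layout.height);
```

Keeping the frames in strict reading order is one way to support the "temporality" De Luca mentions, since the system prompt can then tell the model that the images form a left-to-right, top-to-bottom sequence.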

Real Google Gemini demo created

AI Jason has also created an example combining GPT-4, Whisper, and Text-to-Speech (TTS) technologies. His video gives a demonstration and explains how to create one yourself by combining different AI technologies.


Creating a demo that emulates the original Gemini video with GPT-4V, Whisper, and TTS involves several steps. The process begins with setting up a Next.js project, which serves as the foundation for features such as video recording, audio transcription, and image grid generation. The API calls to OpenAI are the crucial piece, as they allow the AI to converse with users, answer their questions, and respond in real time.
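As a rough illustration of the OpenAI call described above, the sketch below builds a Chat Completions request body that pairs the transcribed question with the image grid. The function name `buildVisionRequest`, the system prompt wording, the `max_tokens` value, and the default model name are assumptions for illustration, not details taken from either project; the message shape (a `text` part plus an `image_url` part carrying a base64 data URL) follows OpenAI's documented format for image inputs.

```typescript
// Minimal sketch under stated assumptions; none of these names come
// from the original demos.
interface TextPart {
  type: "text";
  text: string;
}

interface ImagePart {
  type: "image_url";
  image_url: { url: string };
}

type ContentPart = TextPart | ImagePart;

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string | ContentPart[];
}

interface ChatRequest {
  model: string;
  max_tokens: number;
  messages: ChatMessage[];
}

// Illustrative prompt only: it tells the model that the grid is a
// time-ordered sequence of video frames.
const SYSTEM_PROMPT =
  "The image is a sequence of frames from a live video, ordered " +
  "left to right, top to bottom. Answer as if you were watching the video.";

function buildVisionRequest(
  transcript: string, // the user's question, e.g. as transcribed by Whisper
  gridImageBase64: string, // the JPEG image grid, base64-encoded
  model: string = "gpt-4-vision-preview" // assumed; use any vision-capable model
): ChatRequest {
  return {
    model,
    max_tokens: 300, // arbitrary cap, chosen to keep spoken replies short
    messages: [
      { role: "system", content: SYSTEM_PROMPT },
      {
        role: "user",
        content: [
          { type: "text", text: transcript },
          {
            type: "image_url",
            image_url: { url: `data:image/jpeg;base64,${gridImageBase64}` },
          },
        ],
      },
    ],
  };
}

// Example: the body would be sent as JSON to the Chat Completions endpoint,
// and the text of the reply passed on to a text-to-speech step.
const body = buildVisionRequest("What am I holding?", "AAAA");
console.log(JSON.stringify(body, null, 2));
```

Building the body separately from sending it keeps the API key on the server side, for example in a Next.js API route, rather than in browser code.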

The design of the user experience is at the heart of the demo, with a focus on creating an intuitive interface that facilitates natural interactions with the AI, akin to having a conversation with another human being. This includes the AI’s ability to understand and respond to visual cues in an appropriate manner.

The reconstruction of the Gemini demo with GPT-4V, Whisper, and Text-To-Speech is a clear indication of the progress being made towards a future where AI can comprehend and interact with us through multiple senses. This development promises to deliver a more natural and immersive experience. The continued contributions and ideas from the AI community will be crucial in shaping the future of multimodal applications.

Image Credit : Julien De Luca
