Tools such as Sora can generate convincing video footage from text prompts. Credit: Jonathan Raa/NurPhoto via Getty

Artificial intelligence (AI) tools that translate text descriptions into images and video are advancing rapidly.

Just as many researchers are using ChatGPT to transform the process of scientific writing, others are using AI image generators such as Midjourney, Stable Diffusion and DALL-E to cut down on the time and effort it takes to produce diagrams and illustrations. However, researchers warn that these AI tools could spur an increase in fake data and inaccurate scientific imagery.

Nature looks at how researchers are using these tools, and what their increasing popularity could mean for science.

How do text-to-image tools work?

Many text-to-image AI tools, such as Midjourney and DALL-E, rely on machine-learning algorithms called diffusion models that are trained to recognize the links between millions of images scraped from the Internet and text descriptions of those images. These models have advanced in recent years owing to improvements in hardware and the availability of large data sets for training. After training, diffusion models can use text prompts to generate new images.
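To make the idea concrete, here is a minimal Python sketch of the forward "noising" step used in DDPM-style diffusion models. During training, images are progressively corrupted with Gaussian noise, and a network learns to predict and remove that noise, guided by the text description. This is a toy illustration, not how Midjourney or DALL-E are actually implemented: the linear noise schedule (1,000 steps, with noise variance rising from 0.0001 to 0.02) and all function names are illustrative assumptions, and the denoising network and text conditioning are omitted entirely.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly (a common DDPM choice)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of the
    original signal's variance that survives after t noising steps."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise.
    Returns the noisy image and the noise; in the usual setup a network is
    trained to predict that noise from (x_t, t, text prompt)."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * xi + math.sqrt(1.0 - a) * ei for xi, ei in zip(x0, noise)]
    return xt, noise

if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    image = [0.8, -0.2, 0.5, 0.1]  # toy four-"pixel" image scaled to [-1, 1]
    for t in (0, 250, 999):
        xt, _ = add_noise(image, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 5), [round(v, 2) for v in xt])
```

Generating an image runs this in reverse: starting from pure noise, the trained network removes a little of its predicted noise at each step until a picture consistent with the prompt emerges.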

What are researchers using them for?

Some researchers are already using AI-generated images to illustrate methods in scientific papers. Others are using them to promote papers in social-media posts or to spice up presentation slides. “They are using tools like DALL-E 3 for generating nice-looking images to frame research concepts,” says AI researcher Juan Rodriguez at ServiceNow Research in Montreal, Canada. “I gave a talk last Thursday about my work and I used DALL-E 3 to generate appealing images to keep people’s attention,” he says.

Text-to-video tools are also on the rise, but seem to be less widely used by researchers who are not actively developing or studying these tools, says Rodriguez. However, this could soon change. Last month, ChatGPT creator OpenAI in San Francisco, California, released video clips generated by a text-to-video tool called Sora. “With the experiments we saw with Sora, it seems their method is much more robust at getting results quickly,” says Rodriguez. “We are early in terms of text-to-video, but I guess this year we will find out how this develops,” he adds.

What are the benefits of using these tools?

Generative AI tools can reduce the time taken to produce images or figures for papers, conference posters or presentations. Conventionally, researchers use a range of non-AI tools, such as PowerPoint, BioRender, and Inkscape. “If you really know how to use these tools, you can make really impressive figures, but it’s time-consuming,” says Rodriguez.

AI tools can also improve the quality of images for researchers who find it hard to translate scientific concepts into visual aids, says Rodriguez. With generative AI, researchers still come up with the high-level idea for the image, but they can use the AI to refine it, he says.

What are the risks?

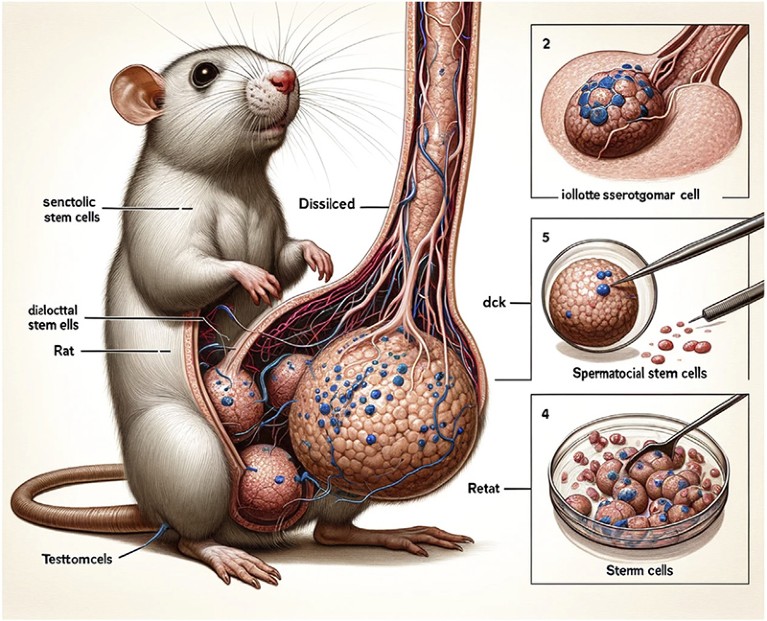

Currently, AI tools can produce convincing artwork and some illustrations, but they are not yet able to generate complex scientific figures with text annotations. “They don’t get the text right: the text is sometimes too small, much bigger or rotated,” says Rodriguez. The kinds of problem that can arise were made clear in a paper published in Frontiers in Cell and Developmental Biology in mid-February, in which researchers used Midjourney to depict a rat’s reproductive organs¹. The result, which passed peer review, was a cartoon rodent with comically enormous genitalia, annotated with gibberish.

“It was this really weird kind of grotesque image of a rat,” says palaeoartist Henry Sharpe, a palaeontology student at the University of Alberta in Edmonton, Canada. This incident is one of the “biggest case[s] involving AI-generated images to date”, says Guillaume Cabanac, who studies fraudulent AI-generated text at the University of Toulouse, France. After a public outcry from researchers, the paper was retracted.

This now-infamous AI-generated figure featured in a scientific paper that was later retracted. Credit: X. Guo et al./Front. Cell Dev. Biol.

There is also the possibility that AI tools could make it easier for scientific fraudsters to produce fake data or observations, says Rodriguez. Papers might contain not only AI-generated text, but also AI-generated figures, he says. And there is currently no robust method for detecting such images and videos. “It’s going to get pretty scary in the sense we are going to be bombarded by fake and synthetically generated data,” says Rodriguez. To address this, some researchers are developing ways to inject signals into AI-generated images to enable their detection.
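The article does not say which detection signals researchers are developing. As a deliberately simple, hypothetical illustration of the general idea, the Python sketch below hides a known bit pattern in the least significant bits of 8-bit pixel values and later checks for it. The names `SIGNATURE`, `embed_watermark`, `extract_bits` and `has_watermark` are invented for this example, and the 90% match threshold is an arbitrary assumption.

```python
import random

# Hypothetical 32-bit signature; a real scheme would derive it from a secret key.
SIGNATURE = [1, 0, 1, 1, 0, 0, 1, 0] * 4

def embed_watermark(pixels, bits):
    """Return a copy of `pixels` (ints in 0-255) with the lowest bit of the
    first len(bits) values overwritten by the signature bits."""
    if len(bits) > len(pixels):
        raise ValueError("image has too few pixels for the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked

def extract_bits(pixels, n_bits):
    """Read back the lowest bit of the first n_bits pixel values."""
    return [p & 1 for p in pixels[:n_bits]]

def has_watermark(pixels, bits, min_match=0.9):
    """Flag the image if enough extracted bits agree with the signature."""
    extracted = extract_bits(pixels, len(bits))
    matches = sum(1 for got, want in zip(extracted, bits) if got == want)
    return matches / len(bits) >= min_match

if __name__ == "__main__":
    rng = random.Random(42)
    image = [rng.randrange(256) for _ in range(64)]  # toy 8x8 greyscale image
    marked = embed_watermark(image, SIGNATURE)
    print("marked image flagged:", has_watermark(marked, SIGNATURE))
    # Each pixel changes by at most one intensity level, invisible to the eye.
    print("max pixel change:", max(abs(a - b) for a, b in zip(image, marked)))
```

A least-significant-bit mark like this is fragile: re-encoding the image as a JPEG or resizing it would typically scramble those bits. Watermarks intended for AI-generated images aim to survive such edits, which is part of why reliable detection remains difficult.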

Why has there been a backlash from some fields?

Last month, Sharpe launched a poll on social-media platforms including X, Facebook and Instagram that surveyed the views of around 90 palaeontologists on AI-generated depictions of ancient life. “Just one in four professional palaeontologists thought that AI should be allowed to be in scientific publications,” says Sharpe.

AI-generated images of ancient lifeforms or fossils can mislead both scientists and the public, he adds. “It’s inaccurate, all it does is copy existing things and it can’t actually go out and read papers.” Iteratively reconstructing ancient lifeforms by hand, in consultation with palaeontologists, can reveal plausible anatomical features — a process that is completely lost when using AI, Sharpe says. Palaeoartists and palaeontologists have aired similar views on X using the hashtag #PaleoAgainstAI.

How are publishers adapting to the popularity of these tools?

Journals differ in their policies around AI-generated imagery. Springer Nature has banned the use of AI-generated images, videos and illustrations in most journal articles that are not specifically about AI (Nature’s news team is independent of its publisher, Springer Nature). Journals in the Science family do not allow AI-generated text, figures or images to be used without explicit permission from the editors, unless the paper is specifically about AI or machine learning. PLOS ONE allows the use of AI tools but states that researchers must declare the tool involved, how they used it and how they verified the quality of the generated content.
