OLED is a much sought-after display technology in consumer products ranging from phones to TVs. OLED TVs are consistently ranked as the best TVs, thanks to their unparalleled contrast, dynamic color, refined detail and brightness that improves with each new generation of sets. However, there is one area where OLED TVs suffer: reflections.

The pixels in an OLED display dim individually as required, giving them greater light control than LED and mini-LED TVs, which rely on a separate backlight. However, OLED TVs have traditionally lacked brightness compared with mini-LED TVs, and their dimmer screens mean reflections can become a real issue. In recent years, brightness-boosting micro-lens-array (MLA) tech has been introduced into some of the best OLED TVs, such as the LG G3 and Panasonic MZ2000, helping them overcome reflections. But while MLA has made OLED TVs brighter, reflections remain a problem.

Beating TV reflections typically involves rearranging lighting and blacking out windows. But another glare-fighting option recently became available when Samsung introduced OLED Glare Free screen technology in its new Samsung S95D OLED TV.

OLED Glare Free is an anti-reflection tech that uses a matte screen. Combined with the S95D’s QD-OLED display, which is brighter than a conventional OLED TV and on par with MLA OLED brightness, the result is an OLED TV capable of dramatically reducing reflections.

I recently tested the S95D alongside the Panasonic MZ1500, an upper mid-range model that uses a conventional W-OLED (White OLED) panel. Below, you’ll see the results when I pitted the two OLED screen types against one another.

Samsung – the reflection beater

Since the TechRadar testing lab has overhead lights and spotlights that can be set to various brightness levels, the natural place to start was at the highest brightness to create the worst possible conditions for reflections.

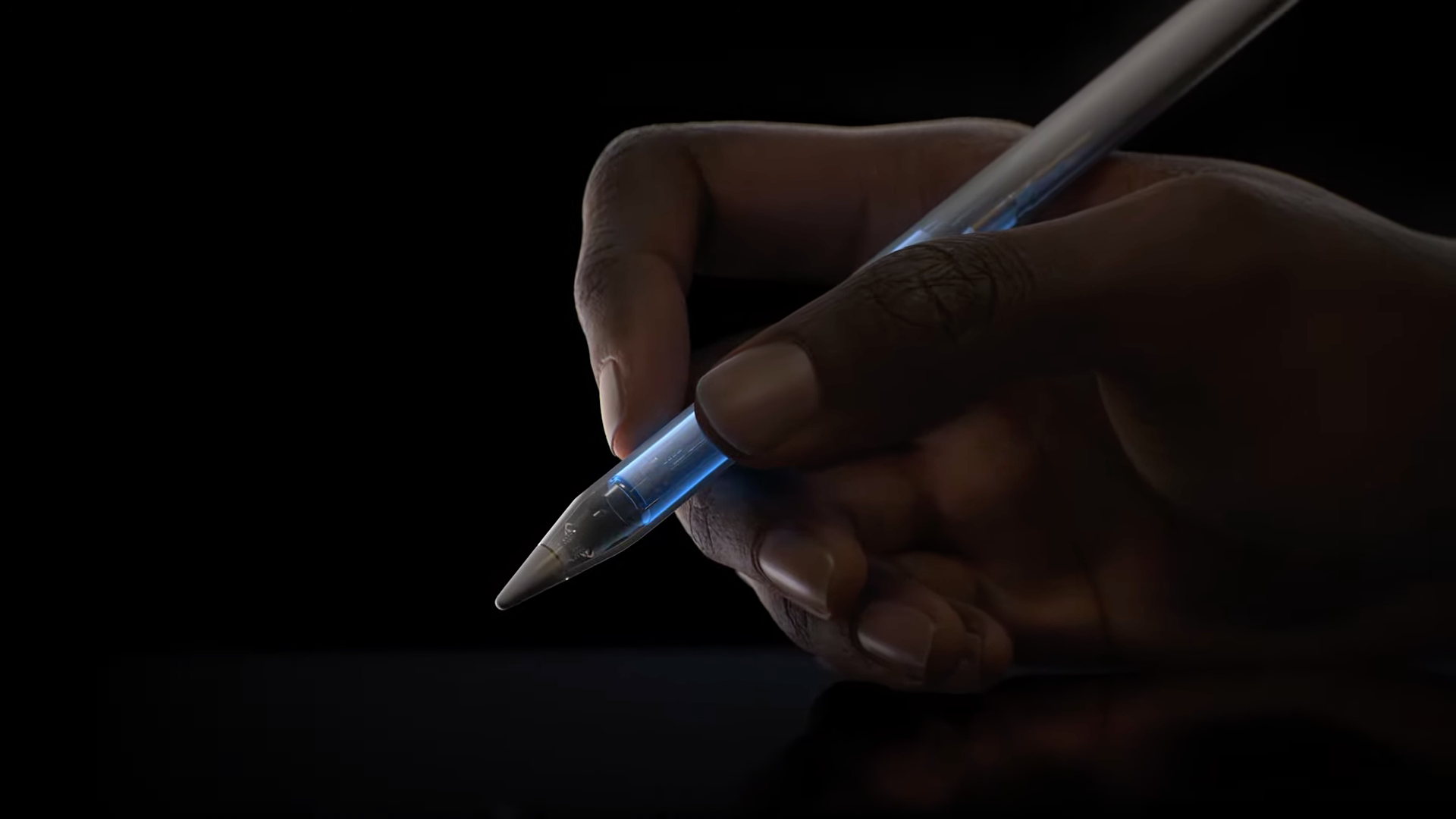

Placing the S95D and MZ1500 side-by-side, I used demo footage from the Spears & Munsil UHD Benchmark Blu-ray to test both sets with images of varying brightness. With footage that has predominantly black backgrounds, such as the fountain pen above, the advantage of the S95D’s anti-reflection tech was clear.

As can be seen, the lab’s sofa and door are reflected on the MZ1500 (on the left), while no objects at all are visible on the S95D’s screen. Because of the size difference between the two sets (the S95D is 65 inches and the MZ1500 is 55 inches), it wasn’t easy at first to see how the reflections of the overhead lights fared. After I changed the viewing angle, however, the reflections, or lack thereof, became apparent.

On the S95D, reflections were reduced to a ‘haze’: the light source still produced a visible reflection, but the shape of the light itself was diminished. On the MZ1500, however, the light source was obvious, creating a distracting ‘mirror-like’ reflection.

This was also the case with brighter footage, as seen below in a close-up image of a butterfly. Although the reflection is less distracting than with dark footage, the light is still obvious on the MZ1500 (left), while it is obscured on the S95D (right).

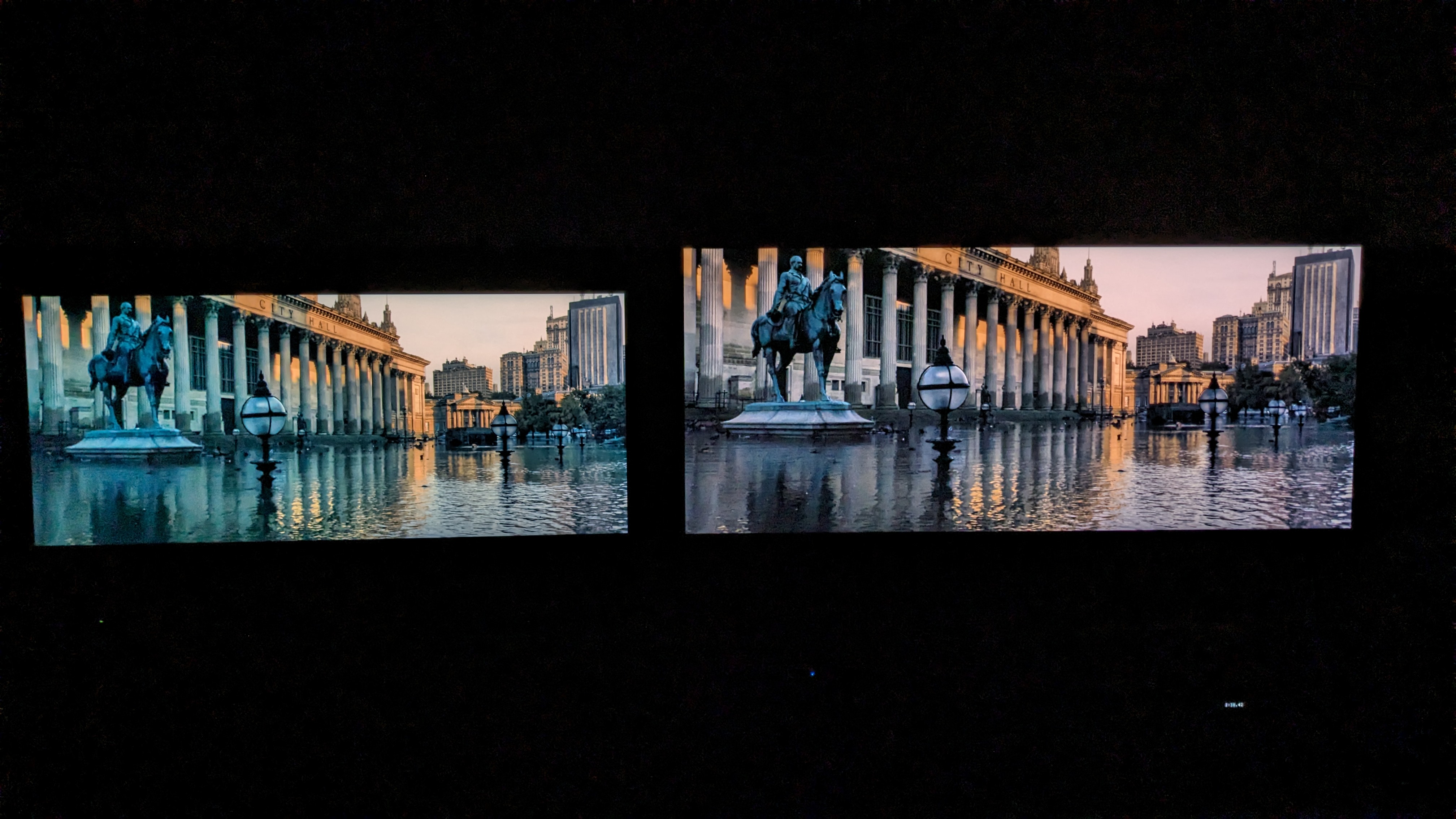

The same held true for movies such as The Batman, which features many scenes with dark tones. With the overhead lights set in turn to the brightest and dimmest levels, the Samsung S95D continued to limit reflections.

Sacrifices are made

One thing that became apparent when I placed the two TVs side-by-side was the effect of the S95D’s matte screen on black levels. Reflections were reduced, but so too was the depth and richness of the S95D’s blacks compared to the MZ1500. Below is an image from the same Spears & Munsil disc of a bright white Ferris wheel against a black night sky.

Interestingly, the dark tones and contrast were still very good on the S95D, but the picture lacked the MZ1500’s punch. Some shadow detail was missing, and the picture even took on a slightly grayish tone. Viewing a scene from The Batman, the overall color palette was different as well. Although that may be down to differences between the TVs themselves (both were in the Movie/Cinema picture mode), I can’t help but wonder if the Samsung TV’s matte screen was at fault.

When I viewed both TVs in pitch-black conditions, which eliminated the possibility of reflections altogether, the S95D had more dynamic color and detail, and its contrast gave the picture incredible depth.

But it’s interesting to note that, in brighter conditions, the MZ1500’s strong contrast gave it the more polished sheen expected from an OLED TV, even if its screen reflections were far worse.

Final thoughts

Although the Panasonic MZ1500 put up a good fight in my comparison, with deep black levels and strong contrast in most of the lab’s lighting conditions, the Samsung S95D ably demonstrated the effectiveness of its OLED Glare Free screen technology, which turned mirror-like reflections into less distracting haze.

For some, screen reflections aren’t an issue, and conventional OLED TVs, which are generally available at lower prices, will continue to be a fine option. But Samsung’s new anti-reflection tech now makes OLED TVs a viable choice for those who watch in brighter environments. As a bonus, the Samsung S95D has superb all-around picture quality, which takes OLED TV performance to the next level.
