March 14, 1994: Apple introduces the Power Macintosh 7100, a midrange Mac that will become memorable for two reasons.

The first is that it is among the first Macs to use new PowerPC processors. The second is that it results in Apple getting taken to court by astronomer Carl Sagan — not once but twice.

Power Macintosh 7100: A solid Mac

The Power Macintosh 7100 was one of three Macs introduced in March 1994, with the other two being the lower-end Power Macintosh 6100 and the high-end 8100 model.

The Power Mac 7100’s PowerPC processor ran at 66 MHz (a spec that Apple upgraded to 80 MHz in January 1995). The computer’s hard drive ranged between 250MB and 700MB in size. The Mac also sported Apple’s then-standard NuBus card slots and 72-pin paired RAM slots.

The Mac 7100 came in a slightly modified Macintosh IIvx case. (The IIvx was the first Mac to come in a metal case and feature an internal CD-ROM drive.)

Costing between $2,900 and $3,500, the Mac 7100 was a solid piece of hardware that bridged the gap nicely between the low-end consumer 6100 and its higher-end 8100 sibling. It was, for example, perfectly capable of running two monitors. However, it could overheat when performing particularly strenuous tasks such as complex rendering of images or videos.

Photo: Apple

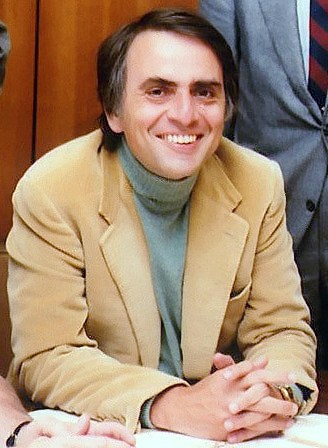

Carl Sagan sues Apple over Power Mac 7100 code name

As many Apple fans will know, the company’s engineers frequently give code names to projects they’re working on, either to maintain secrecy or just for fun. They gave the Power Mac 7100 the code name “Carl Sagan” as a tribute to the famous astronomer.

Unfortunately, the secret in-joke spilled in a 1993 issue of MacWeek that eventually found its way into Sagan’s hands. In a letter to MacWeek, Sagan wrote:

“I have been approached many times over the past two decades by individuals and corporations seeking to use my name and/or likeness for commercial purposes. I have always declined, no matter how lucrative the offer or how important the corporation. My endorsement is not for sale.

For this reason, I was profoundly distressed to see your lead front-page story ‘Trio of Power PC Macs spring toward March release date’ proclaiming Apple’s announcement of a new Mac bearing my name. That this was done without my authorization or knowledge is especially disturbing. Through my attorneys, I have repeatedly requested Apple to make a public clarification that I knew nothing of its intention to capitalize on my reputation in introducing this product, that I derived no benefit, financial or otherwise, from its doing so. Apple has refused. I would appreciate it if you so apprise your readership.”

A new code name: ‘Butt-Head Astronomer’

Forced to change the code name, Apple engineers began calling the project “BHA,” which stood for “Butt-Head Astronomer.”

Sagan then sued Apple over the implication that he was a “butt-head.” The judge overseeing the matter made the following statement:

“There can be no question that the use of the figurative term ‘butt-head’ negates the impression that Defendant was seriously implying an assertion of fact. It strains reason to conclude the Defendant was attempting to criticize Plaintiff’s reputation of competency as an astronomer. One does not seriously attack the expertise of a scientist using the undefined phrase ‘butt-head.’”

Still, Apple’s legal team asked the engineers to change the code name once more. They picked “LAW” — standing for “Lawyers Are Wimps.”

Sagan appealed the judge’s decision. Eventually, in late 1995, the famous astronomer reached a settlement with Apple. From that point on, Cupertino appears to have used only benign code names related to activities like skiing.

Do you remember the Power Macintosh 7100? Leave your comments below.
