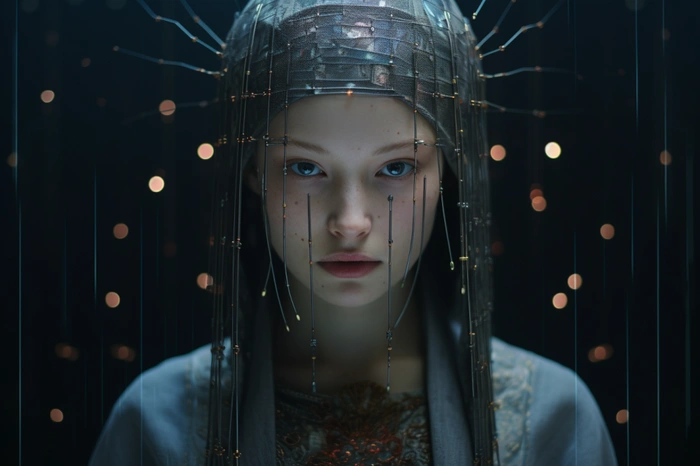

AI artists looking for more precise control over image creation in Stable Diffusion may be interested in learning how to use ControlNet, a neural network model that has changed the way artists generate and manipulate images. It allows users to copy compositions or human poses from a reference image, giving a degree of control that text prompts alone do not offer. This article looks at how to use ControlNet, focusing on the image prompt adapter (IP-Adapter) and how it can be used to customize AI images.

ControlNet is a neural network model designed to control Stable Diffusion models. It adds an extra layer of conditioning on top of the text prompt, which on its own is the most basic way of using SDXL models. This extra conditioning can take various forms, such as a pose or a reference image, allowing users to steer AI-generated images more precisely. The image prompt adapter in ControlNet can be used to create a person and a background around an AI-generated face, to change the age, hair type and hair color of a person in a photo, or to alter elements in digital art.
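As a rough illustration of what "extra conditioning" means, the toy sketch below adds a control signal to the output of a frozen block through a connection whose weight starts at zero, which is how the ControlNet paper describes attaching its control branch. The functions `frozen_block`, `control_branch` and `controlled_block` are hypothetical stand-ins that work on plain lists of numbers; they are not real Stable Diffusion layers.

```python
from typing import List

Vector = List[float]


def frozen_block(x: Vector) -> Vector:
    """Toy stand-in for a frozen Stable Diffusion block (doubles each value)."""
    return [2.0 * v for v in x]


def control_branch(x: Vector, hint: Vector) -> Vector:
    """Toy stand-in for the trainable copy, which also sees the control hint."""
    return [2.0 * (v + h) for v, h in zip(x, hint)]


def controlled_block(x: Vector, hint: Vector, zero_weight: float) -> Vector:
    """Frozen output plus the control residual, scaled by a weight that starts at 0."""
    base = frozen_block(x)
    residual = control_branch(x, hint)
    return [b + zero_weight * r for b, r in zip(base, residual)]


features = [1.0, -0.5, 0.25]   # hypothetical image features
pose_hint = [0.0, 1.0, 1.0]    # hypothetical control signal, e.g. from a pose map

# With the weight at zero the control branch has no effect at all.
untrained = controlled_block(features, pose_hint, zero_weight=0.0)

# Once the weight moves away from zero, the hint starts to shift the output.
trained = controlled_block(features, pose_hint, zero_weight=0.5)

print(untrained)
print(trained)
```

Starting from a zero weight means the base model's behaviour is unchanged until the control branch has learned something useful, so the extra conditioning is layered on top of the text prompt rather than replacing it.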

How to customise AI art with SDXL and ControlNet

ControlNet and its image prompt adapter provide a powerful tool for manipulating and generating AI images. Whether you’re looking to change elements in digital art, regenerate AI images, or create a whole body and environment from a face image, ControlNet offers a level of precision and control that was previously unattainable. With the right knowledge and tools, the possibilities for image manipulation and generation are virtually limitless.

To use the image prompt adapter with ControlNet, users need to download three IP-Adapter models from Hugging Face, as well as the IP-Adapter Plus Face model. IP-Adapter is an image prompt model for text-to-image diffusion models such as Stable Diffusion, and it can be used in combination with other ControlNet models.
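The download step can be sketched as a small script that builds direct links to the model files. The article does not name the repository or the files, so the repository ID `h94/IP-Adapter` and the four SDXL file names below are assumptions; check them against the model page before downloading. Only the `resolve/<revision>/<path>` URL pattern is standard Hugging Face behaviour.

```python
from urllib.parse import quote

HF_BASE = "https://huggingface.co"

# Assumption: the IP-Adapter weights live in this repository.
REPO_ID = "h94/IP-Adapter"

# Assumption: three SDXL IP-Adapter files plus the "plus face" variant.
SDXL_FILES = [
    "sdxl_models/ip-adapter_sdxl.safetensors",
    "sdxl_models/ip-adapter_sdxl_vit-h.safetensors",
    "sdxl_models/ip-adapter-plus_sdxl_vit-h.safetensors",
    "sdxl_models/ip-adapter-plus-face_sdxl_vit-h.safetensors",
]


def resolve_url(repo_id: str, path: str, revision: str = "main") -> str:
    """Build a direct download URL for one file in a Hugging Face repository."""
    return f"{HF_BASE}/{repo_id}/resolve/{revision}/{quote(path)}"


download_urls = [resolve_url(REPO_ID, path) for path in SDXL_FILES]

for url in download_urls:
    print(url)
```

The printed links can be opened in a browser or passed to any download tool; where the files need to be saved depends on the Stable Diffusion interface being used.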

The workflow for using the IP adapter model involves regenerating a reference AI image in SDXL and adding elements to the final image using positive prompts. This process allows users to change elements in digital art using ControlNet. For instance, users can use inpainting to change the hair of a base AI image and inpaint the face from another base image. The technique also provides a level of control over the subject's body and face angle, and it can be used to change the subject of an image without inpainting.
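The article does not say which interface it uses, so the sketch below swaps in the Hugging Face `diffusers` library to show the same idea: a reference image steers the generation while a positive prompt adds new elements. The helper names `compose_prompt` and `regenerate_with_reference`, the model and repository IDs, the weight file name, and the default scale and step count are all assumptions for illustration, and running the generation needs a CUDA GPU plus the `torch` and `diffusers` packages.

```python
from typing import List


def compose_prompt(subject: str, additions: List[str]) -> str:
    """Join the subject with the extra elements to add (hypothetical helper)."""
    parts = [subject.strip()] + [a.strip() for a in additions if a.strip()]
    return ", ".join(parts)


def regenerate_with_reference(reference_path: str, prompt: str, scale: float = 0.6):
    """Regenerate a reference image in SDXL, guided by an IP-Adapter and a prompt."""
    # Heavy imports stay inside the function so the helper above works without them.
    import torch
    from diffusers import AutoPipelineForText2Image
    from diffusers.utils import load_image

    # Assumption: the SDXL base checkpoint; any SDXL checkpoint could be used instead.
    pipe = AutoPipelineForText2Image.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16,
    ).to("cuda")

    # Assumption: repository, subfolder and weight file name of the IP-Adapter.
    pipe.load_ip_adapter(
        "h94/IP-Adapter",
        subfolder="sdxl_models",
        weight_name="ip-adapter_sdxl.bin",
    )

    # Higher values follow the reference image more closely, lower values the prompt.
    pipe.set_ip_adapter_scale(scale)

    reference = load_image(reference_path)
    result = pipe(prompt=prompt, ip_adapter_image=reference, num_inference_steps=30)
    return result.images[0]


# Building the positive prompt needs no GPU.
prompt = compose_prompt("portrait of a woman", ["red scarf", " snowy street ", ""])
print(prompt)
```

Calling `regenerate_with_reference("reference.png", prompt)` with a local reference image (the file name here is a placeholder) would return a PIL image that can be saved with `.save("output.png")`.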

ControlNet models can also be used in combination with other models. For example, the ReV Animated checkpoint can be used to take an AI-generated vector image of a house and regenerate it as anime-style art. The same technique can be used to place the artwork in different environments and weather conditions.

One of the most powerful features of ControlNet is the ability to create a whole body and environment from a face image. This is achieved by using the Plus Face model together with a second ControlNet image that uses OpenPose. This gives users more control over the subject's body and face angle, allowing them to create more realistic and detailed images. To learn more about ControlNet and how to install it, jump over to the Stability AI website.
