[ad_1]

Google celebró la sesión magistral de su conferencia anual centrada en desarrolladores E/S de Google El evento es el martes. Durante la sesión, el gigante tecnológico se centró en gran medida en los nuevos desarrollos en inteligencia artificial (IA) e introdujo muchos modelos nuevos de inteligencia artificial, así como nuevas características a la infraestructura existente. Uno de los aspectos más destacados fue la introducción de una ventana contextual de 2 millones de tokens para Gemini 1.5 Pro, que actualmente está disponible para los desarrolladores. Versión más rápida de mellizo Además del modelo Small Model Language (SML) de próxima generación de Google, también se presentó Gemma 2.

El evento fue iniciado por el CEO Sundar Pichai, quien hizo uno de los anuncios más importantes de la noche: la disponibilidad de una ventana contextual de 2 millones de tokens para Gemini 1.5 Pro. La compañía introdujo una ventana contextual que contiene 1 millón de tokens a principios de este año, pero hasta ahora solo ha estado disponible para los desarrolladores. Google Ahora está disponible de forma general en versión preliminar pública y se puede acceder a él a través de Google AI Studio y Vertex AI. En cambio, la ventana de contexto de 2 millones de tokens está disponible exclusivamente a través de la cola para los desarrolladores que utilizan la API y para los clientes de Google Cloud.

Con una ventana contextual de 2 millones, afirma Google, el modelo de IA puede procesar dos horas de vídeo, 22 horas de audio, más de 60.000 líneas de código o más de 1,4 millones de palabras de una sola vez. Además de mejorar la comprensión contextual, el gigante tecnológico también ha mejorado la generación de código, el pensamiento lógico, la planificación y la conversación de varios turnos de Gemini 1.5 Pro, así como la comprensión de imágenes y audio. El gigante tecnológico también está integrando el modelo de IA en sus aplicaciones Gemini Advanced y Workspace.

Google también ha presentado una nueva incorporación a su familia de modelos Gemini AI. El nuevo modelo de IA, llamado Gemini 1.5 Flash, es un modelo liviano diseñado para ser más rápido, con mayor capacidad de respuesta y rentable. El gigante tecnológico dijo que trabajó para mejorar el tiempo de respuesta para mejorar su velocidad. Aunque resolver tareas complejas no será su fuerte, puede manejar tareas como resúmenes, aplicaciones de chat, subtítulos de imágenes y videos, extracción de datos de documentos y tablas extensos, y más.

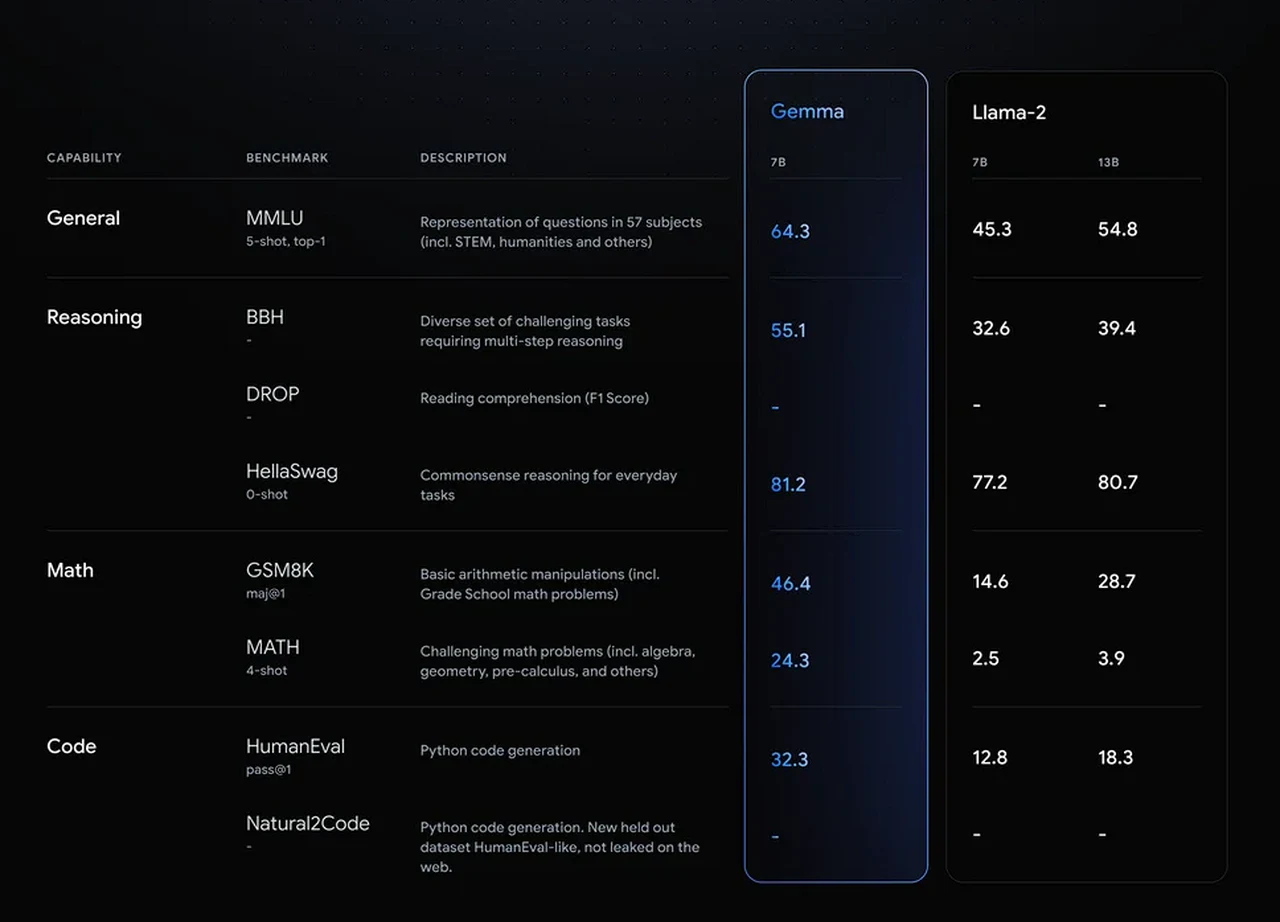

Finalmente, el gigante tecnológico anunció su próxima generación de modelos de IA más pequeños, Gemma 2. El modelo viene con 27 mil millones de parámetros pero puede funcionar de manera eficiente en GPU o una sola TPU. Google afirma que Gemma 2 supera a los modelos que duplican su tamaño. La empresa aún no ha anunciado sus resultados récord.

[ad_2]

Source Article Link