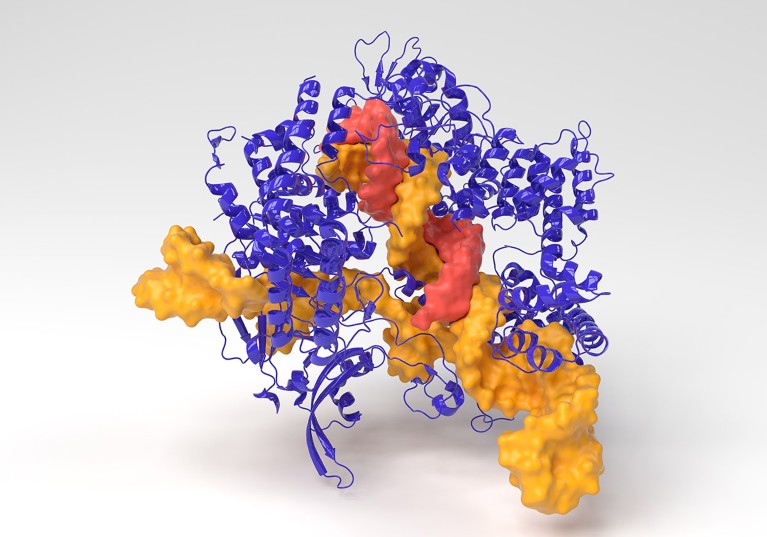

A 3D model of the CRISPR-Cas9 gene editing complex from Streptococcus pyogenes. Credit: Indigo Molecular Images/Science Photo Library

In the never-ending quest to discover previously unknown CRISPR gene-editing systems, researchers have scoured microbes in everything from hot springs and peat bogs to poo and even yogurt. Now, thanks to advances in generative artificial intelligence (AI), they might be able to design these systems with the push of a button.

This week, researchers published details of how they used a generative AI tool called a protein language model — a neural network trained on millions of protein sequences — to design CRISPR gene-editing proteins, and were then able to show that some of these systems work as expected in the laboratory1.

And in February, another team announced that it had developed a model trained on microbial genomes, and used it to design fresh CRISPR systems, which consist of a DNA- or RNA-cutting enzyme and RNA molecules that direct the molecular scissors to where they should cut2.

“It’s really just scratching the surface. It’s showing that it’s possible to design these complex systems with machine-learning models,” says Ali Madani, a machine-learning scientist and chief executive of the biotechnology firm Profluent, based in Berkeley, California. Madani’s team reported what it says is “the first successful editing of the human genome by proteins designed entirely with machine learning” in a 22 April preprint1 on bioRxiv.org (which hasn’t been peer-reviewed).

Alan Wong, a synthetic biologist at the University of Hong Kong, whose team has used machine learning to optimize CRISPR3, says that naturally occurring gene-editing systems have limitations in terms of the sequences that they can target and the sort of changes that they can make. For some applications, therefore, it can be a challenge to find the right CRISPR. “Expanding the repertoire of editors, using AI, could help,” he says.

Trained on genomes

Whereas chatbots such as ChatGPT are designed to handle language after being trained on existing text, the CRISPR-designing AIs were instead trained on vast troves of biological data in the form of protein or genome sequences. The goal of this ‘pre-training’ step is to imbue the models with insight into naturally occurring genetic sequences, such as which amino acids tend to go together. This information can then be applied to tasks such as the creation of totally new sequences.

Madani’s team previously used a protein language model they developed, called ProGen, to come up with new antibacterial proteins4. To devise new CRISPRs, his team retrained an updated version of ProGen with examples of millions of diverse CRISPR systems, which bacteria and other single-celled microbes called archaea use to fend off viruses.

Because CRISPR gene-editing systems comprise not only proteins, but also RNA molecules that specify their target, Madani’s team developed another AI model to design these ‘guide RNAs’.

The team then used the neural network to design millions of new CRISPR protein sequences that belong to dozens of different families of such proteins found in nature. To see whether AI-designed CRISPRs were bona fide gene editors, Madani’s team synthesized DNA sequences corresponding to more than 200 protein designs belonging to the CRISPR–Cas9 system that is now widely used in the laboratory. When they inserted these sequences — instructions for a Cas9 protein and a ‘guide RNA’ — into human cells, many of the gene editors were able to precisely cut their intended targets in the genome.

The most promising Cas9 protein — a molecule they’ve named OpenCRISPR-1 — was just as efficient at cutting targeted DNA sequences as a widely used bacterial CRISPR–Cas9 enzyme, and it made far fewer cuts in the wrong place. The researchers also used the OpenCRISPR-1 design to create a base editor — a precision gene-editing tool that changes individual DNA ‘letters’ — and found that it, too, was as efficient as other base-editing systems, as well as less prone to errors.

Another team, led by Brian Hie, a computational biologist at Stanford University in California, and by bioengineer Patrick Hsu at the Arc Institute in Palo Alto, California, used an AI model capable of generating both protein and RNA sequences. Their model, called EVO, was trained on 80,000 genomes from bacteria and archaea, as well as other microbial sequences, amounting to 300 billion DNA letters. Hie and Hsu’s team has not yet tested its designs in the lab. But predicted structures of some of the CRISPR–Cas9 systems they designed resemble those of natural proteins. Their work was described in a preprint2 posted on bioRxiv.org, and has not been peer-reviewed.

Precision medicine

“This is amazing,” says Noelia Ferruz Capapey, a computational biologist at the Molecular Biology Institute of Barcelona in Spain. She’s impressed by the fact that researchers can use the OpenCRISPR-1 molecule without restriction, unlike with some patented gene-editing tools. The ProGen2 model and ‘atlas’ of CRISPR sequences used to fine-tune it are also freely available.

The hope is that AI-designed gene-editing tools could be better suited to medical applications than are existing CRISPRs, says Madani. Profluent, he adds, is hoping to partner with companies that are developing gene-editing therapies to test AI-generated CRISPRs. “It really necessitates precision and a bespoke design. And I think that just can’t be done by copying and pasting” from naturally occurring CRISPR systems, he says.
