If you’ve been enjoying creating art with Stable Diffusion or one of the other AI models, such as Midjourney or DALL-E 3 (recently added to ChatGPT by OpenAI and available to users for free via the Microsoft Image Creator website), you might be interested in a new workflow created by Laura Carnevali that combines Stable Diffusion, ComfyUI and multiple ControlNet models.

Stable Diffusion XL (SDXL), created by the development team at Stability AI, is well known for its image generation capabilities. While SDXL alone is impressive, pairing it with ComfyUI noticeably improves the user experience. ComfyUI is a toolkit for anyone who wants to dabble in image generation, providing an array of features that make the process more accessible, streamlined, and customizable.

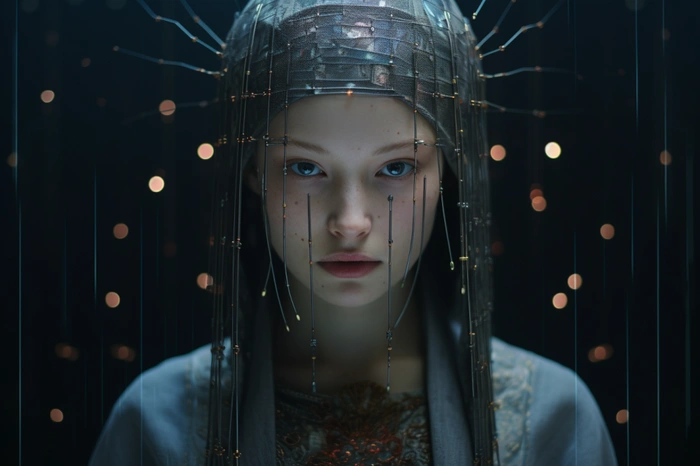

AI art generation using Stable Diffusion, ComfyUI and ControlNet

ComfyUI operates on a nodes/graph/flowchart interface, where users can experiment and create complex workflows for their SDXL projects. What sets it apart is that you don’t have to write a single line of code to get started. It fully supports various versions of Stable Diffusion, including SD1.x, SD2.x, and SDXL, making it a versatile tool for any project.

SDXL offers many ways to modify and enhance your art. From inpainting, which lets you regenerate selected regions inside an image, to outpainting, which extends the canvas beyond its original borders, and image-to-image transformations, the platform is designed for flexibility. Yet it’s ComfyUI that provides the sandbox environment for experimentation and control.

ComfyUI node-based GUI for Stable Diffusion

The system is designed for efficiency, incorporating an asynchronous queue system that improves the speed of execution. One of its standout features is its optimization capability: it only re-executes the parts of the workflow that changed between runs, saving both time and computational power. If you are resource-constrained, ComfyUI offers a low-VRAM command-line option (`--lowvram`), making it compatible with GPUs that have less than 3 GB of VRAM. The system can also run on a CPU alone (`--cpu`), although at a slower speed.
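
To make the idea of re-executing only the changed parts of a workflow more concrete, here is a minimal sketch in Python. It is not ComfyUI's actual implementation: the toy graph format, the `OPS` table, and the `node_signature` and `run` helpers are all hypothetical names made up for illustration. Each node is hashed together with its upstream nodes, and a result is reused whenever that hash has been seen before.

```python
import hashlib
import json

# Hypothetical toy operations; a real workflow would load models, sample, decode, etc.
OPS = {
    "const": lambda params, inputs: params["value"],
    "add": lambda params, inputs: sum(inputs),
    "scale": lambda params, inputs: inputs[0] * params["factor"],
}


def node_signature(graph, node_id, memo):
    """Hash a node's op, its params, and the signatures of everything upstream."""
    if node_id in memo:
        return memo[node_id]
    node = graph[node_id]
    payload = json.dumps(
        {
            "op": node["op"],
            "params": node["params"],
            "inputs": [node_signature(graph, i, memo) for i in node["inputs"]],
        },
        sort_keys=True,
    )
    memo[node_id] = hashlib.sha256(payload.encode()).hexdigest()
    return memo[node_id]


def run(graph, target, cache, executed):
    """Evaluate `target`, reusing cached results whose signature is unchanged.

    `cache` persists between runs; `executed` records which nodes actually ran.
    """
    memo = {}

    def evaluate(node_id):
        sig = node_signature(graph, node_id, memo)
        if sig in cache:  # nothing upstream changed, so skip the work
            return cache[sig]
        node = graph[node_id]
        inputs = [evaluate(i) for i in node["inputs"]]
        result = OPS[node["op"]](node["params"], inputs)
        executed.append(node_id)
        cache[sig] = result
        return result

    return evaluate(target)


if __name__ == "__main__":
    graph = {
        "a": {"op": "const", "params": {"value": 2}, "inputs": []},
        "b": {"op": "const", "params": {"value": 3}, "inputs": []},
        "sum": {"op": "add", "params": {}, "inputs": ["a", "b"]},
        "out": {"op": "scale", "params": {"factor": 10}, "inputs": ["sum"]},
    }
    cache, executed = {}, []
    run(graph, "out", cache, executed)        # first run executes every node
    graph["out"]["params"]["factor"] = 100    # change only the last node
    executed.clear()
    run(graph, "out", cache, executed)        # second run re-executes only "out"
    print(executed)
```

Because a node's signature includes the signatures of its inputs, editing an early node invalidates everything downstream of it, while editing a late node leaves the earlier results reusable.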

ComfyUI can load a wide range of models and checkpoints, from standalone VAEs and CLIP models to ckpt, safetensors, and diffusers formats. It also supports embeddings (textual inversion), LoRAs, hypernetworks, and unCLIP models, offering a well-rounded environment for creating and experimenting with AI art.

One of the more intriguing features is the ability to load full workflows directly from generated PNG files. You can also save or load these workflows as JSON files for future use or collaboration. The node interface isn’t limited to simple tasks; you can create intricate workflows for more advanced operations like high-resolution fixes, Area Composition, and even model merging.
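
Loading a workflow from a PNG works because PNG files can carry named text metadata alongside the pixels. The sketch below, using only the Python standard library, reads the `tEXt` chunks of a PNG and parses one of them as JSON. The keyword `"workflow"` is an assumption about how the graph is stored and is not confirmed by this article; the helper names `read_png_text_chunks` and `extract_workflow` are hypothetical, and compressed or international text chunks (`zTXt`, `iTXt`) are not handled.

```python
import json
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict:
    """Return {keyword: text} for every uncompressed tEXt chunk in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    chunks = {}
    pos = len(PNG_SIGNATURE)
    # Each chunk is: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype == b"tEXt":
            # tEXt data is "keyword\0text", both Latin-1 encoded.
            keyword, _, text = body.partition(b"\x00")
            chunks[keyword.decode("latin-1")] = text.decode("latin-1")
        if ctype == b"IEND":
            break
        pos += 12 + length  # skip length + type + data + CRC (CRC is not verified)
    return chunks


def extract_workflow(data: bytes, key: str = "workflow"):
    """Parse the JSON stored under `key` (an assumed keyword), or return None."""
    text = read_png_text_chunks(data).get(key)
    return json.loads(text) if text is not None else None
```

A typical call would be `extract_workflow(open("image.png", "rb").read())`, where `image.png` stands in for any generated image; the result can then be written out with `json.dump` to share the workflow as a standalone JSON file.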

ComfyUI doesn’t fall short when it comes to image quality enhancements. It supports a range of upscale models such as ESRGAN and its variants, SwinIR, and Swin2SR, among others. It also allows inpainting with both regular and specialized inpainting models. Additional utilities like ControlNet, T2I-Adapter, and latent previews with TAESD give you finer control over the result.

On top of all these features, ComfyUI starts up incredibly quickly and operates fully offline, ensuring that your workflow remains uninterrupted. The marriage between Stable Diffusion XL and ComfyUI offers a comprehensive, user-friendly platform for AI-based art generation. It blends technological sophistication with ease of use, catering to both novices and experts in the field. The versatility and depth of features available in ComfyUI make it a must-try for anyone serious about the craft of image generation.
