The National Science Library of the Chinese Academy of Sciences in Beijing. Credit: Yang Qing/Imago via Alamy

China has updated its list of journals that are deemed to be untrustworthy, predatory or not serving the Chinese research community’s interests. Called the Early Warning Journal List, the latest edition, published last month, includes 24 journals from about a dozen publishers. For the first time, it flags journals that show signs of citation manipulation, a form of misconduct in which authors try to inflate their citation counts.

Yang Liying studies scholarly literature at the National Science Library, Chinese Academy of Sciences, in Beijing. She leads a team of about 20 researchers who produce the annual list, which was launched in 2020 and relies on insights from the global research community and analysis of bibliometric data.

The list is becoming increasingly influential. It is referenced in notices sent out by Chinese ministries to address academic misconduct, and is widely shared on institutional websites across the country. Journals included in the list typically see submissions from Chinese authors drop. This is the first year the team has revised its method for developing the list; Yang speaks to Nature about the process, and what has changed.

How do you go about creating the list every year?

We start by collecting feedback from Chinese researchers and administrators, and we follow global discussions on new forms of misconduct to determine the problems to focus on. In January, we analyse raw data from the science-citation database Web of Science, provided by the publishing-analytics firm Clarivate, based in London, and prepare a preliminary list of journals. We share this with relevant publishers, and explain why their journals could end up on the list.

Sometimes publishers give us feedback and make a case against including their journal. If their response is reasonable, we will remove it. We appreciate suggestions to improve our work. We never see the journal list as a perfect one. This year, discussions with publishers cut the list from around 50 journals down to 24.

Yang Liying studies scholarly literature at the National Science Library and manages a team of about 20 researchers that puts together the Early Warning Journal List. Credit: Yang Liying

What changes did you make this year?

In previous years, journals were categorized as being high, medium or low risk. This year, we didn’t report risk levels because we removed the low-risk category, and we also realized that Chinese researchers ignore the risk categories and simply avoid journals on the list altogether. Instead, we provided an explanation of why the journal is on the list.

In previous years, we included journals with publication numbers that increased very rapidly. For example, if a journal published 1,000 articles one year and then 5,000 the next year, our initial logic was that it would be hard for these journals to maintain their quality-control procedures. We have removed this criterion this year. The shift towards open access has meant that it is possible for journals to receive a large number of manuscripts, and therefore rapidly increase their article numbers. We don’t want to disturb this natural process decided by the market.

You also introduced journals with abnormal patterns of citation. Why?

We noticed that there has been a lot of discussion on the subject among researchers around the world. It’s hard for us to say whether the problem comes from the journals or from the authors themselves. Sometimes groups of authors agree to this citation manipulation mutually, or they use paper mills, which produce fake research papers. We identify these journals by looking for trends in citation data provided by Clarivate — for example, journals in which manuscript references are highly skewed to one journal issue or articles authored by a few researchers. Next year, we plan to investigate new forms of citation manipulation.
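As a rough illustration of the kind of skew described above, the sketch below measures how concentrated a set of references is on a single target. It is not the team's actual method. The function name `top_target_share`, the sample data and the 0.5 cut-off are all hypothetical and chosen only for demonstration.

```python
from collections import Counter


def top_target_share(cited_targets):
    """Return (most-cited target, share of all references pointing to it)."""
    if not cited_targets:
        return None, 0.0
    counts = Counter(cited_targets)
    target, n = counts.most_common(1)[0]
    return target, n / len(cited_targets)


# Hypothetical data: each entry names the journal issue that one reference cites.
refs = ["J-A 12(3)"] * 8 + ["J-B 4(1)", "J-C 7(2)"]

target, share = top_target_share(refs)

# Illustrative cut-off only; the interview does not state any threshold.
SKEW_THRESHOLD = 0.5
flagged = share > SKEW_THRESHOLD
```

In this made-up sample, eight of ten references point to the same issue, so the sketch would mark it for a closer look. The same counting could be applied to cited authors instead of journal issues.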

Our work seems to have an impact on publishers. Many publishers have thanked us for alerting them to the issues in their journals, and some have initiated their own investigations. One example from this year is the open-access publisher MDPI, based in Basel, Switzerland, which we informed that four of its journals would be included in our list because of citation manipulation. Perhaps it is unrelated, but on 13 February, MDPI sent out a notice that it was looking into potential reviewer misconduct involving unethical citation practices in 23 of its journals.

You also flag journals that publish a high proportion of papers from Chinese researchers. Why is this a concern?

This is not a criterion we use on its own. These journals publish — sometimes almost exclusively — articles by Chinese researchers, charge unreasonably high article processing fees and have a low citation impact. From a Chinese perspective, this is a concern because we are a developing country and want to make good use of our research funding to publish our work in truly international journals to contribute to global science. If scientists publish in journals where almost all the manuscripts come from Chinese researchers, our administrators will suggest that instead the work should be submitted to a local journal. That way, Chinese researchers can read it and learn from it quickly and don’t need to pay so much to publish it. This is a challenge that the Chinese research community has been confronting in recent years.

How do you determine whether a journal has a paper-mill problem?

My team collects information posted on social media as well as websites such as PubPeer, where users discuss published articles, and the research-integrity blog For Better Science. We currently don’t do the image or text checks ourselves, but we might start to do so later.

My team has also created an online database of questionable articles called Amend, which researchers can access. We collect information on article retractions, notices of concern, corrections and articles that have been flagged on social media.

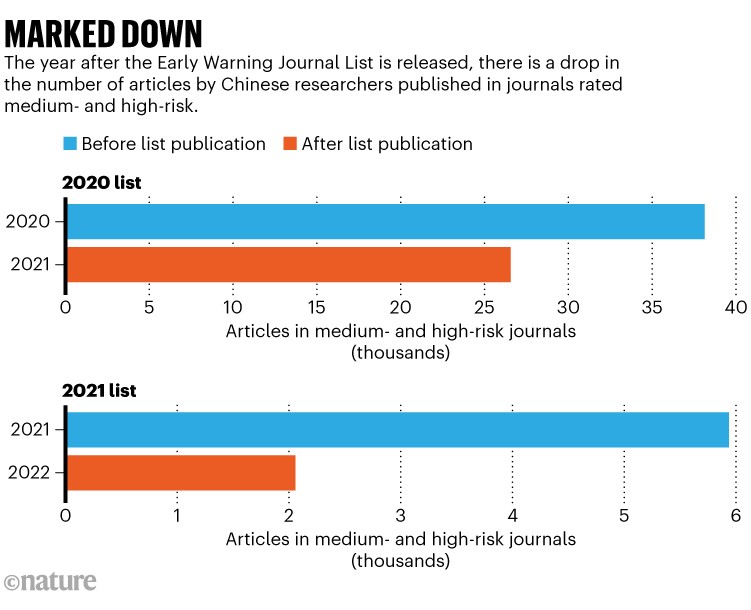

Source: Early Warning Journal List

What impact has the list had on research in China?

This list has benefited the Chinese research community. Most Chinese research institutes and universities reference our list, but they can also develop their own versions. Every year, we receive criticisms from some researchers for including journals that they publish in. But we also receive a lot of support from those who agree that the journals included on the list are of low quality, which hurts the Chinese research ecosystem.

There have been a lot of retractions from China in journals on our list. And once a journal makes it on to the list, submissions from Chinese researchers typically drop (see ‘Marked down’). This explains why many journals on our list are excluded the following year — this is not a cumulative list.

This interview has been edited for length and clarity.
