The advent and rapid growth of artificial intelligence (AI) have brought about a significant shift in the technology landscape. One area experiencing this transformation is cloud computing, where traditional Ethernet-based cloud networks are being challenged by the computational requirements of modern AI workloads. This has led to the emergence of SuperNICs, a new class of network accelerators specifically designed to enhance AI workloads in Ethernet-based clouds.

SuperNICs, or Super Network Interface Cards, have features that set them apart from traditional network interface cards (NICs). These include high-speed packet reordering, advanced congestion control, programmable compute on the I/O path, power-efficient design, and full-stack AI optimization. Together, these features provide high-speed network connectivity for GPU-to-GPU communication, with speeds of up to 400 Gb/s using remote direct memory access (RDMA) over Converged Ethernet (RoCE).
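To put the 400 Gb/s figure in perspective, the short Python sketch below estimates the best-case time to move a buffer between two GPUs at full line rate. The function name `ideal_transfer_seconds` and the 10 GB payload are hypothetical, chosen only for illustration, and the calculation ignores protocol overhead, congestion, and latency, so real transfers will take longer.

```python
def ideal_transfer_seconds(payload_bytes: int, link_gbps: float = 400.0) -> float:
    """Lower bound on transfer time at full line rate.

    Assumes decimal units (1 Gb = 1e9 bits) and ignores protocol
    overhead, congestion, and latency.
    """
    payload_bits = payload_bytes * 8
    return payload_bits / (link_gbps * 1e9)


# Hypothetical payload: 10 GB (decimal gigabytes) of data to exchange.
payload = 10 * 10**9

print(f"{ideal_transfer_seconds(payload):.3f} s at 400 Gb/s")         # 0.200 s
print(f"{ideal_transfer_seconds(payload, 100.0):.3f} s at 100 Gb/s")  # 0.800 s
```

Under these idealized assumptions, the same payload takes four times as long on a 100 Gb/s link as on a 400 Gb/s link.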

The capabilities of SuperNICs are particularly crucial in the current AI landscape, where the advent of generative AI and large language models has imposed unprecedented computational demands. Traditional Ethernet and foundational NICs, which were not designed with these needs in mind, struggle to keep up. SuperNICs, on the other hand, are purpose-built for these modern AI workloads, offering efficient data transfer, low latency, and deterministic performance.

What is a SuperNIC and why does it matter?

SuperNICs are often compared with data processing units (DPUs). While DPUs offer high-throughput, low-latency network connectivity, SuperNICs go a step further by being specifically optimized for accelerating networks for AI. This optimization is evident in the 1:1 ratio between GPUs and SuperNICs within a system, a design choice intended to give each GPU its own network path and so improve AI workload efficiency.
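The effect of the 1:1 ratio can be illustrated with simple arithmetic. The sketch below is a rough model, not a description of any specific product: the helper `per_gpu_gbps` and the 8-GPU server are hypothetical, and it assumes NIC capacity is shared evenly among the GPUs in a system, with each NIC running at 400 Gb/s.

```python
def per_gpu_gbps(num_gpus: int, num_nics: int, nic_gbps: float = 400.0) -> float:
    """Network bandwidth available to each GPU, assuming the total
    NIC capacity in the system is shared evenly among its GPUs."""
    if num_gpus <= 0 or num_nics <= 0:
        raise ValueError("num_gpus and num_nics must be positive")
    return num_nics * nic_gbps / num_gpus


# Hypothetical 8-GPU server.
print(per_gpu_gbps(num_gpus=8, num_nics=8))  # 1:1 ratio -> 400.0 Gb/s per GPU
print(per_gpu_gbps(num_gpus=8, num_nics=2))  # 4:1 ratio -> 100.0 Gb/s per GPU
```

In this simplified model, pairing every GPU with its own NIC keeps the full link rate available to each GPU instead of dividing it among several.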

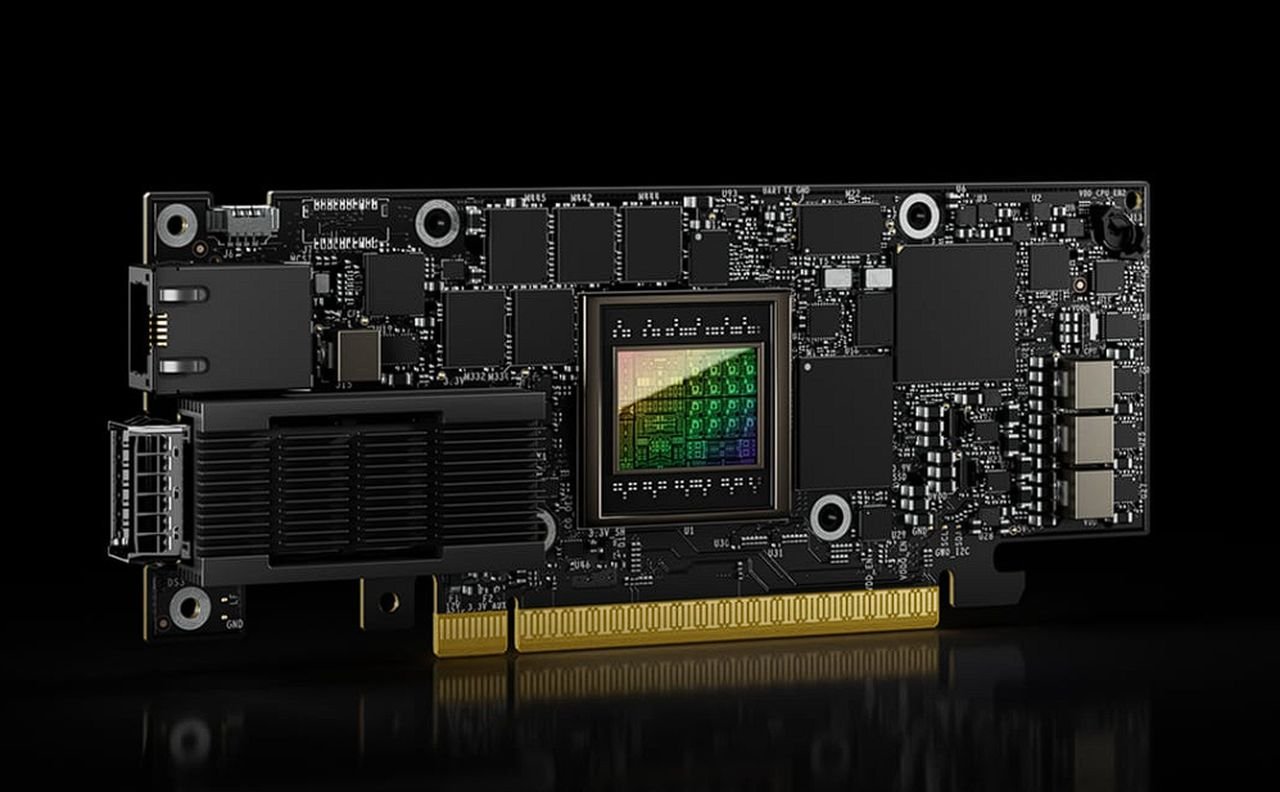

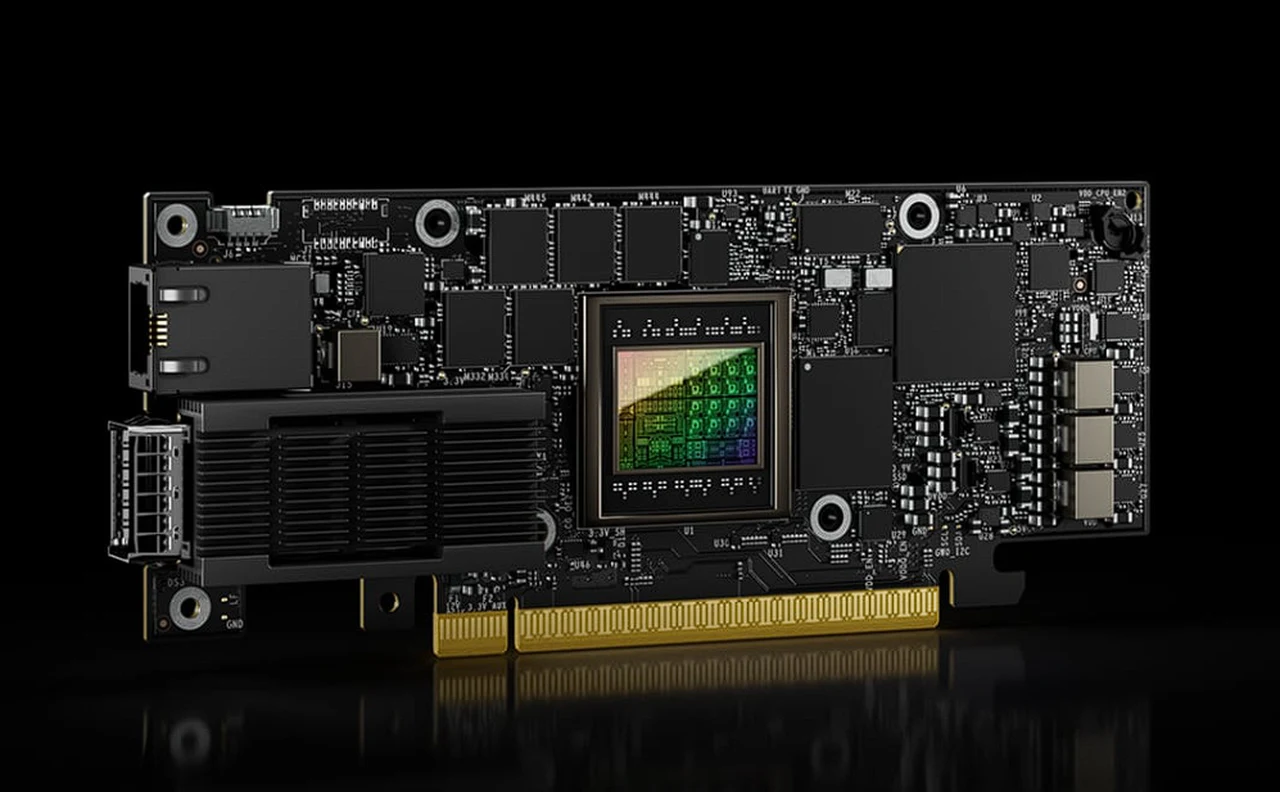

A prime example of this new technology is NVIDIA’s BlueField-3 SuperNIC, which the company describes as the world’s first SuperNIC for AI computing. Based on the BlueField-3 networking platform and integrated with the Spectrum-4 Ethernet switch system, this SuperNIC forms part of an accelerated computing fabric designed to optimize AI workloads.

The NVIDIA BlueField-3 SuperNIC offers several benefits that make it a valuable asset in AI computing environments. It provides peak AI workload efficiency, consistent and predictable performance, and secure multi-tenant cloud infrastructure. Additionally, it offers an extensible network infrastructure and broad server manufacturer support, making it a versatile solution for various AI needs.

The emergence of SuperNICs marks a significant step forward in the evolution of AI cloud computing. By offering high-speed, efficient, and optimized network acceleration, SuperNICs like NVIDIA’s BlueField-3 SuperNIC could change the way AI workloads are handled in Ethernet-based clouds. As the AI field continues to grow and evolve, the role of SuperNICs in supporting that growth is likely to become more prominent.

Image Credit : NVIDIA
