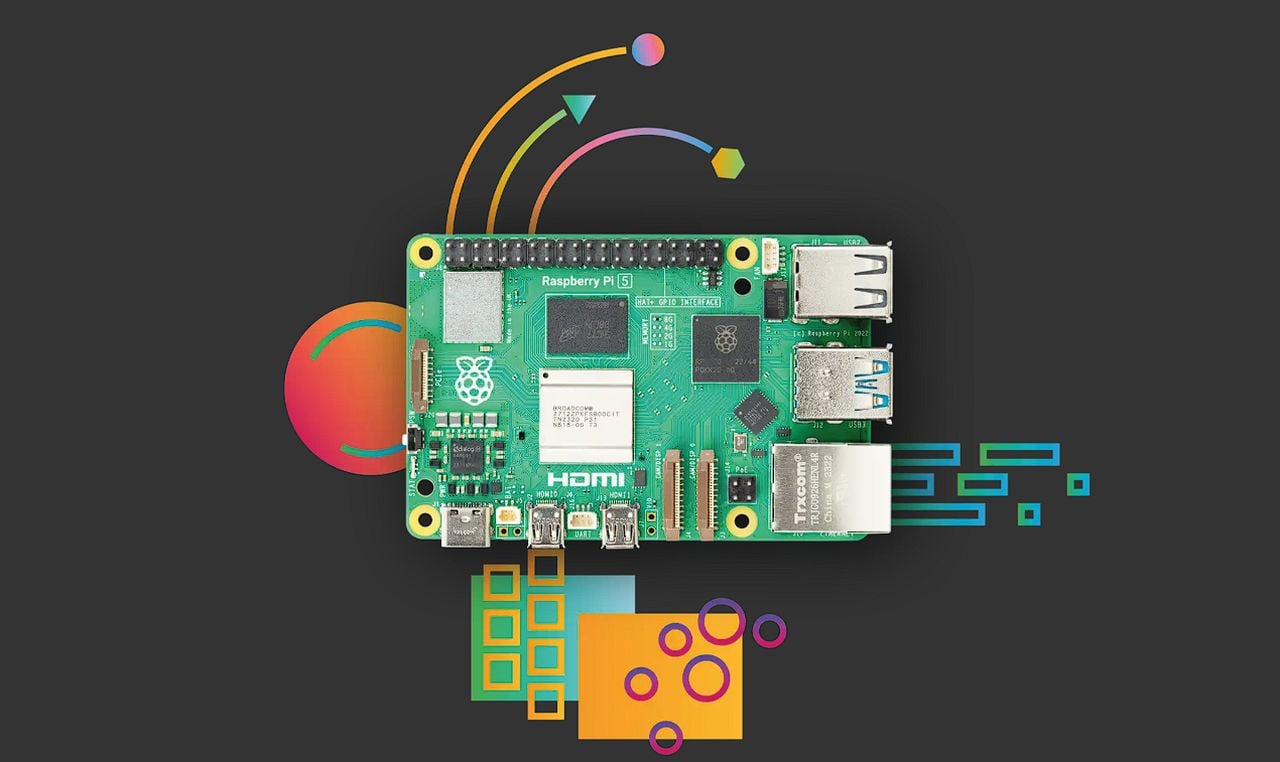

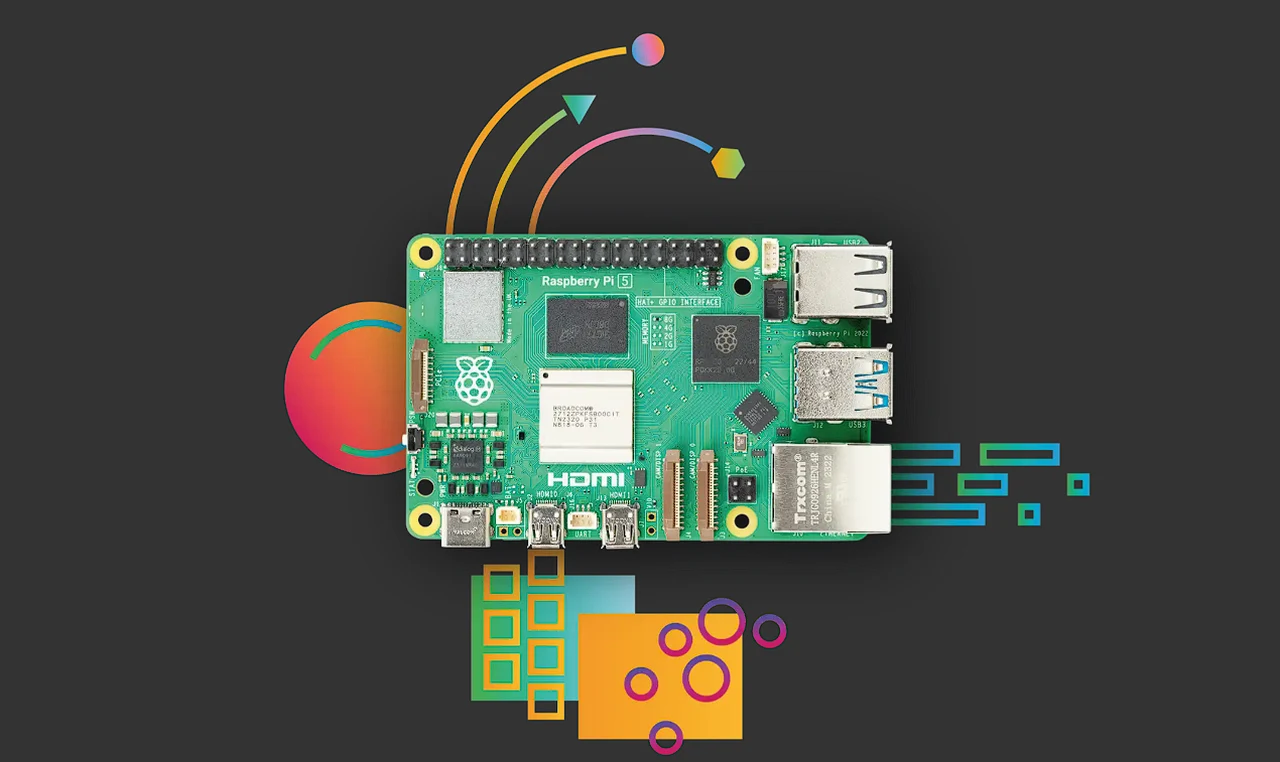

The latest Raspberry Pi mini PC, launched late last year, is more powerful than any of its predecessors and can run applications that were previously out of reach. The Raspberry Pi 5 is built around the RP1 I/O controller, a package containing silicon designed in-house at Raspberry Pi. It even has enough power to run smaller large language models locally, so you can experiment with artificial intelligence (AI) directly on the board. Let’s delve into what the Raspberry Pi 5 can do in the realm of AI, focusing on language models that are a good fit for this hardware.

The Raspberry Pi 5 has made strides in performance, but a model on the scale of OpenAI’s GPT-4 is asking too much of it. Don’t be discouraged, though. Smaller open-source AI models, like Mistral 7B, are within the realm of possibility for those keen to experiment with AI on this powerful pocket-sized mini PC.

If you’re looking for lighter options, consider compact models like Orca and Microsoft’s Phi-2. These alternatives do not have the vast capabilities of GPT-4, but they still offer valuable AI features. They’re especially useful for developers who need to tap into a wide range of knowledge without relying on an internet connection.
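A quick back-of-the-envelope check helps explain why these smaller models are the realistic candidates. The sketch below estimates the memory needed for the model weights alone as parameters × bits per weight ÷ 8. The parameter counts (roughly 7.2 billion for Mistral 7B, 2.7 billion for Phi-2), the 8 GB board, the 4-bit quantization, and the 1.5 GiB headroom for the operating system and runtime are all assumptions chosen for illustration, not figures from this article.

```python
def estimate_weights_gib(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_in_ram(params_billions: float, bits_per_weight: int,
                ram_gib: float, headroom_gib: float = 1.5) -> bool:
    """Rough check: weights plus an assumed headroom for the OS and runtime."""
    return estimate_weights_gib(params_billions, bits_per_weight) + headroom_gib <= ram_gib


# Assumed, approximate parameter counts (in billions) for illustration only.
MODELS = {"Mistral 7B": 7.2, "Phi-2": 2.7}

for name, size in MODELS.items():
    for bits in (16, 4):
        verdict = "fits" if fits_in_ram(size, bits, ram_gib=8.0) else "does not fit"
        print(f"{name} at {bits}-bit: ~{estimate_weights_gib(size, bits):.1f} GiB, {verdict} in 8 GiB")
```

This ignores the extra memory used for context during generation, so treat the result as a lower bound rather than a guarantee.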

How to run AI on a Raspberry Pi 5

To boost the AI capabilities of your Raspberry Pi 5, you might want to look into the Coral USB Accelerator, which adds an Edge TPU coprocessor to your system for just $60. It enables high-speed machine learning inferencing on a wide range of systems simply by plugging into a USB port. These accelerators are designed for edge computing and can significantly speed up some AI tasks. Keep in mind, though, that these TPUs have their own limitations, particularly with larger language models.


Setting up your Raspberry Pi 5 for AI involves a few important steps. You’ll need to install the necessary software, configure the environment, and adjust the model to work with the ARM architecture. Tools like Ollama can help simplify this process, making it more efficient to operate language models on ARM-based devices like the Raspberry Pi 5.
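Once Ollama is installed and running on the Pi, it serves a local HTTP API that scripts can call. The following is a minimal sketch using only the Python standard library. It assumes Ollama is listening on its default local port (11434) and that a model has already been downloaded; the function names `build_generate_request` and `ask` are hypothetical helpers made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request asking Ollama for a single, non-streamed reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(model, prompt)
    # Generation on a Raspberry Pi can be slow, hence the generous timeout.
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.load(reply)
    return body["response"]
```

After pulling a model (for example with `ollama pull mistral`), calling `ask("mistral", "Explain what an I/O controller does.")` should return the model's answer as a string. Because the request never leaves the device, this pattern also works with no internet connection.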

Security and Privacy

One of the major perks of running AI models on a local device is the privacy it provides. By processing data on your Raspberry Pi 5, you keep sensitive information secure and avoid the risks that come with sending data over the internet. This approach is essential when dealing with personal or confidential data.

For more demanding tasks, you can connect multiple Raspberry Pi devices in a cluster to share the computational load. This setup lets you attempt more complex models by pooling the resources of several units.

Local language models are particularly useful in environments with limited or no internet access. Because a broad range of general knowledge is stored in the model itself, you can use AI-driven applications even when you’re offline.

The Raspberry Pi 5 presents an intriguing platform for running smaller AI language models, offering a sweet spot between cost-effectiveness and capability. While it is not a match for top-of-the-line models like GPT-4, the Raspberry Pi 5 provides a compelling opportunity for deploying AI at the edge, whether used on its own, with Coral TPUs, or as part of a cluster. As technology advances, the prospect of running powerful AI models on widely accessible devices is becoming increasingly tangible.
