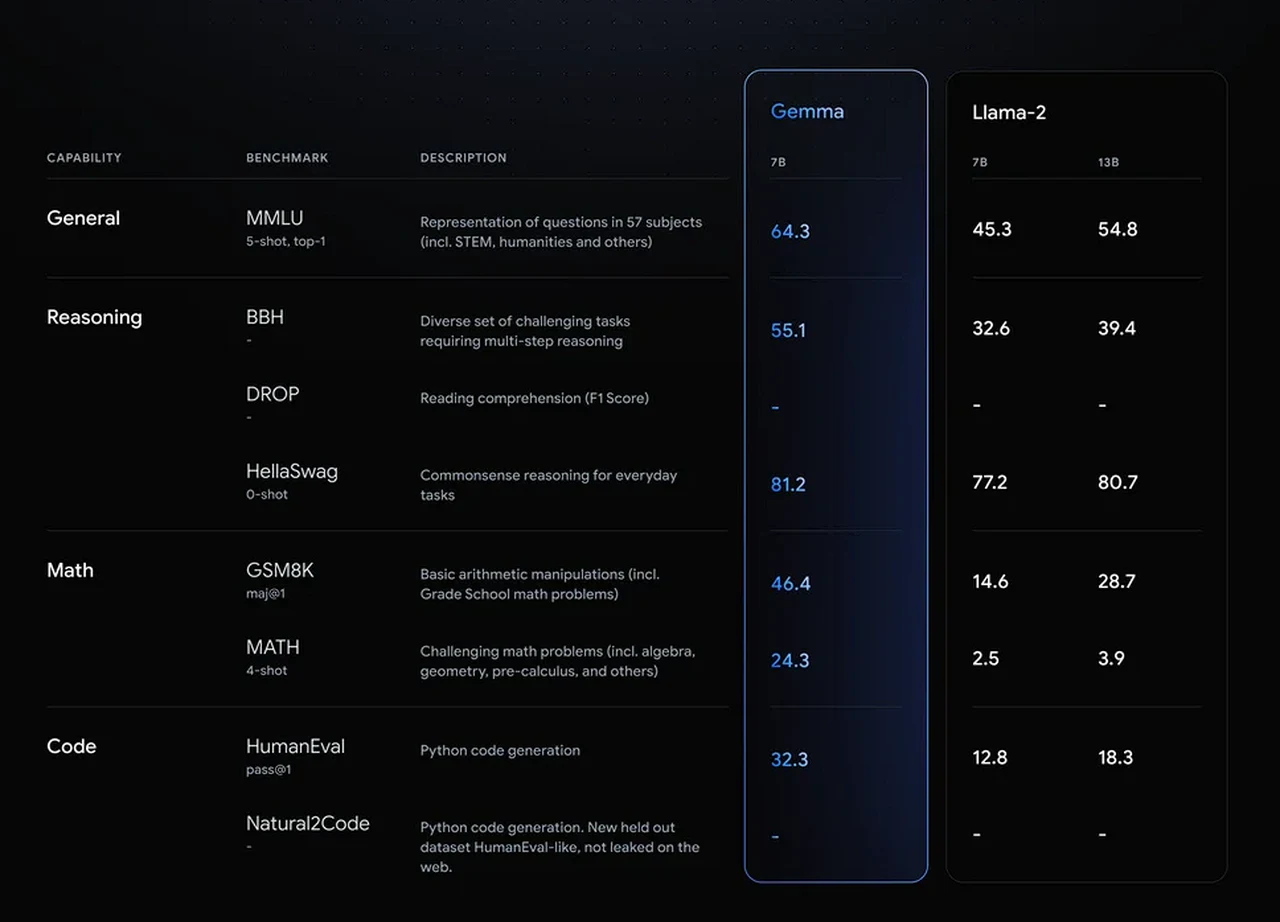

Google has unveiled Gemma, a collection of open-source language models intended to change how we interact with machines through language. The release is a clear indication of Google’s dedication to the open-source community and of its aim to improve how we use machine learning technologies. For a performance comparison, see the Gemma vs Llama 2 benchmarks in the table below.

At the heart of Gemma is the same technology that underpins Google’s Gemini models. The Gemma models work on a text-to-text basis and are decoder-only, which means they generate text one token at a time, with each new token conditioned on the text that came before it. Although they were initially released in English, Google is working on adding support for more languages, which will make them useful for even more people.
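To illustrate what decoder-only generation looks like in practice, here is a minimal sketch of a greedy decoding loop. It is not Gemma's implementation: `greedy_decode` and `toy_scores` are hypothetical names, and the toy scoring function simply stands in for a real model that would return a score for every token in its vocabulary.

```python
from typing import Callable, List


def greedy_decode(
    next_token_scores: Callable[[List[int]], List[float]],
    prompt_ids: List[int],
    eos_id: int,
    max_new_tokens: int = 8,
) -> List[int]:
    """Extend prompt_ids one token at a time, always taking the top-scoring token."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        # A decoder-only model scores the next token from the tokens to its left.
        scores = next_token_scores(ids)
        next_id = max(range(len(scores)), key=scores.__getitem__)
        ids.append(next_id)
        if next_id == eos_id:  # stop once the end-of-sequence token is produced
            break
    return ids


def toy_scores(ids: List[int]) -> List[float]:
    """Hypothetical stand-in for a model: a 5-token vocabulary that prefers last id + 1."""
    vocab_size = 5
    target = (ids[-1] + 1) % vocab_size
    return [1.0 if i == target else 0.0 for i in range(vocab_size)]


result = greedy_decode(toy_scores, prompt_ids=[0], eos_id=4)
print(result)
```

Real systems usually sample from the scores rather than always taking the maximum, but the loop structure (score, pick, append, repeat) is the same.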

Gemma AI features and usage

- Google has released two versions: Gemma 2B and Gemma 7B. Each size is released with pre-trained and instruction-tuned variants.

- A new Responsible Generative AI Toolkit provides guidance and essential tools for creating safer AI applications with Gemma.

- Google is also providing toolchains for inference and supervised fine-tuning (SFT) across all major frameworks: JAX, PyTorch, and TensorFlow through native Keras 3.0.

- Ready-to-use Colab and Kaggle notebooks, alongside integration with popular tools such as Hugging Face, MaxText, NVIDIA NeMo and TensorRT-LLM, make it easy to get started with Gemma.

- Pre-trained and instruction-tuned Gemma models can run on your laptop, workstation, or Google Cloud with easy deployment on Vertex AI and Google Kubernetes Engine (GKE).

- Optimization across multiple AI hardware platforms, including NVIDIA GPUs and Google Cloud TPUs, ensures industry-leading performance.

- Terms of use permit responsible commercial usage and distribution for all organizations, regardless of size.
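As a rough idea of what getting started through the Hugging Face integration might look like, here is a hedged sketch. The model ID `google/gemma-2b-it` and the `<start_of_turn>` / `<end_of_turn>` markers are assumptions taken from the public model documentation rather than from this article, and `build_chat_prompt` and `generate_reply` are hypothetical helper names. Downloading the weights also requires accepting Google's terms first.

```python
def build_chat_prompt(user_message: str) -> str:
    """Wrap one user turn in the turn markers assumed for instruction-tuned Gemma."""
    return (
        "<start_of_turn>user\n"
        f"{user_message}<end_of_turn>\n"
        "<start_of_turn>model\n"
    )


def generate_reply(
    user_message: str,
    model_id: str = "google/gemma-2b-it",  # assumed model ID, not from the article
    max_new_tokens: int = 64,
) -> str:
    """Load the model with Hugging Face Transformers and generate one reply."""
    # Imported here so the prompt helper above works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    inputs = tokenizer(build_chat_prompt(user_message), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


prompt = build_chat_prompt("Summarize what a decoder-only model is.")
print(prompt)
```

Calling `generate_reply("...")` downloads several gigabytes of weights on first use, so it is left out of the example run above.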

Google Gemma vs Llama 2

The Gemma suite consists of four models: a pre-trained and an instruction-tuned variant at each of two sizes. The larger pair has 7 billion parameters, while the smaller pair is still quite robust with 2 billion. The parameter count is a rough measure of how complex a model is and how well it can capture the nuances of language, and it also determines how much memory the model needs to run.
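To make the parameter counts concrete, the sketch below estimates how much memory the weights alone would occupy at several numeric precisions. It uses the nominal 2 billion and 7 billion figures quoted above; the bytes-per-parameter table and the helper name `weight_memory_gib` are illustrative assumptions, and real usage will be higher once activations and caches are included.

```python
# Bytes needed to store one parameter at each precision (illustrative table).
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts as quoted in the article.
MODELS = {"Gemma 2B": 2e9, "Gemma 7B": 7e9}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Return the approximate size of the weights in GiB for a given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


for name, num_params in MODELS.items():
    for dtype in BYTES_PER_PARAM:
        print(f"{name} @ {dtype}: {weight_memory_gib(num_params, dtype):.2f} GiB")
```

At 16-bit precision the 7B weights come to roughly 13 GiB, which helps explain why the 2B model or a quantized 7B model is the more realistic choice for a typical laptop.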

Open source AI models from Google

Gemma is built for the open community of developers and researchers powering AI innovation. You can start working with Gemma today using free access in Kaggle, a free tier for Colab notebooks, and $300 in credits for first-time Google Cloud users. Researchers can also apply for Google Cloud credits of up to $500,000 to accelerate their projects.

Training the AI models

To train models as sophisticated as Gemma, Google has used a massive dataset of 6 trillion tokens, the small pieces of text that a model reads and writes, drawn from various sources. Google says it has been careful to filter out sensitive information so that the models meet privacy and ethical standards.

The Gemma models were trained on TPU v5e, a recent generation of Google’s Tensor Processing Unit. Development was also supported by the JAX and ML Pathways frameworks, which provide a strong foundation for training at this scale.

The initial performance benchmarks for Gemma look promising, but Google knows there’s always room for improvement. That’s why they’re inviting the community to help refine the models. This collaborative approach means that anyone can contribute to making Gemma even better.

Google has put in place a terms of use policy for Gemma to ensure it’s used responsibly. The policy places certain restrictions on use, aimed at preventing harmful applications of the models. To get access to the model weights, you have to fill out a request form, which allows Google to keep an eye on how these powerful tools are being used.

For those who develop software or conduct research, the Gemma models work well with popular machine learning libraries, such as KerasNLP. If you use PyTorch, you’ll find versions of the models that have been optimized for different types of hardware.

The tokenizer that comes with Gemma has a vocabulary of 256,000 tokens. A vocabulary this large lets the models represent a wide range of words, word fragments and language patterns, and it also means that they’re ready to be expanded to include more languages in the future.
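A large vocabulary is not free: every token needs its own row in the model's embedding table. The sketch below works through that arithmetic. The vocabulary size comes from the text above, but the hidden sizes (2048 for the 2B model and 3072 for the 7B model) are assumptions that are not stated in this article, and `embedding_params` is a hypothetical helper name.

```python
VOCAB_SIZE = 256_000  # vocabulary size quoted in the article

# Assumed hidden (embedding) sizes and nominal parameter counts; not from the article.
ASSUMED_CONFIGS = {
    "Gemma 2B": {"hidden_size": 2048, "nominal_params": 2e9},
    "Gemma 7B": {"hidden_size": 3072, "nominal_params": 7e9},
}


def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """One embedding row of length hidden_size per vocabulary entry."""
    return vocab_size * hidden_size


for name, cfg in ASSUMED_CONFIGS.items():
    count = embedding_params(VOCAB_SIZE, cfg["hidden_size"])
    share = count / cfg["nominal_params"]
    print(f"{name}: {count:,} embedding parameters (~{share:.0%} of nominal size)")
```

Under these assumptions the embedding table alone accounts for just over half a billion parameters in the smaller model, so the vocabulary size is a meaningful part of the overall budget.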

Google’s Gemma models represent a significant advancement in the field of open-source language modeling. With their sophisticated design, thorough training, and the potential for improvements driven by the community, these models are set to become an essential tool for developers and researchers. As you explore what Gemma can do, your own contributions to its development could have a big impact on the future of how we interact with machines using natural language.
