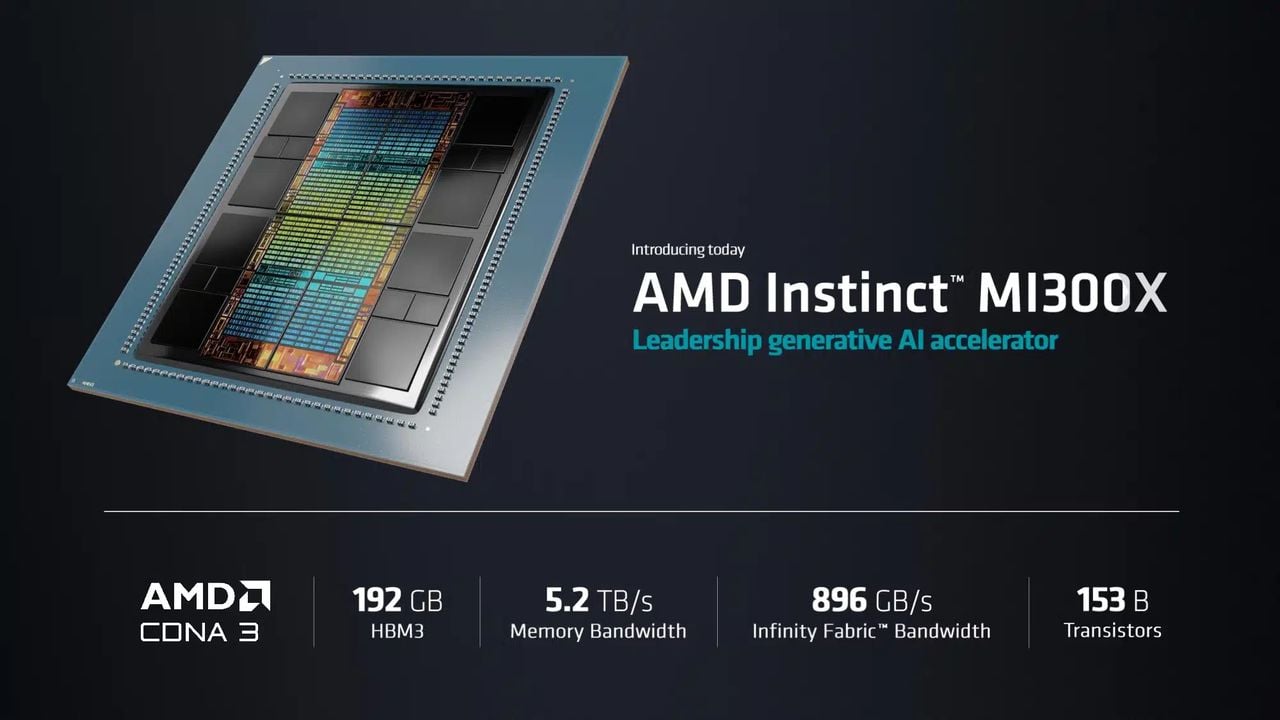

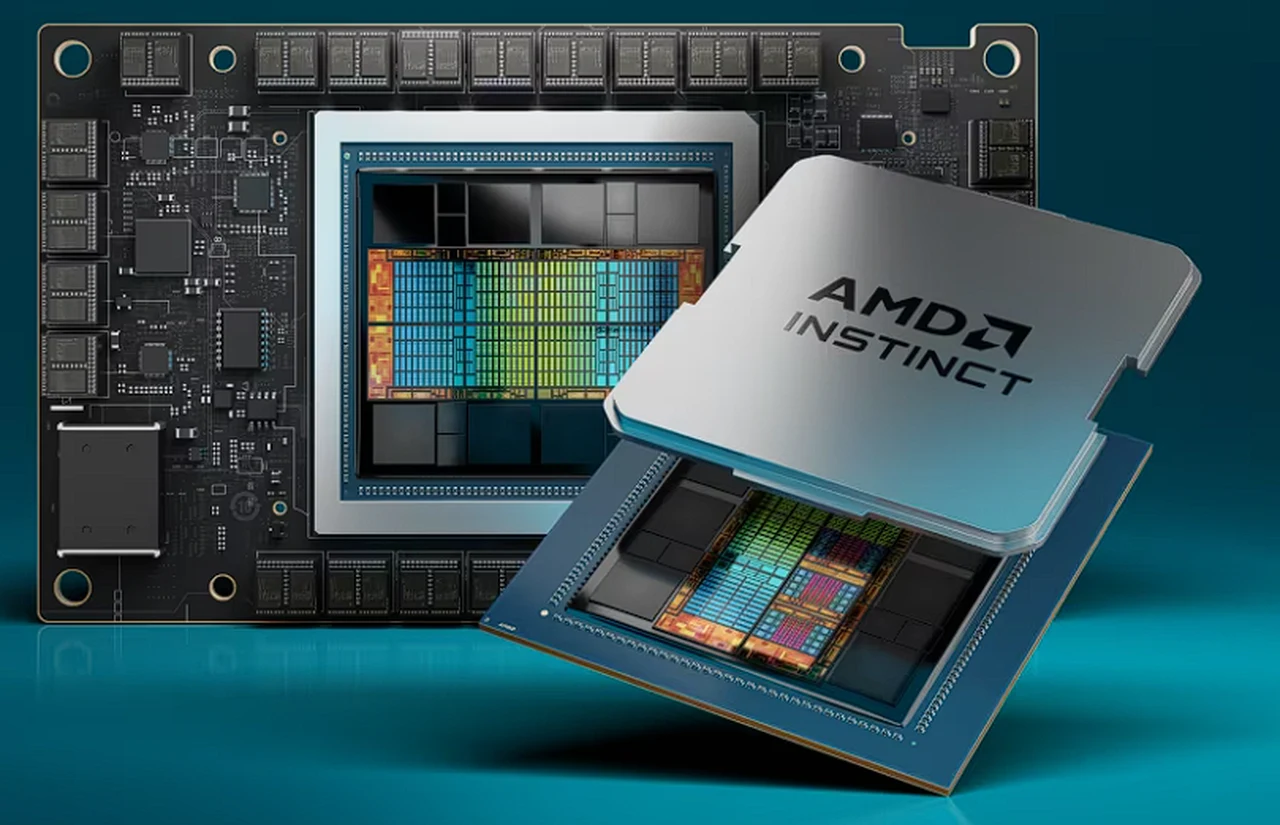

AMD outlined its AI strategy this week, centered on a broad portfolio of high-performance, energy-efficient GPUs, CPUs, and adaptive computing solutions for AI training and inference. To meet demand from the explosion of AI applications, AMD has launched the Instinct MI300X, which it calls the world's highest-performance accelerator for generative AI. The MI300X is built on the new CDNA 3 data center architecture, which is optimized for performance and power efficiency.

The MI300X brings significant improvements over previous generations, including a new compute engine, support for sparsity and the latest data formats, industry-leading memory capacity and bandwidth, and advanced process technology with 3D packaging.

AMD Instinct MI300X designed for generative AI

The AMD Instinct MI300X is built on the CDNA 3 data center architecture, which is tailored to the demands of generative AI. The accelerator pairs a new compute engine with large memory capacity and high memory bandwidth, and it is tuned for the latest data formats and for sparsity, which lets it avoid wasted computation on zero values in a model.

The AI industry is growing rapidly: the data center AI accelerator market is expected to expand from $30 billion in 2023 to $400 billion by 2027. AMD aims to capitalize on this growth. The MI300X, which AMD positions as a leader in generative AI workloads, reflects the company's focus on peak performance, energy efficiency, and a varied computing portfolio.

World’s most advanced accelerator for generative AI

AMD also recognizes the importance of networking to AI system performance. The company backs open Ethernet for communication between AI systems, which it says lets its solutions scale quickly while maintaining high performance.

The MI300A, AMD's data center APU for AI and high-performance computing (HPC), has entered volume production. AMD presents the APU as a significant step forward in performance and efficiency and a further pillar of its AI strategy.

Software plays a critical role in the AI ecosystem, and AMD's commitment to an open software environment is reflected in ROCm 6, the latest version of its open software platform. ROCm 6 is optimized for generative AI and large language models, giving developers the tools they need to push AI technology further.

AI is not limited to data centers; it is also reaching personal computing. AMD's Ryzen 8040 series mobile processors integrate neural processing units (NPUs) that bring AI capabilities to laptops and other personal devices, making the technology accessible to a wider audience.

Collaboration is central to AMD's AI strategy. The company works closely with industry leaders so that its AI solutions are well integrated and widely available, partnerships it considers crucial for developing next-generation AI applications.

AMD is not merely keeping pace with rapid advances in AI; it aims to lead them. Products like the Instinct MI300X and MI300A, combined with a commitment to open software and industry collaboration, put AMD in a strong position to help drive the future of computing.

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, timeswonderful may earn an affiliate commission. Learn about our Disclosure Policy.